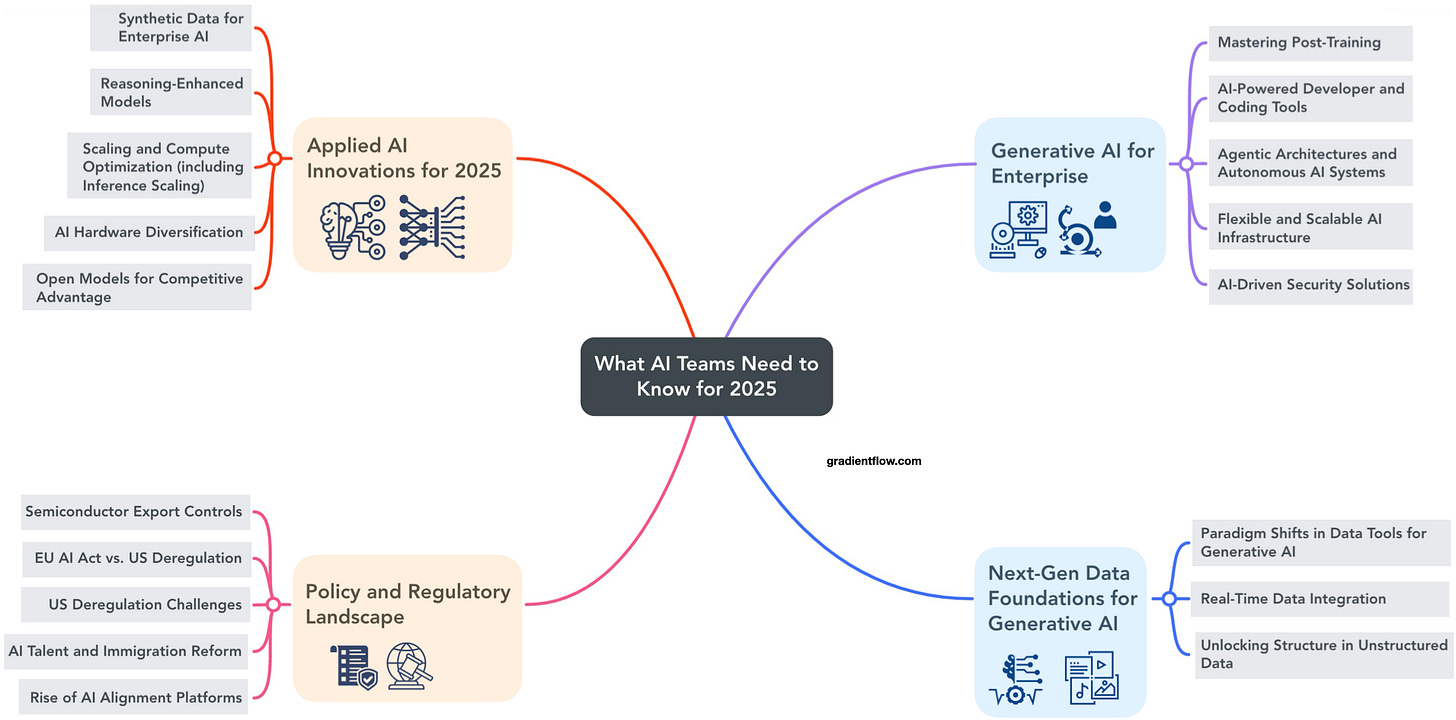

What AI Teams Need to Know for 2025

I've been watching the AI landscape closely, and I'm convinced that 2025 will be a pivotal year. We're moving beyond the hype and into a phase of real, tangible impact. This list isn't just about what's happening; it's about what's next.

Generative AI for Enterprise

Mastering Post-Training. As most AI teams won’t be training models from scratch, mastering post-training techniques becomes crucial. This includes fine-tuning with domain-specific datasets, RLHF, and optimization methods like quantization and distillation. Companies must build robust post-training infrastructure and, importantly, develop their own targeted evaluation frameworks. Traditional academic benchmarks often fail to predict real-world enterprise performance, as recent studies have demonstrated, making it essential for organizations to create domain-specific benchmarks that actually reflect their use cases. Those who excel at both post-training capabilities and evaluation methodologies will have a significant competitive advantage.
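To make the idea of a targeted evaluation framework concrete, here is a minimal sketch of a domain-specific benchmark harness. Everything in it is an assumption for illustration: the `EvalCase` structure, the tags, the exact-match scoring rule, and the `toy_model` stand-in are hypothetical, and a real harness would call your own fine-tuned model and likely use richer metrics.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected: str
    tag: str  # hypothetical use-case label, e.g. "invoices"


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences
    # do not count as failures.
    return " ".join(text.lower().split())


def evaluate(model: Callable[[str], str], cases: Iterable[EvalCase]) -> dict[str, float]:
    """Return exact-match accuracy per tag (an assumed, deliberately simple metric)."""
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    for case in cases:
        totals[case.tag] = totals.get(case.tag, 0) + 1
        if normalize(model(case.prompt)) == normalize(case.expected):
            hits[case.tag] = hits.get(case.tag, 0) + 1
    return {tag: hits.get(tag, 0) / count for tag, count in totals.items()}


def toy_model(prompt: str) -> str:
    # Stand-in for a real model call; it only knows one answer.
    answers = {"Net 30 means payment is due in how many days?": "30 days"}
    return answers.get(prompt, "unknown")


CASES = [
    EvalCase("Net 30 means payment is due in how many days?", "30 Days", "invoices"),
    EvalCase("What does FOB stand for?", "free on board", "shipping"),
]

SCORES = evaluate(toy_model, CASES)
print(SCORES)
```

Reporting accuracy per tag rather than as a single number is one way to see which business use cases a post-trained model actually handles, which is the gap academic benchmarks tend to leave open.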

AI-Powered Developer and Coding Tools. AI coding assistants are transforming software development by boosting productivity, reducing review times, and improving issue detection. Recent developer surveys show overwhelming enthusiasm, with over 80% of professionals reporting a productivity boost. Beyond coding suggestions, multiple AI models can now be blended—one to propose new code, another to debug or navigate large codebases. In the near future, these tools will likely guide entire workflows end-to-end, from prototype to deployment. While current AI models are powerful productivity enhancers, they should be viewed as augmentation rather than replacement for experienced engineering expertise.

Agentic Architectures and Autonomous AI Systems. AI agents will evolve from narrow tasks to end-to-end workflows, streamlining operations and reducing costs. With hundreds of vendors developing AI agent solutions, the infrastructure for building, evaluating, and managing these systems will mature significantly. Multi-modal foundation models will expand agents' capabilities beyond text, enabling them to handle visual tasks and interact with graphical user interfaces, a crucial capability for knowledge work automation. As agents take on more critical tasks, expect increased focus on risk management frameworks and evaluation protocols.

Flexible and Scalable AI Infrastructure. Scalable infrastructure is key to deploying generative AI at scale, modernizing legacy systems, and integrating diverse foundation models. Companies will increasingly recognize the need for robust platforms capable of processing massive amounts of unstructured data and handling computationally intensive tasks like embedding calculations. By ensuring infrastructure can adapt to varying workloads and support post-training experiments, businesses can deploy AI solutions confidently and optimize performance under real-world conditions.

AI-Driven Security Solutions. AI is both a threat and a solution in cybersecurity. As attackers increasingly adopt real-time deepfake generation and other sophisticated methods, organizations are turning to AI-driven solutions not just to combat cyberattacks, but also to protect their own AI systems against malicious use. The emergence of real-time deepfake detection tools will be particularly significant in 2025, driven by the evolution of real-time deepfake generation capabilities, especially in voice synthesis.

Applied AI Innovations for 2025

Synthetic Data for Enterprise AI. Synthetic data offers a vital solution for addressing scarce or sensitive data requirements. This trend is accelerating as major AI companies exhaust available internet data for training. Teams can already leverage foundation models to generate synthetic data for specific use cases, while larger organizations may combine synthetic data with their proprietary datasets. Look for improved synthetic data generation tools emerging from major AI labs, making this technology more accessible to practitioners. Consider exploring data augmentation strategies and partnerships to complement synthetic data initiatives.

Reasoning-Enhanced AI Models. Foundation models are evolving from intuition-driven models (remarkable at pattern recognition but often unreliable and prone to errors) to systems with built-in, iterative reasoning. While they can be more resource-intensive and slower, these reasoning-enhanced models will self-correct, detect hallucinations, and make more reliable decisions, reducing the need for constant human oversight and enhancing enterprise applications. Expect a wave of competition, as seen with offerings from players like OpenAI, Google, Alibaba, and DeepSeek, further accelerating the shift toward agentic, reasoning-focused AI solutions despite higher operational costs.

Scaling and Compute Optimization for AI. Cost-effective deployment of complex AI models depends on efficient scaling and compute optimization. As these reasoning-enhanced systems systematically “think harder” through techniques like Monte Carlo Tree Search or best-of-N sampling, they demand significantly more compute during inference, not just training. I expect new hardware accelerators and software approaches in 2025 to mitigate this “inference-time scaling” challenge, unlocking further performance gains while requiring careful calibration to avoid issues like over-optimization and distribution shift.
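Best-of-N sampling, mentioned above, is easy to sketch: draw N candidate answers and keep the one a scoring function rates highest. The snippet below is a toy illustration under stated assumptions. `toy_generate` and `toy_score` are hypothetical stand-ins for a sampled model call and a verifier or reward model, and the prompt is arbitrary.

```python
import random
from typing import Callable


def best_of_n(
    generate: Callable[[str, random.Random], int],
    score: Callable[[int], float],
    prompt: str,
    n: int,
    seed: int = 0,
) -> int:
    """Sample n candidates and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    rng = random.Random(seed)
    # Each extra candidate costs one more generation call at inference time.
    candidates = [generate(prompt, rng) for _ in range(n)]
    return max(candidates, key=score)


def toy_generate(prompt: str, rng: random.Random) -> int:
    # Hypothetical noisy "model": guesses an integer near the right answer.
    return rng.randint(40, 60)


def toy_score(answer: int) -> float:
    # Hypothetical verifier: closer to 51 (17 * 3) scores higher.
    return -abs(answer - 51)


SINGLE = best_of_n(toy_generate, toy_score, "17 * 3 = ?", n=1)
BEST = best_of_n(toy_generate, toy_score, "17 * 3 = ?", n=16)
print(SINGLE, BEST)
```

With the same seed, the 16 candidates include the single-sample answer, so the selected result can only match or improve on it. The price is 16 generation calls instead of one, which is the inference-time compute trade-off described above. Leaning too hard on an imperfect scorer is also where over-optimization can creep in.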

AI Hardware Diversification. While Nvidia will remain the leader, 2025 will see increased competition in AI hardware. AMD’s GPUs, supported by its open-source ROCm software, are gradually gaining traction, though recent performance studies emphasize the need for software maturity. Meanwhile, Apple, Broadcom, and Arm are eyeing more energy-efficient AI chips, particularly for on-device inference. Nvidia's rapid pace of releases (GB300, B300) is beginning to focus on improved performance for reasoning-enhanced model inference. With necessity spurring invention, competition from AI-specific ASICs made by vendors such as Cerebras, coupled with cost-saving breakthroughs reminiscent of DeepSeek, is primed to make advanced AI hardware both more affordable and more sustainable.

Open Models for Competitive Advantage. Open models will remain competitive with proprietary foundation models, enabling enterprises to build model-agnostic AI apps. This includes emerging categories like reasoning-enhanced models and visual-language models, where open alternatives are already showing promise. By keeping AI applications model-agnostic, enterprises can lower costs, maintain greater control, and avoid vendor lock-in—all while seamlessly integrating new capabilities as they emerge. This agility will be critical in 2025, positioning open models as a strategic option for organizations seeking flexibility and competitive advantage in a rapidly evolving AI landscape.
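One way to keep an application model-agnostic is to code against a small interface rather than a specific vendor SDK. The sketch below assumes a hypothetical `TextModel` protocol with a single `complete` method. The two backends are toy stand-ins, not real provider clients.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    # Toy stand-in for, say, a hosted proprietary model.
    def complete(self, prompt: str) -> str:
        return prompt


class UpperModel:
    # Toy stand-in for, say, a self-hosted open model.
    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize_ticket(model: TextModel, ticket: str) -> str:
    # Application logic only knows about the TextModel interface,
    # so backends can be swapped without touching this function.
    return model.complete(f"Summarize: {ticket}")


RESULT_A = summarize_ticket(EchoModel(), "printer down")
RESULT_B = summarize_ticket(UpperModel(), "printer down")
print(RESULT_A)
print(RESULT_B)
```

In a real system each backend would wrap an actual client library. The point of the design is that swapping providers becomes a configuration change rather than a rewrite.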

Next-Gen Data Foundations for Generative AI

Paradigm Shifts in Data Tools for Generative AI. Generative AI is transforming data processing from SQL to AI-centric frameworks designed to handle multimodal data (text, images, audio, and video). New architectures optimized for CPU/GPU efficiency will enable scalable, flexible AI systems that integrate diverse data for superior performance.

Real-Time Data Integration for AI Applications. To excel, AI applications and agents require real-time access to the right data from the right sources. Traditional ETL pipelines are too slow. New tools are emerging to enable seamless, real-time data integration without requiring major infrastructure changes, improving agility and decision-making.

Unlocking Structure in Unstructured Data. Identifying and leveraging structure in unstructured data is vital for enhancing generative AI’s accuracy and interpretability. Techniques such as knowledge graph integration (GraphRAG), information extraction, and advanced data curation allow models to uncover hidden patterns and improve contextual relevance in AI outputs.
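As a rough illustration of how extracted structure can feed retrieval, the sketch below stores subject-relation-object triples in a small graph and pulls every fact touching an entity so it can be added to a prompt as context. This is a simplified toy in the spirit of knowledge graph retrieval, not the GraphRAG method itself. The triples and helper names are hypothetical, and the extraction step (normally done by a model or parser) is assumed to have already happened.

```python
from collections import defaultdict

Triple = tuple[str, str, str]  # (subject, relation, object)


def build_graph(triples: list[Triple]) -> dict[str, list[Triple]]:
    """Index each triple under both its subject and its object."""
    graph: dict[str, list[Triple]] = defaultdict(list)
    for triple in triples:
        subject, _, obj = triple
        graph[subject].append(triple)
        graph[obj].append(triple)
    return graph


def facts_about(graph: dict[str, list[Triple]], entity: str) -> list[str]:
    """Render every triple that mentions the entity as a short sentence."""
    return [f"{s} {r} {o}" for s, r, o in graph.get(entity, [])]


# Hypothetical triples, as if extracted from unstructured documents.
TRIPLES: list[Triple] = [
    ("Acme", "supplies", "Globex"),
    ("Globex", "is headquartered in", "Berlin"),
]

GRAPH = build_graph(TRIPLES)
CONTEXT = facts_about(GRAPH, "Globex")
print(CONTEXT)
```

Passing `CONTEXT` to a model alongside the user's question gives it explicit, inspectable facts, which is where the interpretability benefit comes from.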

Policy and Regulatory Landscape

Semiconductor Export Controls. US chip export controls to China are tightening, impacting China’s AI hardware access (GPUs, high-bandwidth memory, chip tools). Going forward, policymakers will likely weigh options ranging from sweeping, country-wide bans to targeted, precision-based restrictions. The risk of tech stockpiling and retaliation remains high, particularly if the US cannot sustain alignment with key manufacturing partners like Europe, Taiwan, South Korea, and Japan. With a new administration on the horizon, expect uncertainty around how aggressively—or selectively—these controls will be enforced.

EU AI Act vs. US Deregulation. Clashing regulatory philosophies (strict EU rules versus the US push for deregulation) will shape the global AI landscape. The outgoing head of the U.S. Department of Homeland Security warns that Europe’s more adversarial stance toward tech companies could create security vulnerabilities and compliance headaches for AI firms straddling both regions. Meanwhile, the incoming Trump administration signals an even more aggressive deregulatory agenda than the current Biden administration's relatively measured approach. Companies will need to navigate these diverging regulatory environments while maintaining consistent global operations.

US Deregulation Challenges. While US deregulation might be welcomed by those who found previous policies overly restrictive—especially in areas like AI and crypto—concerns remain about favoritism or targeting of specific companies. The incoming administration has signaled a sweeping deregulatory agenda, including the proposed removal of crash reporting requirements for self-driving cars, prompting safety worries. Ensuring an even-handed approach with clear, consistent standards will be critical to maintaining both innovation and public trust.

AI Talent and Immigration Reform. Immigration policies will be a key determinant of how well the US sustains its AI leadership. Foreign-born researchers have historically comprised large portions of major American science efforts (e.g., over 20% in the Manhattan Project), and estimates suggest much higher percentages in modern AI labs. This is particularly crucial given that most foundation models—which the global AI ecosystem depends on—originate from U.S. labs. While early signals from Trump’s tech sector advisers suggest welcoming policies for skilled tech talent, teams should closely monitor impending policy shifts—any shift towards more restrictive immigration policies could rapidly erode U.S. AI competitiveness.

Rise of AI Alignment Platforms. Formal signoff from legal teams will become a prerequisite for deploying AI models. Fragmented US state AI rules, such as Colorado's AI Act, will complicate compliance for US companies, regardless of overseas developments like the EU AI Act. Historically, organizations have adopted the strictest regional laws as de facto global standards, but this approach is no longer scalable due to the complexity of cross-team coordination and varying compliance requirements. AI alignment platforms will be crucial for achieving unified compliance, enabling better coordination between teams and streamlining the compliance process across multiple jurisdictions.

Subscribe to our YouTube channel and join us on January 16th for an in-depth video presentation exploring each of these AI trends in detail.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.
