Unleashing LLMs in Cybersecurity: A Playbook for All Industries

Large Language Models in Cybersecurity

Despite the fact that large language models (LLMs) are still in their infancy, organizations across all industries and domains are already showing considerable interest in them. I thought that it would be useful to describe how LLMs will be implemented within companies by focusing on cybersecurity, a specialized area of activity within IT that is rapidly adopting AI. Cybersecurity provides a useful example of how LLMs can be implemented in other domains. The result is a general playbook for how LLMs can be used in other domains and industries.

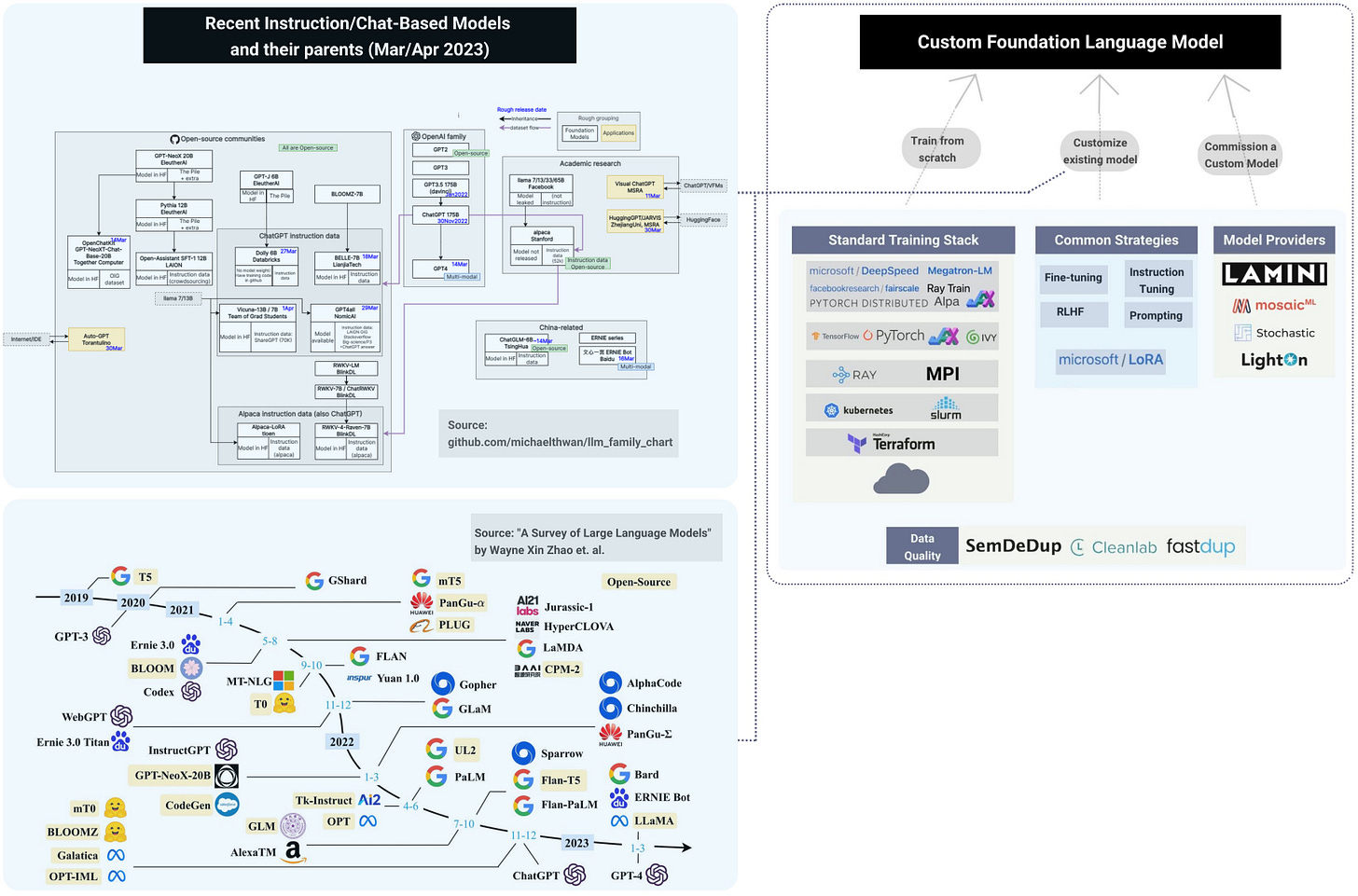

Custom LLMs

In a previous post, I outlined different options available to teams interested in building their own custom LLMs. Since we currently find ourselves in the early stages of crafting bespoke large language models, it is understandable that specialized versions specifically designed for cybersecurity have not yet materialized. Nevertheless, I believe the immense array of potential applications for custom LLMs within the cybersecurity domain will lead to their emergence in the not-so-distant future.

I was eager to find custom LLMs for cybersecurity at the upcoming RSA conference, but the agenda appears to lack technical sessions on this topic. The encouraging news is that numerous recent research projects have concentrated on developing language models explicitly designed for cybersecurity applications:

CySecBERT (2022)

SecureBERT (2022)

CyBERT (2021)

Chatbots

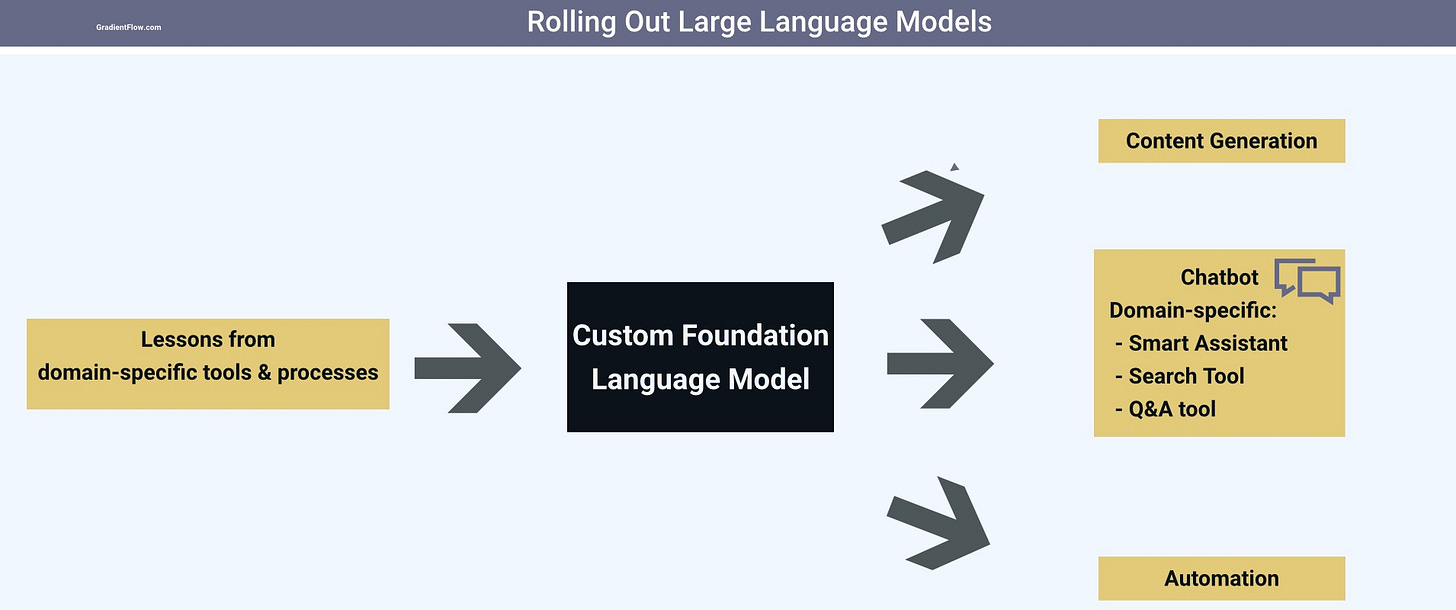

With a custom LLM established, the pinnacle application is a domain-specific chatbot emulating ChatGPT (OpenAI), Bard (Google), or Ernie Bot (Baidu), with leading cybersecurity, technology, and cloud computing companies poised to introduce their own specialized solutions. These new chatbots support many use cases and are distinguished by their ability to handle a wider range of tasks and requests.

In fact, Microsoft recently introduced Security Copilot, an AI-driven security analysis tool that empowers analysts to rapidly address potential threats. This intelligent chatbot can generate PowerPoint slides that concisely summarize security incidents, detail the extent of exposure to active vulnerabilities, and identify the specific accounts implicated in an exploit, all in response to a user's text prompt. The system combines the power of GPT-4 with the security expertise of Microsoft's security-specific model, which is built using daily activity data that Microsoft gathers.

Content Generation

LLMs are part of a wave of generative AI technologies that are notable for their ability to generate novel, human-like output that goes beyond simply describing or interpreting existing information. Researchers are exploring the use of content generation capabilities to enhance and fortify existing cybersecurity systems, with several early examples demonstrating their potential:

Honeyword generation (2022): Honeywords are fictitious passwords added to databases to detect breaches. The challenge lies in creating honeywords that closely resemble real passwords. Researchers recently proposed a system that uses off-the-shelf pre-trained language models to generate honeywords without further training on real passwords.

Phishing emails (2022) deceive people into sharing sensitive information or performing actions that benefit attackers. Researchers were recently able to use LLMs to create hard-to-detect phishing emails with varying success rates, depending on the model and training data. This artificial phishing data can enhance current systems and training.

Human-readable explanations of difficult-to-parse commands: Decoding command lines can be a challenging and lengthy process, even for those experienced in security matters. The security company Sophos recently developed an application using GPT-3 technology that can convert command lines into clear, everyday language descriptions, making them more accessible for non-experts.

Automation

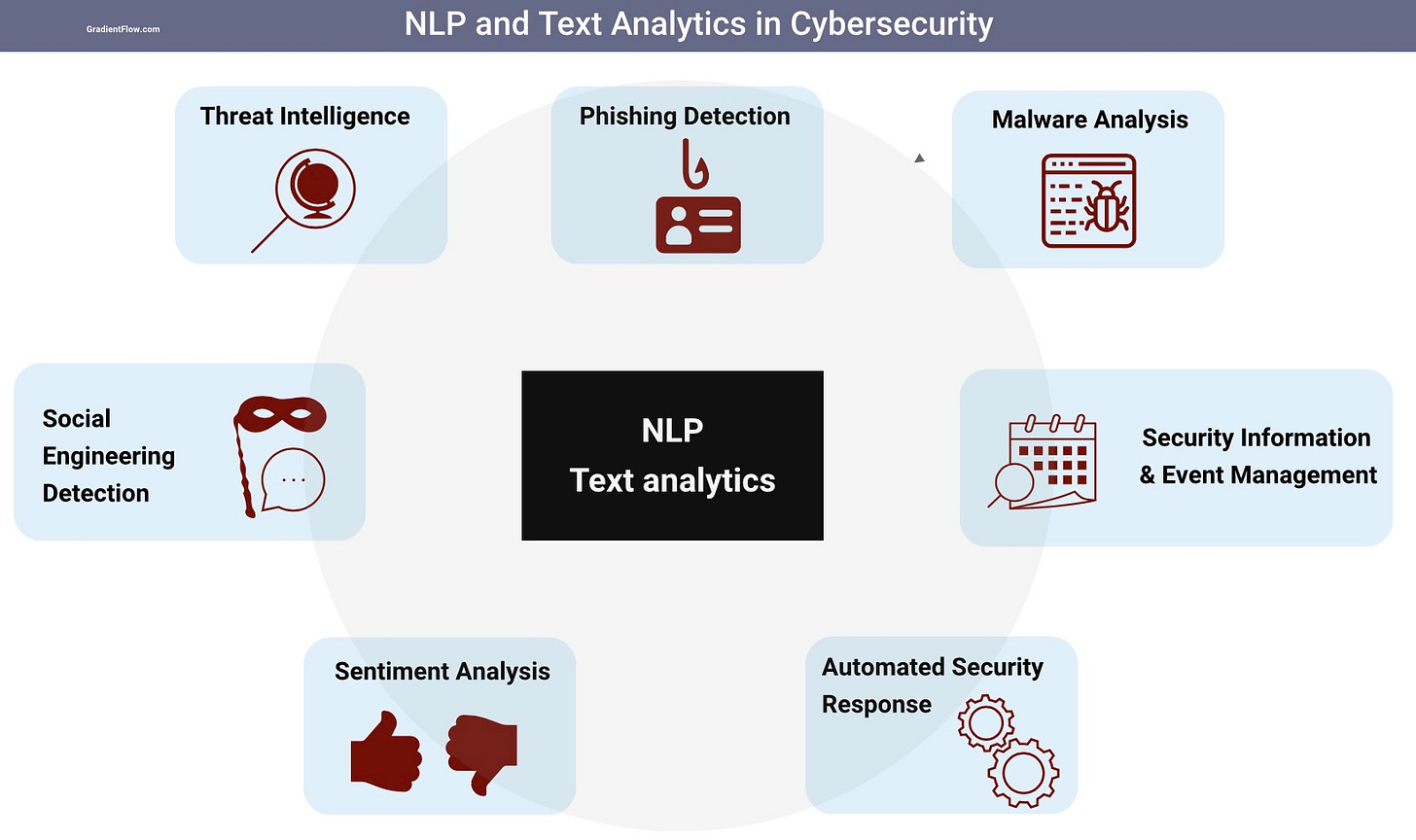

NLP as we know it has been disrupted! LLMs hold the potential to automate a multitude of processes, particularly those that have already reaped the benefits of advancements in NLP and text analytics. In recent months, I have transitioned from utilizing NLP libraries with pre-trained models to predominantly employing LLM APIs. While this shift resulted in a minor trade-off in terms of latency and cost (replacing free-to-use libraries), it offered significant advantages in terms of accuracy and convenience.

Companies already benefiting from the automation offered by NLP and text analytics will increasingly migrate their text applications and models to custom LLMs. Cybersecurity teams are poised to follow this trend and many companies already use NLP in cybersecurity for a variety of reasons. The primary objective is to enhance their security posture by analyzing and processing large volumes of textual data, which can contain critical information about potential threats, vulnerabilities, and cyber attacks.

Domain-specific lessons and processes

When appropriate, teams should proactively adapt systems and processes from their domain to optimize and refine their custom LLMs. For instance, financial services teams can leverage insights gained from adhering to SR 11-7, while healthcare professionals can utilize privacy tools and sector-specific concepts to enhance their custom LLMs.

In this context, cybersecurity teams work in an incredibly vast and diverse discipline to draw upon. Here are a few common practices from cybersecurity & business continuity planning that can be used to improve LLM-backed applications:

Penetration testing proactively uncovers vulnerabilities, helping organizations minimize risks from malicious actors. Meanwhile, jailbreaking specifically bypasses device restrictions, granting access to unauthorized features and functionalities. Illustrative examples include a recent project focused on investigating injection attacks targeting apps supported by LLMs, and a project to jailbreak LLMs.

Data protection and securing sensitive data is a priority in cybersecurity. Techniques include data anonymization, encryption, and secure data storage. A more targeted example is Last Stop - a new open source project designed to help teams to mitigate prompts that get sent to LLMs.

Adversarial simulation is a technique used in order to identify weaknesses in security. For example, DeepMind recently introduced a method to automatically generate inputs that trigger harmful text from language models. They achieved this by using language models to generate these inputs.

Summary

I examined LLM integration in cybersecurity, highlighting its potential in chatbots, content generation, and automation. The rapid development and simplification of LLM tools suggests that they will soon have a profound impact on cybersecurity and other domains, despite the fact that we are still in the early stages of adoption. While the focus of this analysis is on cybersecurity, the lessons learned and strategies developed can be easily adapted to other domains and industries, opening up a world of possibilities for LLMs in various sectors.

Spotlight

Aim + LangChain = LLM 🚀. These open-source projects make working with large language models easier and more efficient, making them indispensable components of my toolkit. Aim streamlines the visualization and debugging of LangChain executions while effectively tracking the inputs, outputs, and actions of LLMs, tools, and agents.

AI Functions in Databricks SQL. This enables SQL analysts to effortlessly incorporate LLMs into their workflows, streamlining development and production. Data teams can use this feature to summarize text, translate languages, and assist with other tasks without requiring intricate pipelines or specialized knowledge.

Building an LLM open source search engine in 100 lines using LangChain and Ray. Learn how to create an LLM-based open source search engine with LangChain and Ray in a mere 100 lines of code. This post highlights the simplicity of merging these powerful tools to boost processing speed, harness cloud resources, and circumvent the hassles of maintaining separate machines or handling API keys.

StableLM. This open-source LLM, trained on an experimental 1.5 trillion-token dataset, delivers decent performance in conversational and coding tasks, despite its modest 3-7 billion parameters. The GitHub repository features checkpoints (saved model states) which are regularly updated.

Data Exchange Podcast

2023 AI Index. I recently caught up with Raymond Perrault, a renowned Computer Scientist at SRI International and Co-Director of the AI Index Steering Committee.

Custom Foundation Models. Hagay Lupesko is a seasoned machine learning engineer who presently holds the position of VP Engineering at MosaicML. This new startup empowers teams to effortlessly train bespoke foundation models using their data within a secure and private ecosystem.

If you enjoyed this newsletter please support our work by encouraging your friends and colleagues to subscribe:

Ben Lorica edits the Gradient Flow newsletter. He helps organize the Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Linkedin, or Twitter, or Mastodon, or on Post. This newsletter is produced by Gradient Flow.

Your are touching on the most booming field right now, I think more researches will go into this field. I am eager to read more posts of you regarding this integration!