Lessons from the Frontlines of AI Training

Inside the Data Strategies of Top AI Labs

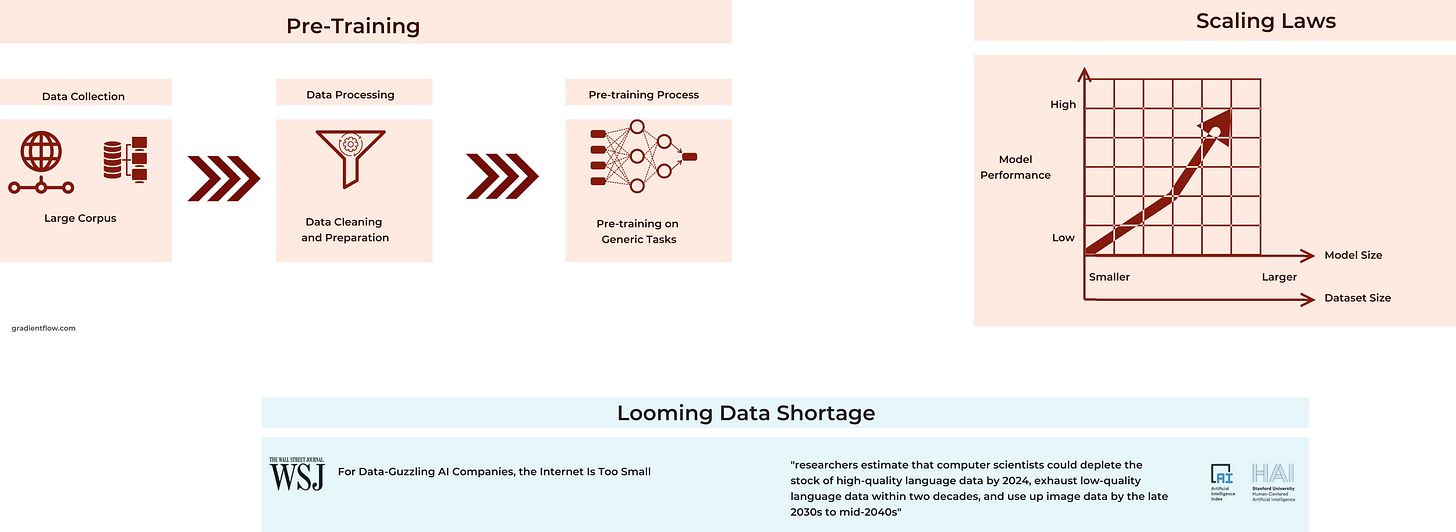

In the AI arms race, data remains the ultimate fuel, and the hunger for it is insatiable. Indeed, as someone who monitors this space closely, I can tell you that scaling laws continue to be the North Star for top AI labs. The equation is simple: bigger models + more data + more compute = better performance. It's a formula that's proven fruitful time and time again.

But here's the kicker: the teams behind the leading LLMs are confronting a significant data challenge. Experts predict a potential high-quality data drought as early as 2026. This impending scarcity underscores the importance of examining the innovative data strategies these labs are developing to maintain momentum in AI progress.

You might be thinking, "I'm not pre-training the next GPT, why should I care about fancy AI data strategies?" It's crucial to recognize that regardless of whether you're fine-tuning models or implementing Retrieval-Augmented Generation (RAG), your success fundamentally depends on the quality and relevance of your data. In this article, I’ll describe how top AI labs are tackling data quality, augmentation, and management – insights that can benefit any team looking to level up their AI game.

Quality Over Quantity: The Data Connoisseur's Credo

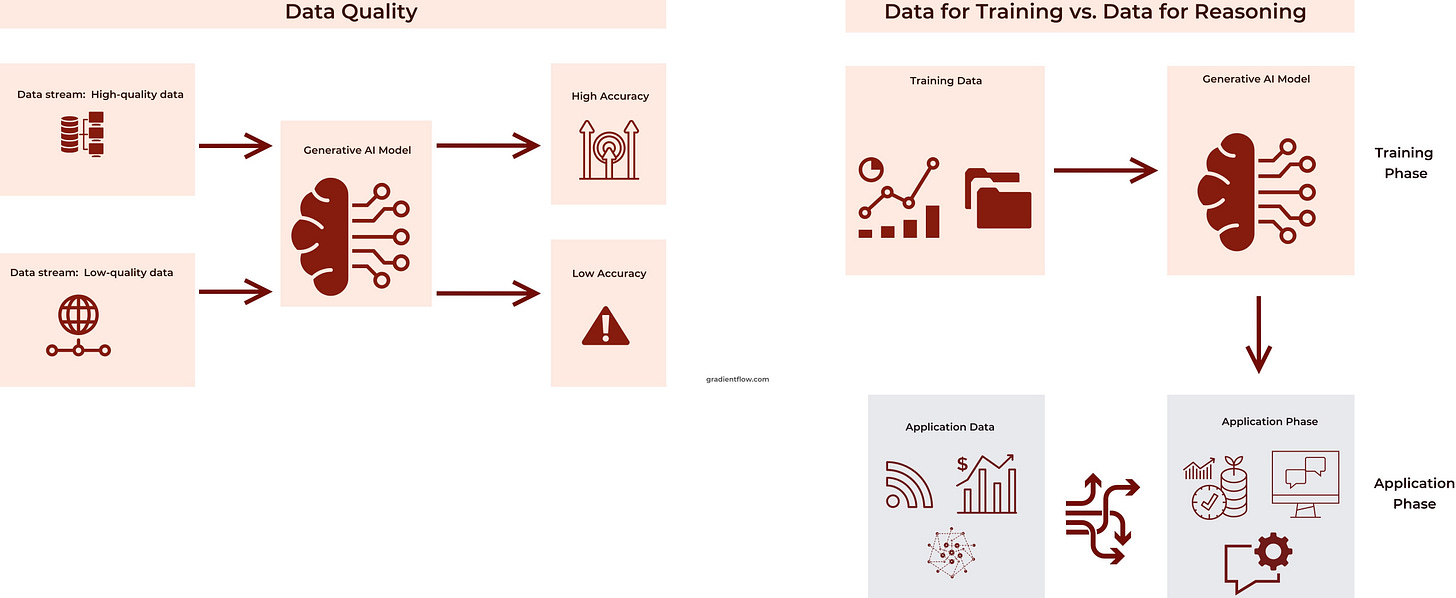

In the early days of AI, researchers operated under the 'more is better' philosophy for training data. However, experience has taught a more nuanced lesson: quality trumps quantity. Notably, Mistral AI was one of the first teams to publicly emphasize the importance of data quality, highlighting their use of high-quality datasets and negotiated licensing agreements as key differentiators in their approach to model training.

Consider the case of a medical diagnosis AI. A small, meticulously curated dataset of accurate patient records will yield far superior results compared to a massive, but inconsistent and error-ridden corpus of web-scraped medical information. This principle holds true across diverse fields, from finance to customer service chatbots, where a focused dataset of real customer interactions proves more valuable than a vast but messy collection of generic online text.

The emphasis on quality necessitates significant investment in data curation processes and the careful selection of relevant data sources. For AI teams, this means developing expertise not just in algorithms and model architectures, but in the art and science of data curation itself. This is particularly true as high-quality, domain-specific data emerges as a key competitive advantage. In regulated industries like finance or healthcare, proprietary datasets can be the difference between a mediocre model and a game-changing application.

Another critical distinction is between data used for training models and data required for reasoning and decision-making in applications. AI systems devour historical data during training, but they thrive on fresh, real-time information when tackling real-world challenges.

For example, a financial AI might be trained on years of historical stock market data, but it uses today's live market information to make investment recommendations. In a similar vein, a customer service chatbot learns from past conversations but responds to your specific query using the information you provide. Likewise, a self-driving car is trained on millions of hours of driving footage, but it uses real-time sensor data to navigate the actual road conditions it encounters.

For AI teams, it's essential to design data architectures that support both training and application data needs, so that systems can learn broadly and still respond accurately to specific situations. Models should be trained on comprehensive datasets, but systems must also be in place to fetch, process, and use real-time data efficiently. Additionally, robust measures to protect user data and ensure compliance with regulations are crucial for building trust in AI solutions.

Data Augmentation

Synthetic data mimics real-world patterns and supplements existing datasets, addressing gaps where real data might be limited. As teams who pre-train LLMs have come to realize, synthetic data should be viewed as a supplement, not a replacement. Leading AI teams have found that extensive use of AI-generated data in training datasets can lead to 'model collapse', which diminishes output quality and hurts the generalizability of AI models. This makes it important to retain access to real-world, human-generated data and to balance it against synthetic data during training.
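One simple way to treat synthetic data as a supplement is to cap its share of the training mix. The sketch below is a minimal illustration, not a method any lab has published: the function name `mix_with_synthetic_cap` and the `max_synthetic_fraction` parameter are my own assumptions, and the 25% default is a placeholder, not a recommended value.

```python
import random

def mix_with_synthetic_cap(real, synthetic, max_synthetic_fraction=0.25, seed=0):
    """Combine real and synthetic examples, capping the synthetic share.

    max_synthetic_fraction is an illustrative knob (hypothetical), not a
    value taken from any published training recipe.
    """
    if not 0 <= max_synthetic_fraction < 1:
        raise ValueError("max_synthetic_fraction must be in [0, 1)")
    # Largest s such that s / (len(real) + s) <= max_synthetic_fraction.
    max_synthetic = int(
        len(real) * max_synthetic_fraction / (1 - max_synthetic_fraction)
    )
    rng = random.Random(seed)
    chosen = rng.sample(synthetic, min(max_synthetic, len(synthetic)))
    # Tag each example with its source so the ratio can be audited later.
    mixed = [(x, "real") for x in real] + [(x, "synthetic") for x in chosen]
    rng.shuffle(mixed)
    return mixed
```

Keeping the source tag on every example makes it easy to re-check the ratio after later filtering steps, which can otherwise skew the mix without anyone noticing.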

Among various techniques for synthetic data generation, Large-scale Alignment for chatBots (LAB) is one approach that enables strategic creation of task-specific data. LAB focuses on generating synthetic data tailored to specific tasks, ensuring that the synthetic data enhances, rather than replaces, the value of real-world data. This targeted approach allows for the expansion of AI capabilities without compromising the grounding and reliability that real-world data provides.

Data augmentation techniques, such as adding variations like noise or rotating images, can also enhance existing datasets. This exposure to a broader range of scenarios improves model robustness and performance. For example, teaching a self-driving car to recognize pedestrians in varying lighting conditions and environments enhances its reliability.
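To make the noise and rotation idea concrete, here is a minimal sketch that treats an image as a plain grid of pixel values between 0 and 1. The helper names (`rotate_90`, `add_noise`, `augment`) and the noise scale are my own illustrative choices; a production pipeline would more likely use an image library.

```python
import random

def rotate_90(image):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def add_noise(image, scale=0.1, seed=0):
    """Add Gaussian noise to each pixel, clamped to the range [0, 1]."""
    rng = random.Random(seed)
    return [
        [min(1.0, max(0.0, px + rng.gauss(0, scale))) for px in row]
        for row in image
    ]

def augment(image, seed=0):
    """Return the original image plus a rotated and a noisy variant."""
    return [image, rotate_90(image), add_noise(image, seed=seed)]

# Usage: one labeled image becomes three training examples with the same label.
variants = augment([[0.0, 0.5], [1.0, 0.25]])
```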

Building upon these general augmentation techniques, researchers have developed more specialized methods. Introducing deliberate errors during training, such as intentionally misspelling words for a grammar checker, and employing weakly-supervised data augmentation to create instruction-response pairs with varying degrees of accuracy both give a model examples of what to avoid. These methods enable AI systems to distinguish between high-quality and low-quality responses, improving overall performance.
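The grammar-checker example can be sketched in a few lines: corrupt clean sentences and keep the original as the target. This is a toy illustration under my own assumptions (the `misspell` and `make_correction_pairs` helpers and the `error_rate` parameter are hypothetical), and adjacent-letter swaps are only one of many possible error types.

```python
import random

def misspell(word, rng):
    """Swap two adjacent inner characters; short words are left alone."""
    if len(word) < 4:
        return word
    # Pick a position so the first and last letters stay fixed.
    i = rng.randrange(1, len(word) - 2)
    return word[:i] + word[i + 1] + word[i] + word[i + 2:]

def make_correction_pairs(sentences, error_rate=0.3, seed=0):
    """Build (noisy_input, clean_target) pairs for training a corrector."""
    rng = random.Random(seed)
    pairs = []
    for sentence in sentences:
        noisy_words = [
            misspell(w, rng) if rng.random() < error_rate else w
            for w in sentence.split()
        ]
        pairs.append((" ".join(noisy_words), sentence))
    return pairs
```

Note that a swap of two identical letters (the "tt" in "matters", say) leaves the word unchanged, so the realized error rate can be slightly lower than `error_rate`.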

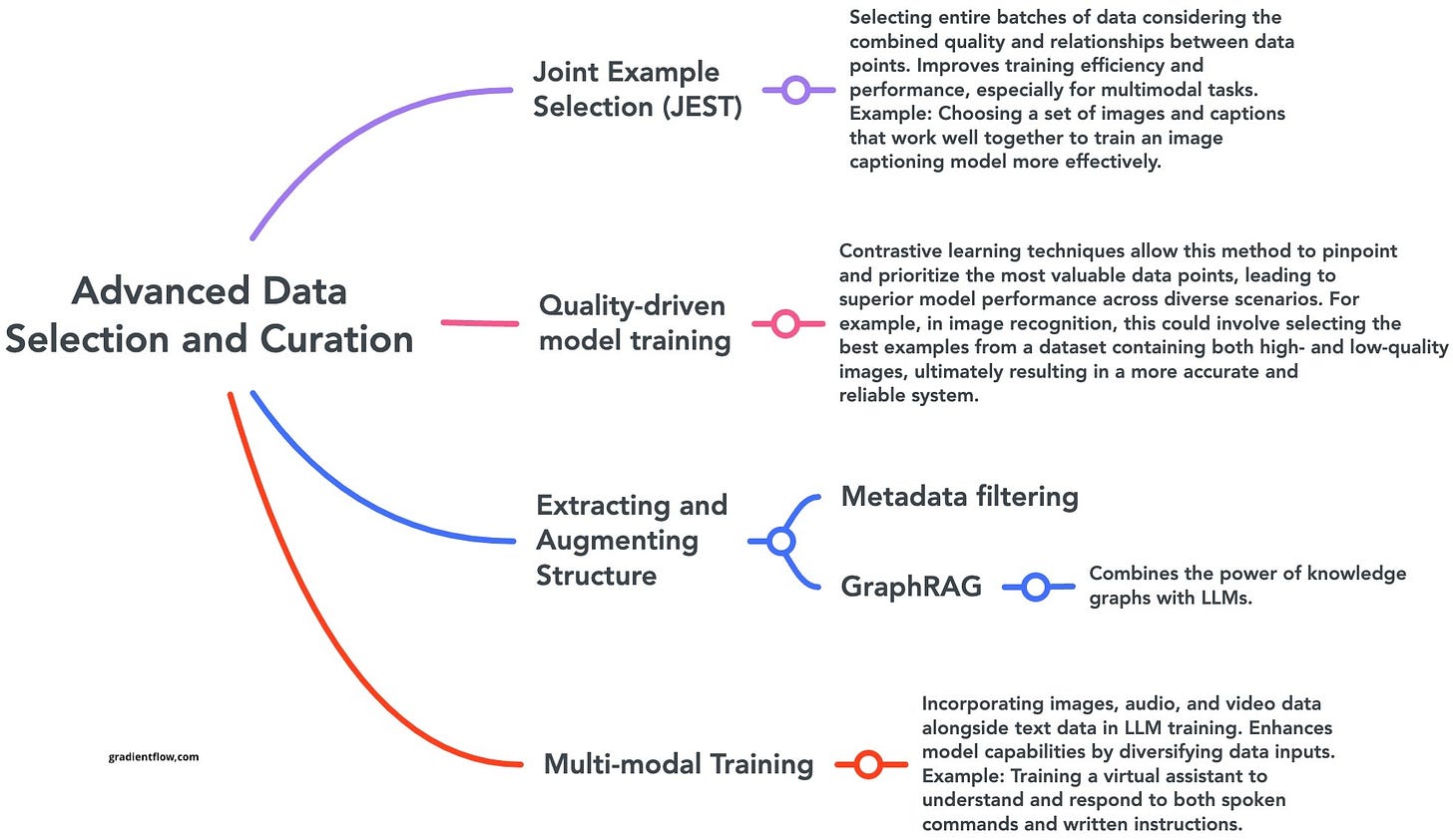

The Art of Selection: Curating the Perfect Dataset

Effective data selection and curation are crucial for training high-performance AI models. New techniques like Joint Example Selection (JEST) optimize the training process by selecting entire batches of data based on both quality and inter-data relationships. This approach significantly enhances training efficiency and model performance, particularly for complex multimodal tasks.
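As I understand it, JEST scores candidate data by "learnability": how hard an example is for the model being trained, minus how hard it is for a trusted reference model. The sketch below applies that score to examples one at a time, which is a deliberate simplification and not JEST itself, since JEST selects sub-batches jointly. The function name, the `keep_fraction` parameter, and the two loss callables are hypothetical stand-ins.

```python
def select_by_learnability(examples, learner_loss, reference_loss, keep_fraction=0.25):
    """Keep the examples with the highest learnability score.

    learnability = learner loss - reference loss. It is high when the model
    being trained still struggles with an example that a trusted reference
    model finds easy. Examples are scored independently here (a
    simplification; no joint batch-level selection is attempted).
    """
    scored = [
        (learner_loss(x) - reference_loss(x), i) for i, x in enumerate(examples)
    ]
    k = max(1, int(len(examples) * keep_fraction))
    top = sorted(scored, reverse=True)[:k]
    # Return the survivors in their original order.
    return [examples[i] for _, i in sorted(top, key=lambda t: t[1])]
```

The intuition behind the subtraction: examples that are hard for both models are probably noise, and examples that are easy for both teach nothing new, so both end up with low scores.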

Quality-driven model training is another approach used to develop robust and versatile AI systems. By leveraging contrastive learning techniques, this method identifies and prioritizes the most valuable data points, ensuring superior model output across diverse scenarios. For instance, in image recognition, this approach might involve selecting the best examples from a mixed dataset of high and low-quality images, resulting in a more accurate and reliable system.

GraphRAG combines the power of knowledge graphs with LLMs. This technique enhances traditional Retrieval Augmented Generation (RAG) by structuring data as nodes and relationships within a knowledge graph, enabling LLMs to access and understand information more efficiently and accurately. This is particularly valuable for complex queries requiring a nuanced grasp of concepts across large datasets, leading to more precise and contextually relevant AI-generated outputs.
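To show what "structuring data as nodes and relationships" buys you at query time, here is a toy sketch of graph-based retrieval: walk a small knowledge graph outward from an entity and hand the collected facts to an LLM as context. It is not the implementation of any particular GraphRAG system; the graph layout, the `neighbors_within` and `build_prompt` helpers, and the sample entities are all hypothetical.

```python
from collections import deque

def neighbors_within(graph, start, max_hops=2):
    """Breadth-first walk returning (subject, relation, object) facts
    reachable within max_hops of the start entity."""
    seen = {start}
    queue = deque([(start, 0)])
    facts = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for relation, target in graph.get(node, []):
            facts.append((node, relation, target))
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return facts

def build_prompt(graph, entity, question, max_hops=2):
    """Turn the retrieved facts into a context block for an LLM prompt."""
    facts = neighbors_within(graph, entity, max_hops)
    context = "\n".join(f"{s} {r} {o}." for s, r, o in facts)
    return f"Context:\n{context}\n\nQuestion: {question}"

# Hypothetical toy graph: each node maps to (relation, target) edges.
toy_graph = {
    "AcmeBank": [("offers", "SavingsPlus")],
    "SavingsPlus": [("requires", "MinimumDeposit")],
}
prompt = build_prompt(toy_graph, "AcmeBank", "What does SavingsPlus require?")
```

The multi-hop walk is the point: a question about AcmeBank can surface a fact two relationships away, which flat similarity search over text chunks may miss.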

Multi-modal training is another leap forward in AI development. By incorporating diverse data types such as images, audio, and video alongside text, models gain a more comprehensive understanding of their environment. For instance, training a virtual assistant to process and respond to both spoken commands and written instructions creates a more adaptable and user-friendly AI system. This multi-faceted approach to data integration enables the development of AI models with unprecedented versatility and real-world applicability.

The Data Economy: New Models for a New Era

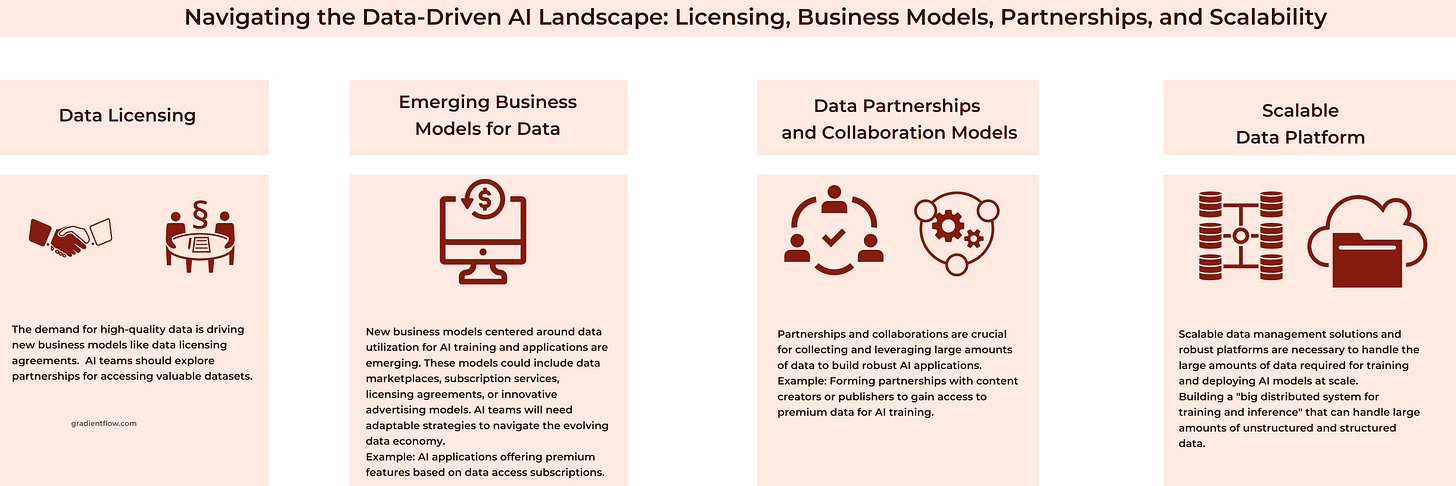

The increasing value of high-quality data is driving new models focused on data licensing and partnerships. AI teams must navigate this evolving economy by forming alliances to access valuable datasets and by exploring new monetization strategies. The shift is exemplified by OpenAI's recent licensing deals with content owners like Reddit, AP, and the Financial Times, which highlight the critical role of data access in shaping the future of AI.

Strategic collaborations and partnerships can also fuel the development of highly specialized, domain-specific models. For instance, a pharmaceutical company might partner with a medical research database to train a model on a vast collection of scientific papers, leading to a more accurate and relevant AI-powered drug discovery tool. A real-world example is the collaboration between DeepMind and the European Bioinformatics Institute, which made the protein structure predictions of DeepMind's AlphaFold system openly available through the AlphaFold Protein Structure Database.

Flexible data monetization strategies include data marketplaces, subscription services, and licensing agreements. These models open up new possibilities for AI applications to offer premium features. For example, access to a high-quality, constantly updated dataset of legal documents could be offered as a premium subscription, giving legal professionals using an AI-powered research tool a significant advantage. This creates new revenue streams for data providers while ensuring ethical data usage.

This emphasis on high-quality, specialized data underscores the need for robust and scalable data management solutions. AI teams must prioritize building or leveraging platforms capable of efficiently handling the massive datasets required for training and deploying sophisticated AI models. This might involve creating a "big distributed system for training and inference," capable of processing vast quantities of unstructured data, or partnering with software providers offering such infrastructure. With data volume and complexity exploding, scalable and efficient data management systems are no longer optional—they are a strategic imperative for competitive AI teams.

Analysis and Recommendations

The insights gained from pre-training foundation models offer practical guidance for anyone building AI applications. Here's how to apply those lessons to generative AI applications and systems:

Prioritize Data Quality Over Quantity. Invest in robust data curation processes and focus on selecting high-quality data sources. High-value, relevant data is critical for robust and accurate model performance.

Embrace Synthetic Data with Caution. Use synthetic data to supplement real-world data, enhancing the diversity and robustness of your datasets. However, to maintain the integrity and quality of outputs, avoid relying solely on synthetic sources.

Implement Data Augmentation Techniques. Enhance datasets with variations, such as deliberate error insertion, to improve model robustness and expose AI systems to a broader range of scenarios. This can significantly boost model performance in diverse conditions.

Adopt Advanced Data Selection Methods. Utilize techniques like JEST and quality-driven training to identify and select the most beneficial data points. This approach optimizes training efficiency and improves overall model performance.

Leverage and Extract Structure from Data. Incorporate metadata filtering and new techniques like GraphRAG to enhance dataset quality and model capabilities. This is especially valuable for complex queries, leading to more precise and contextually relevant AI-generated outputs.

Develop Scalable Data Management Solutions. Build robust, scalable platforms capable of handling large datasets efficiently. Reliable data processing is crucial for maintaining the performance and scalability of AI applications.

Form Strategic Data Partnerships. Collaborate with industry partners to access diverse and valuable datasets. These partnerships can accelerate AI research and enhance the capabilities of your models by providing access to unique and high-quality data.

Customize Domain-Specific Models. Train models on industry-specific data to improve accuracy and relevance in specialized applications. Tailored models can deliver better performance in niche areas by leveraging domain-specific insights.

Utilize Efficient Model Adaptation Techniques. Employ lightweight, pretrained scorers to enhance the performance of large language models (LLMs) without extensive fine-tuning. This strategy reduces costs and resource requirements, making it more feasible to keep models up to date.

The future of AI extends far beyond mastering foundation models; it demands a comprehensive understanding of data strategy. As intelligent routers and autonomous agents become central to AI applications, the ability to strategically acquire, refine, and structure high-quality data becomes paramount. These advanced systems rely heavily on well-organized, relevant information to function effectively.

The magic happens when data quality meets strategic augmentation. Beyond traditional data curation, innovative methods like iterative improvement and targeted augmentation can significantly boost model performance. Techniques such as LLM-based data augmentation and LLM2LLM strategies demonstrate how models can be refined through continuous feedback loops. By zeroing in on the weaknesses of student models and generating precisely targeted data, AI teams can achieve faster learning and more efficient use of data.
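The feedback loop described above can be sketched as follows: train a student, find the seed examples it still gets wrong, and ask a teacher model for new examples aimed at exactly those failures. This is a rough outline in the spirit of LLM2LLM, not the published algorithm. The `fine_tune` and `teacher_generate` callables are hypothetical stand-ins for real training and generation calls, and exact-match grading is a simplification.

```python
def targeted_augmentation_loop(seed_data, fine_tune, teacher_generate, rounds=3):
    """Iteratively grow a training set around the student's weak spots.

    seed_data:        list of (question, answer) pairs
    fine_tune:        hypothetical callable; takes a training set and returns
                      a student function mapping question -> answer
    teacher_generate: hypothetical callable; takes a failed (question, answer)
                      and returns a list of new (question, answer) pairs
    """
    train_set = list(seed_data)
    for _ in range(rounds):
        student = fine_tune(train_set)
        # Grade only on the original seed data, so synthetic examples
        # never become the basis for further synthetic examples.
        failures = [(q, a) for q, a in seed_data if student(q) != a]
        if not failures:
            break
        for q, a in failures:
            train_set.extend(teacher_generate(q, a))
    return train_set
```

Generating only from failures is what makes the data use efficient: examples the student already handles trigger no new generation, so the teacher's effort goes where the student is weakest.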

The real winners in the AI race will be those who excel not just in building models, but in the art and science of data curation, strategic sourcing, and extracting structure from data. These interdisciplinary teams, combining AI expertise, domain knowledge, and data science, will set the pace for the next wave of AI breakthroughs across industries.

Data Exchange Podcast

From Preparation to Recovery: Mastering AI Incident Response. This podcast episode explores AI incident response with Andrew Burt, co-founder of Luminos.Law and Luminos.ai. It covers the unique challenges of AI incidents, the importance of preparation and containment plans, and the phases of effective incident response, while also touching on regulatory considerations in the rapidly evolving AI landscape.

Monthly Roundup: Navigating the Peaks and Valleys of Generative AI Technology. This monthly conversation with Paco Nathan covers recent developments in LLMs, AI-assisted software development, generative AI risks, and the environmental impact of AI. The conversation also explores innovative applications of AI in scientific research, and the importance of interfaces and UX design.

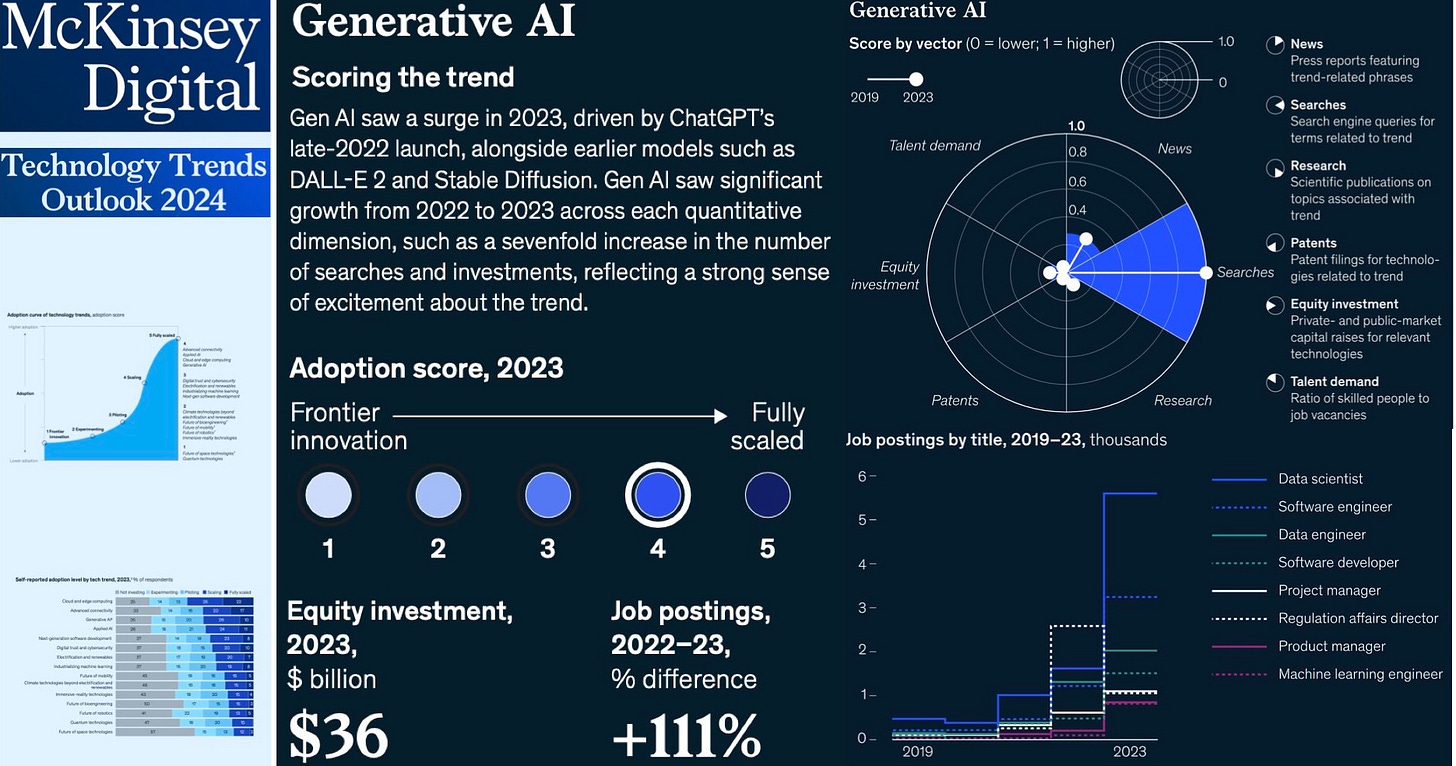

As a member of the McKinsey Technology Council, I contributed to this new report highlighting key technology trends for 2024. The report highlights the transformative potential of generative AI in automating creative and analytical tasks across industries, applied AI's role in enhancing decision-making and operations, and the importance of high-quality datasets in AI. It also covers advancements in hardware acceleration, cloud and edge computing, the industrialization of machine learning (MLOps), responsible and ethical AI practices, and the urgency of data security in the quantum era.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Linkedin, Twitter, Reddit, or Mastodon. This newsletter is produced by Gradient Flow.