Unpacking Model Collaboration: Ensembles, Routers, and Merging

Generative AI models are constantly evolving, and so are the strategies we use to improve their performance. Retrieval-Augmented Generation (RAG) and its advanced iterations, such as GraphRAG and Mixture of Memory Experts, represent approaches that incorporate external knowledge to enhance model capabilities.

Combining the strengths of different models has proven to be highly effective. Approaches such as ensembles, routers, and model merging have demonstrated their capacity to significantly enhance efficiency and accuracy. Through practical application, I've observed that each of these strategies offers unique benefits, allowing them to address different scenarios and team requirements effectively. This post explores how these methods are impacting AI innovation, particularly in building versatile and robust applications.

Ensembles and Cascades: Strength in Numbers

Ensemble methods are often the first tool in an AI practitioner's kit. The idea is straightforward: run multiple models in parallel on the same input and combine their predictions into a final output, for example by voting or averaging. The principle behind ensembles is that a group of diverse models can make better predictions collectively than any individual model. This "wisdom of the crowd" approach has proven incredibly effective in my work, where combining proprietary and open LLMs often leads to more robust and accurate results. Prototypical use cases include high-stakes decision-making and data-scarce scenarios where enhanced accuracy and robustness are paramount.

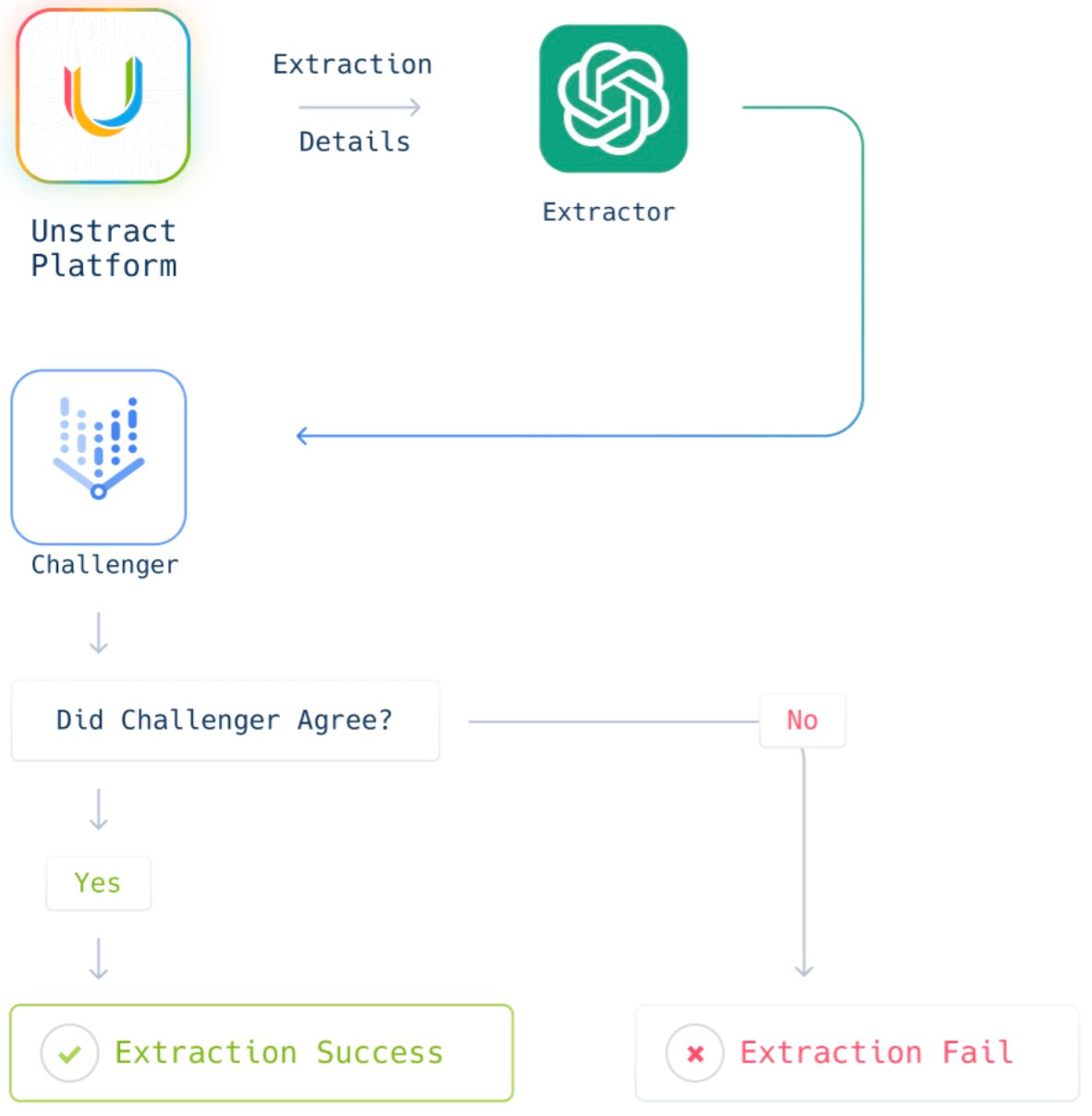
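To make the combination step concrete, here is a minimal sketch of one common way to combine predictions: majority voting. It assumes each model is just a function from a prompt to a short answer string; `majority_vote` and the three stand-in models are hypothetical names used for illustration, and the sketch calls the models one after another rather than truly in parallel.

```python
from collections import Counter
from typing import Callable, Sequence, Tuple

# Assumption: a "model" is any callable that maps a prompt to a text answer.
Model = Callable[[str], str]


def majority_vote(models: Sequence[Model], prompt: str) -> Tuple[str, float]:
    """Ask every model, then return the most common answer and its vote share."""
    if not models:
        raise ValueError("need at least one model")
    # Normalize lightly so trivial formatting differences do not split the vote.
    answers = [model(prompt).strip().lower() for model in models]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


# Hypothetical stand-ins for real LLM calls (proprietary or open).
def model_a(prompt: str) -> str:
    return "Paris"


def model_b(prompt: str) -> str:
    return "paris "


def model_c(prompt: str) -> str:
    return "Lyon"


answer, agreement = majority_vote(
    [model_a, model_b, model_c], "What is the capital of France?"
)
print(answer, round(agreement, 2))
```

The vote share doubles as a rough confidence signal: a low share could be a reason to send the input to a human reviewer or a stronger model.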

In contrast to parallel methods, some systems like Unstract's LLMChallenge opt for a sequential model execution strategy. This method involves using two different LLMs sequentially to extract and verify information from unstructured data. The first model extracts the information, while the second acts as a validator, challenging the initial extraction. This technique ensures a higher level of accuracy and reliability, as both models must agree on the output for it to be accepted. A cascading pattern is particularly effective in scenarios where accuracy is paramount, such as processing legal or insurance documents. Cascading offers enhanced reliability but requires careful consideration of trade-offs between accuracy, computational resources, and processing time.

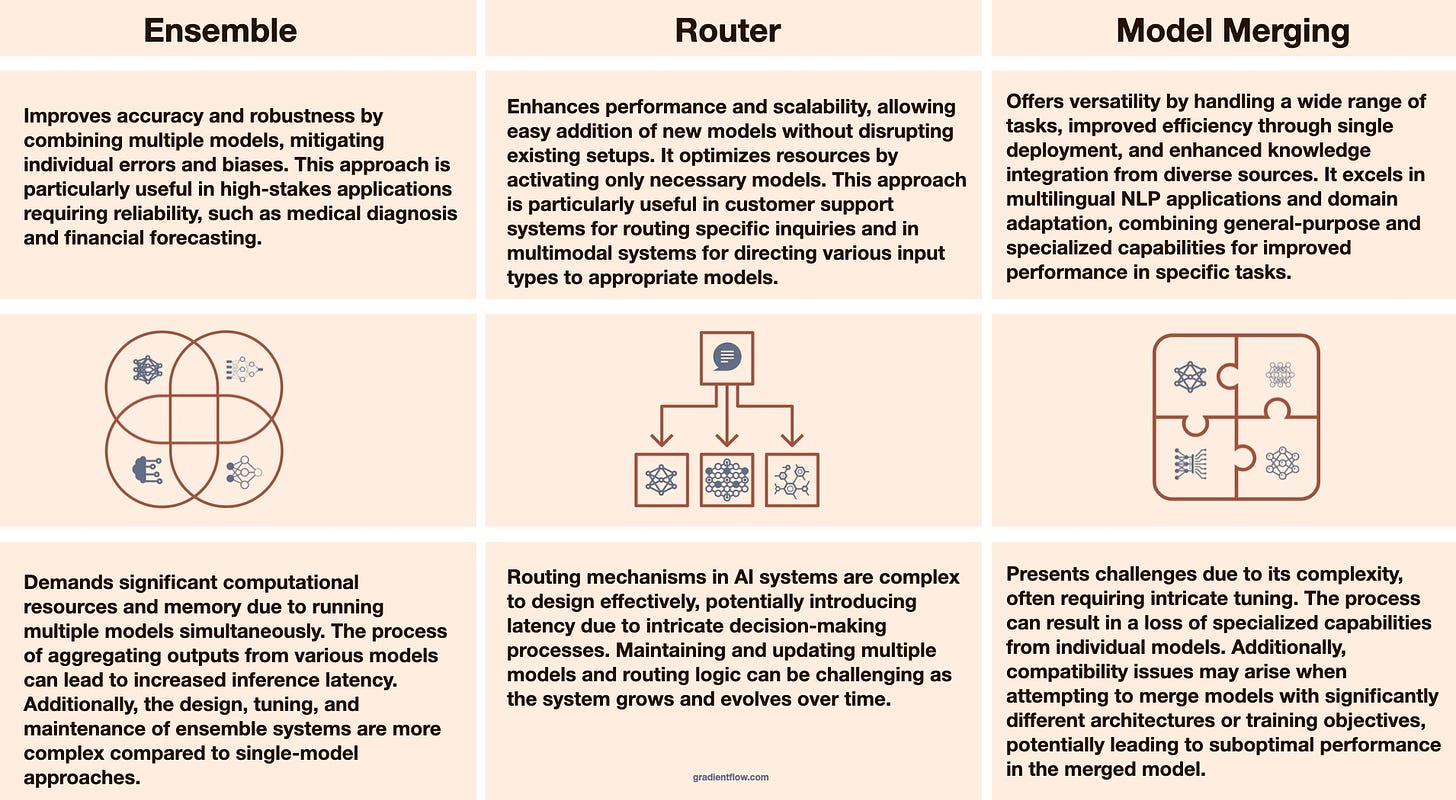
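The extract-then-challenge pattern described above can be sketched in a few lines. This is an illustration of the general idea only, not Unstract's implementation: `extract_with_challenge`, `toy_extractor`, and `toy_validator` are hypothetical names, and in practice both roles would be played by calls to two different LLMs.

```python
from typing import Callable, Optional

# Assumptions: the extractor maps a document to a candidate value, and the
# validator independently checks that candidate against the same document.
Extractor = Callable[[str], str]
Validator = Callable[[str, str], bool]


def extract_with_challenge(
    document: str, extractor: Extractor, validator: Validator
) -> Optional[str]:
    """Return the extracted value only if the second model accepts it."""
    candidate = extractor(document)
    if validator(document, candidate):
        return candidate
    # Disagreement: return nothing rather than a possibly wrong value.
    return None


# Toy stand-ins for the two LLM calls.
def toy_extractor(document: str) -> str:
    # Pretend the policy number is always the last token in the text.
    return document.split()[-1]


def toy_validator(document: str, candidate: str) -> bool:
    # Accept only values that look like a policy number and appear in the text.
    return candidate.startswith("PN-") and candidate in document


accepted = extract_with_challenge("Policy number: PN-1234", toy_extractor, toy_validator)
rejected = extract_with_challenge("Policy number: unknown", toy_extractor, toy_validator)
print(accepted, rejected)
```

Returning `None` on disagreement is one possible design choice; a production system might instead retry, escalate to a third model, or flag the document for manual review.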

While ensembles and cascades are relatively easy to implement and can significantly improve accuracy and robustness, they do come with trade-offs. Managing multiple models can be computationally expensive and complex, especially during inference. The gains also shrink if the models are too similar: models that lack diversity tend to make the same mistakes, so combining them adds cost without adding much accuracy. Nonetheless, for high-stakes applications, ensembles remain a go-to strategy.

Routers: Tailoring Model Responses

Fine-tuning LLMs has become straightforward, with many providers now offering easy-to-use services. These services allow teams to focus on curating high-quality datasets for fine-tuning, rather than grappling with the technical intricacies of the process itself. This development has made it easier for teams to create specialized LLMs tailored to specific tasks or domains.

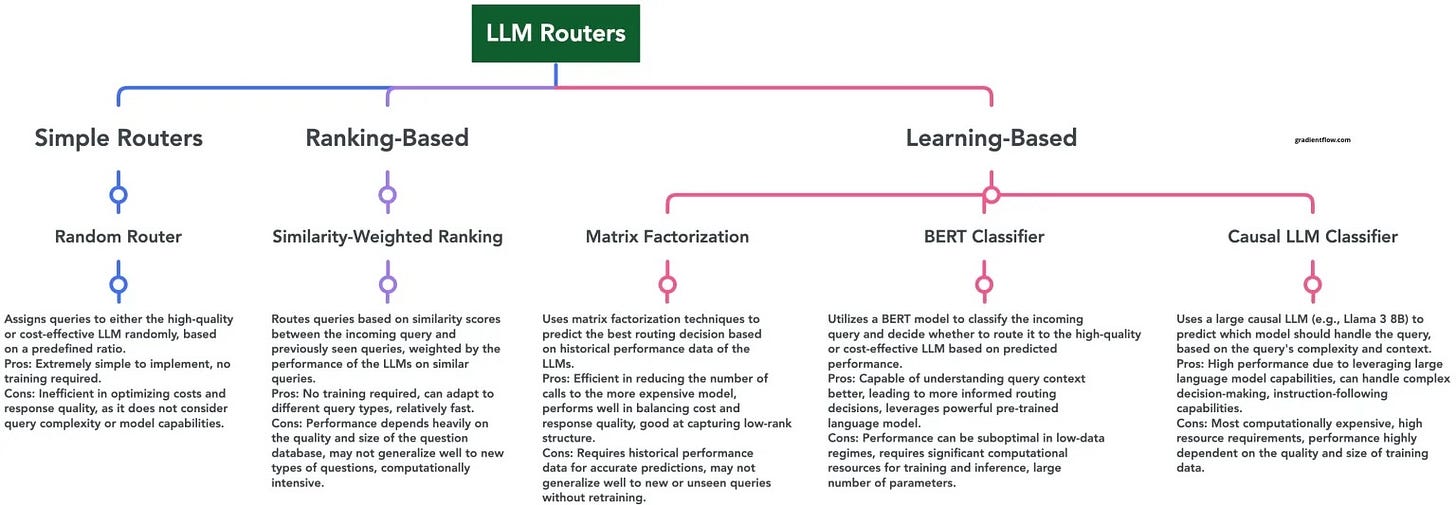

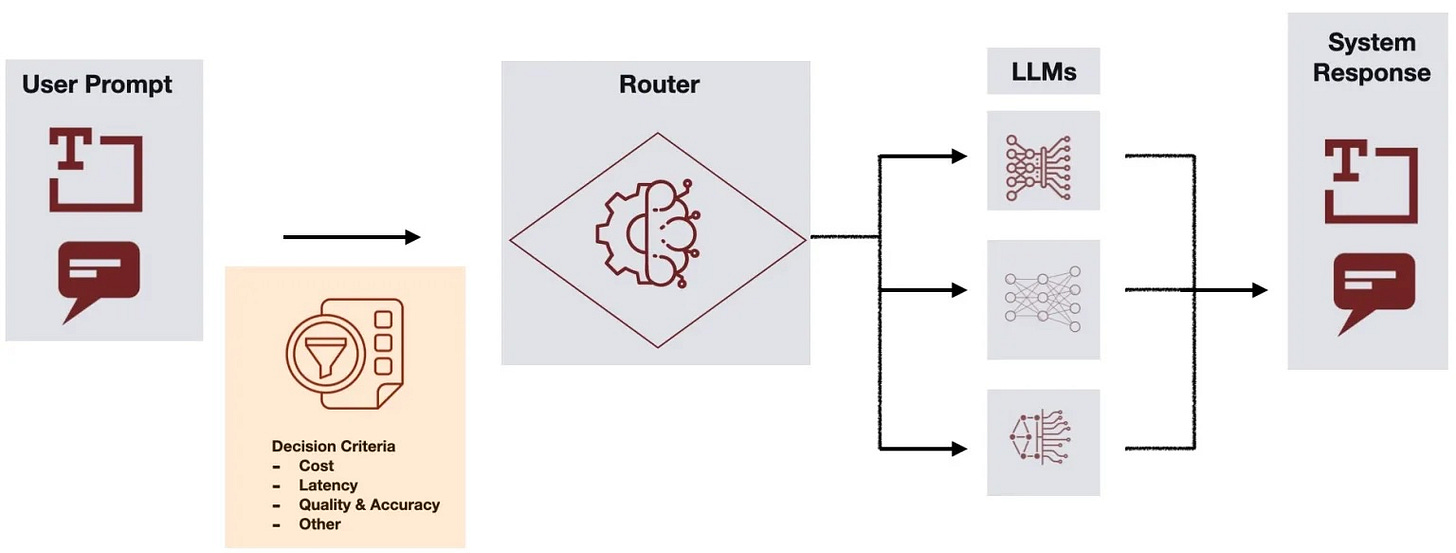

Once you've fine-tuned multiple specialized models, the next challenge is determining which model to use for a given input. This is where routing comes into play. Routing involves dynamically selecting the most appropriate model based on the input, optimizing performance by leveraging the strengths of different foundation models for different tasks or input types. Prototypical use cases include multi-task systems, multimodal systems, and personalized response systems.

The field of LLM routing is rapidly evolving, with solutions becoming increasingly accessible. Startups like Unify and OpenRouter offer easy-to-use routing services, while detailed examples like the Causal-LLM Classifier from Anyscale provide insights into building sophisticated routing systems. Open-source projects like RouteLLM are also emerging, offering frameworks for implementing and evaluating various routing strategies.

What makes routing particularly appealing is its adaptability. You can easily add new models or adjust routing criteria as new data or tasks emerge, making it a scalable solution. However, the complexity of designing an effective routing mechanism cannot be overlooked, as it requires careful consideration of how inputs are matched to models.
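As a rough sketch of the routing idea described in this section, the example below pairs a classifier with a table of specialized models. Everything here is a simplifying assumption: `make_router` and `keyword_classifier` are hypothetical names, the models are placeholder functions, and a real router would more likely use a trained classifier or an LLM instead of keyword rules.

```python
from typing import Callable, Dict

# Assumption: a "model" is any callable that maps a prompt to a response.
Model = Callable[[str], str]


def make_router(
    classify: Callable[[str], str], models: Dict[str, Model], default: str
) -> Model:
    """Build a function that sends each prompt to the model chosen by `classify`."""

    def route(prompt: str) -> str:
        label = classify(prompt)
        # Fall back to the default model when no specialist is registered.
        model = models.get(label, models[default])
        return model(prompt)

    return route


def keyword_classifier(prompt: str) -> str:
    """Toy routing criterion based on keywords in the prompt."""
    text = prompt.lower()
    if "def " in text or "traceback" in text:
        return "code"
    if "contract" in text or "clause" in text:
        return "legal"
    return "general"


# Placeholder models standing in for fine-tuned LLMs.
models: Dict[str, Model] = {
    "code": lambda p: "[code model] " + p,
    "general": lambda p: "[general model] " + p,
}
route = make_router(keyword_classifier, models, default="general")

before = route("Review this contract clause")   # no legal model yet: default
models["legal"] = lambda p: "[legal model] " + p  # add a specialist later
after = route("Review this contract clause")
print(before)
print(after)
```

Because the router looks up models at call time, registering a new specialist changes the routing without touching the rest of the system, which is the adaptability described above. The hard part, designing a classifier that matches inputs to models well, is exactly what the keyword rules gloss over.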

Model Merging: Integrating Strengths

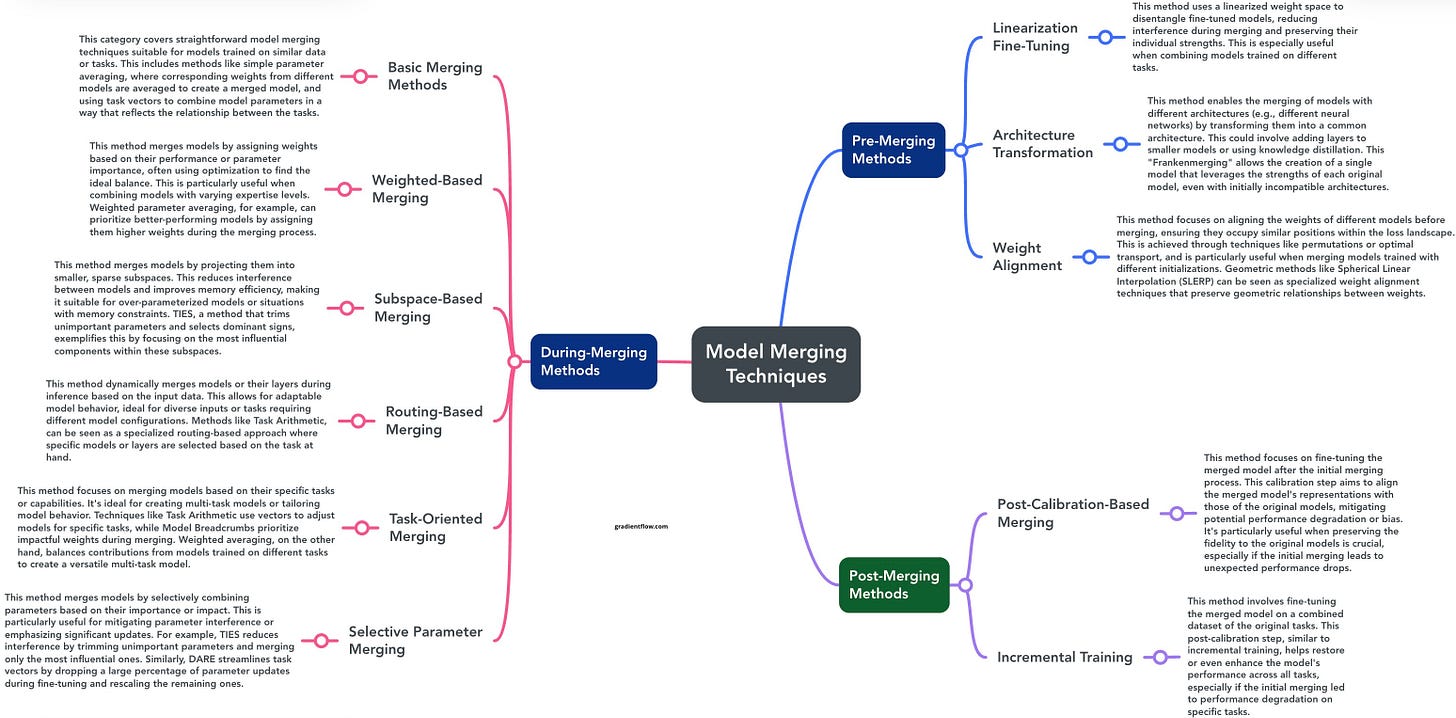

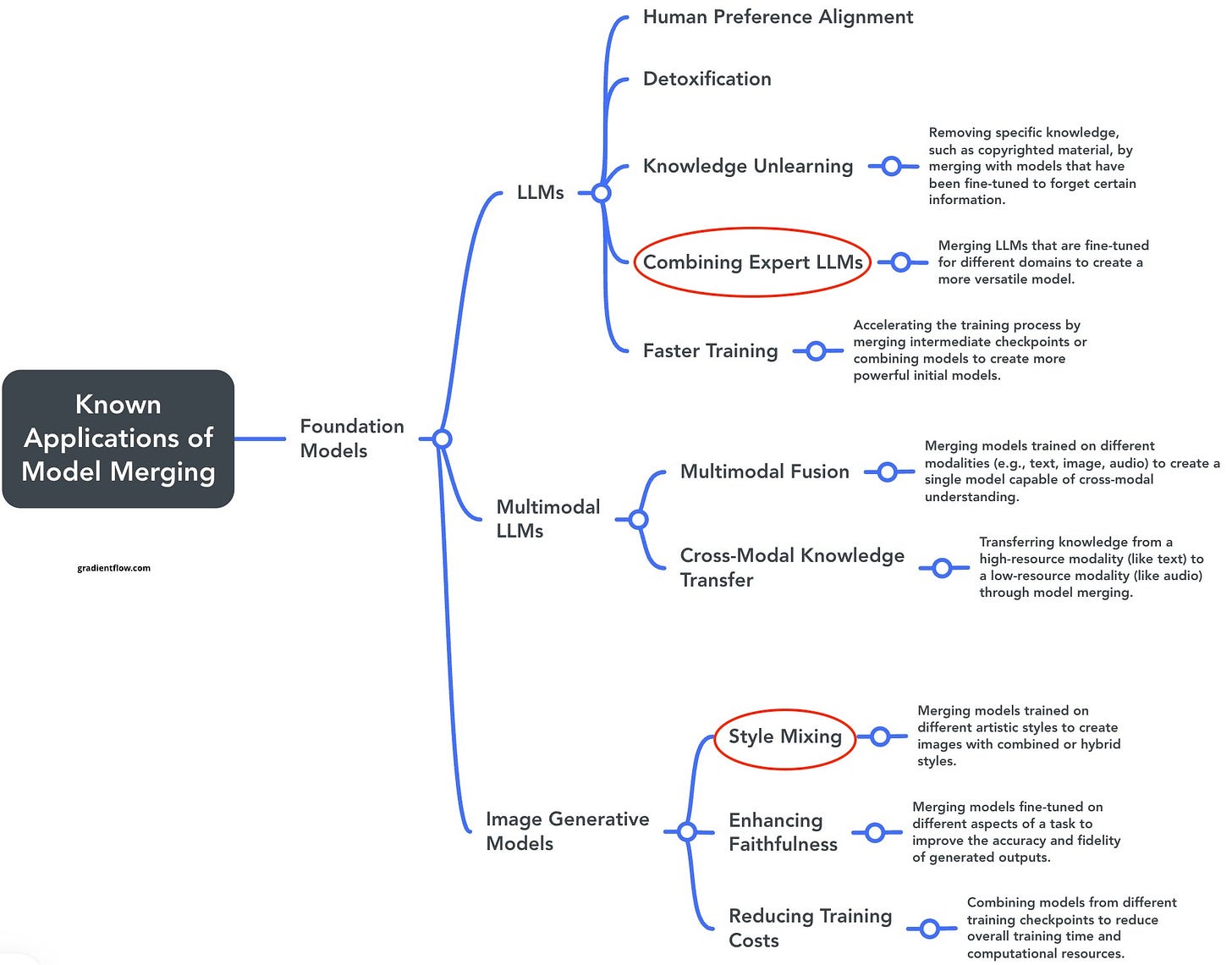

Model Merging is a more advanced technique that involves combining multiple pre-trained models into a single, unified entity. This method allows the merged model to inherit the capabilities and knowledge of all its constituent models, potentially improving performance across a broader range of tasks. Prototypical use cases include creating multilingual NLP models, adapting models to new domains, and building more comprehensive general-purpose language models.

Although Model Merging offers the advantage of reduced computational overhead compared to running multiple models in parallel, it is technically challenging and requires careful tuning to avoid issues like compatibility conflicts or dilution of specialized knowledge. The process can be complex, particularly when dealing with models that have different architectures or were trained on different datasets. That said, open-source tools like mergekit and FusionBench are making it easier to experiment with various merging strategies. mergekit supports several merging algorithms, providing a flexible framework for researchers and practitioners to merge models even on resource-constrained hardware.
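To give a feel for what merging means at the parameter level, here is a minimal sketch of one of the simplest strategies: a weighted average of the parameters of models that share the same architecture. It is an illustration only and does not use the mergekit or FusionBench APIs. `merge_linear` is a hypothetical helper, and parameters are represented as plain Python lists of floats instead of real tensors.

```python
from typing import Dict, List, Sequence

# Assumption: a model's parameters are a mapping from name to a flat list of floats.
StateDict = Dict[str, List[float]]


def merge_linear(state_dicts: Sequence[StateDict], weights: Sequence[float]) -> StateDict:
    """Merge same-architecture models by weighted averaging of each parameter."""
    if not state_dicts or len(state_dicts) != len(weights):
        raise ValueError("need one weight per model")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    norm = [w / total for w in weights]  # normalize so the weights sum to 1

    names = state_dicts[0].keys()
    for sd in state_dicts:
        # Simple averaging only makes sense when parameter names line up.
        if sd.keys() != names:
            raise ValueError("models must share the same parameter names")

    merged: StateDict = {}
    for name in names:
        params = [sd[name] for sd in state_dicts]
        if len({len(p) for p in params}) != 1:
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            sum(w * p[i] for w, p in zip(norm, params))
            for i in range(len(params[0]))
        ]
    return merged


# Two toy "models" with a single two-element parameter each.
model_a = {"layer.weight": [1.0, 2.0]}
model_b = {"layer.weight": [3.0, 4.0]}
merged = merge_linear([model_a, model_b], weights=[1.0, 1.0])
print(merged)
```

The explicit checks on names and shapes reflect the compatibility concern raised above: this kind of averaging does not apply to models with different architectures, and even for compatible models the mixing weights usually need tuning to avoid diluting specialized knowledge.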

While model merging is currently more challenging than other techniques like ensembles or routing, it may become more accessible as commercial services and open-source tools continue to evolve. For now, it’s a powerful option for teams that need to unify multiple models into a single, more versatile model.

Analysis and Recommendations

As AI teams consider these techniques, it’s important to weigh the strengths and weaknesses of each approach carefully. Each method brings unique strengths, making it crucial to choose the best fit for your project's specific needs and constraints.

Ensembles and Cascades. Ideal for high-stakes applications where accuracy and robustness are paramount. By combining the outputs of diverse models, ensembles and cascades mitigate individual model weaknesses, making them particularly valuable in fields like medical diagnostics or financial forecasting.

Routing. Best for flexibility, scalability, and resource optimization. Routers dynamically select the most appropriate model for each task, making them well-suited for environments with high task diversity and the need to optimize resource allocation based on input complexity.

Model Merging. Suited for creating versatile, efficient models that integrate the strengths of multiple models into a single, unified entity. This approach reduces computational overhead during inference but requires careful tuning to ensure consistent performance across all intended tasks.

Experiment and Iterate. There are now tools available that make incorporating ensembles, cascades, and routers straightforward. Don't be afraid to try different approaches or combinations of techniques. The optimal solution for your specific use case may not be immediately apparent, so it's essential to explore various options.

Embrace Distributed Collaboration. Prepare for a future where large foundation models in the cloud seamlessly collaborate with smaller, specialized models at the edge. This distributed architecture leverages the strengths of both centralized and decentralized systems, balancing broad capabilities with localized efficiency. By orchestrating complex tasks across diverse models, teams can achieve a new level of AI performance and adaptability, further enhancing the potential of model collaboration techniques.

The ultimate goal is to enhance the performance and accuracy of LLMs and foundation models. Each method offers unique advantages, allowing AI practitioners to address various scenarios effectively. It's also important to emphasize that real-world Generative AI applications will likely incorporate multiple approaches, including those discussed here and methods that leverage external knowledge, such as RAG and its advanced variants.

Interestingly, this aligns with the trend of tech-forward companies building custom AI platforms, as discussed in my previous article. Many companies recognize the value of implementing these techniques alongside RAG and other methods. By opting for custom solutions over off-the-shelf options, they can tailor AI capabilities to their specific needs and seamlessly integrate them with existing systems.

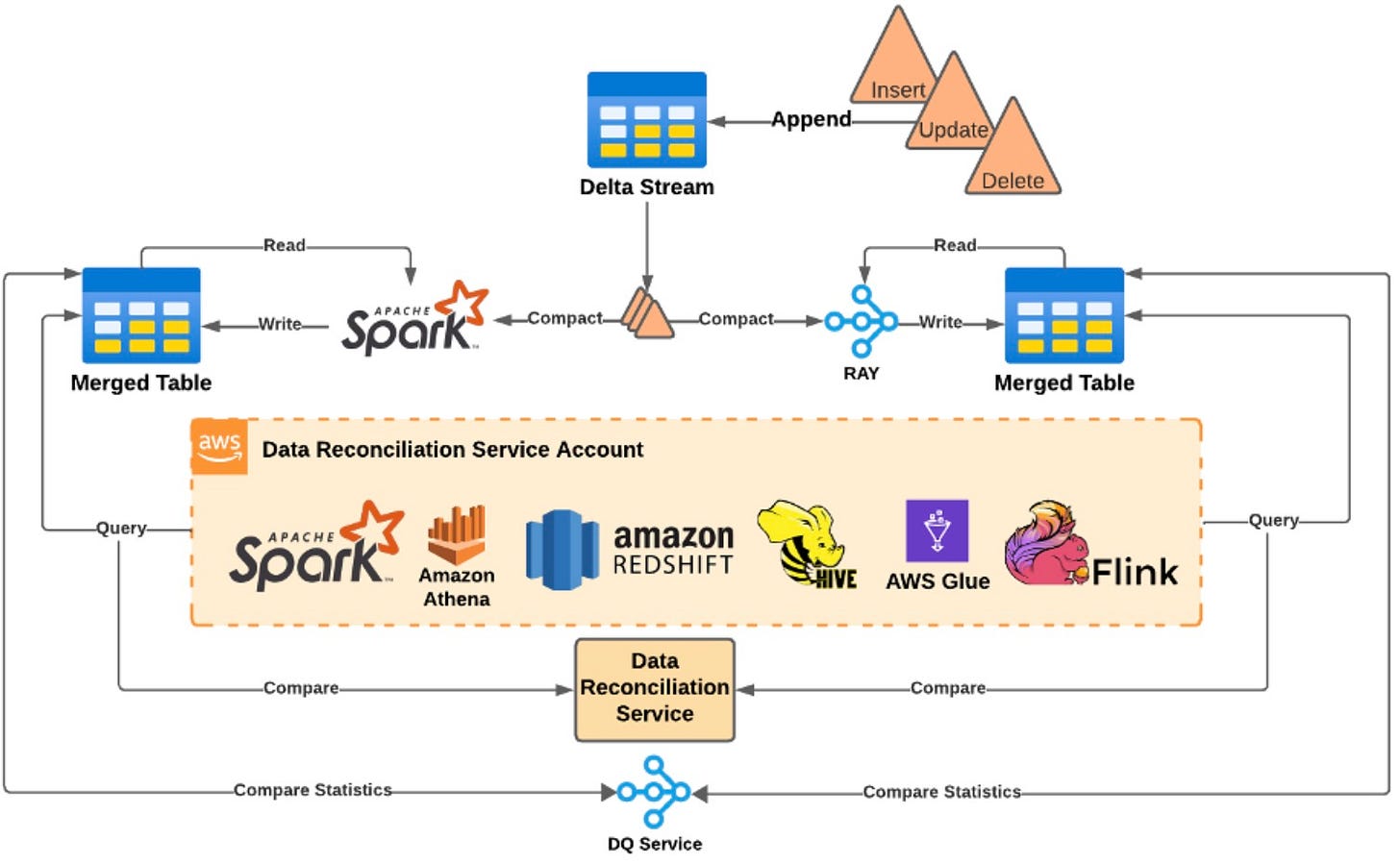

AI applications live and die by their data pipelines. Ray provides a powerful solution for preprocessing and feature engineering bottlenecks, as demonstrated by Amazon. They leveraged Ray to build specialized pipelines, achieving significant cost reductions while processing exabytes of data with ease on Ray clusters scaling to tens of thousands of vCPUs and petabytes of RAM. For developers inspired by Amazon's use of Ray for data pipelines, the Data Prep Kit project provides a complementary toolkit for unstructured data preparation, also utilizing Ray's distributed computing power.

Data Exchange Podcast

The Current State of Enterprise Generative AI Adoption. Evangelos Simoudis, Managing Director at Synapse Partners, discusses the current state of enterprise generative AI adoption, highlighting early implementation stages, investment trends, and key challenges. The episode explores various use cases, implementation strategies, and the AI startup landscape, while also addressing future market dynamics and potential industry leaders.

Monthly Roundup: The Economic Realities of LLMs. This episode provides a comprehensive overview of current AI trends, including the AI arms race, advancements in neural networks, and strategic shifts in the AI industry. It also previews upcoming AI conferences and highlights recent breakthroughs in AI problem-solving capabilities.

Gain a competitive edge at The AI Conference. Over two immersive days, you'll connect with 75+ speakers, dive deep into technical breakthroughs, and unlock insights on emerging trends from McKinsey & others. From fireside chats to in-depth technical sessions, this is your chance to shape the future of AI. As Program Chair, I'm excited to offer this 25% discount – register now!


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Linkedin, Twitter, Reddit, Mastodon, or TikTok. This newsletter is produced by Gradient Flow.

Apologies for the broken "GraphRAG" link, it should be to this previous newsletter: https://gradientflow.substack.com/p/graphrag-design-patterns-challenges