Beyond RL: A New Paradigm for Agent Optimization

A Better Way to Build and Refine Agents

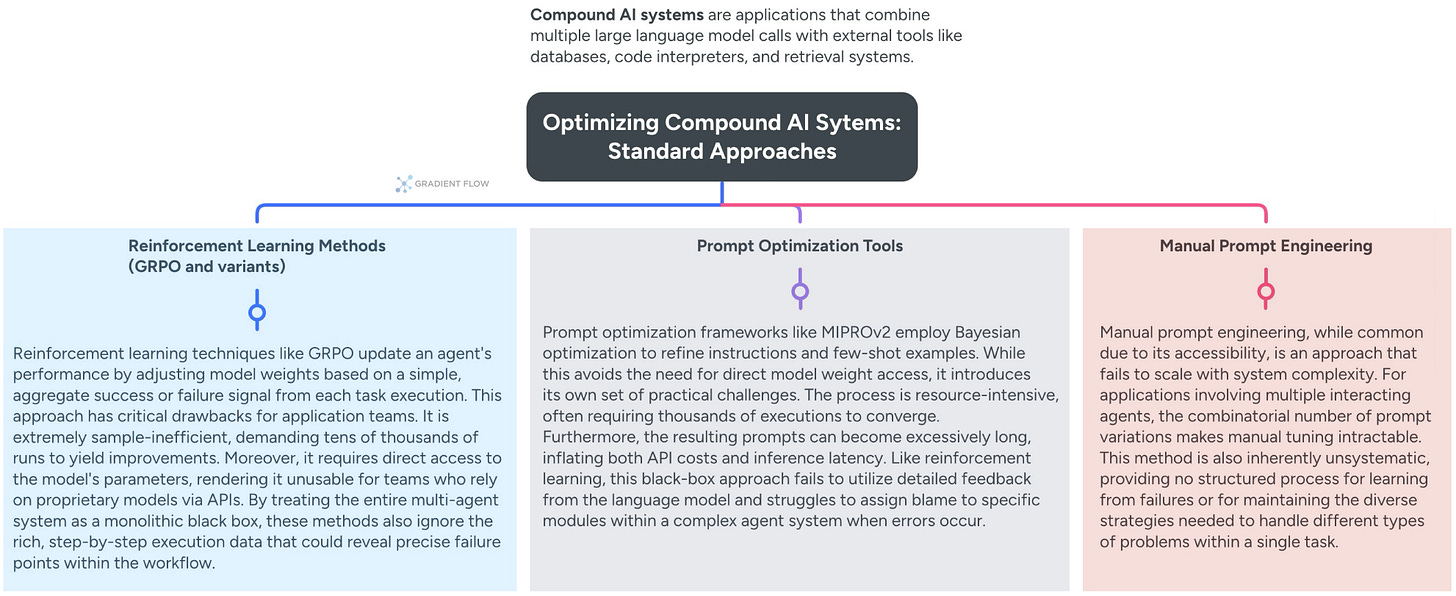

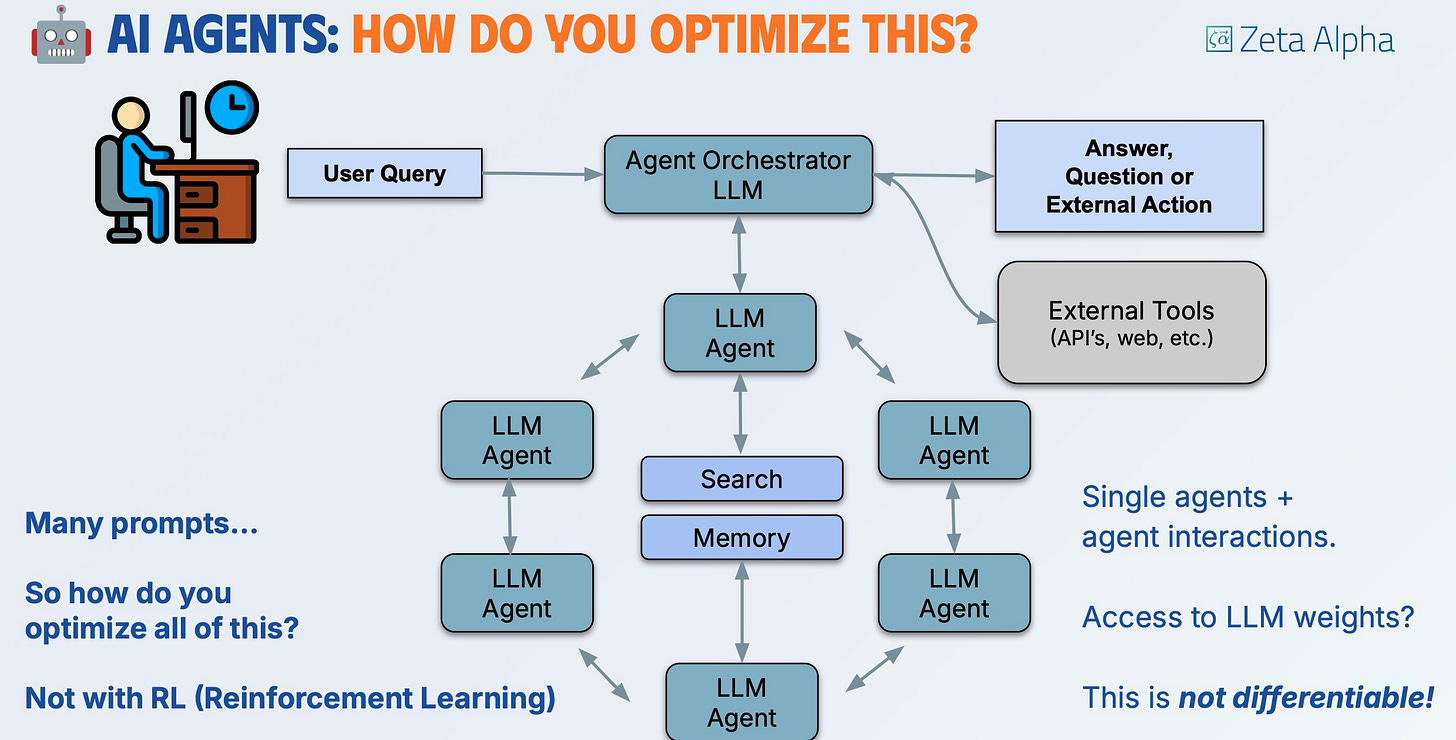

Modern AI applications have evolved far beyond single models. Many systems orchestrate multiple specialized agents — planners that decompose tasks, extractors that gather data, generators that create content — all coordinating through external tools and APIs. This architectural shift creates a fundamental optimization problem: the entire workflow becomes non-differentiable, making traditional gradient-based training methods impossible to apply.

The challenge runs deeper than technical complexity. Most development teams work with models through APIs, without access to underlying parameters. They can’t fine-tune weights even if they wanted to. This constraint transforms what should be systematic optimization into costly trial-and-error, where engineers manually adjust prompts and hope for improvement.

At the recent AI Conference, I was struck by how often reliability surfaced in my conversations with developers. Team after team described the same frustration: their AI systems worked impressively in demos but proved brittle under real-world conditions. The gap between proof-of-concept success and production-grade reliability has become the defining challenge for organizations deploying compound AI systems.

This pattern appears consistently across domains: customer service agents, automated analysis workflows, and research assistants that fail to mature into production-ready systems. Teams burn through resources debugging edge cases and tweaking prompts, yet performance remains fragile. The core issue? Current approaches treat these complex workflows as black boxes, reducing rich diagnostic information to simple success/failure scores.

A Different Approach: Evolution Through Language

Zeta Alpha presented an intriguing alternative at the recent AI Conference that reframes the entire optimization problem. Rather than treating agent systems as impenetrable black boxes, their approach uses the language model itself as an optimization engine.

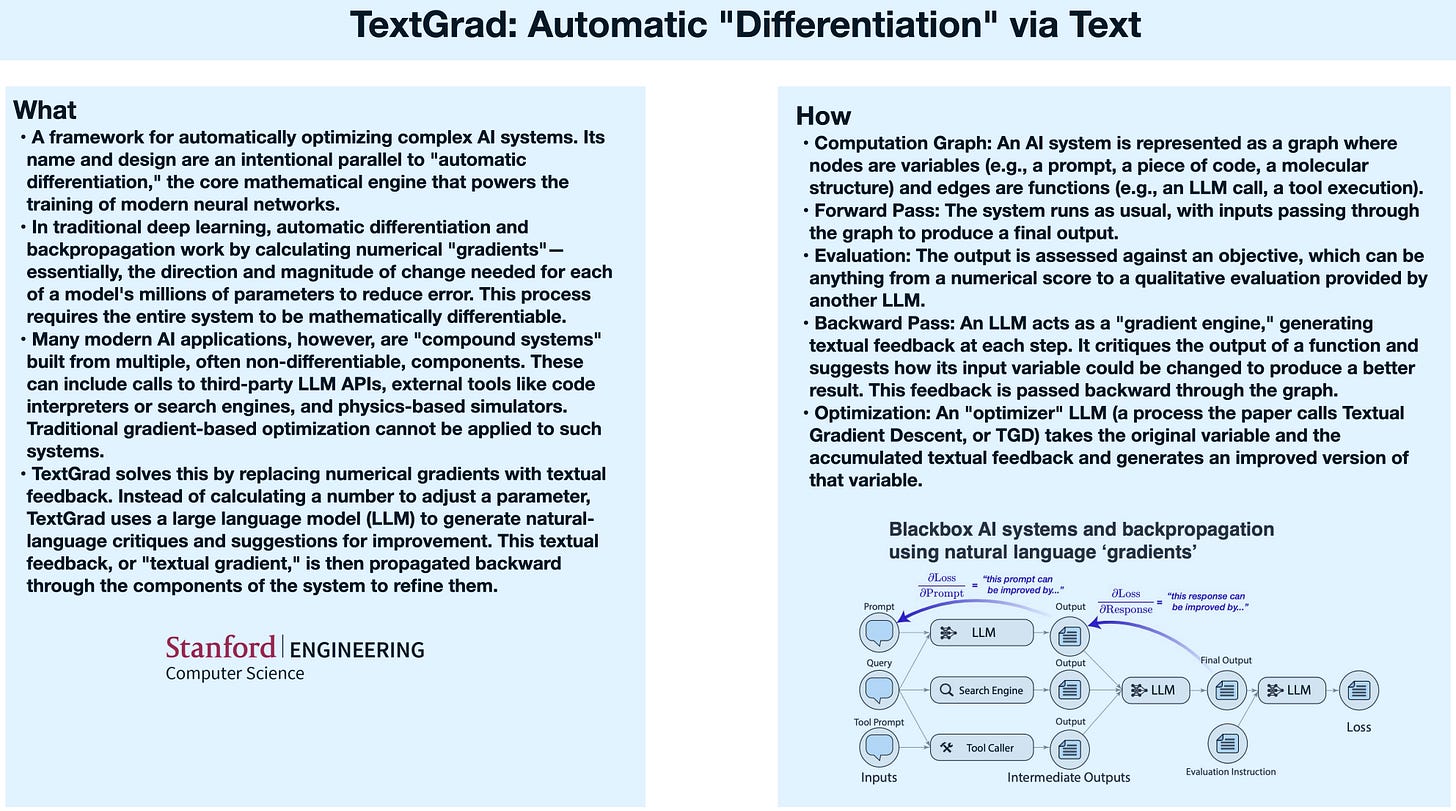

The key insight draws from recent research in genetic algorithms and what researchers call “textual gradients.” Instead of numerical scores, the system generates detailed natural language critiques of agent performance — specific feedback about flawed reasoning steps, ineffective tool usage, or coordination failures. Think of it as having an expert reviewer examine every execution trace and provide actionable commentary.
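To make the idea concrete, here is a minimal sketch of how an execution trace might be rendered into a prompt that asks a judge model for a step-level critique. The `TraceStep` schema, the `build_critique_prompt` helper, and the prompt wording are hypothetical illustrations, not Zeta Alpha's implementation. The LLM call itself is left out so the snippet runs without an API key.

```python
from dataclasses import dataclass


@dataclass
class TraceStep:
    """One step of an agent execution trace (hypothetical schema)."""
    agent: str        # component that acted, e.g. "planner"
    action: str       # what it did: a tool call, a sub-query, generated text
    observation: str  # what came back from the tool or downstream agent


def build_critique_prompt(task: str, steps: list[TraceStep], outcome: str) -> str:
    """Render a trace as text and ask a judge model for step-level critique."""
    lines = [f"Task: {task}", "Execution trace:"]
    for i, step in enumerate(steps, start=1):
        lines.append(f"{i}. [{step.agent}] {step.action} -> {step.observation}")
    lines.append(f"Outcome: {outcome}")
    # Ask for attributable, actionable feedback rather than a single score.
    lines.append(
        "For each step that contributed to a poor outcome, name the agent, "
        "explain what went wrong (reasoning, tool usage, or coordination), "
        "and propose a concrete change to that agent's instructions."
    )
    return "\n".join(lines)


if __name__ == "__main__":
    steps = [
        TraceStep("planner", "split task into 2 sub-queries", "ok"),
        TraceStep("extractor", "search('GEPA prompt evolution')", "0 results"),
        TraceStep("writer", "draft report", "report cites no sources"),
    ]
    print(build_critique_prompt("Summarize recent work on GEPA", steps,
                                "judge rejected report: unsupported claims"))
```

The returned string would be sent to whichever model the team already uses; its free-text answer plays the role of the "textual gradient" that guides the next revision of the blamed agent's prompt.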

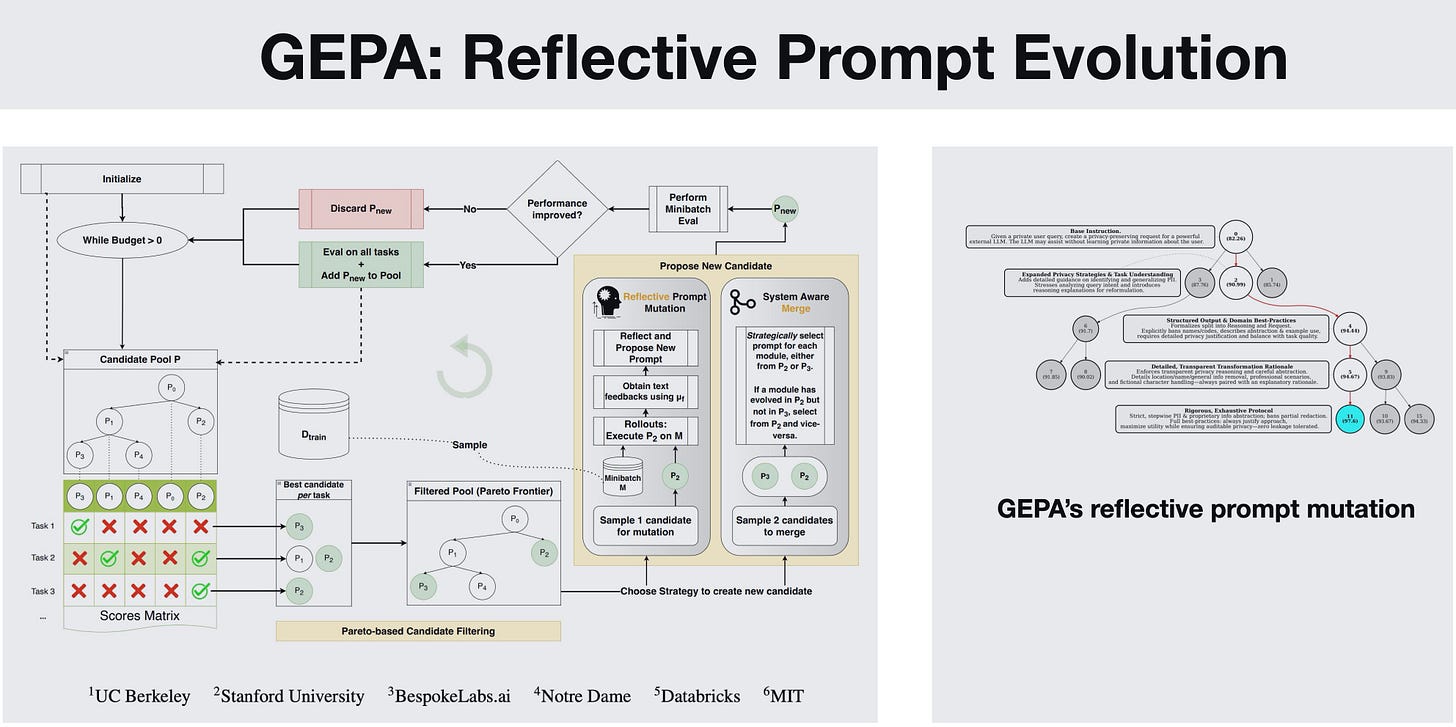

This textual feedback feeds into an evolutionary search process based on GEPA (Genetic-Pareto), where agent configurations undergo systematic mutation and selection cycles guided by natural language understanding rather than sparse numerical rewards. By analyzing execution traces as structured text rather than numerical signals, GEPA enables compound AI systems to learn complex behaviors from dozens of examples instead of tens of thousands.
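The "Pareto" half of the search can be illustrated with a simplified sketch, assuming every candidate configuration has been scored on the same small set of calibration examples. Instead of keeping only the candidate with the best average, it keeps every candidate that is best on at least one example, drops any that another such candidate dominates outright, and samples the next parent to mutate in proportion to how many examples it wins. The function names and score format are illustrative assumptions; this is a sketch in the spirit of GEPA, not its reference code.

```python
import random


def dominates(a: list[float], b: list[float]) -> bool:
    """True if score vector a is >= b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_frontier(scores: dict[str, list[float]]) -> dict[str, int]:
    """Map each surviving candidate to the number of examples on which it is best.

    scores[c][i] is candidate c's score on calibration example i.
    """
    n_examples = len(next(iter(scores.values())))
    wins: dict[str, int] = {}
    for i in range(n_examples):
        best = max(s[i] for s in scores.values())
        for cand, s in scores.items():
            if s[i] == best:  # ties: every co-leader gets credit
                wins[cand] = wins.get(cand, 0) + 1
    # Drop any per-example winner that another winner dominates outright.
    return {
        c: w for c, w in wins.items()
        if not any(dominates(scores[d], scores[c]) for d in wins if d != c)
    }


def sample_parent(scores: dict[str, list[float]], rng: random.Random) -> str:
    """Pick the next candidate to mutate, weighted by its number of wins."""
    frontier = pareto_frontier(scores)
    cands = list(frontier)
    return rng.choices(cands, weights=[frontier[c] for c in cands], k=1)[0]


if __name__ == "__main__":
    scores = {
        "v0": [0.5, 0.5, 0.5],
        "v1": [0.9, 0.2, 0.5],
        "v2": [0.4, 0.8, 0.5],
        "v3": [0.3, 0.1, 0.4],
    }
    print(pareto_frontier(scores))  # {'v1': 2, 'v2': 2, 'v0': 1}
    print(sample_parent(scores, random.Random(0)))
```

In this toy run, v3 never becomes a parent, while v0, v1, and v2 all survive even though v2 alone has the highest mean. Keeping specialists that excel on different examples is what lets the search combine their strengths later instead of collapsing onto one local optimum.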

Tournament-Style Competition for Continuous Improvement

Zeta Alpha has developed a working prototype that will soon be integrated into their Deep Research system — a multi-stage pipeline where agents collaborate to generate comprehensive research reports. Their implementation uses an elegant tournament structure: newly evolved agent variants compete head-to-head against existing configurations, with language models serving as judges.
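One practical detail when language models act as judges is position bias: a judge may favor whichever answer it sees first. A common mitigation, assumed here rather than taken from Zeta Alpha's description, is to judge each pair twice with the order swapped and to count inconsistent verdicts as a draw. The sketch below runs one round in which every new variant plays every incumbent. `judge` is any callable you supply (in practice a wrapper around an LLM API), and all names are hypothetical.

```python
from typing import Callable

# judge(task, first_output, second_output) -> "first" or "second".
# In practice this would wrap an LLM call; here it is any Python callable.
Judge = Callable[[str, str, str], str]


def judge_pair(judge: Judge, task: str, out_a: str, out_b: str) -> float:
    """Score for A: 1.0 win, 0.0 loss, 0.5 if the verdict flips with order."""
    a_wins_shown_first = judge(task, out_a, out_b) == "first"
    a_wins_shown_second = judge(task, out_b, out_a) == "second"
    if a_wins_shown_first and a_wins_shown_second:
        return 1.0
    if not a_wins_shown_first and not a_wins_shown_second:
        return 0.0
    return 0.5  # inconsistent verdicts suggest position bias: call it a draw


def run_round(judge: Judge, task: str,
              challengers: dict[str, str],
              incumbents: dict[str, str]) -> list[tuple[str, str, float]]:
    """Every challenger plays every incumbent once on the same task.

    challengers/incumbents map a variant id to the report it produced.
    Returns (challenger_id, incumbent_id, challenger_score) records.
    """
    return [
        (c_id, i_id, judge_pair(judge, task, c_out, i_out))
        for c_id, c_out in challengers.items()
        for i_id, i_out in incumbents.items()
    ]


if __name__ == "__main__":
    # Toy judge for demonstration only: prefers the longer report.
    def longer_wins(task: str, first: str, second: str) -> str:
        return "first" if len(first) >= len(second) else "second"

    print(run_round(longer_wins, "Survey GEPA",
                    {"v5": "a detailed, sourced report"},
                    {"v2": "short draft", "v3": "an even more detailed, sourced report"}))
```

The toy `longer_wins` judge exists only to make the snippet runnable; a real judge would read both reports against a rubric.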

These competitions update Elo ratings for each variant, creating a dynamic leaderboard of agent effectiveness. The system can even merge successful elements from different evolutionary branches, combining an effective planning prompt from one variant with a superior writing prompt from another. This enables true system-level optimization that component-wise tuning cannot achieve.
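The rating update itself can be the standard Elo formula: variant A's expected score against B is 1 / (1 + 10^((R_B − R_A) / 400)), and each rating moves by K times the gap between the actual and expected score. The sketch below assumes a starting rating of 1500 and K = 32 (conventional chess defaults, not values Zeta Alpha has published). It also includes a hypothetical `merge_variants` helper showing the module-level recombination described above, with each variant represented as a mapping from module name to prompt.

```python
def elo_update(ratings: dict[str, float], a: str, b: str, score_a: float,
               k: float = 32.0, initial: float = 1500.0) -> None:
    """Update ratings in place after one match; score_a is 1, 0.5, or 0."""
    ra = ratings.get(a, initial)
    rb = ratings.get(b, initial)
    expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / 400.0))
    ratings[a] = ra + k * (score_a - expected_a)
    # B's actual and expected scores are the complements of A's.
    ratings[b] = rb + k * ((1.0 - score_a) - (1.0 - expected_a))


def leaderboard(ratings: dict[str, float]) -> list[tuple[str, float]]:
    """Variants sorted from highest to lowest rating."""
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


def merge_variants(variants: dict[str, dict[str, str]],
                   picks: dict[str, str]) -> dict[str, str]:
    """Hypothetical crossover: take each module's prompt from a chosen variant.

    variants maps variant id -> {module name -> prompt};
    picks maps module name -> the variant id to borrow that module from.
    """
    return {module: variants[vid][module] for module, vid in picks.items()}


if __name__ == "__main__":
    ratings: dict[str, float] = {}
    for a, b, s in [("v1", "v0", 1.0), ("v2", "v0", 0.5), ("v2", "v1", 1.0)]:
        elo_update(ratings, a, b, s)
    print(leaderboard(ratings))

    variants = {
        "v7": {"planner": "Plan in 3 explicit steps.", "writer": "Write tersely."},
        "v12": {"planner": "Plan freely.", "writer": "Cite a source for every claim."},
    }
    print(merge_variants(variants, {"planner": "v7", "writer": "v12"}))
```

Because each update is zero-sum, the total rating pool stays constant. A newly merged variant would enter at the initial rating and earn its place on the leaderboard through further matches.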

The practical advantages are compelling:

No model access required: Everything operates through API calls

Sample efficient: Learns from minimal calibration data rather than extensive datasets

Interpretable feedback: Natural language critiques explain exactly what needs improvement

Credit assignment: Traces failures to specific components within complex workflows
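For the credit-assignment point, one simple heuristic (an assumption for illustration, not a described feature of Zeta Alpha's system) is to have each critique name the components it blames in a failed run, tally those mentions, and mutate the most-blamed module next. Ties go to the earliest stage, on the assumption that upstream errors tend to propagate downstream. The `blame_counts` and `next_module_to_mutate` helpers are hypothetical.

```python
from collections import Counter


def blame_counts(blamed_per_run: list[list[str]]) -> Counter:
    """Count, per component, how many failed runs a critique blamed it in.

    blamed_per_run[i] lists the components named in the critique of failed run i
    (hypothetical format, e.g. parsed from the judge model's structured output).
    """
    counts: Counter = Counter()
    for agents in blamed_per_run:
        counts.update(set(agents))  # at most one count per component per run
    return counts


def next_module_to_mutate(blamed_per_run: list[list[str]], modules: list[str]) -> str:
    """Most-blamed module; ties go to the module earliest in the pipeline."""
    counts = blame_counts(blamed_per_run)
    return max(modules, key=lambda m: (counts[m], -modules.index(m)))


if __name__ == "__main__":
    pipeline = ["planner", "extractor", "writer"]
    critiques = [["extractor", "writer"], ["extractor"], ["planner"]]
    print(blame_counts(critiques))
    print(next_module_to_mutate(critiques, pipeline))  # extractor
```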

Looking Forward: Structural Evolution

While this evolutionary framework represents significant progress, we’re still in early stages of what’s possible. The most exciting frontier involves extending these techniques beyond prompt optimization to tackle structural design itself.

Imagine applying the same evolutionary principles to discover optimal agent architectures, systematically testing different pipeline configurations, tool selections, and coordination protocols. By integrating tournament-based selection with continuous user feedback, we could create truly adaptive systems that evolve based on real-world performance rather than static benchmarks.
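As a thought experiment, the same loop could mutate the pipeline's shape rather than its prompts. The sketch below assumes a hypothetical config schema (an ordered list of stages plus a tool list per stage) and three toy mutation operators; each child would then be evaluated in the same judged tournament as prompt variants. Nothing here reflects an existing Zeta Alpha feature.

```python
import copy
import random

# Hypothetical pipeline schema: ordered stages plus the tools each stage may call.
BASE = {
    "stages": ["planner", "extractor", "writer"],
    "tools": {"extractor": ["web_search"]},
}
OPERATORS = ("add_tool", "drop_tool", "swap_stages")


def mutate_structure(config: dict, tool_pool: list[str],
                     rng: random.Random) -> tuple[dict, str]:
    """Return a copy with at most one structural change, plus the operator tried.

    The input config is never modified.
    """
    child = copy.deepcopy(config)
    op = rng.choice(OPERATORS)
    if op == "add_tool":
        stage = rng.choice(child["stages"])
        current = child["tools"].setdefault(stage, [])
        options = [t for t in tool_pool if t not in current]
        if options:
            current.append(rng.choice(options))
    elif op == "drop_tool":
        with_tools = [s for s, ts in child["tools"].items() if ts]
        if with_tools:
            tools = child["tools"][rng.choice(with_tools)]
            tools.remove(rng.choice(tools))
    else:  # swap two adjacent stages, i.e. change the coordination order
        stages = child["stages"]
        if len(stages) >= 2:
            i = rng.randrange(len(stages) - 1)
            stages[i], stages[i + 1] = stages[i + 1], stages[i]
    return child, op


if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        child, op = mutate_structure(BASE, ["web_search", "code_exec", "sql"], rng)
        print(op, child)
```

Some mutations will produce implausible pipelines (a writer placed before the planner, say). A real system would either validate candidates up front or simply let such variants lose in the tournament.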

Language feedback beats silent metrics: textual critiques turn black-box agents into debuggable systems.

The implications for teams building AI applications are substantial. Instead of the current paradigm where optimization requires extensive manual effort and domain expertise, we’re moving toward systematic engineering processes that can reliably produce robust AI systems. This shift transforms agent optimization from an expensive bottleneck into a scalable, interpretable process that continuously improves production systems.

The “reality gap” between AI prototypes and production systems remains a critical challenge: agent specification, inter-agent coordination, and verification mechanisms still pose significant barriers to reliable deployment. But approaches like Zeta Alpha’s suggest a path forward, one where foundation models themselves become the optimization engines that help us build more reliable, efficient multi-agent systems. For practitioners, this means less time wrestling with prompt engineering and more time focusing on the unique value their applications provide.

Quick Takes

Evangelos Simoudis and I cover these three topics:

Ben Lorica edits the Gradient Flow newsletter and hosts the Data Exchange podcast. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, while also serving as the Strategic Content Chair for AI at the Linux Foundation. You can follow him on LinkedIn, X, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.
