Rethinking Databases for the Age of Autonomous Agents

As the AI community buzzes with the potential of autonomous agents, I've been pondering a less glamorous but critical question: what does this mean for our data infrastructure? We are designing intelligent, autonomous systems on top of databases built for predictable, human-driven interactions. What happens when software that writes software also provisions and manages its own data? This is an architectural mismatch waiting to happen, and one that demands a new generation of tools.

This isn't just about handling more transactions. It represents the next stage in a broader convergence of operational and analytical data systems, a trend accelerated by the cloud's elastic nature. The core challenge is no longer just how to support agents' actions, but how to make the data from those actions immediately available for analysis, insight, and retraining the next generation of models. Yet even before we tackle this convergence, we're struggling with the basics.

Consider what happened recently to a mid-sized e-commerce site. The operations team woke up to find their database crippled, response times through the roof. It wasn't a DDoS attack or a code bug. A single AI company's web crawler was hammering their product API with 39,000 requests per minute, each triggering complex database queries. According to recent analysis from Fastly, which monitors 6.5 trillion web requests monthly, this is becoming routine. AI bots from companies like Meta and OpenAI are already pushing database-backed systems to their breaking points with what are, fundamentally, simple read operations.

These bots are just the prelude. Their workload is relatively simple, consisting mostly of read-only operations — the database equivalent of window shopping. The real challenge will come when these bots evolve into agents that can take action. An agent won't just read a product page; it will perform a complex, multi-step task requiring a full transaction: checking inventory (SELECT), adding an item to a cart (UPDATE), processing an order (INSERT), and perhaps coordinating with other agents (JOINs and concurrent writes). This fundamental shift from read-heavy to transactional workloads will break systems designed for the former.

If basic fetchers can stall sites, how can we possibly support millions of autonomous agents performing complex, stateful tasks? With the tools we have today, the honest answer is: not easily. We need a new blueprint.

New Blueprints: Databases Designed for Agent Scale

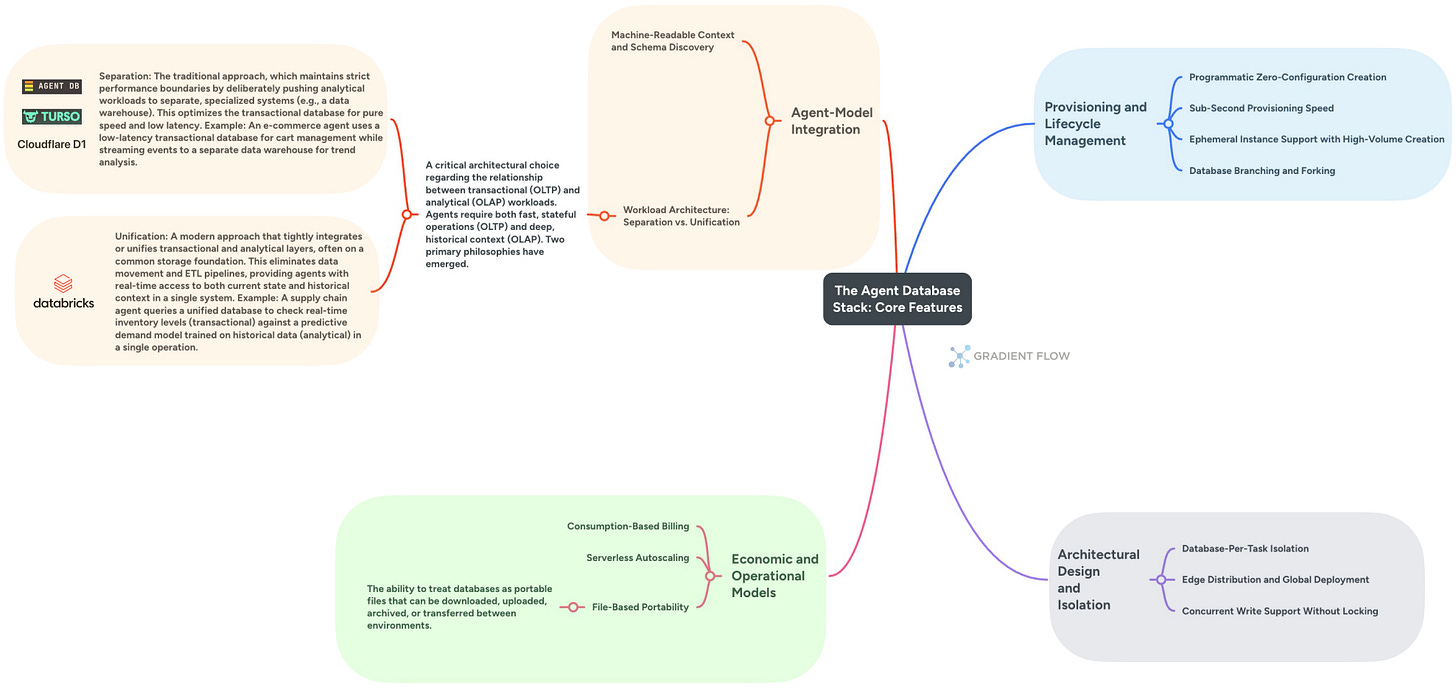

New architectural blueprints are emerging to meet this challenge. Some focus on agent-specific optimizations, while others, like the 'Lakebase' architecture articulated by Databricks co-founder Matei Zaharia, tackle the broader challenge of unifying operational and analytical systems. They aren't just faster versions of PostgreSQL or MySQL; they represent a fundamental rethink of how databases should work in an agent-driven world. Let me walk through the core principles driving this transformation.

First, treat databases like files: lightweight, fast, and ephemeral. The biggest shift is moving from databases as permanent infrastructure requiring careful provisioning to treating them as lightweight, disposable artifacts. Agent DB and Neon (acquired by Databricks) exemplify this approach with sub-second database creation requiring nothing more than a unique identifier. A code-generation agent can spin up a test database for each pull request in 500 milliseconds, run validation queries, and tear it down, all within a single CI pipeline step. Traditional databases requiring 10-minute provisioning wizards simply can't support this pattern. The scale of this shift is staggering: agents are already creating four times as many databases as humans. Neon recently reported that AI agents went from creating 30% to over 80% of all new databases on their platform within months. This pattern of high-frequency creation and deletion is the new normal.
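The per-pull-request lifecycle can be sketched with a local SQLite file standing in for a managed service. This is not the Agent DB or Neon API (both are hosted services with their own interfaces), and the helpers `ephemeral_database` and `validate_migration` are hypothetical. The sketch only shows the shape of the pattern: create a database keyed by a unique identifier, run validation queries, and delete it when the step ends.

```python
import contextlib
import os
import sqlite3
import tempfile
import uuid


@contextlib.contextmanager
def ephemeral_database(prefix: str = "pr"):
    """Create a throwaway SQLite database named by a unique ID; delete it on exit."""
    db_id = f"{prefix}-{uuid.uuid4().hex}"
    path = os.path.join(tempfile.gettempdir(), f"{db_id}.db")
    conn = sqlite3.connect(path)
    try:
        yield db_id, path, conn
    finally:
        # Runs even if validation raised, so no database is left behind.
        conn.close()
        os.remove(path)


def validate_migration(conn: sqlite3.Connection, migration_sql: str) -> bool:
    """Apply a candidate migration, then run a simple validation query against it."""
    conn.executescript(migration_sql)
    conn.execute("INSERT INTO users (email) VALUES ('a@example.com')")
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0] == 1


if __name__ == "__main__":
    with ephemeral_database("pr-42") as (db_id, path, conn):
        ok = validate_migration(conn, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL);")
        print(db_id, ok)
    print(os.path.exists(path))  # False: torn down after the step
```

Putting cleanup in a `finally` clause means a failed validation still tears the database down, which matters when agents create and discard databases at high frequency.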

Second, make databases speak the model's language. Agents shouldn't waste expensive tokens and cycles trying to figure out a database's schema. Systems like Agent DB implement the Model Context Protocol (MCP), providing agents with URLs containing everything needed: schema definitions, data types, even sample queries. The agent generates correct SQL on the first attempt without exploratory queries. Meanwhile, Turso's evolution of SQLite to support concurrent writers, rather than SQLite's traditional single-writer lock, means multiple agents can collaborate on shared data. Imagine a planning agent and three execution agents all updating a task database simultaneously without blocking each other.
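As an illustration of the kind of context such a URL might carry, the sketch below introspects a SQLite database into a JSON-serializable summary of tables, column types, sample rows, and an example query. It is not the Model Context Protocol's wire format or Agent DB's actual payload; `describe_schema` and its field names are assumptions chosen for readability.

```python
import json
import sqlite3


def describe_schema(conn: sqlite3.Connection, sample_rows: int = 2) -> dict:
    """Build a machine-readable schema summary an agent could read before writing SQL."""
    context = {"tables": []}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        quoted = '"' + table.replace('"', '""')  + '"'  # SQL identifier quoting
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
        columns = [
            {"name": name, "type": ctype, "not_null": bool(notnull), "primary_key": bool(pk)}
            for _cid, name, ctype, notnull, _default, pk in conn.execute(f"PRAGMA table_info({quoted})")
        ]
        rows = conn.execute(f"SELECT * FROM {quoted} LIMIT ?", (sample_rows,)).fetchall()
        context["tables"].append({
            "name": table,
            "columns": columns,
            "sample_rows": [list(r) for r in rows],
            "example_query": f"SELECT * FROM {quoted} LIMIT 10;",
        })
    return context


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL NOT NULL)")
    conn.execute("INSERT INTO products VALUES ('w1', 9.5)")
    print(json.dumps(describe_schema(conn), indent=2))
```

An agent, or a server acting on its behalf, could hand this JSON to the model up front instead of letting the model discover the schema one exploratory query at a time.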

Third, give every agent its own sandbox. Managing permissions for thousands of agents in a shared database is a security and operational nightmare. The new model, pioneered by platforms like Turso and Cloudflare D1, gives each agent or user session its own isolated database instance. Agent DB's file-per-db model makes sandboxes a first-class primitive. A financial analysis agent can create separate, encrypted databases for each client's data: complete isolation without complex permission matrices. These "personal silos" can be distributed to edge locations globally, co-locating data with compute to cut latency from hundreds of milliseconds to single-digit milliseconds.
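A database-per-tenant sandbox can be sketched with one SQLite file per client. The class `TenantDatabases` and the `holdings` table are hypothetical; Turso, Cloudflare D1, and Agent DB expose their own APIs, which are not shown here. Python's standard `sqlite3` module does not encrypt files, so the encryption described above would have to come from the hosting platform or an encryption extension.

```python
import sqlite3
import tempfile
from pathlib import Path


class TenantDatabases:
    """Hypothetical helper: each tenant gets its own SQLite file, so isolation comes
    from separate files rather than from row-level permission rules."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)
        self._conns: dict[str, sqlite3.Connection] = {}

    def connect(self, tenant_id: str) -> sqlite3.Connection:
        # Restrict IDs to letters and digits so they are safe to use as file names.
        if not tenant_id.isalnum():
            raise ValueError(f"invalid tenant id: {tenant_id!r}")
        if tenant_id not in self._conns:
            conn = sqlite3.connect(self.root / f"{tenant_id}.db")
            conn.execute(
                "CREATE TABLE IF NOT EXISTS holdings (symbol TEXT PRIMARY KEY, shares INTEGER NOT NULL)"
            )
            self._conns[tenant_id] = conn
        return self._conns[tenant_id]

    def close_all(self) -> None:
        for conn in self._conns.values():
            conn.close()
        self._conns.clear()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        dbs = TenantDatabases(Path(root))
        client_a = dbs.connect("clientA")
        client_a.execute("INSERT INTO holdings VALUES ('ACME', 100)")
        client_a.commit()
        # clientB's file has never seen clientA's rows.
        print(dbs.connect("clientB").execute("SELECT COUNT(*) FROM holdings").fetchone()[0])  # 0
        dbs.close_all()
```

Because each tenant's data lives in its own file, placing a tenant near its users could amount to placing that one file, rather than partitioning a shared schema.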

Fourth, bridge the gap to analytics. While the first three principles focus on optimizing the transactional workload itself, a parallel trend seeks to eliminate the wall between transactional and analytical systems. A prime example is the Lakebase approach, which embeds transactional capabilities directly into a data lakehouse, enabling applications to query historical patterns while maintaining transactional state. An inventory management agent can check real-time stock levels against predictive demand models without complex data pipelines. This operational-analytical convergence represents another path forward, particularly for organizations already invested in lakehouse architectures.
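The benefit of convergence can be sketched in miniature: when live inventory and model-generated demand forecasts sit in the same queryable store, the agent's check is a single join rather than a pipeline. Here one SQLite database stands in for a unified platform; the `demand_forecast` table is a hypothetical output of an analytical model, and nothing here reflects Lakebase's actual interfaces.

```python
import sqlite3


def load_demo_data(conn: sqlite3.Connection) -> None:
    """Hypothetical data: live stock (operational) next to model forecasts (analytical)."""
    conn.executescript("""
        CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER NOT NULL);
        CREATE TABLE demand_forecast (sku TEXT PRIMARY KEY, forecast_units INTEGER NOT NULL);
        INSERT INTO inventory VALUES ('widget', 5), ('gadget', 50), ('gizmo', 2);
        INSERT INTO demand_forecast VALUES ('widget', 20), ('gadget', 30), ('gizmo', 10);
    """)


def reorder_candidates(conn: sqlite3.Connection) -> list[tuple]:
    """Compare real-time stock against forecast demand in one query, largest shortfall first."""
    return conn.execute("""
        SELECT i.sku, i.qty AS on_hand, f.forecast_units,
               f.forecast_units - i.qty AS shortfall
        FROM inventory AS i
        JOIN demand_forecast AS f ON f.sku = i.sku
        WHERE f.forecast_units > i.qty
        ORDER BY shortfall DESC
    """).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_demo_data(conn)
    print(reorder_candidates(conn))  # [('widget', 5, 20, 15), ('gizmo', 2, 10, 8)]
```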

We're already seeing these ideas in action: developer agents spin up temporary databases for CI runs, planning agents fork databases to test strategies in parallel, and product agents create private data silos for each user at the edge. This is what becomes possible when we give agents disposable, private workspaces that live right next to their code.
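The forking pattern can be sketched with SQLite's online backup API, which Python exposes as `Connection.backup`: each fork is an independent copy, so strategies can be tried without touching the original. The `products` table and the discount strategies are hypothetical. Managed platforms may implement forks more cheaply (for example, with copy-on-write branching); this sketch simply copies every page.

```python
import sqlite3


def make_base() -> sqlite3.Connection:
    """Hypothetical shared starting state that every fork begins from."""
    base = sqlite3.connect(":memory:")
    base.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL NOT NULL)")
    base.executemany("INSERT INTO products VALUES (?, ?)", [("a", 10.0), ("b", 20.0)])
    base.commit()  # commit before forking so the copied state is settled
    return base


def fork(base: sqlite3.Connection) -> sqlite3.Connection:
    """Copy the full contents of `base` into a new, independent in-memory database."""
    clone = sqlite3.connect(":memory:")
    base.backup(clone)
    return clone


def try_discount(conn: sqlite3.Connection, pct: float) -> float:
    """Hypothetical strategy: discount every product, then return the summed catalog price."""
    conn.execute("UPDATE products SET price = ROUND(price * (1 - ?), 2)", (pct / 100,))
    conn.commit()
    return conn.execute("SELECT SUM(price) FROM products").fetchone()[0]


if __name__ == "__main__":
    base = make_base()
    print({pct: try_discount(fork(base), pct) for pct in (10, 25)})
    print(base.execute("SELECT SUM(price) FROM products").fetchone()[0])  # base is unchanged
```

Because the forks share nothing, each could be handed to a different agent or process to run concurrently; here they run one after another for simplicity.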

From Storage to Memory: Building Truly Stateful AI

These new database capabilities are more than just infrastructure solutions; they are the foundation for creating truly stateful AI agents. The critical link between this new infrastructure and agent intelligence is memory.

Don't make your agent guess. Provide a machine-readable context that eliminates exploratory queries.

Memory is the logical layer above this foundation. Frameworks like Letta define the logic of how an agent manages context: the rules for what stays in its "RAM" versus its "disk." But it's your database choice that determines whether that logic performs well in practice. The database is the agent's external "disk." Unified platforms that combine structured data and vector search are ideal for this role: they make retrieval fast and debugging simple, and let you defer specialized vector stores until scale truly demands them. Some platforms take this further by unifying operational and analytical layers entirely. When transactional databases can directly access lakehouse tables, agents gain far richer context without the latency and complexity of data movement.
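A minimal sketch of the "RAM"/"disk" split, assuming a single SQLite table holds both structured fields and embeddings. The class `AgentMemory`, the `category` column, and the 2-dimensional toy embeddings are hypothetical, and this is not Letta's API. Retrieval filters on a structured column in SQL and ranks by cosine similarity in Python; a production platform would use a real vector index rather than this brute-force scan.

```python
import json
import math
import sqlite3
from collections import deque


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    """Hypothetical two-tier memory: a bounded in-context buffer ("RAM")
    backed by a SQLite table ("disk") holding text, metadata, and embeddings."""

    def __init__(self, conn: sqlite3.Connection, context_size: int = 3) -> None:
        self.conn = conn
        self.context: deque = deque(maxlen=context_size)  # oldest items fall out automatically
        conn.execute(
            "CREATE TABLE IF NOT EXISTS memories ("
            " id INTEGER PRIMARY KEY, category TEXT NOT NULL,"
            " text TEXT NOT NULL, embedding TEXT NOT NULL)"  # embedding stored as a JSON array
        )

    def remember(self, category: str, text: str, embedding: list[float]) -> None:
        """Persist every memory to disk; keep only the most recent few in context."""
        self.conn.execute(
            "INSERT INTO memories (category, text, embedding) VALUES (?, ?, ?)",
            (category, text, json.dumps(embedding)),
        )
        self.conn.commit()
        self.context.append(text)

    def recall(self, category: str, query: list[float], k: int = 2) -> list[str]:
        """Structured filter in SQL, then brute-force cosine ranking in Python."""
        rows = self.conn.execute(
            "SELECT text, embedding FROM memories WHERE category = ?", (category,)
        ).fetchall()
        ranked = sorted(rows, key=lambda r: cosine(query, json.loads(r[1])), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Keeping the metadata filter and the vectors in one store means a poor recall can be inspected with ordinary SQL, which is the debugging simplicity the paragraph refers to.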

With this model in mind, the path forward for builders becomes clear:

Treat databases as ephemeral, task-specific resources, not permanent fixtures.

Prioritize isolation with database-per-task or per-user patterns for any multi-agent or multi-tenant application.

Unify your memory stack on platforms that combine relational data and vector search to simplify your architecture.

Consider where operational-analytical convergence matters for your use case — if agents need real-time access to both transactional state and analytical insights, explore platforms that unify these layers.

As agents handle the mechanics of provisioning and querying, our role becomes more curatorial. We shift from writing low-level code to the higher-level work of orchestrating how our systems handle both operational state and analytical intelligence. Whether through ephemeral databases optimized for agent workloads or integrated platforms that bridge transactional and analytical processing, the key is choosing the right architecture for your specific needs. Just as Google's search quality relies on relentless human refinement, the most effective agentic systems will be those where we constantly monitor, correct, and teach our agents what 'good' actually looks like — regardless of which database philosophy we embrace.


Ben Lorica edits the Gradient Flow newsletter and hosts the Data Exchange podcast. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, and serves as the Strategic Content Chair for AI at the Linux Foundation. You can follow him on LinkedIn, X, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.