What You Need to Know About GPT-4 and PaLM 2

Behind the Curtain: Unpacking GPT-4 and PaLM 2

As AI technology evolves, the most capable systems are becoming less open. Leading-edge models such as GPT-4 (OpenAI) and PaLM 2 (Google) disclose less about how they were built than their predecessors did. This shift is driven by several factors, including the mounting cost of training these models, the need to protect intellectual property, and concerns over potential misuse.

Despite the diminished transparency, valuable insights can still be gleaned from the comprehensive Technical Reports that model creators continue to release. These reports provide a wealth of information on model capabilities, benchmark results, and demonstrations. By carefully studying these reports, we can gain a better understanding of the developers' priorities and considerations. This knowledge can help us to better assess the potential risks and benefits of these models, and to develop strategies to mitigate possible harm.

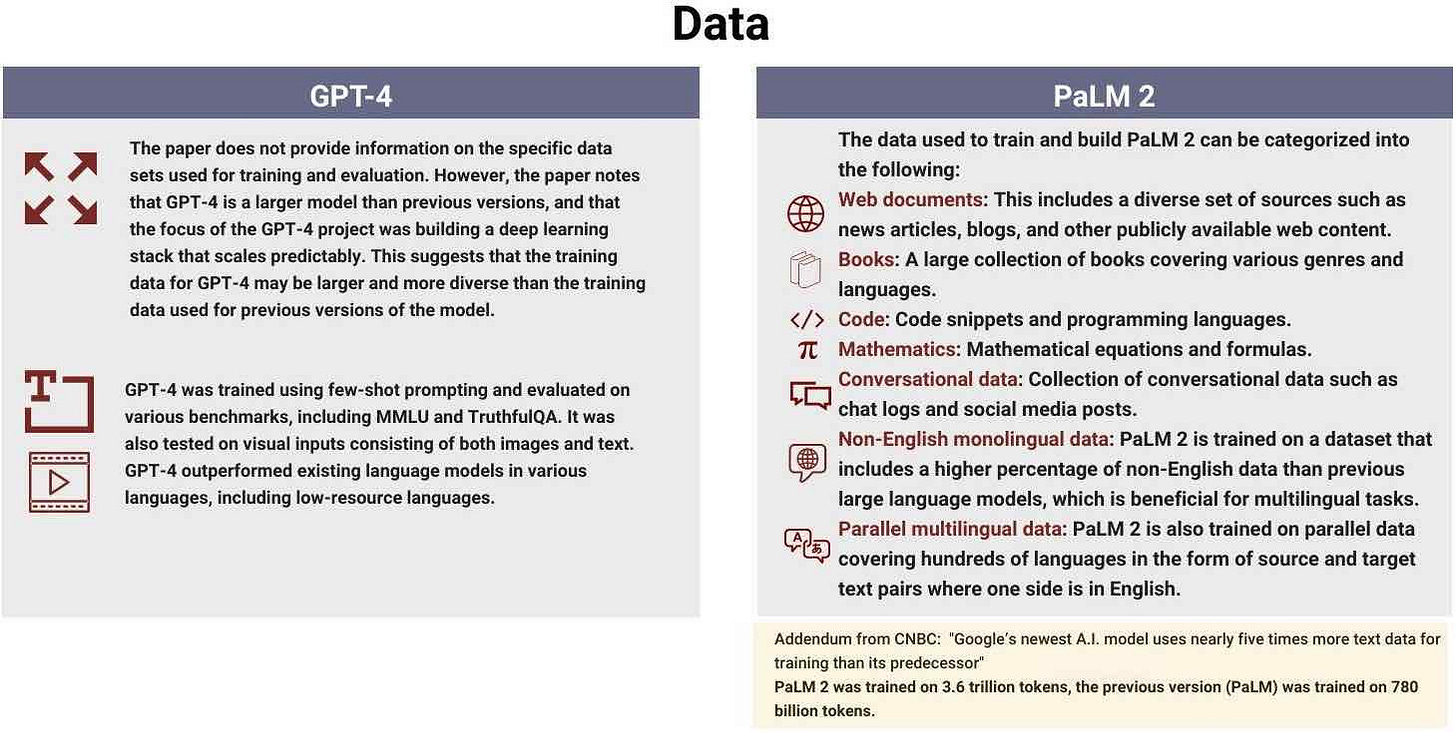

Data

GPT-4 is a larger model than its predecessors. It was trained on a diverse and extensive dataset of text and code, but OpenAI has not disclosed the specific datasets used. GPT-4 was evaluated on a variety of benchmarks, including tasks with visual inputs, and it outperformed existing language models in several languages, including low-resource ones.

In contrast, PaLM 2's training data sources are explicitly described. The model was trained on a massive dataset of web documents, books, code, mathematical data, conversational data, non-English monolingual data, and parallel multilingual data. PaLM 2 includes a higher percentage of non-English data than previous models, which fosters stronger multilingual capabilities. According to a recent CNBC article, Google's PaLM 2 was trained on 3.6 trillion tokens, nearly five times as many as the previous version of PaLM, which was trained on 780 billion tokens.

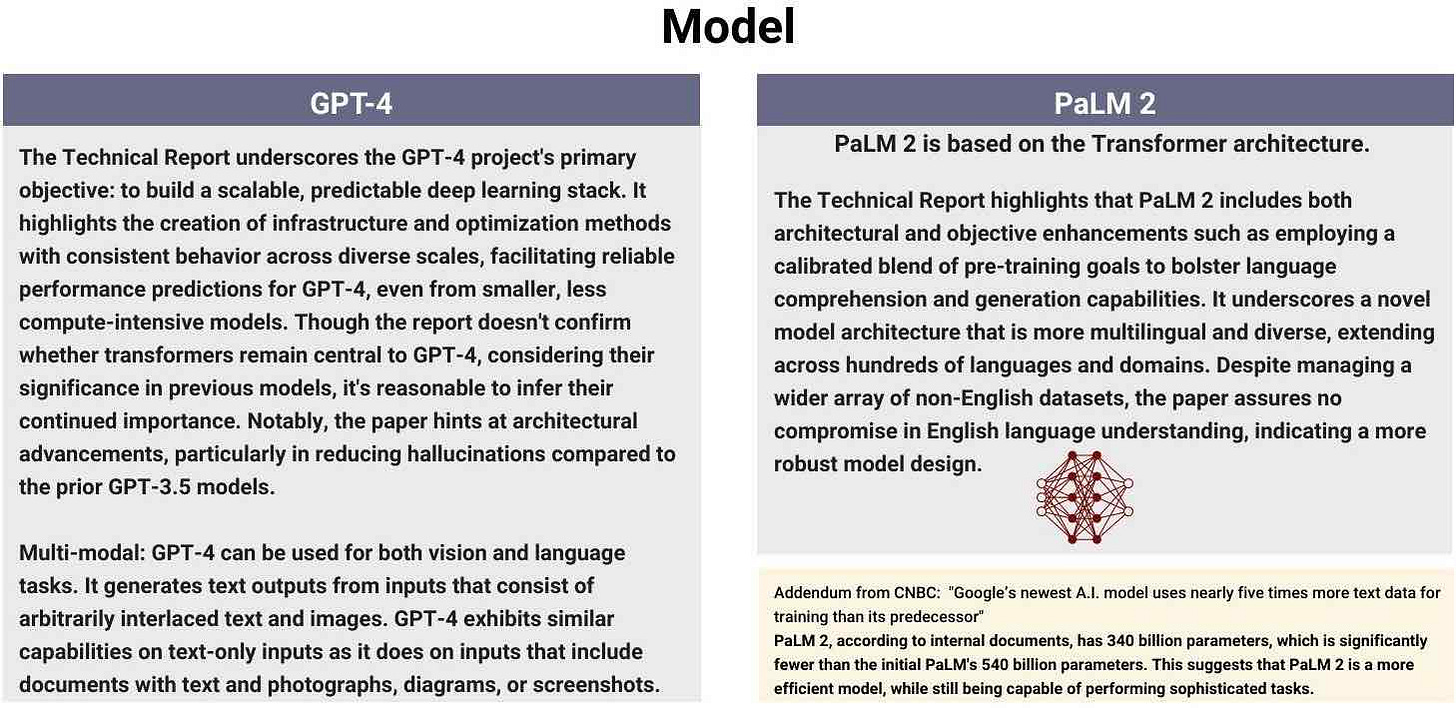

Model

The GPT-4 Technical Report emphasizes the development of a scalable, predictable deep learning stack and reports fewer hallucinations than the previous model, GPT-3.5. It describes GPT-4 as a multimodal model that accepts text and image inputs and generates text, and it states that the model performs similarly on text-only and multimodal inputs.

The PaLM 2 Technical Report, on the other hand, confirms the use of a Transformer-based architecture, with improvements to both the architecture and the training objectives. It outlines a more diverse, multilingual model capable of handling numerous languages and domains without compromising English language understanding.

PaLM 2, according to internal documents reviewed by CNBC, has 340 billion parameters, which is significantly fewer than the initial PaLM's 540 billion parameters. This suggests that PaLM 2 is a more efficient model that can still perform sophisticated tasks.
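To put the reported figures side by side, the short sketch below computes the ratios they imply. The inputs are the CNBC-reported numbers quoted in this article, which Google has not confirmed. The `6 * params * tokens` estimate of training compute is a common rule of thumb from the scaling-law literature and is an assumption here, not a figure from either technical report.

```python
# Figures as reported by CNBC (not confirmed in the PaLM 2 Technical Report).
PALM = {"params": 540e9, "tokens": 780e9}
PALM2 = {"params": 340e9, "tokens": 3.6e12}


def tokens_per_param(model):
    """Training tokens seen per model parameter."""
    return model["tokens"] / model["params"]


def approx_train_flops(model):
    """Rough training compute, assuming about 6 FLOPs per parameter per token."""
    return 6 * model["params"] * model["tokens"]


token_ratio = PALM2["tokens"] / PALM["tokens"]
param_ratio = PALM2["params"] / PALM["params"]
flops_ratio = approx_train_flops(PALM2) / approx_train_flops(PALM)

print(f"Tokens, PaLM 2 vs PaLM:      {token_ratio:.2f}x")
print(f"Parameters, PaLM 2 vs PaLM:  {param_ratio:.2f}x")
print(f"Tokens per parameter, PaLM:   {tokens_per_param(PALM):.2f}")
print(f"Tokens per parameter, PaLM 2: {tokens_per_param(PALM2):.2f}")
print(f"Approx. training compute, PaLM 2 vs PaLM: {flops_ratio:.2f}x")
```

On these numbers, PaLM 2 sees roughly 10.6 training tokens per parameter versus about 1.4 for PaLM. In other words, the smaller model was trained on far more data per parameter, which is the trade-off that compute-optimal scaling argues for.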

Nonetheless, model size still matters, alongside other factors. Recent research underscores the significance of model size and highlights the benefits of combining extensive data, advanced algorithms, and proprietary fine-tuning datasets.

Ethics and Alignment

Both GPT-4 and PaLM 2 were rigorously tested and fine-tuned to ensure responsible AI behavior. GPT-4 relied on expert-driven adversarial testing, Rule-Based Reward Models (RBRMs), reinforcement learning from human feedback (RLHF), and safety metrics that assess its responses to sensitive content. PaLM 2 focused on data cleaning and quality filtering, added control tokens for toxicity, used multilingual canaries in memorization evaluations, conducted an in-depth responsible AI analysis, tested in a few-shot, in-context learning scenario, and assessed bias and harm in its outputs. The two models used different, but complementary, strategies to achieve safer and more responsible AI.

Performance Evaluation

GPT-4 outperformed its predecessor, GPT-3.5, in most areas, excelling in academic and professional exams, factuality evaluations, language benchmarks, and the TruthfulQA benchmark. It also demonstrated proficiency with visual inputs, and its responses to ChatGPT prompts were preferred over those of GPT-3.5.

PaLM 2 was assessed on language proficiency exams, English question answering and classification tasks, translation quality, and code generation. It was compared primarily to its previous version, PaLM, and also to Google Translate for translation tasks. Both models showcased impressive capabilities, with improvements over their respective predecessors.

Inference

The GPT-4 technical documentation says little about the model's inference speed, a crucial aspect of performance. In contrast, the analysis presented in the PaLM 2 report reveals marked improvements over its predecessor. More specifically, PaLM 2 achieves a 1.5 times increase in speed and efficiency when operating on a CPU, and a 2.5 times improvement on a GPU. This performance boost suggests that PaLM 2 is well suited to tasks that demand fast, efficient language processing. It is also worth noting that, according to the internal documents reviewed by CNBC, PaLM 2 uses 200 billion fewer parameters than its predecessor.

Scaling Laws

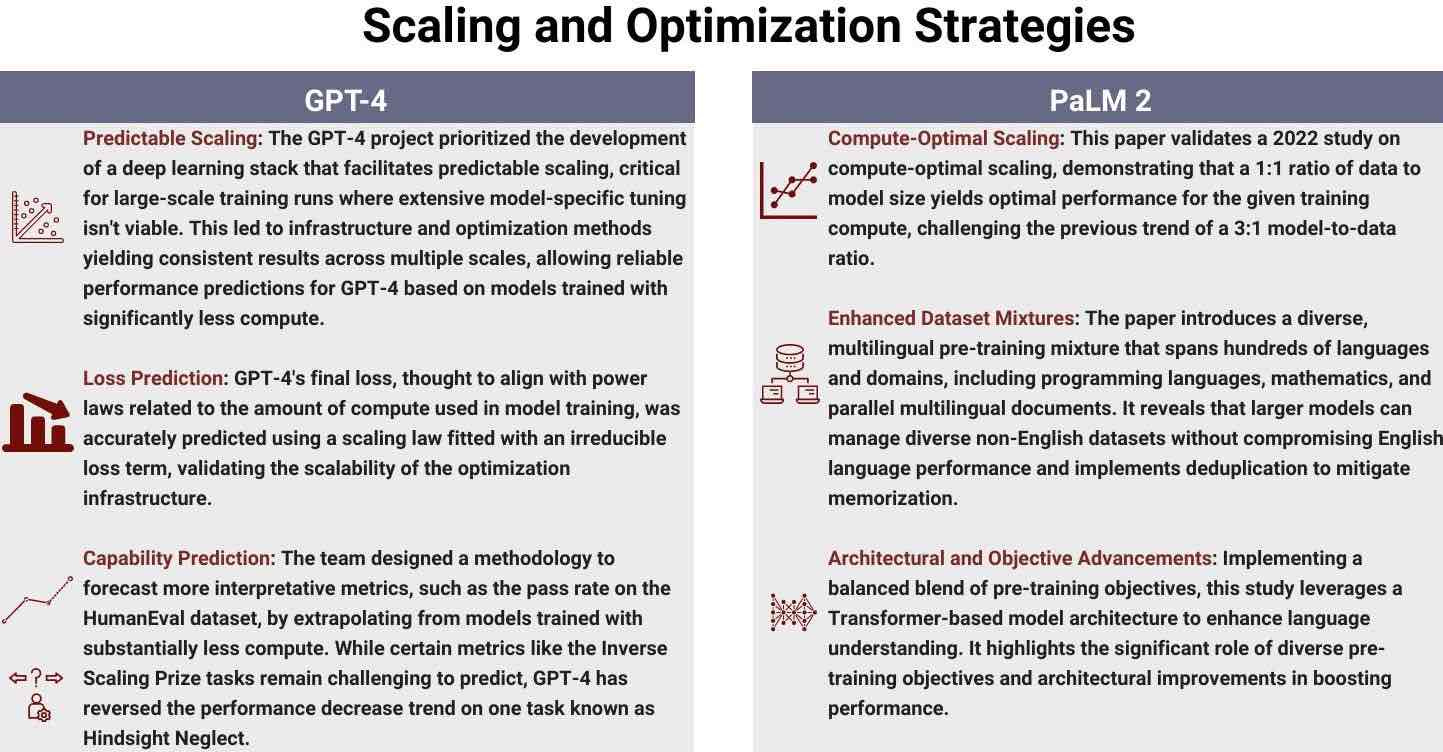

The GPT-4 project primarily focused on building a deep learning stack for predictable scaling, accurate loss prediction, and capability prediction across multiple scales, without extensive model-specific tuning. PaLM 2 centered on validating compute-optimal scaling and enhancing dataset mixtures across numerous languages and domains, while also emphasizing the importance of diverse pre-training objectives and architectural advancements for improved performance. Despite the different focus areas, both studies underscore the significance of scaling and optimization strategies in achieving superior language model performance.
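The idea behind predictable scaling can be illustrated with a minimal sketch: fit a power law to the final loss of a few small training runs, then extrapolate to a much larger compute budget. Everything below is illustrative. The data points are synthetic, generated from made-up constants (`TRUE_A`, `TRUE_B`), and the fit is a plain log-log least-squares regression. The GPT-4 report describes a fit that also includes an irreducible-loss term, which this simplified version omits.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) + b * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(y_mean - b * x_mean)
    return a, b


def predict_loss(a, b, compute):
    """Evaluate the fitted power law loss = a * compute ** b."""
    return a * compute ** b


# Synthetic "small run" results generated from a known power law (not real data).
TRUE_A, TRUE_B = 12.0, -0.05
small_runs = [1e18, 1e19, 1e20, 1e21]  # training compute in FLOPs
observed = [TRUE_A * c ** TRUE_B for c in small_runs]

a, b = fit_power_law(small_runs, observed)
target_compute = 1e24  # 1,000x beyond the largest run used for the fit

print(f"fitted a = {a:.3f}, b = {b:.4f}")
print(f"predicted loss at {target_compute:.0e} FLOPs: "
      f"{predict_loss(a, b, target_compute):.3f}")
```

Because the synthetic data is noise-free, the fit recovers the generating constants almost exactly. Real training runs are noisy, so in practice the quality of the extrapolation depends on how many small runs are available and how far the target lies beyond them.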

Summary

The technical reports for GPT-4 and PaLM 2 provide insights into the underlying priorities, strategies, and breakthroughs in the development of these cutting-edge language models. While GPT-4 emphasizes predictable scaling and capability prediction, PaLM 2 validates compute-optimal scaling and underscores the importance of diverse dataset mixtures and architectural advancements. Despite variations in their focus, both reports underscore the crucial role of scaling and optimization strategies in achieving superior performance. As AI continues to advance, these reports offer essential guidance for future research and development, paving the way for safer, more efficient, and effective AI systems.

Spotlight

Instacart modularized data pipelines using Lakehouse Architecture. Instacart restructured its Ads measurement platform to improve efficiency and reduce costs. They moved from a traditional ETL stack to a modular pipeline system built on Spark. This overhaul allowed for more effective data management, enhanced read optimization, monitoring, and SLA flexibility, and resulted in over 50% savings and better scalability.

Small yet Mighty: MIT's Leap in Scalable Language Models. Researchers recently developed smaller, self-learning language models that outperform larger counterparts in language understanding tasks. Using textual entailment and self-training, the models continually improve their performance and offer a cost-effective, privacy-preserving, and scalable alternative to large AI models. This breakthrough in language understanding opens up new possibilities in various applications.

Massively Multilingual Speech (MMS) project. Meta’s MMS project aims to significantly expand speech technology by creating a single model that supports over 1,100 languages for speech recognition, language identification, and text-to-speech functionality. The project leverages technologies like wav2vec 2.0 and self-supervised learning to amass a unique dataset of labeled and unlabeled data for numerous languages. The models and code have been shared publicly to enable further research.

Building a Self Hosted Question Answering Service in 20 minutes. This post describes how easy it is to build a retrieval-augmented question answering service using Ray and LangChain. The service first queries a search engine (or a vector database) for results, and then uses an LLM to summarize the results. This method yields greater accuracy compared to posing the question directly to the LLM, as it effectively mitigates the risk of the model generating fabricated responses.
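The retrieve-then-summarize pattern described in that post can be sketched in a few lines of plain Python. This is a toy illustration, not the post's implementation and not the Ray or LangChain API: `retrieve` ranks documents by simple word overlap as a stand-in for a search engine or vector database, and `fake_llm` is a hypothetical placeholder for a real model call.

```python
def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question (toy retriever)."""
    q_words = tokenize(question)
    ranked = sorted(documents,
                    key=lambda doc: len(q_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, passages):
    """Put the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")


def fake_llm(prompt):
    """Hypothetical stand-in for an LLM: returns the first context passage."""
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


def answer(question, documents, llm=fake_llm):
    """Retrieve supporting passages, then ask the model to answer from them."""
    passages = retrieve(question, documents)
    return llm(build_prompt(question, passages))


# Toy corpus for illustration only.
DOCS = [
    "Ray is a framework for scaling Python applications.",
    "LangChain helps compose applications around language models.",
    "Spark is an engine for large-scale data processing.",
]

print(answer("What is Ray?", DOCS))
```

The key design choice is that the model only sees the question together with retrieved passages, so its answer can be grounded in, and checked against, that context. Swapping `fake_llm` for a real model call and `retrieve` for a real index would leave the overall flow unchanged.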

Data Exchange Podcast

Building and Deploying Foundation Models for Enterprises. Jonas Andrulis is the Founder & CEO of Aleph Alpha, a startup that provides enterprise software solutions backed by large language models and multimodal models. Aleph Alpha focuses on critical enterprises such as law firms, healthcare providers, and financial institutions that require reliable and accurate information. In contrast to other large language models, Aleph Alpha provides a more reliable solution by providing sources and citations for its responses.

Robust AI Infrastructure for Critical Solutions. Alex Remedios, founder of Treebeardtech, is a London consultant who aids machine learning teams in building robust cloud infrastructures for critical AI solutions. We discuss his instrumental contribution to integrating Ray into the NHS Early Warning System, a platform used to predict daily COVID-19 admissions in the UK.

If you enjoyed this newsletter, please support our work by encouraging your friends and colleagues to subscribe.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Mastodon, T2, or Post. This newsletter is produced by Gradient Flow.