We Need Efficient and Transparent Language Models

Data Exchange podcast

Efficient Methods for Natural Language Processing: Roy Schwartz is Professor of NLP at The Hebrew University of Jerusalem. We discuss an important survey paper (co-written by Roy) that presents a broad overview of existing methods to improve NLP efficiency through the lens of NLP pipelines.

Building a premier industrial AI research and product group in three years: Hung Bui, CEO of Vietnam-based VinAI, explains how he built a team that, within three years, was listed among the Top 20 Global Companies in AI Research.

[Image: Cuypers Library – Inside the Rijksmuseum by Ben Lorica.]

Data & Machine Learning Tools and Infrastructure

Holistic Evaluation of Language Models. Stanford researchers recently introduced tools to help users and developers understand large language models (LLMs) in their totality. Given the central role of LLMs in NLP and in Generative AI, this suite of tools is an important step toward greater transparency for language models. I hope other researchers build on this exciting suite of techniques and ideas.

Benchmarking Open Table Formats. When migrating to a modern data warehouse or data lakehouse, selecting the right table format is crucial. Brooklyn Data Company just released an important new benchmark comparing two open source table formats, Delta Lake and Apache Iceberg. They found that Delta Lake was typically 1.5x to 3x faster for write workloads, and read workloads ran consistently 7x to 8x faster on Delta Lake.

Monolith, a large-scale, real-time recommendation system from ByteDance. Monolith addresses two problems faced by modern recommenders: (1) concept drift, meaning the underlying distribution of the training data is non-stationary; and (2) the features used by models are mostly sparse, categorical, and dynamically changing.
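To make the second problem concrete, here is a minimal sketch of one generic way to handle sparse, ever-changing categorical IDs: an embedding table keyed directly by raw IDs, where rows are created lazily instead of being drawn from a fixed, pre-built vocabulary. This is an illustration under stated assumptions, not Monolith's actual implementation; the class name `DynamicEmbeddingTable`, the `min_count` frequency threshold, and the plain SGD update are all hypothetical choices made for this example.

```python
import random
from collections import defaultdict
from typing import Dict, List


class DynamicEmbeddingTable:
    """Toy embedding table keyed directly by raw sparse feature IDs.

    Hypothetical illustration only (not ByteDance's Monolith code): rows are
    created lazily, so each new categorical ID gets its own vector instead of
    colliding with others in a fixed-size hashed vocabulary.
    """

    def __init__(self, dim: int, min_count: int = 2, seed: int = 0) -> None:
        self.dim = dim
        self.min_count = min_count  # assumed threshold before an ID earns its own row
        self.rng = random.Random(seed)
        self.counts: Dict[int, int] = defaultdict(int)
        self.rows: Dict[int, List[float]] = {}

    def lookup(self, feature_id: int) -> List[float]:
        """Return the embedding for feature_id, allocating a row once the ID is frequent enough."""
        self.counts[feature_id] += 1
        if feature_id in self.rows:
            return self.rows[feature_id]
        if self.counts[feature_id] < self.min_count:
            # Rare IDs get an all-zero vector so one-off IDs don't consume memory.
            return [0.0] * self.dim
        self.rows[feature_id] = [self.rng.uniform(-0.01, 0.01) for _ in range(self.dim)]
        return self.rows[feature_id]

    def sgd_update(self, feature_id: int, grad: List[float], lr: float = 0.1) -> None:
        """Apply one SGD step to the row for feature_id; no-op if no row exists yet."""
        row = self.rows.get(feature_id)
        if row is None:
            return
        for i, g in enumerate(grad):
            row[i] -= lr * g


# Usage: an ID is first seen, then seen again and allocated, then trained.
table = DynamicEmbeddingTable(dim=4, min_count=2)
table.lookup(42)  # first sighting: zero vector, no row allocated yet
table.lookup(42)  # second sighting: a row is allocated
table.sgd_update(42, [1.0, 0.0, 0.0, 0.0])
print(len(table.rows))  # 1
```

The frequency threshold is one assumed way to keep memory bounded when most IDs appear only once; a production system would also need some policy for evicting stale rows as the ID distribution drifts.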

Controlling Commercial Cooling Systems Using Reinforcement Learning. DeepMind's recent work on using RL to control HVAC systems in commercial facilities is impressive. The team identifies specific RL challenges and details how each was addressed in a real system. As a result, they achieved savings of roughly 9% and 13% during live testing in two real-world facilities. To get started with reinforcement learning, check out RLlib, a popular open source library for production-level, highly distributed RL workloads.

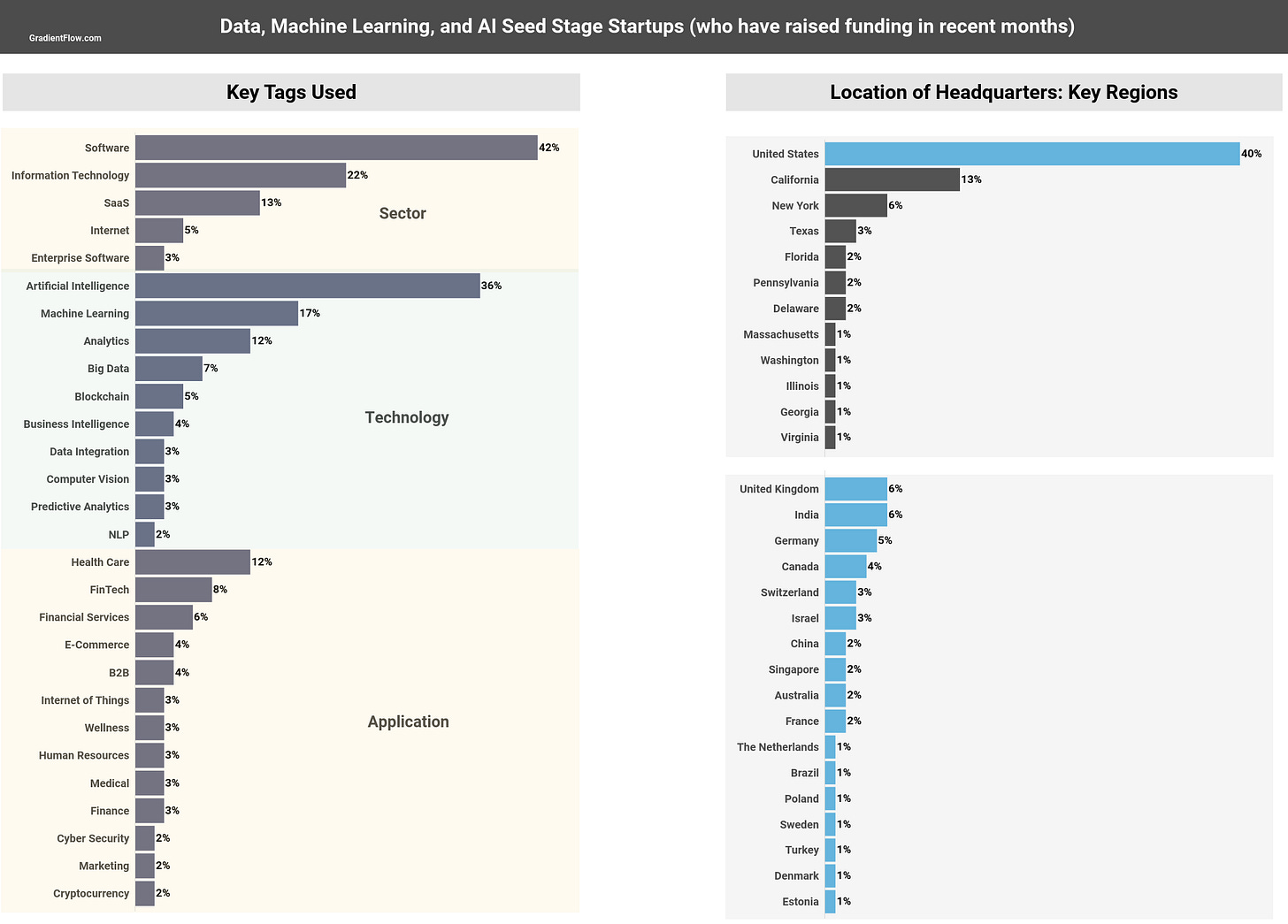

Sourcing Future Data and AI Pegacorns

In a previous newsletter, I noted the sharp decline in job postings across data and AI technologies and personas. The news isn't any better on the investment front: startup funding declined 53% year-over-year from Q3 2021 to Q3 2022. On the bright side, global seed-stage funding was roughly flat year-over-year in Q3 2022.

Where are future data and AI pegacorns likely to come from? In terms of region, California (and the U.S. more broadly) continues to be the most probable location. In our previous lists, we noted that most pegacorn companies sell applications. As data and AI technologies mature, building targeted applications continues to get easier. As the accompanying graphic shows, the financial and healthcare sectors remain popular targets for data and AI startups.

A Brief Social Update

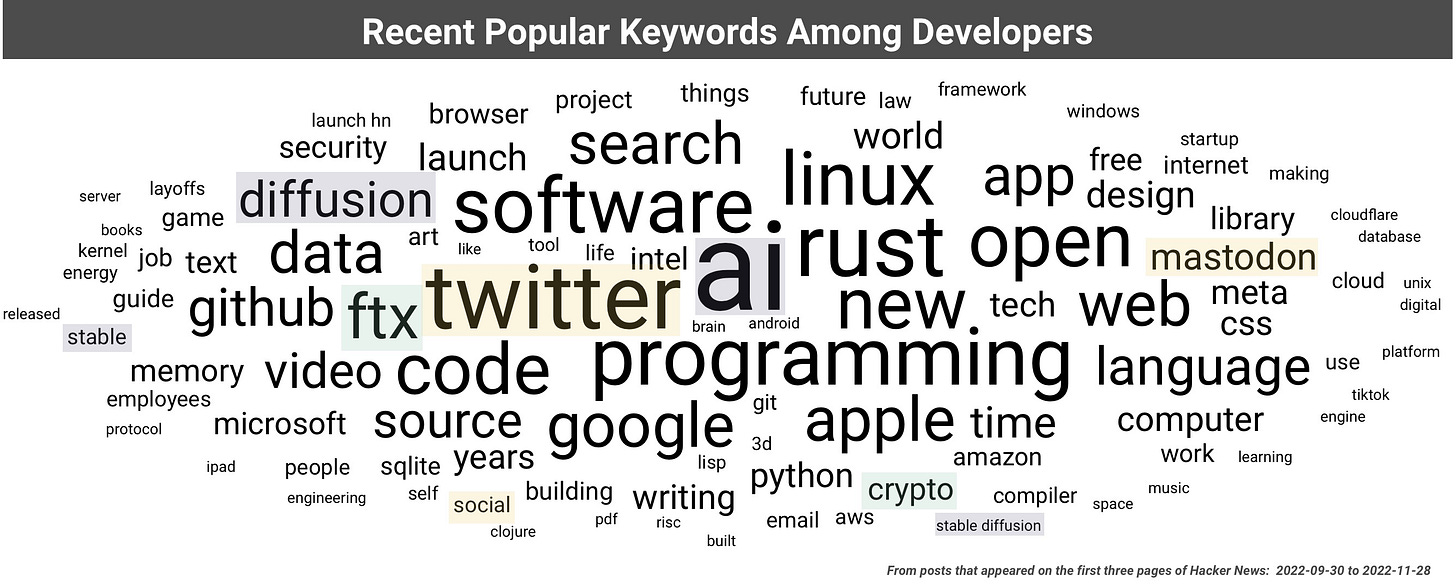

Since the chaos at Twitter started, I’ve been using it less. Instead, I’ve revived a long-dormant Mastodon account (@bigdata), and I’ve also been trying out Post (@bigdata), a new platform where some Twitter personalities are beginning to show up.

If you enjoyed this newsletter, please support our work by encouraging your friends and colleagues to subscribe.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Twitter, on Mastodon, or on Post. This newsletter is produced by Gradient Flow.