Unveiling the Future of AI: Insights on AI Chips, Knowledge Graphs, and AI Regulations

AI Chips: Past, Present, Future

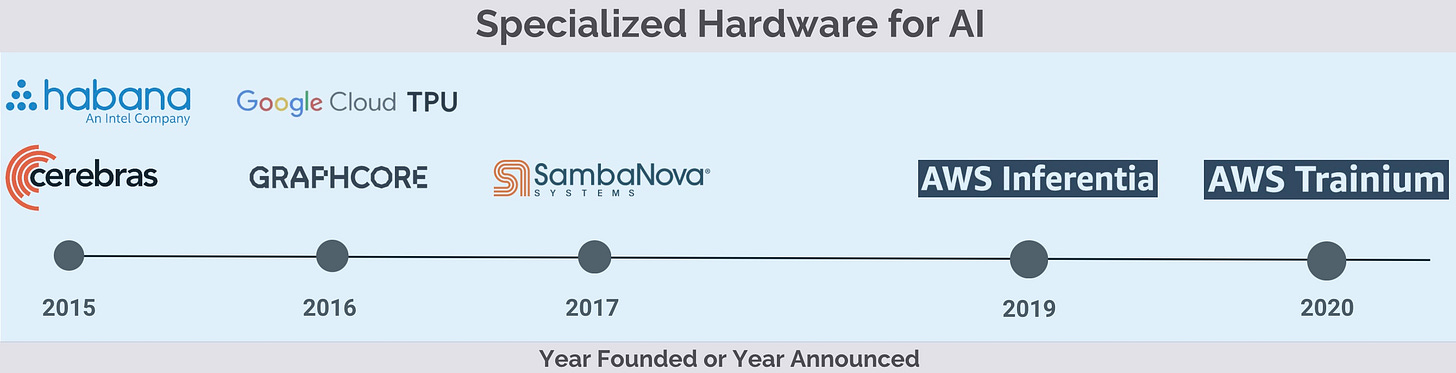

In a recent collaboration with Assaf Araki of Intel Capital, we delve into specialized hardware for Artificial Intelligence. The training of massive AI models has historically been dominated by Nvidia GPUs and Google TPUs. New entrants to the market face a significant challenge: without the resources to develop a software stack comparable to CUDA or XLA, their hardware accelerators are less competitive. One notable advancement in this direction is the software stack centered around PyTorch 2.0 and Triton, which aims to simplify the implementation of non-Nvidia backends.

We reassess our previous views on specialized hardware for AI. Looking ahead, we examine the hardware ramifications of contemporary trends in the field, such as the exponential growth of model size, interest in data-centric AI, the growing excitement surrounding foundation models and generative AI, and the advent of decentralized custom models.

Knowledge Graphs

Knowledge graphs are not new. I expect usage of knowledge graphs to grow in the coming years as language models and AI applications gain traction. An AI application can benefit from knowledge graphs because they provide a structured, interconnected representation of data. This allows AI algorithms to better understand and make use of the information they are working with. By providing an interconnected network of relationships between different entities, knowledge graphs enable AI applications to better comprehend the context and relationships between data points.

The use of knowledge graphs enables AI systems to solve problems more effectively and more efficiently, without getting lost in a sea of data. For example, imagine trying to find information about a specific person. A knowledge graph can provide a quick overview of that person's background, relationships, and relevant facts without having to search through countless pages of unorganized information. Knowledge graphs are a key component of digital assistants and search engines, and they contribute to a wide range of AI applications, including link prediction, entity relationship prediction, recommendation systems, and question answering systems.
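To make the person-lookup example concrete, here is a minimal sketch of a knowledge graph stored as (subject, predicate, object) triples. The handful of facts, the predicate names, and the helper functions `build_index`, `describe`, and `related_entities` are all illustrative assumptions, not any particular product's API. Production systems typically use a graph database or an RDF store instead of an in-memory dictionary.

```python
from collections import defaultdict

# Illustrative facts only, stored as (subject, predicate, object) triples.
TRIPLES = [
    ("Ada Lovelace", "born_in", "London"),
    ("Ada Lovelace", "field", "Mathematics"),
    ("Ada Lovelace", "collaborated_with", "Charles Babbage"),
    ("Charles Babbage", "designed", "Analytical Engine"),
    ("Charles Babbage", "born_in", "London"),
]


def build_index(triples):
    """Group outgoing edges by subject so lookups avoid scanning every triple."""
    index = defaultdict(list)
    for subject, predicate, obj in triples:
        index[subject].append((predicate, obj))
    return index


def describe(index, entity):
    """Return the facts stated directly about an entity."""
    return list(index.get(entity, []))


def related_entities(index, entity, hops=2):
    """Collect every entity reachable within `hops` outgoing edges."""
    seen = set()
    frontier = {entity}
    for _ in range(hops):
        next_frontier = set()
        for node in frontier:
            for _, obj in index.get(node, []):
                if obj not in seen and obj != entity:
                    seen.add(obj)
                    next_frontier.add(obj)
        frontier = next_frontier
    return seen


index = build_index(TRIPLES)
overview = describe(index, "Ada Lovelace")
context = related_entities(index, "Ada Lovelace", hops=2)
```

Here `overview` holds the three facts stated directly about the person, while `context` also pulls in entities two hops away (for example, what a collaborator designed). That kind of connected context is hard to assemble from unorganized pages.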

Knowledge graphs can also provide complementary, real-world factual information to augment the limited labeled data available to train a machine learning algorithm. Both recent research and deployed industrial systems have shown promising performance for machine learning algorithms that combine training data with a knowledge graph. Knowledge graphs are also used to enhance input data for AI applications such as recommendation and community detection.
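As a rough sketch of what augmenting limited labeled data can look like, the snippet below attaches knowledge-graph facts to a labeled record as extra binary features. The item names, predicates, and the helpers `augment` and `shared_facts` are hypothetical, and real systems often use learned graph embeddings where this sketch uses hand-built features.

```python
# Hypothetical item-level facts drawn from a knowledge graph.
KG = {
    "film_a": {("genre", "sci-fi"), ("director", "dir_1")},
    "film_b": {("genre", "sci-fi"), ("director", "dir_2")},
    "film_c": {("genre", "drama"), ("director", "dir_1")},
}


def augment(record, kg):
    """Return a copy of the record with one binary feature per KG fact."""
    features = dict(record)
    for predicate, obj in sorted(kg.get(record["item"], ())):
        features[f"{predicate}={obj}"] = 1
    return features


def shared_facts(kg, item_a, item_b):
    """Facts two items have in common; a simple signal for recommendation."""
    return kg.get(item_a, set()) & kg.get(item_b, set())


# A single labeled example: the user liked film_a.
labeled = [{"item": "film_a", "liked": 1}]
augmented = [augment(record, KG) for record in labeled]
overlap = shared_facts(KG, "film_a", "film_b")
```

Even with one labeled example, the added features let a model relate `film_a` to unlabeled items that share a genre or director.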

[Supply & Demand for “knowledge graph” talent, Jan/2023.]

Knowledge graphs have already garnered the attention of numerous healthcare and financial services firms, as well as enterprises with intricate supply chains. I expect that as language models and foundation models gain popularity, more companies will create and invest in knowledge graphs.

This is due to the increasing number of firms that will opt to train their own large models. For many enterprises, especially those operating in heavily regulated industries, API access to public foundation models may prove insufficient. A company's level of technical investment, the amount of data it possesses, and its comfort level with shipping data to an API or third party are all factors that will influence the decision. It is unlikely that a few GPT-like models will monopolize the market, as organizational dynamics and considerations such as trust and cost will play a critical role.

While startups are developing tools to simplify the training of large models, there has been less investment in knowledge graph tooling that caters to AI teams constructing hybrid [neural / KG / retrieval-based] models. As my friend Paco Nathan has long lamented, “It would be nice to be able to train language models on connected data!” For your next project or venture, consider developing tools that can streamline the creation, management, maintenance, and scaling of these graphs.

Data Exchange Podcast

Preparing for the Implementation of the EU AI Act and Other AI Regulations. Data and AI teams may be surprised to learn that a number of AI regulations and policies will take effect in 2023. To help teams grapple with looming regulations, I consulted with Gabriela Zanfir-Fortuna (VP for Global Privacy at the Future of Privacy Forum) and Andrew Burt (Managing Partner at BNH, the first law firm focused on AI and Analytics).

Unleashing a Game-Changing AI Hardware Shift. Dylan Patel is the Chief Analyst at SemiAnalysis, a boutique semiconductor research and consulting firm focused on the semiconductor supply chain. We discuss AI hardware, RISC-V, and the export controls the Biden administration has placed on certain advanced computing and semiconductor manufacturing items.

My recent guest stint on the Open||Source||Data podcast hosted by Sam Ramji gave me the opportunity to reflect on the early days of big data. Our conversation prompted me to dig up a chart I created a decade ago, during a period of increasing excitement surrounding big data and Hadoop. As things turned out, cloud data warehouses and lakehouses later emerged as the central components of modern big data platforms.

Spotlight

Extracting Training Data from Diffusion Models. Stable Diffusion and similar models are trained on images that are copyrighted, trademarked, private, and sensitive. This recent study demonstrates that these diffusion models retain memories of the images they were trained on and reproduce them during generation. Diffusion models are less private than prior generative models such as GANs, and new advances in privacy-preserving training may be needed to mitigate these vulnerabilities.

Comparing Snowflake and Databricks for NLP, computer vision, and classic machine learning problems. A team from Hitachi designed and conducted experiments based on the TPCx-AI benchmark standard and found that Databricks is generally faster, cheaper, and easier to use.

Transformative AI and Compute. This interesting syllabus and reading list curated by Lennart Heim dives into the crucial topic of computational resources in AI. It covers the role of compute in AI, the supply chain of chip production, governance frameworks that lead to beneficial AI outcomes, and projections of AI's transformational capabilities.

Unleashing ML Innovation at Spotify with Ray. Spotify selected Ray as its machine learning platform of choice due to its ability to cater to the demands of a diverse range of ML practitioners and enhance their productivity throughout the ML lifecycle. The streaming giant relies on Ray's versatility, its scalability in processing compute-intensive workloads, and its compatibility with a multitude of machine learning tools and libraries.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Linkedin, Twitter, Mastodon, or Post. This newsletter is produced by Gradient Flow.