How Two Sigma & Nubank Rewire Finance with Foundation Models

Financial services has always been my bellwether for how new technologies are rolled out, and generative AI is the latest example. My own stint as a quant at a hedge fund many years ago has kept me interested in the intersection of finance and technology. This ongoing interest led me to pay close attention to the recent Ray + AI Infra Summit in New York, where two very different finance teams—one from Two Sigma and another from Nubank—took the stage.

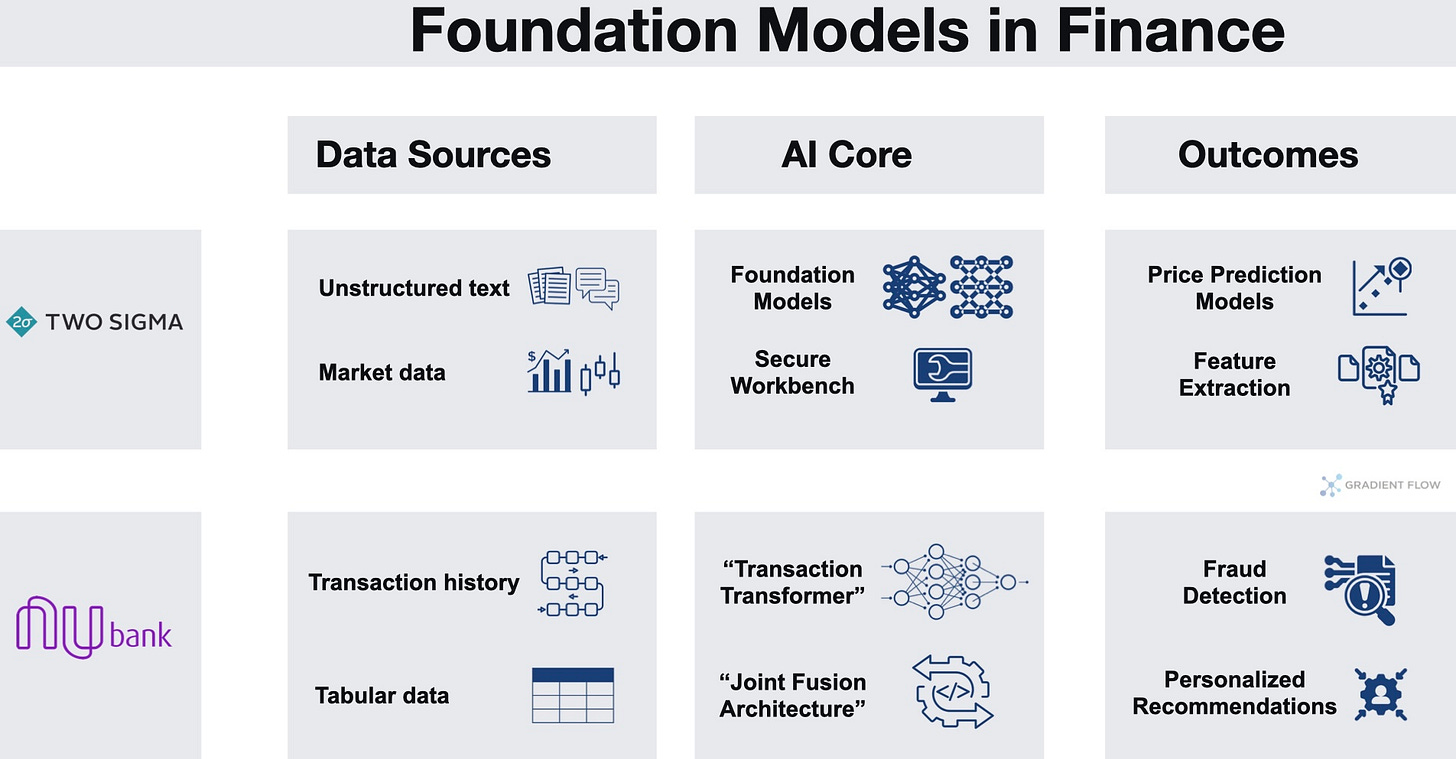

Two Sigma is a quantitative investment manager founded in 2001 that now manages over $60 billion. With 70% of its staff in research and development, the firm operates less like a traditional hedge fund and more like a scaled technology company whose product happens to be alpha generation. Nubank, by contrast, is the world’s largest digital bank, serving over 100 million customers across Latin America. Founded with an engineering mindset, it brings a “mobile-first, data-first” ethos to retail banking.

Despite their different domains—one navigating the noisy world of public markets, the other the personal finances of millions—both firms are converging on a similar playbook. They are leveraging foundation models and a common infrastructure core to extract predictive signals from complex, sequential data. Their stories demonstrate that deploying AI in finance isn't about chasing the latest model architecture—it's about building resilient systems that can extract signals from noise while meeting stringent regulatory and performance requirements.

Unlocking New Capabilities in Noisy Markets

Both firms are strategically shifting from established machine learning techniques to foundation models. At Two Sigma, this means moving beyond traditional quantitative models and limited neural network usage. For core challenges like price prediction, they now employ deep neural networks with millions of parameters. For complex sequential problems like trade execution—optimizing the sale of a large block of stock over time—they use reinforcement learning. Large language models (LLMs) are accessed through a secure internal "workbench," allowing researchers to extract features from unstructured text, such as parsing two decades of Federal Reserve speeches to predict interest rate changes, without compromising intellectual property. They validate these models not with simple A/B tests, which are impractical in noisy markets, but by their ability to unlock entirely new analytical capabilities that were previously infeasible.
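Trade execution shows why this is a sequential problem: each sale moves the price, so how much to sell now depends on how many shares remain and how much time is left. The sketch below is not Two Sigma's setup. It assumes a hypothetical cost model in which selling `q` shares in one period costs `eta * q**2` (temporary impact only, no drift or risk), and the function names and numbers are illustrative. It scores two fixed schedules; an RL agent would instead learn a schedule by interacting with a richer simulator of this kind.

```python
from __future__ import annotations


def execution_cost(schedule: list[float], eta: float = 1e-6) -> float:
    """Total cost of a sell schedule under a hypothetical quadratic
    temporary-impact model: selling q shares in a period costs eta * q**2."""
    return sum(eta * q * q for q in schedule)


def twap_schedule(total_shares: float, periods: int) -> list[float]:
    """Time-weighted schedule: sell an equal slice in every period."""
    return [total_shares / periods] * periods


def dump_schedule(total_shares: float, periods: int) -> list[float]:
    """Naive baseline: sell the whole block in the first period."""
    return [float(total_shares)] + [0.0] * (periods - 1)


# In an RL framing, the action at each step is the next slice to sell,
# the state includes shares remaining and time left, and the reward is
# the negative per-period cost.
shares, periods = 100_000, 10
print("dump all at once:", execution_cost(dump_schedule(shares, periods)))
print("TWAP:            ", execution_cost(twap_schedule(shares, periods)))
```

Because this cost is convex in each slice, equal slices minimize the total here, at one tenth the cost of dumping the block at once. RL earns its keep when the simulator adds price drift, volatility, and market reaction that break this closed-form answer.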

Nubank’s transition is from large-scale, feature-based XGBoost models to a more dynamic, narrative-based approach. Their core innovation is a "transaction transformer" that treats a customer's entire financial history as a sequential story. Each transaction is tokenized and fed into a transformer model, allowing it to learn deep, contextual patterns of behavior. This single, pre-trained model is then fine-tuned for critical business functions like fraud detection, income estimation, and product recommendations. This approach uses a "joint fusion" architecture, combining the sequential transaction data with static tabular data (like credit bureau information) end-to-end. The value is clear and measurable: they consistently achieve an average performance increase of 1.2% AUC over their highly optimized XGBoost baselines, a gain that would have previously taken two to three years of incremental model updates.
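To make "each transaction is tokenized" concrete, here is a minimal sketch of one possible scheme. The source does not describe Nubank's actual tokenizer; the fields (amount bucket, merchant category, weekday), the `[SEP]` marker, and all names below are hypothetical. Each transaction becomes a handful of discrete tokens, and a customer's history becomes one chronological token sequence that a transformer could consume.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date

WEEKDAYS = ["MON", "TUE", "WED", "THU", "FRI", "SAT", "SUN"]


@dataclass
class Transaction:
    day: date
    amount: float            # assumption: positive = money out, negative = money in
    merchant_category: str   # e.g. a merchant category code


def amount_token(amount: float) -> str:
    """Bucket an amount by direction and number of digits in its integer part."""
    sign = "OUT" if amount >= 0 else "IN"
    digits = len(str(int(abs(amount))))
    return f"AMT_{sign}_{digits}"


def tokenize_transaction(tx: Transaction) -> list[str]:
    return [
        amount_token(tx.amount),
        f"MCC_{tx.merchant_category}",
        f"DOW_{WEEKDAYS[tx.day.weekday()]}",
        "[SEP]",  # marks the boundary between transactions
    ]


def tokenize_history(history: list[Transaction]) -> list[str]:
    """Flatten a customer's history, oldest first, into one token sequence."""
    tokens: list[str] = []
    for tx in sorted(history, key=lambda t: t.day):
        tokens.extend(tokenize_transaction(tx))
    return tokens
```

Every transaction costs four tokens in this scheme, so sequence length grows several times faster than the number of transactions.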

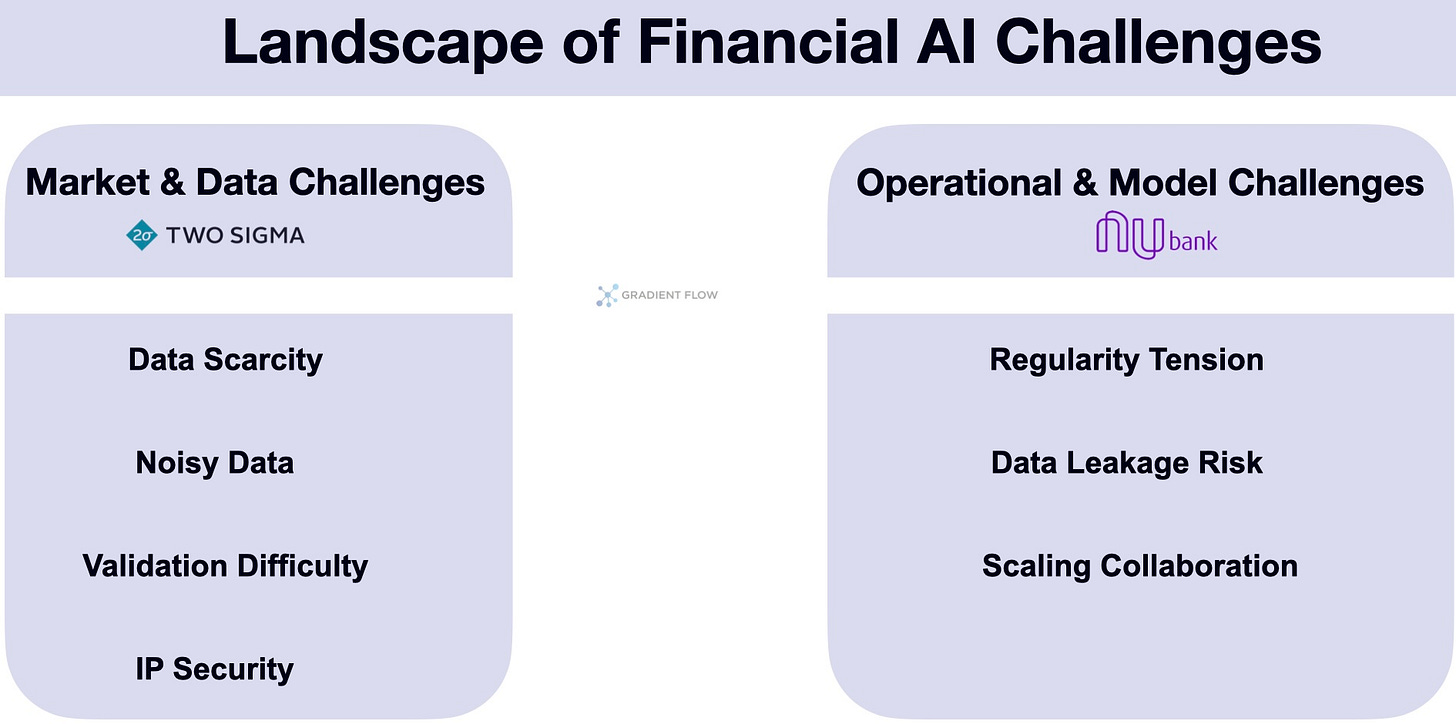

Beyond the Hype: The Grind of Implementation

Neither firm treats generative AI as a magic wand. For Two Sigma, the primary challenges are rooted in the fundamental nature of financial market data. This data is inherently scarce, with only one new data point generated per instrument per day for many models. It is also exceptionally noisy, skewed by unpredictable real-world events like pandemics and wars, which makes standard validation methods like A/B testing impractical. This forces the firm to rely on complex simulations that are difficult to make truly representative of the real world. Beyond data, practical hurdles include ensuring intellectual property security when using external models, managing the high cost of running millions of queries, and tempering the internal misconception that AI is a "black box" that can solve any problem without human oversight.
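A back-of-the-envelope calculation shows how scarcity and noise interact. Assume, purely for illustration, a strategy whose true mean daily return is 0.05% (5 basis points) with 1% daily volatility, and that daily returns are independent and identically distributed. The sample mean's t-statistic is μ/(σ/√n), so reaching t = 2 takes n = (2σ/μ)² daily observations.

```python
import math

TRADING_DAYS_PER_YEAR = 252  # common convention for US equities


def t_statistic(mean_daily: float, vol_daily: float, n_days: int) -> float:
    """t-stat of the sample mean, assuming i.i.d. daily returns."""
    return mean_daily / (vol_daily / math.sqrt(n_days))


def days_to_detect(mean_daily: float, vol_daily: float, target_t: float = 2.0) -> float:
    """Solve t_statistic(...) == target_t for n: n = (target_t * vol / mean) ** 2."""
    return (target_t * vol_daily / mean_daily) ** 2


n = days_to_detect(mean_daily=0.0005, vol_daily=0.01)  # hypothetical 5 bp edge, 1% vol
print(f"{n:.0f} trading days = {n / TRADING_DAYS_PER_YEAR:.1f} years of data")
```

That works out to 1,600 trading days, or about 6.3 years at one observation per day, long enough for market regimes to shift under the estimate. Real returns are neither independent nor stationary, so this is only a rough illustration.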

Nubank’s obstacles skew cultural and operational. Its main challenges arise from the tension between its tech-company ambition and its operational reality as a regulated bank. The high stakes of banking prohibit the "move fast and break things" ethos of tech, requiring a cultural shift toward new, rigorous evaluation methods for complex AI models. On a technical level, the team's initial token-level approach to modeling transactions was inefficient, quickly exhausting the model's context window. It took three to four months of iterative architectural improvements, such as developing a dedicated transaction-level encoder, just to match the performance of the existing XGBoost systems. Furthermore, using raw data sources directly created a high risk of subtle data leakage, necessitating an investment in monitoring and validation systems that was as large as the modeling effort itself.
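Two of these problems reduce to simple mechanics. First, context budget: if each transaction expands into several tokens, the window fills proportionally faster, whereas a transaction-level encoder spends one position per transaction. Second, leakage: a standard safeguard (not necessarily the one Nubank built) is point-in-time filtering, which keeps only events recorded strictly before the moment a label is observed. The 2,048-position window and 8 tokens per transaction below are assumptions chosen for round numbers.

```python
from __future__ import annotations

from datetime import datetime


def transactions_that_fit(context_window: int, positions_per_transaction: int) -> int:
    """Whole transactions that fit in a fixed-length context window."""
    return context_window // positions_per_transaction


def point_in_time(events: list[tuple[datetime, str]], label_time: datetime) -> list[tuple[datetime, str]]:
    """Keep events recorded strictly before label_time, oldest first.
    Anything at or after label_time may already reflect the outcome being predicted."""
    return sorted(e for e in events if e[0] < label_time)


WINDOW = 2048  # hypothetical context length
print(transactions_that_fit(WINDOW, 8))  # token-level, 8 tokens each (assumed) → 256
print(transactions_that_fit(WINDOW, 1))  # transaction-level encoder → 2048
```

Under these assumptions the transaction-level encoder sees eight times as much history in the same window.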

Enabling Massive Scale with a Small Team

To manage the immense computational demands of these models, both firms have strategically adopted Ray as a core part of their computational infrastructure. Ray provides a single, unified abstraction that handles everything from embarrassingly parallel jobs to complex, multi-node training, simplifying the stack for their engineering teams. At Two Sigma, Ray is integrated into the main software branch alongside other frameworks like Spark and Dask. It is used for complex reinforcement learning training with RLlib and for distributing other machine learning models, all while respecting the firm's strict IP security requirements.
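The embarrassingly parallel end of that range looks roughly like this with Ray's core task API (`ray.init`, `ray.remote`, `ray.get`). This is a generic sketch, not either firm's code; `score_partition` is a hypothetical stand-in for real per-partition work, and `run_locally` is included only as a sequential reference.

```python
from __future__ import annotations


def score_partition(rows: list[float]) -> float:
    """Hypothetical per-partition work; a real job might run feature extraction or batch inference."""
    return sum(x * x for x in rows)


def run_locally(rows: list[float], n_parts: int = 4) -> float:
    """Reference implementation: same split-and-combine logic, run sequentially."""
    return sum(score_partition(rows[i::n_parts]) for i in range(n_parts))


def run_with_ray(rows: list[float], n_parts: int = 4) -> float:
    """Fan partitions out as Ray tasks, then combine the results on the driver."""
    import ray  # pip install ray

    ray.init(ignore_reinit_error=True)  # connects to an existing cluster or starts a local one
    remote_score = ray.remote(score_partition)  # wrap the plain function as a Ray task
    futures = [remote_score.remote(rows[i::n_parts]) for i in range(n_parts)]
    return sum(ray.get(futures))  # blocks until every task finishes
```

Calling `run_with_ray(list(range(1, 11)))` should return the same total as `run_locally` (385). Pointed at a multi-node cluster, the same code spreads tasks across machines, which is the appeal of a single abstraction.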

For Nubank, a small team of engineers uses Ray to orchestrate their entire pipeline. This enables them to train models with up to 1.5 billion parameters, fine-tune them on a billion labeled rows using 64 H100 GPUs, and process two billion rows in a single inference batch—a scale that would be unmanageable without a unified platform.
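Simple arithmetic gives a feel for those numbers. In data-parallel fine-tuning, each worker typically reads a disjoint shard; splitting one billion labeled rows across 64 GPUs gives 15,625,000 rows per GPU. The `shard_bounds` helper below is a hypothetical illustration of that split, and using 64 workers for the two-billion-row inference batch is an assumption. In practice, distributed data libraries such as Ray Data can handle this kind of partitioning for you.

```python
from __future__ import annotations


def shard_bounds(total_rows: int, num_workers: int) -> list[tuple[int, int]]:
    """Half-open [start, end) row ranges, one per worker; sizes differ by at most one."""
    base, extra = divmod(total_rows, num_workers)
    bounds, start = [], 0
    for rank in range(num_workers):
        end = start + base + (1 if rank < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


fine_tune = shard_bounds(1_000_000_000, 64)   # figures from the talk
inference = shard_bounds(2_000_000_000, 64)   # 64 inference workers is an assumption
print(fine_tune[0], fine_tune[-1])  # → (0, 15625000) (984375000, 1000000000)
```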

Sequence First, Model Second

A unifying concept from both presentations is the strategic imperative to model behavior as a sequence. This act of representing trades, clicks, or payments as an ordered history is what unlocks the predictive power of modern foundation models, providing a richer, more contextual view than static, tabular methods can offer. From there, teams should adopt a scalable orchestration framework like Ray early in the process to avoid painful infrastructure rewrites down the line.
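In practice, "sequence first" often begins with a reshaping step: turning a flat event log into one time-ordered sequence per customer instead of collapsing it into counts. The sketch below contrasts the two views; the event names and tuple layout are invented for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, int, str]  # (customer_id, timestamp, event_type)


def to_sequences(events: Iterable[Event]) -> Dict[str, List[str]]:
    """Group a flat event log by customer and order each group by timestamp."""
    grouped: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for customer, ts, event_type in events:
        grouped[customer].append((ts, event_type))
    # Sorting (timestamp, event_type) pairs orders by time; ties fall back to event_type.
    return {c: [etype for _, etype in sorted(items)] for c, items in grouped.items()}


def to_counts(events: Iterable[Event]) -> Dict[str, Counter]:
    """The tabular alternative: per-customer event counts, with order discarded."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for customer, _, event_type in events:
        counts[customer][event_type] += 1
    return dict(counts)
```

Two customers can share identical counts yet opposite orderings (a login followed by a transfer versus a transfer followed by a login); only the sequence view preserves that difference.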

When building models, it is crucial to fuse tabular and sequential data jointly, training the entire model end-to-end rather than tacking on features at the last layer. Finally, foundation models are a team sport, requiring larger and more collaborative efforts than traditional ML. This means building for collaboration from the start and maintaining rigorous governance standards to enable rapid, reliable iteration in a high-stakes environment. Success depends less on sophisticated financial algorithms and more on mastering the basics: scalable software design, distributed system reliability, comprehensive data instrumentation, and disciplined experimental methodology.
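The difference between joint and late fusion comes down to which parameters the training loss reaches. The toy below is not Nubank's architecture: a mean of token embeddings stands in for the transformer, the tokens and four training rows are invented, and the class name is hypothetical. What it does show is the defining property: one loss updates the sequence embeddings, the tabular weights, and the output together. A late-fusion variant would train or freeze `emb` separately and fit only the output layer. Real joint-fusion models also mix the two branches through deeper nonlinear layers, which this linear toy omits.

```python
from __future__ import annotations

import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


class JointFusionToy:
    """Sequence branch (mean-pooled token embeddings) plus tabular branch,
    combined in one logistic output and trained by a single loss."""

    def __init__(self, vocab: list[str], n_tabular: int, dim: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.emb = {t: [rng.gauss(0.0, 0.1) for _ in range(dim)] for t in vocab}
        self.w_seq = [rng.gauss(0.0, 0.1) for _ in range(dim)]
        self.w_tab = [0.0] * n_tabular
        self.b = 0.0

    def encode(self, seq: list[str]) -> list[float]:
        # Stand-in for a transformer: average the token embeddings.
        dim = len(self.w_seq)
        return [sum(self.emb[t][k] for t in seq) / len(seq) for k in range(dim)]

    def predict(self, seq: list[str], tab: list[float]) -> float:
        h = self.encode(seq)
        z = sum(w * v for w, v in zip(self.w_seq, h))
        z += sum(w * v for w, v in zip(self.w_tab, tab)) + self.b
        return sigmoid(z)

    def loss(self, data) -> float:
        # Mean binary cross-entropy over (sequence, tabular, label) triples.
        eps = 1e-12
        total = 0.0
        for seq, tab, y in data:
            p = self.predict(seq, tab)
            total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
        return total / len(data)

    def sgd_step(self, seq: list[str], tab: list[float], y: int, lr: float = 0.5) -> None:
        h = self.encode(seq)
        g = self.predict(seq, tab) - y  # dLoss/dlogit for sigmoid + cross-entropy
        # The gradient reaches the embeddings through the mean pool; compute it
        # with the pre-update w_seq. This is what makes training end-to-end.
        emb_grad = [g * w / len(seq) for w in self.w_seq]
        self.w_seq = [w - lr * g * hk for w, hk in zip(self.w_seq, h)]
        self.w_tab = [w - lr * g * xk for w, xk in zip(self.w_tab, tab)]
        self.b -= lr * g
        for t in seq:  # repeated tokens receive the update once per occurrence
            self.emb[t] = [e - lr * ge for e, ge in zip(self.emb[t], emb_grad)]


# Invented toy data: (event tokens, tabular features, label).
data = [
    (["login", "purchase"], [0.2], 0),
    (["login", "chargeback"], [0.9], 1),
    (["purchase", "purchase"], [0.1], 0),
    (["chargeback", "purchase"], [0.8], 1),
]
model = JointFusionToy(["login", "purchase", "chargeback"], n_tabular=1)
before = model.loss(data)
for _ in range(200):
    for seq, tab, y in data:
        model.sgd_step(seq, tab, y)
print(f"loss before {before:.3f}, after {model.loss(data):.3f}")
```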

Data Exchange Podcast

Unlocking AI Superpowers in Your Terminal. Zach Lloyd, Founder/CEO of Warp, joins the podcast to explain how his company is revolutionizing the command-line terminal by integrating AI. He discusses Warp's core features, such as intelligent completions and natural language commands, and shares his vision for the future of AI-augmented software development.

Building Production-Grade RAG at Scale. Douwe Kiela, Founder and CEO of Contextual AI, explains why Retrieval-Augmented Generation (RAG) is not obsolete despite massive LLM context windows. He introduces "RAG 2.0," a fundamental shift that treats RAG as an end-to-end trainable system, integrating document intelligence, grounded language models, and reasoning agents to eliminate hallucinations and improve performance.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, and also serves as the Strategic Content Chair for AI at the Linux Foundation. He hosts the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.