An In-Depth Look at the Stanford AI Index Report

The Stanford AI Index Report 2025 provides the most comprehensive, data-driven overview of artificial intelligence trends globally. I consider it essential annual reading to stay grounded in the actual progress and impact of AI, tracking everything from technical benchmarks to policy shifts. I recently had the chance to discuss the latest findings with Nestor Maslej, Research Manager at HAI and the report's editor-in-chief. What follows is a heavily edited excerpt from our conversation.

AI Models and Capabilities

What are the main developments in AI models over the past year?

The key developments have been reasoning-enhanced models and multi-modal capabilities. Particularly noteworthy is the rise of smaller models delivering impressive performance. Parameter counts have fallen dramatically while performance holds steady: from PaLM, with 540 billion parameters, in May 2022 to Phi-3 Mini, with just 3.8 billion parameters, in May 2024, both scoring above 60% on the MMLU benchmark. That is roughly a 142-fold reduction. As these technical capabilities mature, the field is shifting from purely pursuing technological advancement to focusing on practical integration of these powerful tools into business workflows.
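
The size reduction is simple arithmetic. A minimal sketch, using PaLM's 540 billion parameters and Phi-3 Mini's published count of 3.8 billion:

```python
# Parameter counts for two models that score above 60% on MMLU
PALM_PARAMS = 540e9       # PaLM (2022)
PHI3_MINI_PARAMS = 3.8e9  # Phi-3 Mini (2024)

def reduction_factor(before: float, after: float) -> float:
    """Return how many times smaller `after` is than `before`."""
    return before / after

factor = reduction_factor(PALM_PARAMS, PHI3_MINI_PARAMS)
print(f"{factor:.0f}x fewer parameters")  # 142x fewer parameters
```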

How are businesses approaching these smaller models?

Model selection depends on specific use cases. Legal teams and high-stakes applications prioritize accuracy and are willing to pay more for slower but more thorough models. In contrast, customer-facing applications often prioritize speed and responsiveness, making smaller models more valuable. The clear trend is that models are getting smaller while maintaining strong performance levels, which excites many businesses due to the potential cost savings and efficiency gains.

What advancements have we seen in multi-modal capabilities?

Multi-modal capabilities have advanced, but interacting with graphical user interfaces remains challenging. Benchmarking AI's agentic capabilities is difficult because the tasks are complex and multifaceted. Research suggests that AI systems outperform humans on simpler tasks with short time budgets, while humans still come out ahead on complex tasks that take longer. One study on computer science research tasks found exactly this pattern: AI excels under tight time constraints, but humans keep a significant advantage once a task requires several hours of work. The key challenge isn't just advancing technological capability but creating interfaces and workflows that make AI truly useful for businesses beyond today's chatbots.

Open Weights and Model Transparency

What trends are emerging around model transparency and open weights?

Two significant trends have emerged. First, open weight models are much stronger than a year ago. According to the Chatbot Arena, the gap between the best closed weight model and the best open weight model narrowed from about 8% in January 2024 to under 2% by February 2025. Second, according to Stanford's Foundation Model Transparency Index, the ecosystem is becoming more transparent, though significant room for improvement remains: many providers still do not fully disclose how their models are developed and trained.
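
What a percent-sized gap means depends on how it is computed. A minimal sketch, assuming the gap is the difference between the top closed and top open model's Chatbot Arena scores, expressed relative to the closed model's score. The scores below are hypothetical, not real leaderboard values.

```python
def relative_gap_pct(closed_score: float, open_score: float) -> float:
    """Percent by which the open model trails the closed model (assumed definition)."""
    return (closed_score - open_score) / closed_score * 100

# Hypothetical Arena scores, for illustration only
early = relative_gap_pct(closed_score=1250, open_score=1150)
late = relative_gap_pct(closed_score=1350, open_score=1327)
print(f"{early:.1f}% -> {late:.1f}%")  # 8.0% -> 1.7%
```

Under this definition a gap of a few percent corresponds to a few dozen rating points, which is why near-parity on the leaderboard can still leave the closed model slightly ahead.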

How has the competitive landscape changed between closed and open weight models?

The competitive landscape has tightened considerably. While closed weight models from OpenAI, Anthropic, and Google previously held a clear performance advantage, new models like Llama 3, DeepSeek, and Alibaba's Qwen have effectively closed that gap. We now have a highly competitive ecosystem with 4-5 developers all releasing capable models that score similarly on benchmarks. This shift transforms open weight models into a much more viable and competitive option for businesses seeking to build AI applications.

Benchmarking and Performance

How have AI benchmarks evolved over the past year?

Benchmarks that were considered challenging in 2023 have seen remarkable progress, with performance increases of 20-60 percentage points in just a year. As models saturate existing benchmarks, researchers keep developing new challenges. For example, ARC recently launched a new benchmark that still challenges systems, and Epoch AI released FrontierMath, a complex mathematical benchmark that even the strongest systems struggle with. In a humorous twist, researchers even launched a benchmark dubbed "Humanity's Last Exam," playfully suggesting it might be the final academic test needed to evaluate AI systems. This ongoing cycle illustrates the rapid pace of AI advancement and the constantly rising bar for performance evaluation.

Are traditional academic benchmarks still relevant for evaluating AI systems?

There's a growing disconnect between academic benchmarks and real-world applications. The best AI systems now exceed humans on many academic tests, but real-world tasks remain challenging to capture in neat benchmarks. For businesses, knowing that a model scores 97% on eighth-grade math questions is less relevant than understanding how it performs on specific business tasks. Practitioners should evaluate models based on their particular business needs and constraints, considering factors like accuracy, speed, latency, and cost—rather than relying solely on benchmark leaderboards.
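
One way to put this advice into practice, sketched under assumptions: score candidate models on your own task suite, then pick the cheapest one that meets your accuracy and latency requirements. The model names, metrics, and thresholds below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float          # share of your own task suite solved correctly (0-1)
    p95_latency_s: float     # 95th-percentile response time, in seconds
    usd_per_m_tokens: float  # blended price per million tokens

def pick_model(candidates, min_accuracy, max_latency_s):
    """Return the cheapest candidate that meets both constraints, or None."""
    eligible = [c for c in candidates
                if c.accuracy >= min_accuracy and c.p95_latency_s <= max_latency_s]
    return min(eligible, key=lambda c: c.usd_per_m_tokens, default=None)

# Hypothetical models and numbers, as if measured on an in-house evaluation set
models = [
    Candidate("large-reasoning", accuracy=0.93, p95_latency_s=12.0, usd_per_m_tokens=15.0),
    Candidate("mid-general", accuracy=0.88, p95_latency_s=2.5, usd_per_m_tokens=3.0),
    Candidate("small-fast", accuracy=0.81, p95_latency_s=0.6, usd_per_m_tokens=0.2),
]

# Customer-facing chat: speed matters, some accuracy can be traded away
print(pick_model(models, min_accuracy=0.80, max_latency_s=1.0).name)   # small-fast
# High-stakes review: accuracy first, latency is tolerable
print(pick_model(models, min_accuracy=0.90, max_latency_s=30.0).name)  # large-reasoning
```

Changing only the constraints reproduces the trade-off described earlier: speed-sensitive, customer-facing flows land on the small model, while high-stakes work justifies the slower, pricier one.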

Inference Costs and Scaling

What trends are we seeing in inference costs for AI models?

We've seen a dramatic reduction in inference costs at fixed performance levels. The cost of running an AI model that matches GPT-3.5's score on MMLU dropped from around $20 per million tokens in November 2022 to just $0.07 per million tokens in October 2024, a reduction of more than 280 times in just under two years. Similar trends are visible for reasoning models, though the drop is proportionally less dramatic. This overall cost reduction makes deploying capable AI models feasible for an increasingly wide range of applications.
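
The headline ratio and its practical effect can be checked in a few lines. The two prices come from the figures above; the 500-million-token monthly workload is a hypothetical example.

```python
# GPT-3.5-level (MMLU) inference prices per million tokens, from the figures above
PRICE_NOV_2022 = 20.00  # USD
PRICE_OCT_2024 = 0.07   # USD

def monthly_cost(tokens_per_month: float, usd_per_m_tokens: float) -> float:
    """Cost in USD of processing a monthly token volume at a per-million-token price."""
    return tokens_per_month / 1e6 * usd_per_m_tokens

reduction = PRICE_NOV_2022 / PRICE_OCT_2024
print(f"{reduction:.0f}x cheaper")  # 286x cheaper

# Hypothetical workload: 500 million tokens per month
volume = 500e6
before = monthly_cost(volume, PRICE_NOV_2022)
after = monthly_cost(volume, PRICE_OCT_2024)
print(f"${before:,.2f} -> ${after:,.2f} per month")  # $10,000.00 -> $35.00 per month
```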

How should businesses approach the financial considerations of using advanced reasoning models?

Businesses need to do financial modeling to determine whether the value derived justifies the cost. For example, a coding assistant might consume significant compute to resolve Jira tickets, but the key question is whether its output is as good as a junior developer's and cheaper to produce. Similarly, AI analysis tools might assist analysts without fully replacing their judgment. The critical consideration is whether models save enough time or create enough value to justify their cost. The "best" model for a business isn't necessarily the highest performer overall, but the one that most effectively meets its specific operational requirements.
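
A minimal sketch of that comparison, assuming each AI attempt costs compute plus human review time, failed attempts are still paid for, and quality is reduced to pass/fail. All inputs are hypothetical.

```python
def ai_cost_per_resolved_ticket(compute_usd: float, review_hours: float,
                                reviewer_rate_usd: float, success_rate: float) -> float:
    """Expected cost per ticket the AI resolves acceptably.

    Each attempt costs compute plus reviewer time; because failed attempts are
    still paid for, the per-attempt cost is divided by the success rate.
    """
    per_attempt = compute_usd + review_hours * reviewer_rate_usd
    return per_attempt / success_rate

def human_cost_per_ticket(hours: float, hourly_rate_usd: float) -> float:
    """Cost of a developer resolving the ticket directly."""
    return hours * hourly_rate_usd

# Hypothetical inputs for illustration only
ai = ai_cost_per_resolved_ticket(compute_usd=4.0, review_hours=0.5,
                                 reviewer_rate_usd=80.0, success_rate=0.6)
human = human_cost_per_ticket(hours=3.0, hourly_rate_usd=50.0)
print(f"AI: ${ai:.2f} per resolved ticket, junior dev: ${human:.2f}")
```

With these inputs the AI path costs about $73 per resolved ticket against $150 for the developer, but dropping the success rate to 25% pushes it to $176. The conclusion is only as reliable as the measured success rate and review time.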

US-China Rivalry in AI

How has the AI competition between the US and China evolved?

The US maintains a clear lead in total notable models produced in 2024 (40 from the US compared to 15 from China and 3 from Europe). However, Chinese models have rapidly caught up in performance, with the gap between the best US and Chinese models narrowing significantly across virtually every benchmark. This reached near parity in 2024 with DeepSeek's reasoning models. The competition has intensified, with both countries demonstrating substantial progress in advancing AI capabilities.

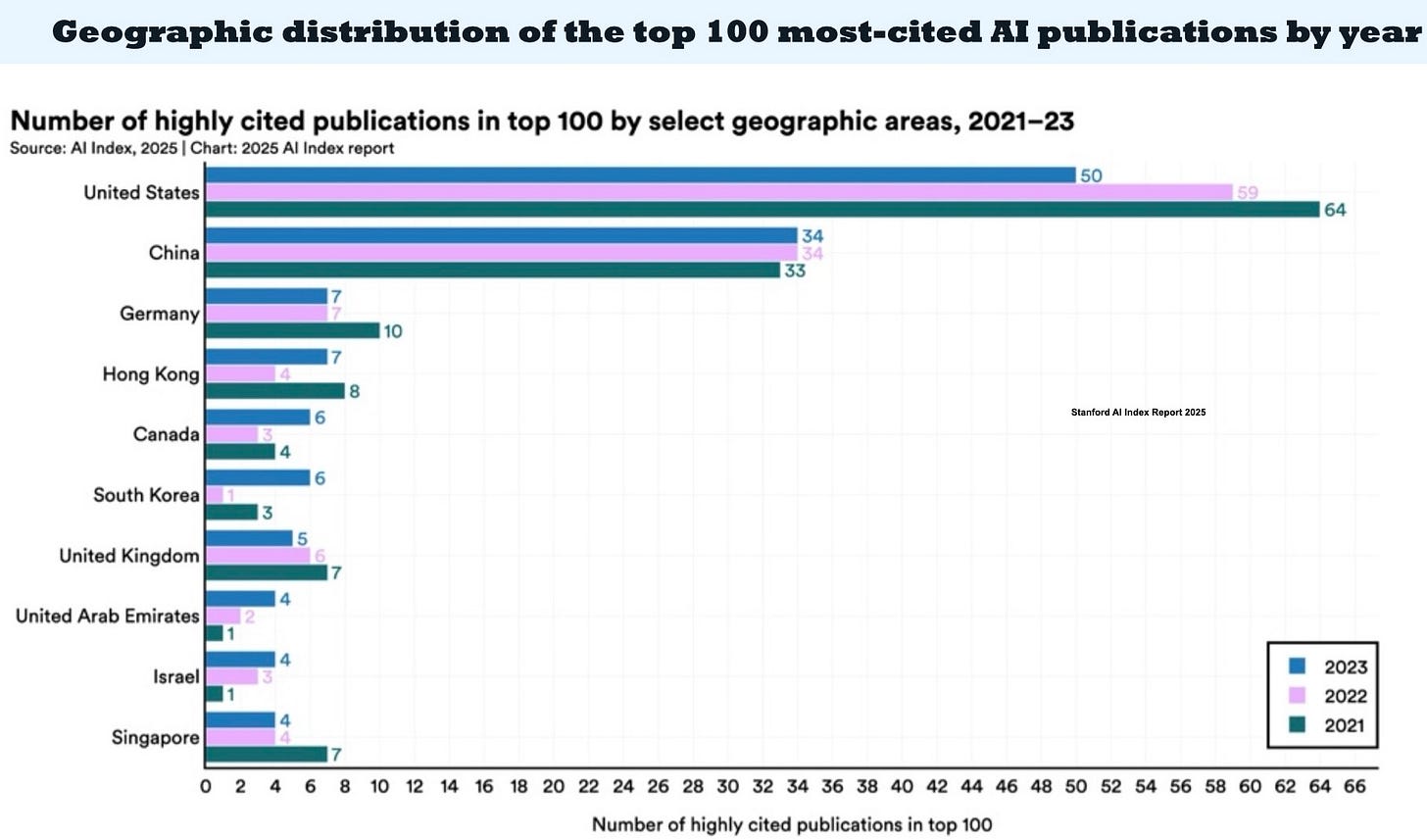

How do the US and China compare in terms of research output?

China leads in aggregate publications and patents, but among highly cited publications (the top 100 AI publications from 2021 to 2023), the US remains far ahead. In other words, China produces more research by volume, while the US still produces more of the most influential work, though this gap is closing. China shows particular strength in specialized areas such as industrial robotics and autonomous vehicles.

Could China's open weights models give them an advantage in the global AI race?

This remains an open question. Chinese open weight models, such as those from DeepSeek, are currently competitive with or better than US alternatives, but it's unclear how widely businesses outside China will adopt them. A critical question for the near future is whether non-Chinese companies will build on Chinese open weight models if those models keep outperforming Western alternatives. If Meta or other US companies soon release competitive open weight models, the landscape could shift dramatically.

AI Adoption and Business Integration

What trends are we seeing in AI adoption by organizations?

Adoption has increased significantly: according to McKinsey surveys, the share of organizations reporting AI use in at least one business function rose from 55% in 2023 to 78% in 2024. However, the key question now isn't about technological advancement but about how businesses will integrate these tools effectively to generate value. The challenge is finding specific workflows where AI delivers clear benefits that justify the cost of implementation.

What are executives predicting about AI's impact on workforce size?

Executives are becoming less convinced that AI will lead to workforce reductions. In previous surveys, 43% of business executives thought AI would decrease their workforce size in the next three years. In the most recent survey, this has dropped to 31%. Many businesses are using AI to save time and then reinvesting that time into other activities, reflecting a shift toward augmentation rather than automation.

What approach should businesses take to implement AI effectively?

Businesses should start narrow and small, focusing on specific use cases where AI can deliver clear value rather than attempting broad implementation. They need to accept that it's still early days for this technology and scale wisely. The challenge isn't technological; it's delivering actual value rather than hype. History shows that transformative technologies often take a decade or more to deliver their full productivity benefits, because organizations need to fundamentally change their processes to realize that potential.

Synthetic Data and Training Resources

Are AI companies running out of data for training?

Researchers project with 80% confidence that high-quality, accessible training data will be exhausted between 2026 and 2032. This timeline depends on factors like data growth rates, accessibility challenges, and the increasing trend of websites implementing crawling restrictions. This looming data shortage has intensified industry interest in alternative approaches, particularly synthetic data.
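
A toy model helps show why the projected window is so wide. The sketch assumes that both the tokens needed for frontier training sets and the usable stock of text grow geometrically; every number is hypothetical, and this is not the methodology behind the published projection.

```python
def exhaustion_year(start_year: int, dataset_tokens: float, dataset_growth: float,
                    stock_tokens: float, stock_growth: float, horizon: int = 30):
    """First year in which training-set demand meets or exceeds the usable data stock.

    Both quantities grow geometrically at (hypothetical) annual rates. Returns
    None if the crossover does not happen within `horizon` years.
    """
    for offset in range(horizon + 1):
        demand = dataset_tokens * dataset_growth ** offset
        supply = stock_tokens * stock_growth ** offset
        if demand >= supply:
            return start_year + offset
    return None

# Hypothetical: 15T-token training sets, 300T-token usable stock growing 5% a year
fast = exhaustion_year(2024, 15e12, 2.5, 300e12, 1.05)  # datasets grow 2.5x per year
slow = exhaustion_year(2024, 15e12, 2.0, 300e12, 1.05)  # datasets grow 2x per year
print(fast, slow)  # 2028 2029
```

Changing only the assumed growth rate of training sets moves the crossover by a year, which is why projections of this kind are quoted as ranges rather than single dates.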

What role might synthetic data play in the future of AI training?

Synthetic data's value varies significantly by context. For general LLM training, adding synthetic data to existing datasets provides minimal performance benefits, while replacing real data with synthetic alternatives causes noticeable degradation. Interestingly, synthetic data has shown remarkable effectiveness in health and science domains, possibly because these fields have naturally limited real-world data pools. Current research indicates synthetic data can effectively supplement—but not replace—high-quality real data in training pipelines.
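
The "supplement, not replace" principle can be expressed as a data-mixing rule. A minimal sketch, assuming a hypothetical cap on synthetic examples per real example; the 0.25 ratio is purely illustrative and not a recommendation from the report.

```python
import random

def build_training_mix(real, synthetic, max_synthetic_ratio=0.25, seed=0):
    """Keep every real example and add synthetic ones up to a cap.

    max_synthetic_ratio is the largest number of synthetic examples allowed
    per real example, so synthetic data supplements the real data instead of
    displacing any of it.
    """
    if max_synthetic_ratio < 0:
        raise ValueError("max_synthetic_ratio must be non-negative")
    cap = int(len(real) * max_synthetic_ratio)
    rng = random.Random(seed)  # seeded for reproducible sampling
    chosen = rng.sample(list(synthetic), min(cap, len(synthetic)))
    mix = list(real) + chosen
    rng.shuffle(mix)
    return mix

# Hypothetical corpora: 800 real documents, 1,000 synthetic ones
real_docs = [f"real-{i}" for i in range(800)]
synthetic_docs = [f"synth-{i}" for i in range(1000)]
mix = build_training_mix(real_docs, synthetic_docs, max_synthetic_ratio=0.25)
print(len(mix))  # 1000: all 800 real plus 200 synthetic
```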

AI Infrastructure

What infrastructure developments are occurring to support AI globally?

Energy infrastructure has become a critical priority for AI advancement, with major companies pivoting to alternative power sources. Several tech giants have established nuclear energy partnerships, most notably Microsoft's initiative to restart a reactor at the Three Mile Island nuclear plant to power its AI data centers. Meanwhile, governments worldwide are making substantial investments in AI infrastructure. Within the past year and a half, Canada, France, the UAE, Singapore, and India have each committed more than a billion US dollars to developing AI capabilities. This investment surge reflects a notable shift in global AI discourse, away from earlier safety-focused conversations and toward action-oriented opportunities and economic advantages.

Skills and Workforce Impact

How should professionals approach skill development in the age of AI?

As Erik Brynjolfsson aptly observed, "AI is not going to replace workers, but workers that know how to use AI are going to replace workers that don't know how to use AI." The focus should be on mastering AI as an enhancement tool rather than viewing it as a complete replacement for human skills. Adaptability and continuous learning will be essential career skills. Notably, conventional wisdom about automation has been upended: economists long predicted that routine manual jobs would disappear first, yet AI has instead revolutionized knowledge work while struggling with physical tasks. The most successful professionals will be those who realistically assess AI's capabilities and limitations while developing the skills to collaborate effectively with these technologies.

Is AI likely to benefit junior or senior workers more?

Research indicates that while AI tends to proportionally boost performance more for lower-skilled workers, higher-skilled workers still maintain their absolute advantage in most contexts. However, the impact varies significantly across different tasks and domains. In software development, for example, junior developers often embrace AI tools more readily than mid-career professionals, potentially giving newer entrants unexpected advantages as the field evolves. Ultimately, career advancement in the AI era will depend less on seniority and more on one's ability to leverage these technologies to deliver exceptional value—regardless of experience level.

Dive deeper into global AI trends with Stanford's essential AI Index Report 2025.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.