Navigating the Intricacies of LLM Inference & Serving

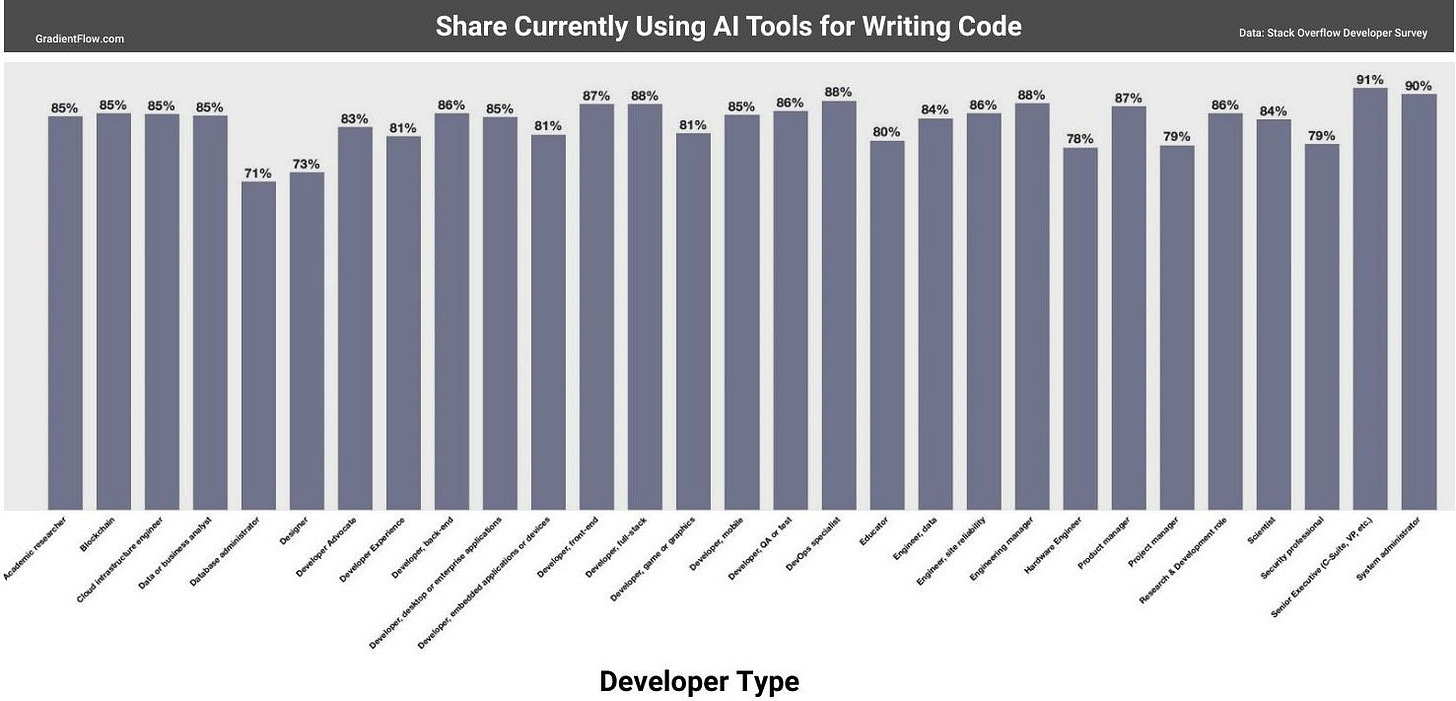

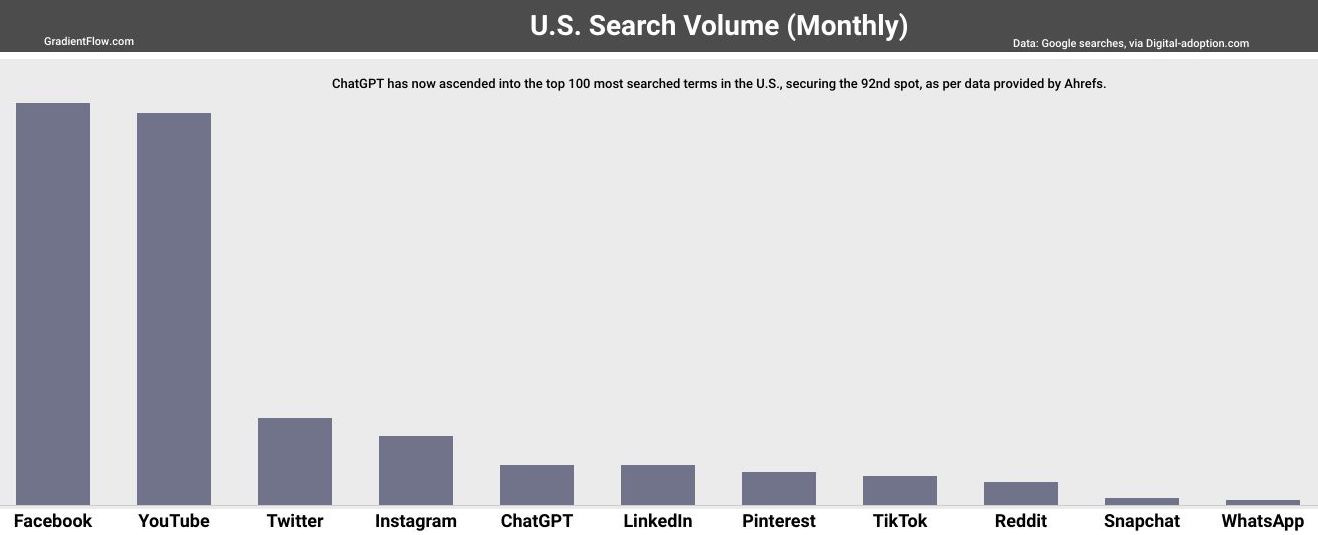

According to a recent Stack Overflow survey, 70% of developers use AI tools or plan to within the next few months, a sign of how pervasive AI optimism has become in the tech community. The benefits are already visible: one-third of these developers report increased productivity, evidence that AI tools can streamline workflows. Meanwhile, McKinsey's research estimates generative AI's potential contribution to global productivity at $2.6 trillion to $4.4 trillion annually. McKinsey predicts that generative AI and LLM technology will invigorate industries from banking to life sciences, with potential gains in the hundreds of billions of dollars.

This broad-based adoption is echoed in an 85% surge in AI mentions on S&P 500 earnings calls. As a result, businesses will need to deploy these foundation models at scale, efficiently and cost-effectively. Successful model inference and deployment will be paramount, driving not only the efficient use of AI but also its integration as the foundation for the next wave of digital innovation.

For many companies, most of this expenditure will go toward computing resources, and a significant portion of that will be dedicated to model inference: the process of using a trained model to make predictions on new, unseen data. This substantial financial burden, often comparable to the cost of employees, exposes the hidden costs of deploying large language models and underscores the need for cost-effective, efficient deployment strategies.

Rethinking Infrastructure for Foundation Models

The rise of LLMs is ushering in a new paradigm for building machine learning applications. However, the current state of Machine Learning Operations (MLOps) infrastructure reveals a stark reality: it simply wasn't designed for the sheer scale and complexity of LLMs. Because these models often have billions of parameters and require massive computational resources to train and deploy, traditional hardware systems and current model serving tools struggle to keep up.
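To make "billions of parameters" concrete, here is a back-of-the-envelope sketch of the memory needed just to hold a model's weights: parameter count times bytes per parameter. It assumes weights dominate and ignores activations, the KV cache, and framework overhead, so real deployments need more. The model sizes (7B and 70B) are illustrative, not specific products, and `weight_memory_gb` is a hypothetical helper.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumption: activations, the KV cache, and framework overhead are ignored,
# so real deployments need more than these numbers.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 also uses 2 bytes per parameter
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the size of the raw weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Illustrative model sizes only.
    for params in (7e9, 70e9):
        for dtype in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(params, dtype)
            print(f"{params / 1e9:.0f}B params @ {dtype}: {gb:.1f} GB")
```

At 16-bit precision, a 70B-parameter model needs about 140 GB for its weights alone, more than the 80 GB on a single high-end GPU, so it must be sharded across devices or quantized; at 4 bits the same weights shrink to roughly 35 GB.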

While the recent focus has been on startups that provide tools for training and fine-tuning LLMs, it is becoming increasingly clear that this is only part of the story. We also need solutions that effectively address the challenges of inference, serving, and deployment, areas that I believe are somewhat underserved in the market. The rise of LLMs demands that we rethink our MLOps tools and processes, and necessitates a more holistic approach towards building and deploying these models. The future of MLOps should be equipped to fully embrace the era of large foundation models.

In transitioning from NLP libraries and models to LLM APIs, I have encountered two key challenges: cost and latency. While the proliferation of LLM providers is starting to drive down prices, offering some relief on the cost front, latency remains a thorny issue. The high processing times inherent to LLMs continue to hinder real-time applications, even as user numbers and applications multiply.

Batch inference adds another layer of complexity to implementing LLMs efficiently. This, coupled with the latency issue, emphasizes the need for solutions that directly address these operational challenges. The quality and robustness of these models also deserve attention. The outputs of LLMs, sometimes marred by their limited understanding of context, can be suboptimal and brittle.

Their susceptibility to adversarial attacks and data poisoning compounds the robustness problem. Beyond these, we must also contend with the architectural constraints of LLMs: no single model is fit for every purpose, and fine-tuning or other adaptations may be necessary for optimal performance across different applications.

In essence, the emergence of LLMs calls for a reinvention of our MLOps tools and processes. To fully usher in the era of large foundation models, we must broaden our perspective and strive for comprehensive solutions that cater to all facets of LLM deployment.

The Emerging Landscape of LLM Deployment

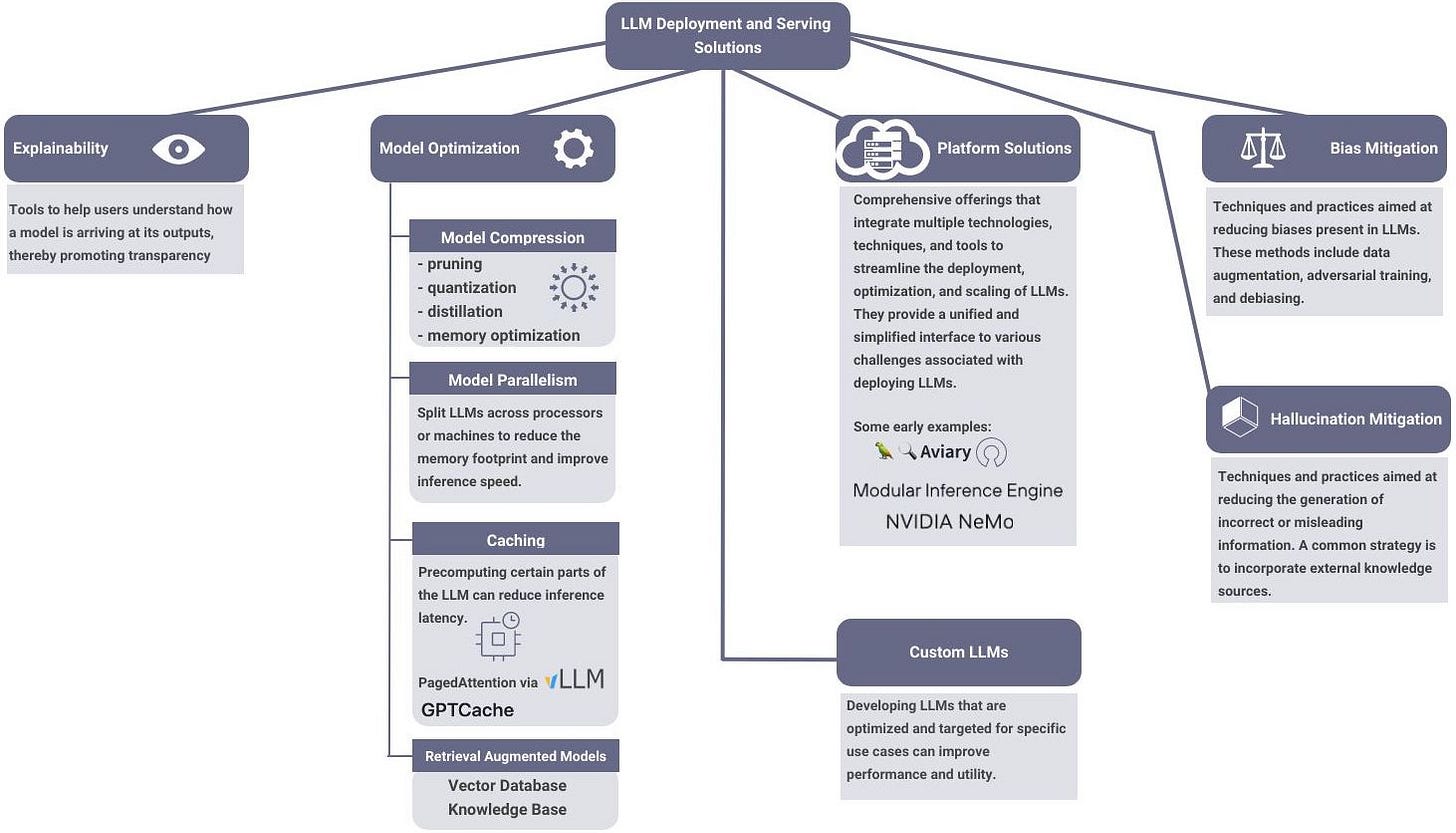

Hope is on the horizon. Several teams are working on tools and strategies that will make LLMs more accessible, interpretable, and efficient during model inference and serving. A diverse range of approaches, from model optimization to platform solutions, is under active development to address the unique challenges of deploying LLMs.

Emerging open-source projects like Aviary are pushing the boundaries of what's possible. Using cutting-edge optimizations such as continuous batching and model quantization, Aviary promises higher LLM inference throughput and lower serving costs. It also simplifies the deployment of new LLMs and offers unique autoscaling support.
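Why does continuous batching raise throughput? The toy simulation below is a minimal sketch under stated assumptions, not Aviary's implementation: each request needs a fixed number of decode steps (one token per step), the accelerator runs at most `batch_size` requests per step, and every step costs the same. Real servers must also handle prefill, KV-cache memory limits, and variable step times. Both function names are hypothetical.

```python
from collections import deque
from typing import List


def static_batching_steps(output_lengths: List[int], batch_size: int) -> int:
    """Decode steps when each fixed batch must finish its longest request first."""
    steps = 0
    for i in range(0, len(output_lengths), batch_size):
        batch = output_lengths[i:i + batch_size]
        steps += max(batch)  # short requests leave their slots idle until the longest ends
    return steps


def continuous_batching_steps(output_lengths: List[int], batch_size: int) -> int:
    """Decode steps when finished requests leave and queued ones join every step."""
    waiting = deque(output_lengths)  # assumes every length is a positive integer
    running: List[int] = []  # tokens still to generate for each in-flight request
    steps = 0
    while waiting or running:
        # Fill any free slots with queued requests before the next decode step.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decode step produces one token for every running request.
        running = [remaining - 1 for remaining in running]
        steps += 1
        # Evict requests that have produced all of their tokens.
        running = [remaining for remaining in running if remaining > 0]
    return steps


if __name__ == "__main__":
    lengths = [100] + [10] * 7  # one long generation, seven short ones
    print("static:", static_batching_steps(lengths, batch_size=2))
    print("continuous:", continuous_batching_steps(lengths, batch_size=2))
```

With one 100-token request and seven 10-token requests on two slots, static batching takes 130 steps (100 + 10 + 10 + 10), while continuous batching finishes in 100: the short requests stream through the second slot while the long one is still generating.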

We are in the early stages of developing MLOps infrastructure specifically tailored for LLMs and foundation models. This new infrastructure will allow us to deploy LLMs more efficiently, reliably, and securely. The way we build and deploy LLMs is being redefined, and those who stay ahead of the curve will be the first to reap the rewards of this new technology.

Data Exchange Podcast

An Open Source Data Framework for LLMs: Jerry Liu is CEO and co-founder of LlamaIndex, an open source project for integrating private data with large language models (LLMs). LlamaIndex streamlines data ingestion, indexing, and retrieval, tailoring LLMs to specific needs and improving AI performance in personal and commercial applications.

Modern Entity Resolution and Master Data Management: Jeff Jonas, CEO of Senzing, is on a mission to democratize entity resolution (ER), a complex but vital process that links disparate data records representing the same real-world entity, enhancing data quality and facilitating analytics and AI applications. He discusses intricate aspects of ER like sequence neutrality and principle-based ER, and explores the potential role of emerging technologies like LLMs, vector databases, and graph databases in developing large-scale, real-time ER systems.
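The core idea of entity resolution, linking records that describe the same real-world entity, can be illustrated with a deliberately simple rule-based sketch. This is our own illustration, not Senzing's method: records are linked when they share a normalized email address or phone number, and links are merged transitively with a union-find structure. The field names `email` and `phone` and the matching rule are hypothetical; production ER systems weigh many more features and handle conflicting evidence. Because the final groups are the connected components of the "shares a key" relation, this toy links the same records together regardless of arrival order, a very small taste of the sequence neutrality mentioned above.

```python
from typing import Dict, List


def normalize_phone(phone: str) -> str:
    """Keep digits only, so '(555) 010-2000' and '555.010.2000' compare equal."""
    return "".join(ch for ch in phone if ch.isdigit())


def resolve_entities(records: List[Dict[str, str]]) -> List[List[int]]:
    """Group record indices that share a normalized email or phone, transitively."""
    parent = list(range(len(records)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    def union(i: int, j: int) -> None:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_j] = root_i

    first_seen: Dict[str, int] = {}  # match key -> first record index with that key
    for idx, rec in enumerate(records):
        keys = []
        email = rec.get("email", "").strip().lower()
        if email:
            keys.append("email:" + email)
        phone = normalize_phone(rec.get("phone", ""))
        if phone:
            keys.append("phone:" + phone)
        for key in keys:
            if key in first_seen:
                union(first_seen[key], idx)
            else:
                first_seen[key] = idx

    groups: Dict[int, List[int]] = {}
    for idx in range(len(records)):
        groups.setdefault(find(idx), []).append(idx)
    return sorted(groups.values())


if __name__ == "__main__":
    records = [
        {"name": "Jon Smith", "email": "JON@example.com", "phone": ""},
        {"name": "Jonathan Smith", "email": "jon@example.com", "phone": "(555) 010-2000"},
        {"name": "J. Smith", "email": "", "phone": "555.010.2000"},
        {"name": "Ana Lee", "email": "ana@example.com", "phone": "555-010-9999"},
    ]
    print(resolve_entities(records))
```

Records 0 and 2 share no field directly, but both match record 1, so all three resolve to one entity while record 3 stays separate.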

Game-Changing Tools for Optimizing Data Quality and Performance in Computer Vision

With the recent focus on LLMs, it’s easy to forget how critical computer vision models have become. Applications of computer vision can be found across many industries, and the technology is still growing rapidly. As companies invest in computer vision, the technology is expected to continue to improve efficiency and drive innovation.

The healthcare industry stands to benefit, with the market for computer vision in healthcare estimated to reach $2.57 billion by 2025, thanks to AI-powered diagnostic tools and wearables that detect diseases and health issues at an early stage. In a similar vein, the banking industry is keen to embrace facial recognition and mobile biometrics to streamline transactions, a market projected to grow to $9.6 billion by 2022.

Healthcare and banking aren't the only industries that stand to benefit from computer vision: the autonomous vehicle market is projected to reach $556.67 billion by 2026. Manufacturing processes are also getting a high-tech makeover with computer vision-enhanced quality control systems, and the retail industry is not far behind with computer vision-enhanced store layouts and checkout systems.

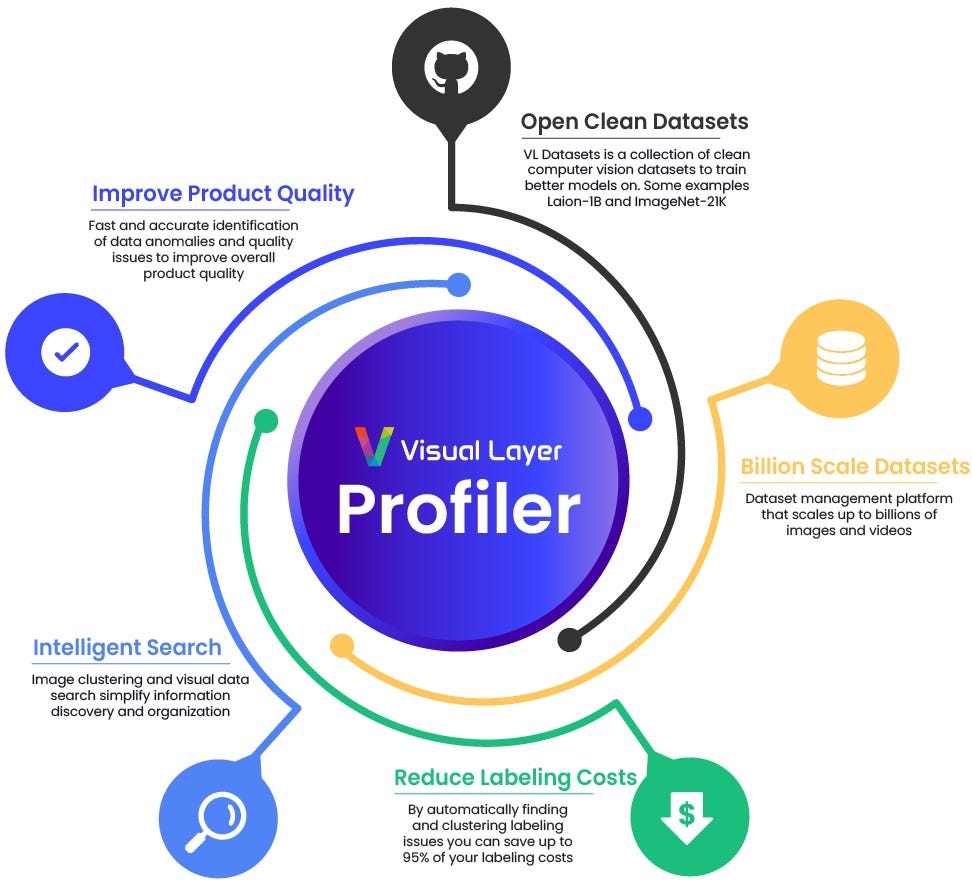

Modern computer vision applications rely on neural network models trained on vast numbers of images. Unfortunately, inaccurate or mislabeled data, duplicate images, outliers, data leakage, and blurry images all hamper the effective application of computer vision. When the startup Visual Layer scrutinized the popular LAION-1B dataset, it discovered an unsettling 105 million duplicate images, along with numerous other quality issues.
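The simplest form of duplicate detection groups files by a cryptographic hash of their bytes. The sketch below is an illustration under that assumption, not a description of how Visual Layer works: it only catches byte-identical copies, while near-duplicates (resized, cropped, or re-encoded images) require perceptual hashes or embedding similarity. `find_exact_duplicates` is a hypothetical helper that takes image bytes already loaded into memory (for example via `pathlib.Path.read_bytes`).

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def find_exact_duplicates(images: Dict[str, bytes]) -> List[List[str]]:
    """Group image names whose raw bytes are identical (same SHA-256 digest).

    Only byte-identical files are caught; near-duplicates need other methods.
    """
    by_digest: Dict[str, List[str]] = defaultdict(list)
    for name, data in images.items():
        by_digest[hashlib.sha256(data).hexdigest()].append(name)
    # Keep only digests shared by two or more images, in a stable order.
    return sorted(sorted(names) for names in by_digest.values() if len(names) > 1)


if __name__ == "__main__":
    # Stand-in byte strings; in practice these would be file contents.
    images = {
        "a.jpg": b"\xff\xd8cat",
        "b.jpg": b"\xff\xd8dog",
        "c.jpg": b"\xff\xd8cat",
    }
    print(find_exact_duplicates(images))
```

Hashing is linear in dataset size and needs no model, which makes it a cheap first pass before more expensive near-duplicate search.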

To tackle these hurdles, Visual Layer recently unveiled two transformative tools: VL Profiler and VL Datasets. VL Profiler brings an unprecedented level of precision to identifying and addressing data quality issues: users can view these issues and apply corrective actions to multiple images at once. Eliminating redundant and low-quality images improves the quality of the dataset and, consequently, a model's performance.
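Blurry images are one of the quality issues a profiler might flag. A common heuristic (our assumption here, not necessarily what VL Profiler does) scores sharpness by the variance of the image's Laplacian: sharp edges produce large second-derivative responses, and blur flattens them. The dependency-free sketch below works on a grayscale image given as nested lists; in practice one would typically use an image library such as OpenCV. The `threshold` of 100 is an arbitrary placeholder that would need tuning for each dataset.

```python
from typing import List


def laplacian_variance(gray: List[List[float]]) -> float:
    """Variance of the 4-neighbour Laplacian over the interior pixels of a grayscale image."""
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Discrete Laplacian: sum of the four neighbours minus 4x the centre.
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_blurry(gray: List[List[float]], threshold: float = 100.0) -> bool:
    """Flag an image as blurry when its Laplacian variance falls below the threshold."""
    return laplacian_variance(gray) < threshold


if __name__ == "__main__":
    flat = [[128] * 4 for _ in range(4)]  # no edges at all
    checker = [[255 if (x + y) % 2 else 0 for x in range(4)] for y in range(4)]  # all edges
    print(is_blurry(flat), is_blurry(checker))
```

A featureless image scores zero and is flagged, while a high-contrast checkerboard scores far above the threshold.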

Meanwhile, VL Datasets is a pristine collection of computer vision datasets curated to minimize the issues listed above. Because it is free and open source, VL Datasets provides an ideal starting point for training machine learning models on clean data.

The promise of computer vision is compelling, and the tools to make it a reality are readily available. Explore and integrate tools such as VL Profiler and VL Datasets into your workflow. They are indispensable for visualizing data and understanding the performance of your computer vision models. These tools enable teams to address the challenges in computer vision datasets and significantly improve model performance, ushering in AI applications with clear, computer-aided vision.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Mastodon, T2, or Post. This newsletter is produced by Gradient Flow.