The Hidden Foundation of AI Success: Why Infrastructure Strategy Matters

The New Data Center Revolution

I've been monitoring a fundamental shift in how we conceive of AI infrastructure. NVIDIA's concept of "AI factories" marks a departure from traditional data centers: these facilities are designed specifically to produce intelligence at scale by transforming raw data into real-time insights. Meanwhile, CoreWeave's recent public disclosures confirm what many of us suspected: there's a direct relationship between compute resources and model quality. As state-of-the-art models continue demanding exponentially more computational power, the limitations of generalized cloud environments become increasingly apparent. The message is clear: purpose-built infrastructure with advanced GPUs, high-speed networking, and specialized cooling is no longer optional for AI applications.

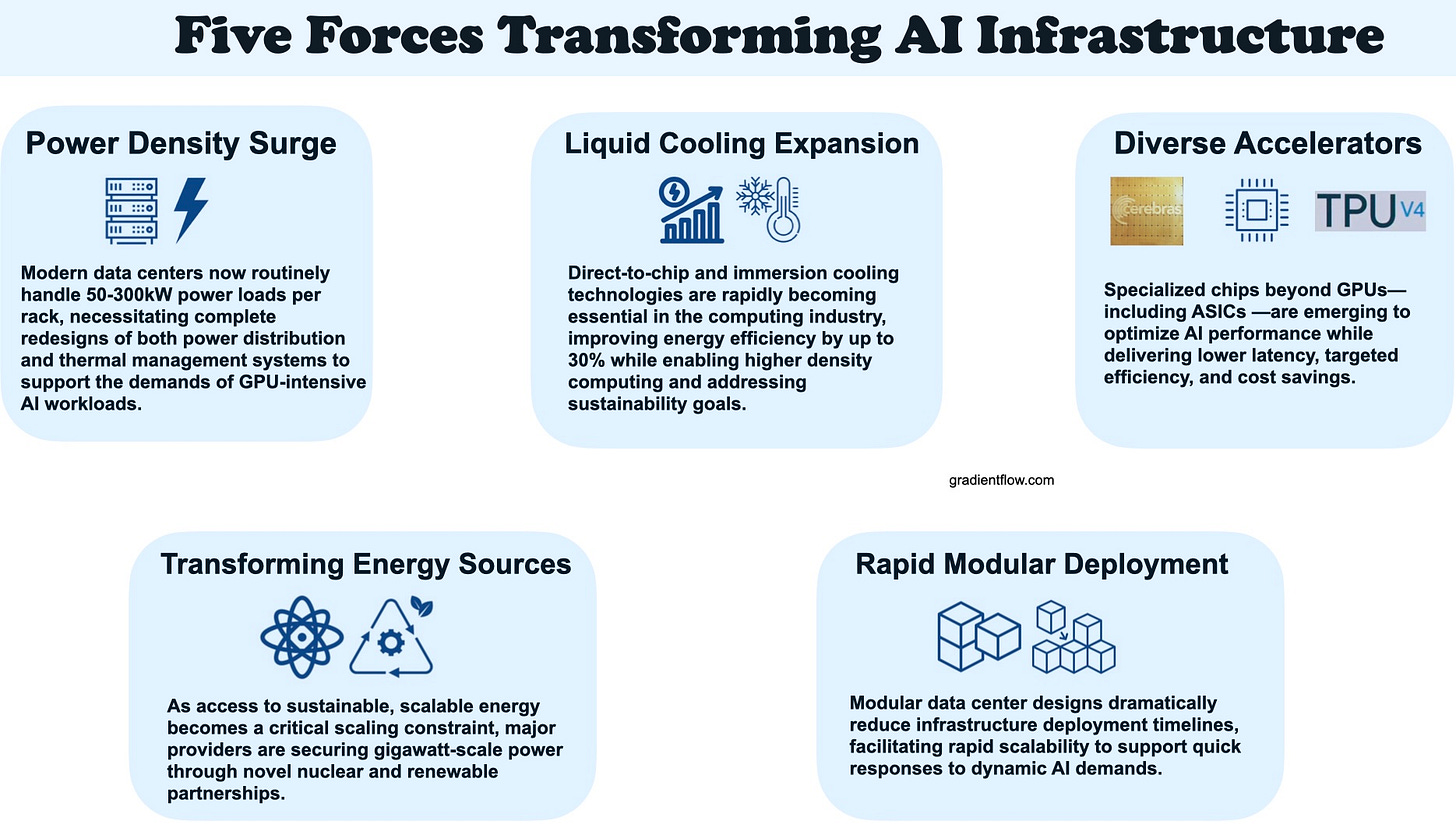

Today's AI workloads aren't just pushing traditional data centers to their limits; they're rewriting the rules entirely. A single rack can now demand up to 300 kW of power, dwarfing traditional setups and fundamentally reshaping what's achievable. Even if you're not personally managing infrastructure, these changes profoundly influence your team's ability to innovate, experiment, and compete. Understanding how physical constraints shape your AI capabilities is now central to strategic planning and your team's future competitiveness.

The rise of agentic systems and advanced reasoning models is reshaping infrastructure demands beyond traditional AI workloads. Consider today's coding agents, which tackle tasks like resolving Jira tickets or debugging code by burning computational resources through brute-force iteration, much like solving a complex puzzle by persistent trial and error. Likewise, deep research tools autonomously browse the web, reason over what they find, and run comprehensive data analyses, and they can take anywhere from minutes to hours to produce a report. Both cases show how advanced AI capabilities depend on robust compute infrastructure to deliver results within reasonable timeframes. As these autonomous systems grow increasingly central to business operations, organizations must recognize that their competitive edge hinges not just on model access but on the performance, efficiency, and reliability of the infrastructure supporting them.

Policy Frameworks at a Crossroads

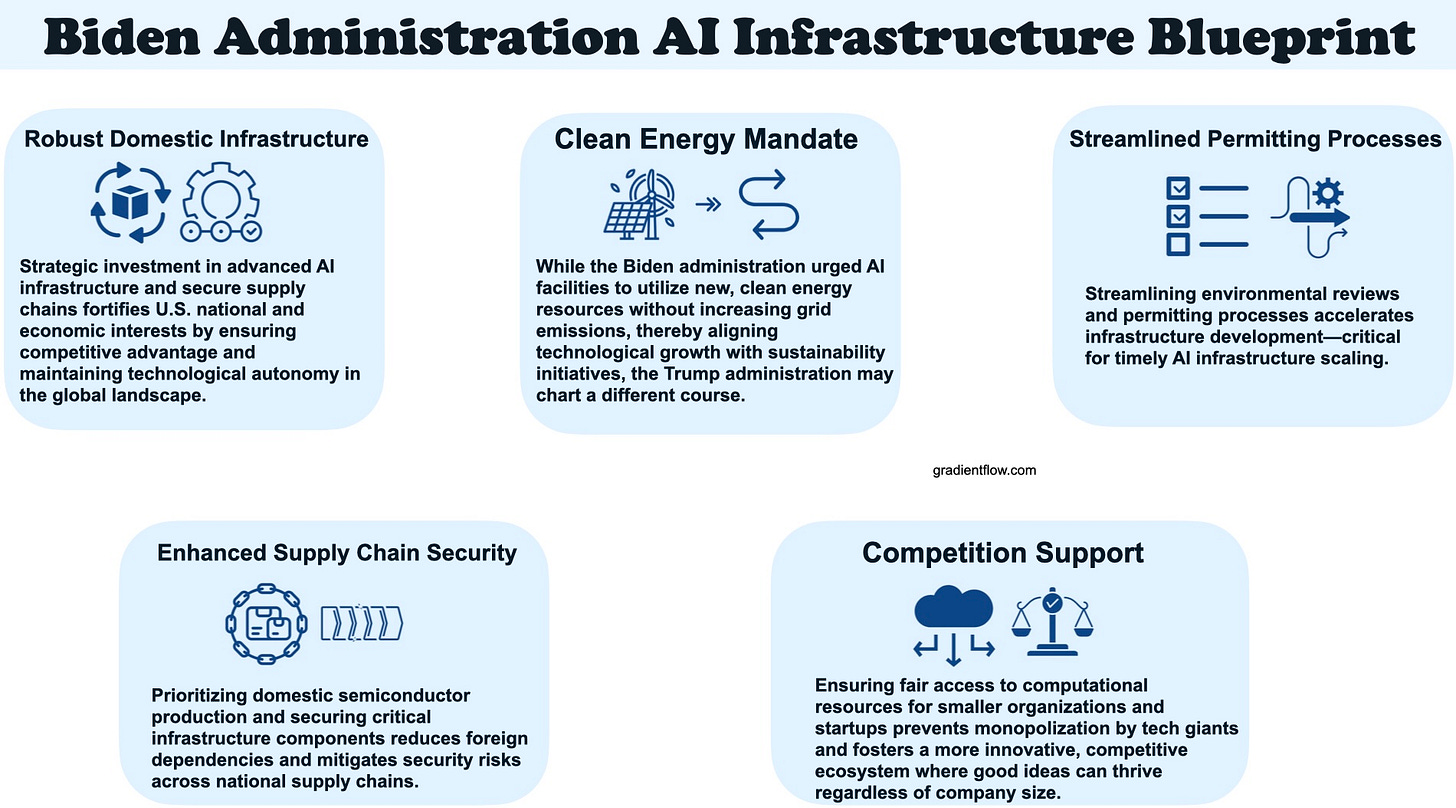

The Biden Administration's January 2025 Executive Order established an ambitious framework to secure U.S. leadership in global AI infrastructure, setting aggressive timelines for frontier AI data centers, clean energy procurement, and streamlined permitting. However, as a new administration takes office, there's considerable uncertainty regarding the continuity and execution of these plans. AI teams should monitor policy shifts closely, particularly around energy mandates and the critical permitting processes that directly affect deployment speed.

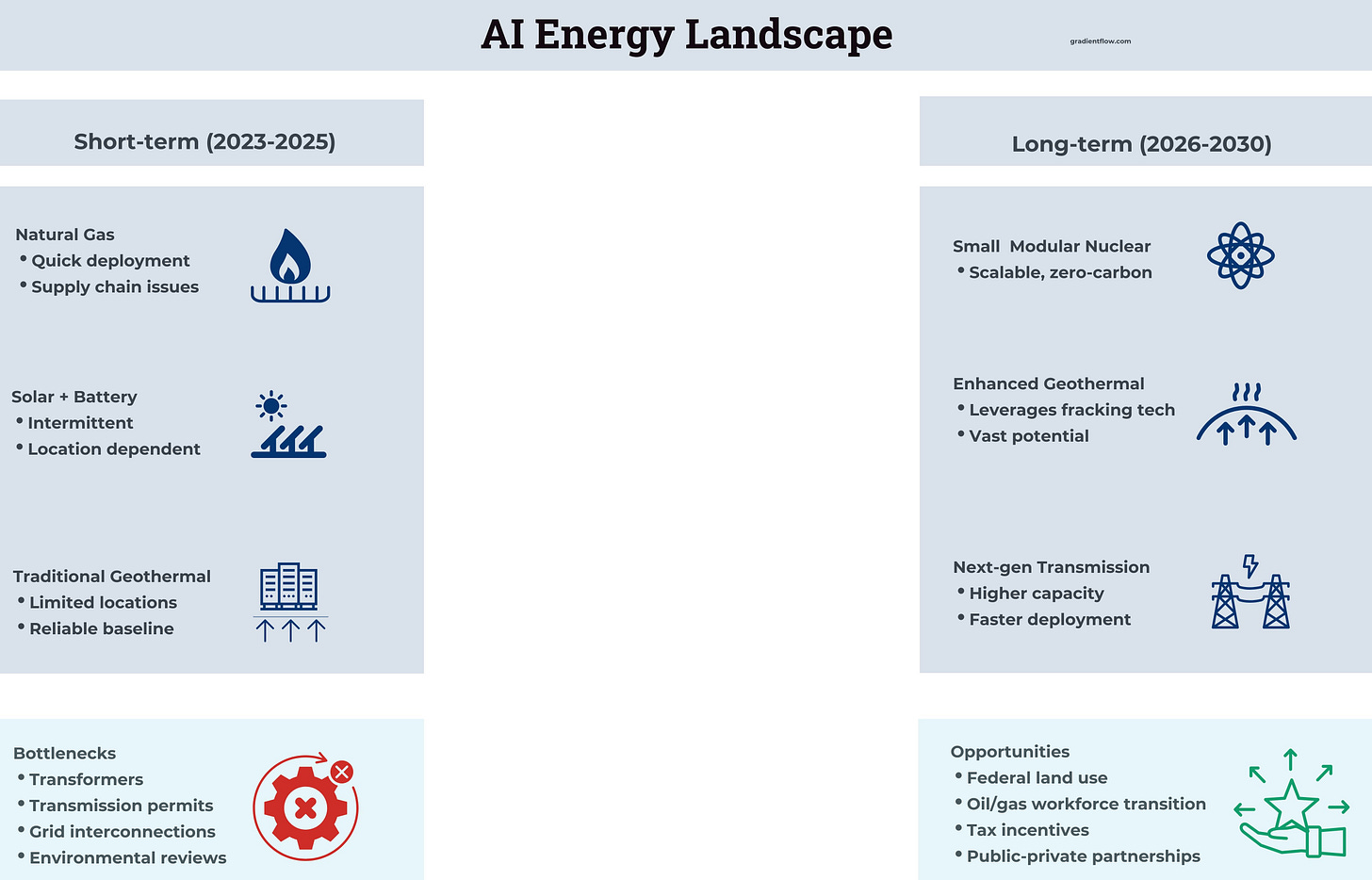

America's competitive edge in AI continues to be determined by how effectively we build and implement computational infrastructure. With compute demands for frontier models rising 4-5x annually, we'll soon need gigawatt-scale electricity for cutting-edge training runs. This creates twin challenges: developing massive training facilities for model development and establishing distributed data centers for nationwide AI deployment. I see significant gaps in our approach — particularly around the feasibility of clean energy timelines and persistent bottlenecks in transmission infrastructure and transformer manufacturing.
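
To get a feel for what 4-5x annual growth means, here is a minimal sketch of the compounding arithmetic. The function name and the five-year horizon are my own illustrative choices, and the output describes relative compute demand only. It says nothing about power draw, which also depends on hardware efficiency gains.

```python
def compute_growth(annual_factor: float, years: int) -> float:
    """Cumulative growth multiple after compounding annual_factor for `years` years."""
    return annual_factor ** years


if __name__ == "__main__":
    # 4x and 5x are the low and high ends of the annual growth range cited above.
    for years in range(1, 6):  # five-year horizon is an illustrative assumption
        low = compute_growth(4.0, years)
        high = compute_growth(5.0, years)
        print(f"after {years} year(s): {low:,.0f}x to {high:,.0f}x today's compute")
```

Even at the low end of the range, compounding pushes frontier compute demand up by three orders of magnitude within five years, which is why electricity and transmission capacity become the binding questions.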

Strategic Imperatives for AI Teams

Infrastructure as Strategic Differentiator: I'm seeing a clear, quantifiable correlation between computational investment and AI capability—more compute leads directly to better models. Teams with superior infrastructure can train larger models, run more experiments, and deploy more capable agents than their competitors. Higher-performance systems also enable shorter development cycles and faster market response. This isn't just an IT decision anymore—it should be elevated to strategic planning and directly tied to product roadmaps.

Purpose-Built vs. General-Purpose: The numbers don't lie. Purpose-built AI infrastructure delivers ~20% higher Model FLOPs Utilization (MFU, the share of the hardware's theoretical peak throughput that a training run actually achieves) and up to 10x faster spin-up times compared to generalized environments. The "build vs. buy" decision now includes substantial performance implications, not just cost considerations.
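
Model FLOPs Utilization is the fraction of a system's theoretical peak throughput that a training run actually achieves, so a utilization gain translates directly into wall-clock time. The sketch below works through that arithmetic. It assumes the ~20% figure is a relative improvement, and every input in the example run (job size, GPU count, peak throughput, baseline utilization) is a hypothetical placeholder rather than a measured value.

```python
SECONDS_PER_DAY = 86_400


def training_days(total_flops: float, num_gpus: int,
                  peak_flops_per_gpu: float, mfu: float) -> float:
    """Wall-clock days for a job, given the fraction of peak throughput achieved."""
    achieved_flops_per_second = num_gpus * peak_flops_per_gpu * mfu
    return total_flops / achieved_flops_per_second / SECONDS_PER_DAY


def time_saved_fraction(relative_mfu_gain: float) -> float:
    """Fraction of wall-clock time saved when utilization rises by a relative gain."""
    return 1 - 1 / (1 + relative_mfu_gain)


if __name__ == "__main__":
    # All values below are hypothetical, chosen only to illustrate the arithmetic.
    job = dict(total_flops=1e24, num_gpus=1024, peak_flops_per_gpu=1e15)
    baseline_mfu = 0.35
    improved_mfu = baseline_mfu * 1.20  # assumes "~20% higher" is a relative gain

    print(f"baseline: {training_days(mfu=baseline_mfu, **job):.1f} days")
    print(f"improved: {training_days(mfu=improved_mfu, **job):.1f} days")
    print(f"time saved: {time_saved_fraction(0.20):.1%}")
```

Because training time scales with the inverse of utilization, a 20% relative gain saves about one sixth of the wall-clock time regardless of job size, and the same hardware budget can cover correspondingly more experiments.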

Energy as the New Constraint: Power availability, not hardware, is becoming the primary scaling bottleneck. Teams need to factor energy strategy into planning from day one—without sufficient power, even the most sophisticated hardware deployments will underperform.

Cooling Innovation Necessity: For high-density AI deployments, liquid cooling isn't optional—it's essential. The cooling approach you select will directly influence your performance ceiling and operational costs, making this a first-order decision rather than an implementation detail.

Security by Design: High-value AI models are becoming prime targets for sophisticated cyber threats and sabotage. Security isn't something you bolt on later. It has to be built into both your digital and physical infrastructure from the outset.

Distributed Intelligence Architecture: Inference workloads need to move closer to users. Optimizing latency and throughput through strategic edge deployments isn't just a technical decision; it shapes user satisfaction, engagement, and operating costs.

Automated Lifecycle Management: Real-time monitoring, automated health checking, and proactive validation aren't just operational nice-to-haves. They prevent downtime, protect expensive resources, and boost your overall operational efficiency, giving your team more room to innovate.

Future-Proofing Through Modularity: Hardware innovation cycles are accelerating. Designing modular systems for easy upgrades—not total replacements—future-proofs your investments, ensuring your AI capabilities can rapidly adopt new technologies without losing momentum.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Linkedin, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.