Is Your AI Ready for the Next Wave of Governance?

As artificial intelligence seeps into everything from triage decisions in hospitals to the way capital is allocated on Wall Street, the question is less whether we should govern AI than how quickly we can build guardrails that keep pace with the underlying technology. Over the past decade the focus has shifted from high-level principles drafted in Washington during the Obama years to concrete rule sets such as the NIST AI Risk Management Framework and the European Union’s risk-tiered AI Act. The result is a patchwork: European regulators have gone all-in on prescriptive oversight, while the United States still relies on a looser, sector-by-sector approach. That divergence creates friction for global firms and complicates any hope of seamless cross-border standards.

The complexity of AI governance extends beyond national borders and regulatory philosophies. Companies such as Microsoft, Google, and SAP are implementing internal ethics committees and accountability structures, reflecting a growing recognition that effective governance requires collaboration among technologists, ethicists, legal experts, and affected communities. That multi-stakeholder approach is essential for addressing algorithmic bias and ensuring transparency, challenges that no single entity can solve in isolation.

Some early movers are already showing how that collaboration works in practice. AstraZeneca pairs a “Responsible AI Playbook” with independent audits and a risk-based classification, so a chatbot that suggests drug dosages faces far tighter scrutiny than an internal knowledge search. Major hospital networks have copied the model, convening AI ethics committees that include clinicians, data scientists, and legal counsel to vet every GenAI prototype before it touches patient data. On the enterprise-IT side, IBM’s generative-AI stack bakes in explainability layers and rigorous data-lineage checks, proving that governance can be engineered into the plumbing rather than bolted on later. These initiatives hint at a future in which responsible AI is not a compliance afterthought but a design constraint woven into product roadmaps and AI platform architectures.
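To make the idea of risk-based classification concrete, here is a minimal Python sketch of how a use case might be mapped to a risk tier and then to a set of required reviews. Everything in it is an illustrative assumption: the tier names, the two screening questions, and the review steps are hypothetical and are not taken from AstraZeneca's playbook or from any hospital's actual process.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Hypothetical three-level scale; real programs define their own tiers."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AIUseCase:
    """Hypothetical intake record for a proposed AI system."""
    name: str
    touches_patient_data: bool
    influences_clinical_decisions: bool


def classify(use_case: AIUseCase) -> RiskTier:
    """Assign a tier from two assumed screening questions."""
    if use_case.influences_clinical_decisions:
        return RiskTier.HIGH
    if use_case.touches_patient_data:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Assumed mapping: higher tiers inherit every lower-tier review and add more.
REQUIRED_REVIEWS = {
    RiskTier.LOW: ["team sign-off"],
    RiskTier.MEDIUM: ["team sign-off", "ethics committee review"],
    RiskTier.HIGH: ["team sign-off", "ethics committee review", "independent audit"],
}


def required_reviews(use_case: AIUseCase) -> list[str]:
    """Return the review steps a use case must clear before launch."""
    return REQUIRED_REVIEWS[classify(use_case)]


dosage_bot = AIUseCase("dosage chatbot", touches_patient_data=True,
                       influences_clinical_decisions=True)
knowledge_search = AIUseCase("internal knowledge search", touches_patient_data=False,
                             influences_clinical_decisions=False)

print(dosage_bot.name, "->", required_reviews(dosage_bot))
print(knowledge_search.name, "->", required_reviews(knowledge_search))
```

The point of the sketch is the shape rather than the specifics: scrutiny is looked up from the tier, so tightening oversight for a class of systems means changing one table instead of renegotiating each project.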

The next phase of AI governance will likely demand two fundamental shifts. First, firms must embed accountability deeper than compliance checklists, by giving product teams clear ownership of ethical outcomes and by opening their models to meaningful third-party audits. Second, policymakers need to coordinate internationally on a slim core of shared metrics (bias, transparency, and safety) so that innovation is not trapped behind conflicting national requirements.

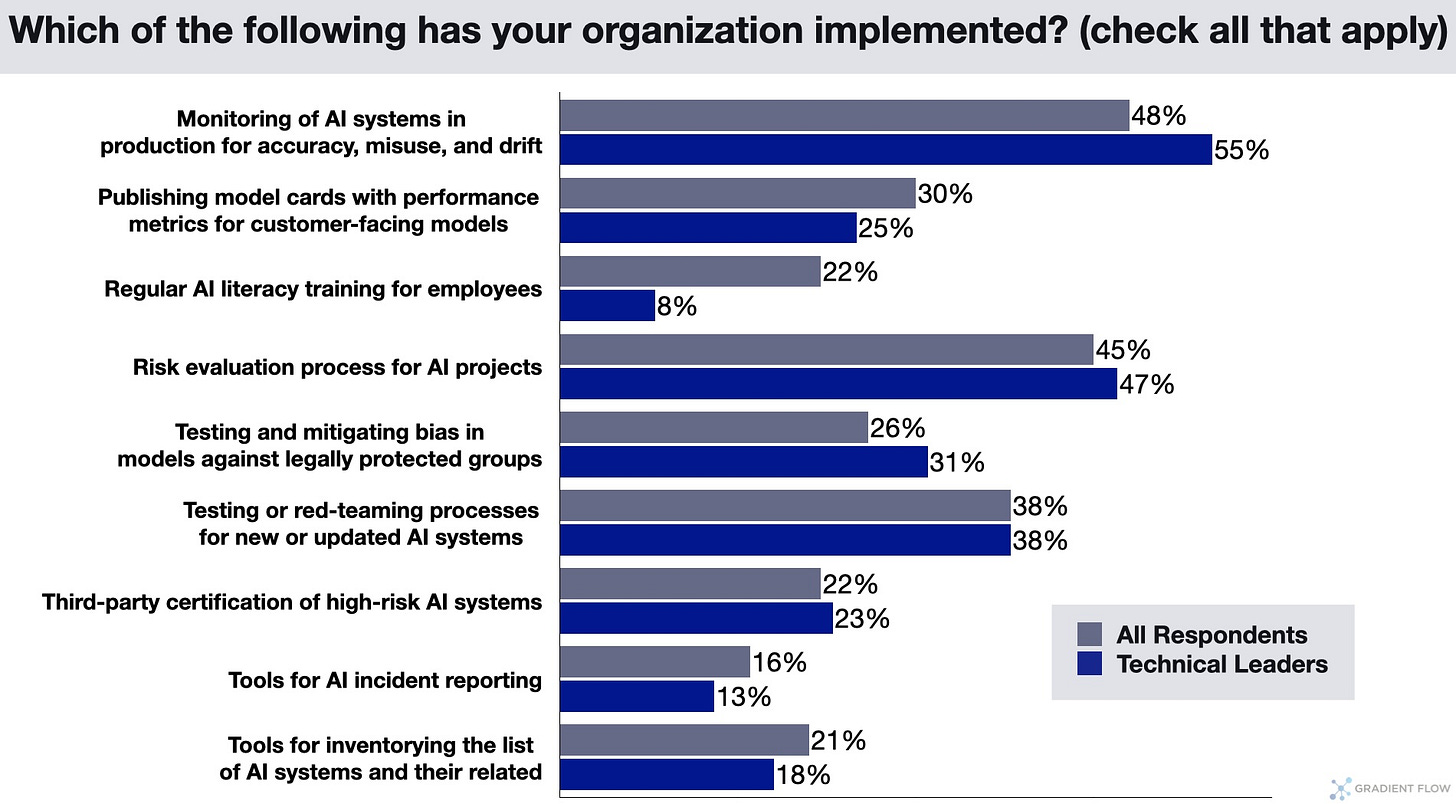

For a data-driven look at how business leaders, regulators, and technologists view these challenges, see the results of our 2025 AI Governance Survey.

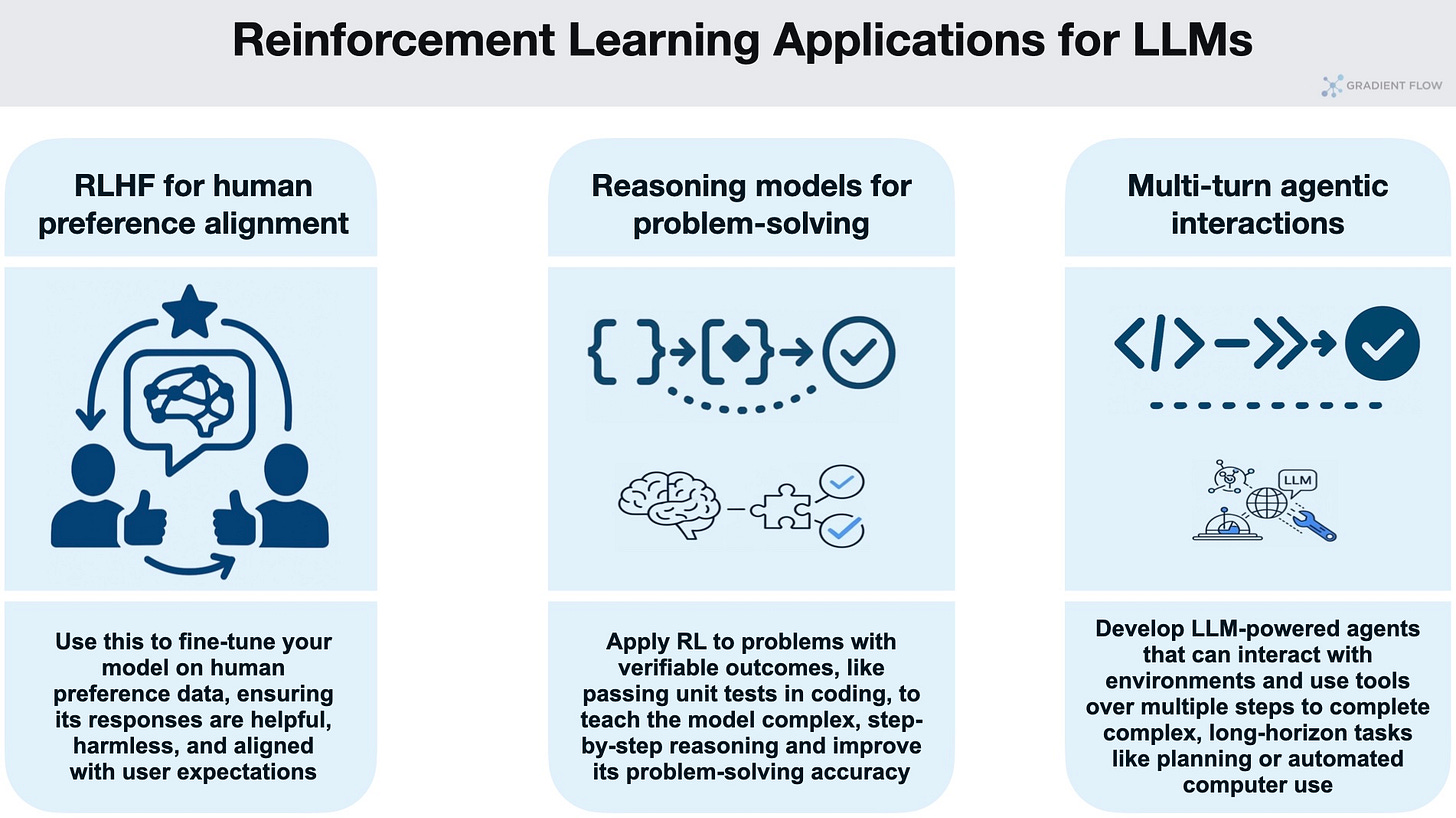


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, while also serving as the Strategic Content Chair for AI at the Linux Foundation. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.