Build better Large Language Models with WeightWatcher

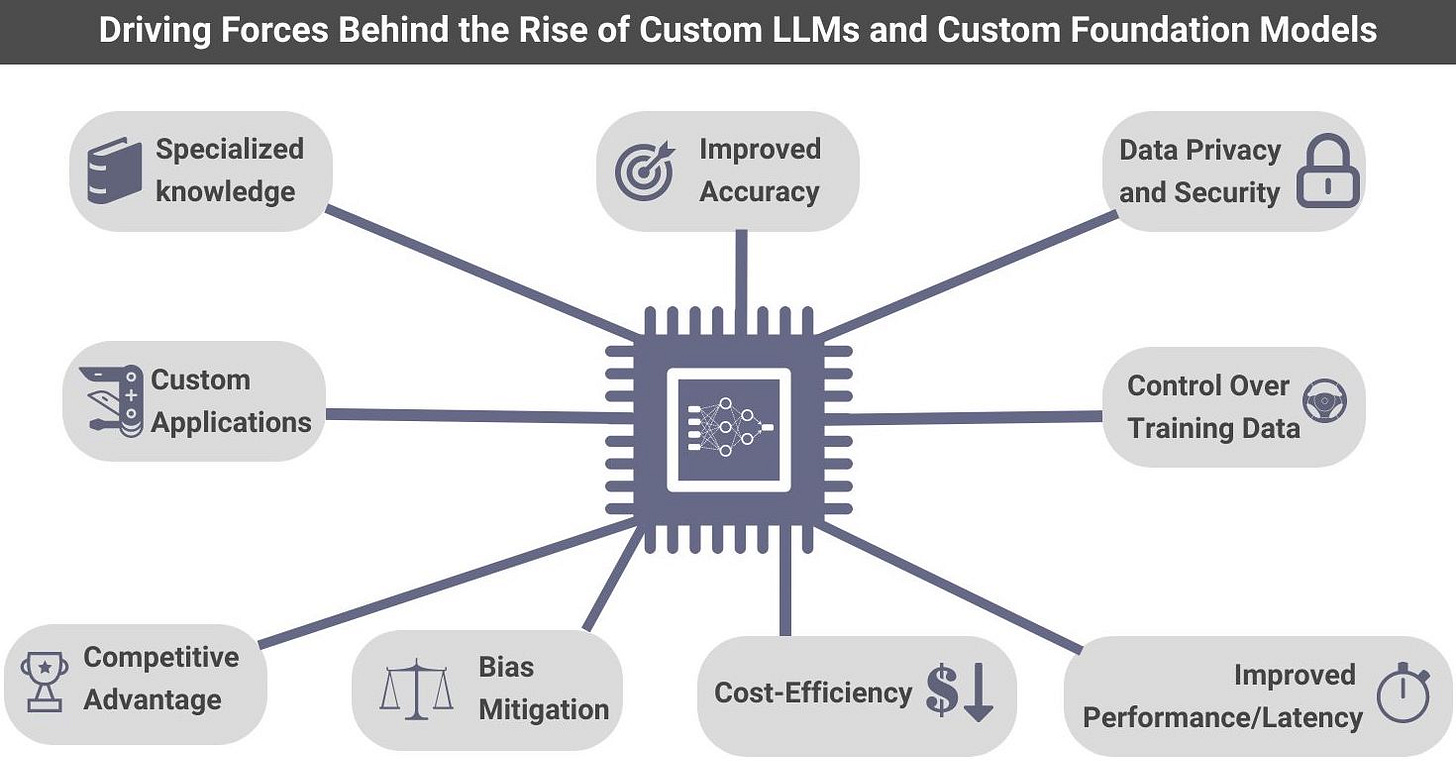

Companies are beginning to harness Custom Large Language Models (LLMs) and Custom Foundation Models due to their superior performance in specialized fields and applications. These models hold the potential to elevate accuracy, bolster data privacy and security, and deliver a competitive edge. For instance, domain-specific LLMs are more adept at understanding complex, field-specific terminology and concepts in sectors like healthcare, law, or finance, and they handle industry jargon and context with more precision. Additionally, in-house training of these models ensures data privacy and security, a vital concern for industries dealing with confidential information. Custom models also present an opportunity to reduce or eliminate biases present in more generalized models.

The example of BloombergGPT illustrates the effectiveness of custom models. It was trained specifically for the financial industry and yielded significantly superior results on financial tasks compared to general models. Although custom models require substantial computational resources and expertise, their benefits often outweigh the costs. New startups like Lamini are at the forefront, developing cost-efficient tools that facilitate the creation of custom LLMs.

Training custom models that can power AI applications involves a few crucial stages. Starting with the "Pre-training" stage, a "Base Model" is built using extensive data including web pages, books, source code, and other data types. There is an ever-expanding roster of open-source base models, backed by user-friendly licenses, that are readily available. Next, "Supervised Fine-Tuning" refines the base model into a task-oriented "Assistant Model." The third stage, "Reward Modeling," uses expert data to create a feedback system for model performance. Lastly, the "Reinforcement Learning" stage optimizes the model using this feedback.

Enter WeightWatcher

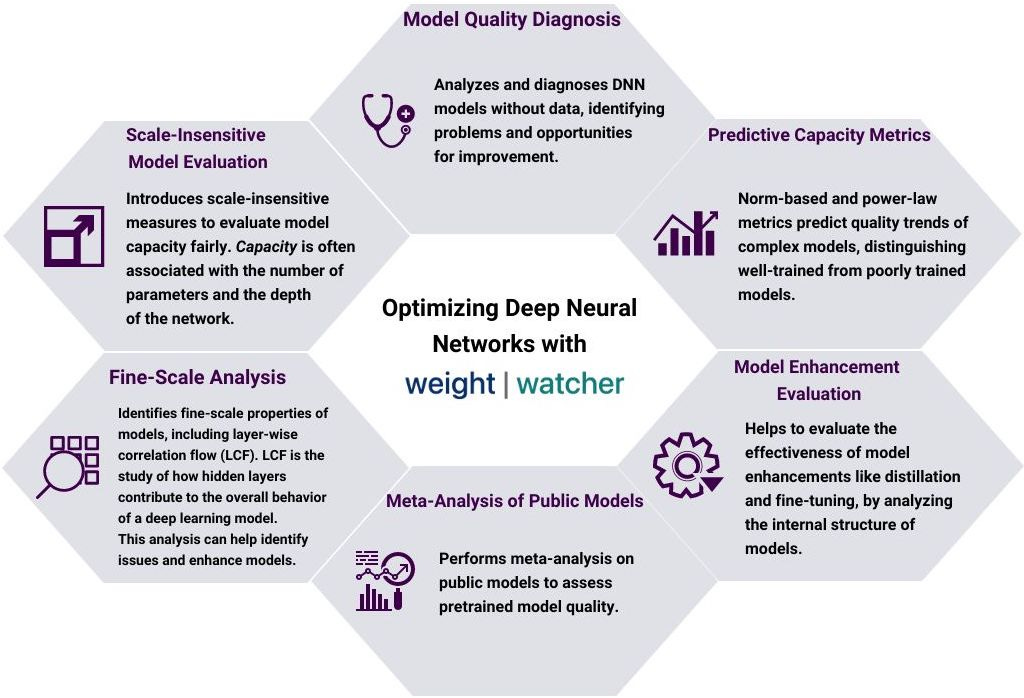

WeightWatcher (WW) is an open-source tool that can analyze Deep Neural Networks (DNNs) without needing access to training or test data. It can be used to monitor, predict, and diagnose potential problems when compressing or fine-tuning pre-trained models. It can also provide layer warning labels and predict test accuracies. WW uses power-law fits to model the eigenvalue density of the weight matrices in any DNN. The average power-law exponent across layers is a good indicator of how accurate the model will be when it is used to make predictions or classifications. In simpler terms, WW is a tool that helps researchers and developers analyze and improve the performance of deep learning models.
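The power-law idea can be illustrated with a small sketch. Assuming the eigenvalues of a layer's weight correlation matrix have already been computed, a tail exponent can be estimated with a standard maximum-likelihood (Hill) estimator. This is not WeightWatcher's own implementation: the function name `estimate_alpha`, the fixed `xmin` cutoff, and the synthetic Pareto sample standing in for a real spectrum are all assumptions made for illustration.

```python
import math
import random


def estimate_alpha(eigenvalues, xmin):
    """Maximum-likelihood (Hill) estimate of a power-law tail exponent.

    Assumes the density of eigenvalues above xmin follows p(x) ~ x^(-alpha).
    """
    tail = [x for x in eigenvalues if x >= xmin]
    if not tail:
        raise ValueError("no eigenvalues at or above xmin")
    log_sum = sum(math.log(x / xmin) for x in tail)
    if log_sum == 0:
        raise ValueError("all tail values equal xmin; exponent is undefined")
    return 1.0 + len(tail) / log_sum


# Synthetic stand-in for a layer's eigenvalue spectrum (hypothetical data):
# paretovariate(a) draws from a density proportional to x^-(a + 1) on x >= 1,
# so a = 2.0 corresponds to a true exponent of 3.0.
rng = random.Random(0)
eigenvalues = [rng.paretovariate(2.0) for _ in range(20000)]

alpha_hat = estimate_alpha(eigenvalues, xmin=1.0)
print(f"estimated alpha: {alpha_hat:.2f}")
```

In practice the cutoff `xmin` would itself need to be chosen from the data, and the per-layer exponents would then be averaged to obtain a model-level metric of the kind described above.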

WeightWatcher helps AI teams conserve resources. In DNN training, saturation is the stage where the model's performance no longer improves significantly with added parameters or computing power because it has reached its maximum learning potential from the available data. If a team is training a large language model and adding more parameters or compute does not lead to significant improvements in performance, they can use WeightWatcher to analyze the weight matrices of the model and identify if it has reached its optimal capacity. WeightWatcher can help detect saturation by monitoring models and the model layers to see if they are overtrained or overparameterized. It can also be used to predict test accuracies across different models, with or without training data, and detect potential problems when compressing or fine-tuning pre-trained models.
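As a rough sketch of what layer-level monitoring might look like, the snippet below labels layers by where their power-law exponent falls. The helper `flag_layers`, the layer names, and the exponent values are hypothetical, and the default thresholds of 2 and 6 are assumptions loosely based on the rule of thumb published by the WeightWatcher project; they do not come from this article and should be checked against the tool's documentation.

```python
def flag_layers(layer_alphas, low=2.0, high=6.0):
    """Label each layer by where its power-law exponent falls.

    low and high are illustrative thresholds (assumptions), not values
    taken from this article.
    """
    flags = {}
    for name, alpha in layer_alphas.items():
        if alpha < low:
            flags[name] = "possibly overtrained"
        elif alpha > high:
            flags[name] = "possibly undertrained"
        else:
            flags[name] = "ok"
    return flags


# Hypothetical per-layer exponents, e.g. collected from a weight analysis run.
layer_alphas = {"attention.0": 3.1, "mlp.0": 1.7, "mlp.1": 6.8}
flags = flag_layers(layer_alphas)
for name, label in flags.items():
    print(f"{name}: {label}")
```

A team could track such labels across checkpoints to see whether additional training is still changing the layers that were flagged.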

WeightWatcher can be instrumental in building custom LLMs and foundation models, complementing tools like LMSys. While LMSys ranks pre-trained models, WW assesses their weight matrices for quality. It monitors DNN models for overtraining or overparameterization, predicts test accuracies, and flags potential issues during model compression or fine-tuning. Hence, WW can help teams understand model performance in detail and make informed decisions about how to fine-tune the model for their specific domain.

After completing all four phases of the custom LLM training process, developers can use WeightWatcher to assess the quality of their fine-tuned model before they start collecting user feedback. Consider this a critical final verification, sparing teams from the intricate and costly procedure of acquiring user data prematurely. WeightWatcher's quality metrics can be used to evaluate the performance of bespoke models. More importantly, it uncovers potential problems that cannot be detected by examining training or test accuracies alone.

Summary

Despite demanding resources and expertise, the exceptional performance of Custom LLMs and Foundation Models in AI is driving increased investment across multiple industries, reflecting the growing interest in tools for building custom models. WeightWatcher, an open-source tool, supports teams in building these models by analyzing deep neural networks without needing data and diagnosing issues when adjusting pre-trained models. This helps save resources and enhances the accuracy and reliability of models. The WeightWatcher team is beginning to target those developing LLM applications and custom foundation models. I recommend exploring WeightWatcher, a potent tool designed to assist in the construction of superior and more streamlined models.

Data Exchange Podcast

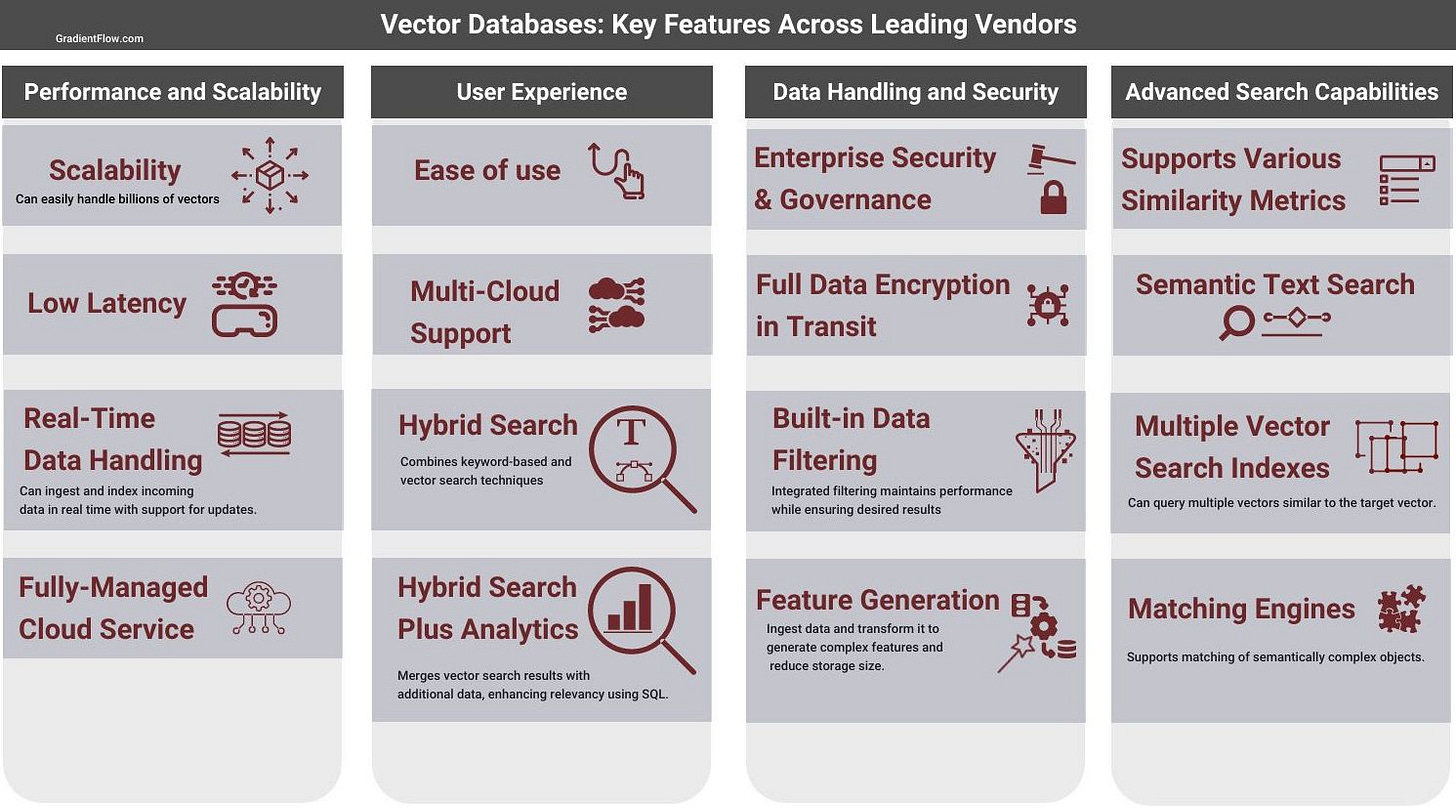

Vector Databases and the Rise of Instant Updates. Louis Brandy, VP of Engineering at Rockset, explains why incremental updates and metadata filtering will make vector databases more powerful and versatile. He also talks about hybrid search, retrieval-augmented LLMs, and the future of streaming architectures in the age of embeddings.

LLMs Are the Key to Unlocking the Next Generation of Search. Amin Ahmad, Vectara's co-founder, dissects the modern search landscape, discussing the shift from hybrid models (pre-ChatGPT), towards a refined search infrastructure that includes LLMs, vector databases, and embeddings for complex documents. We also explore multimodal search approaches, challenges like alignment and hallucination, and retrieval-augmented LLMs.

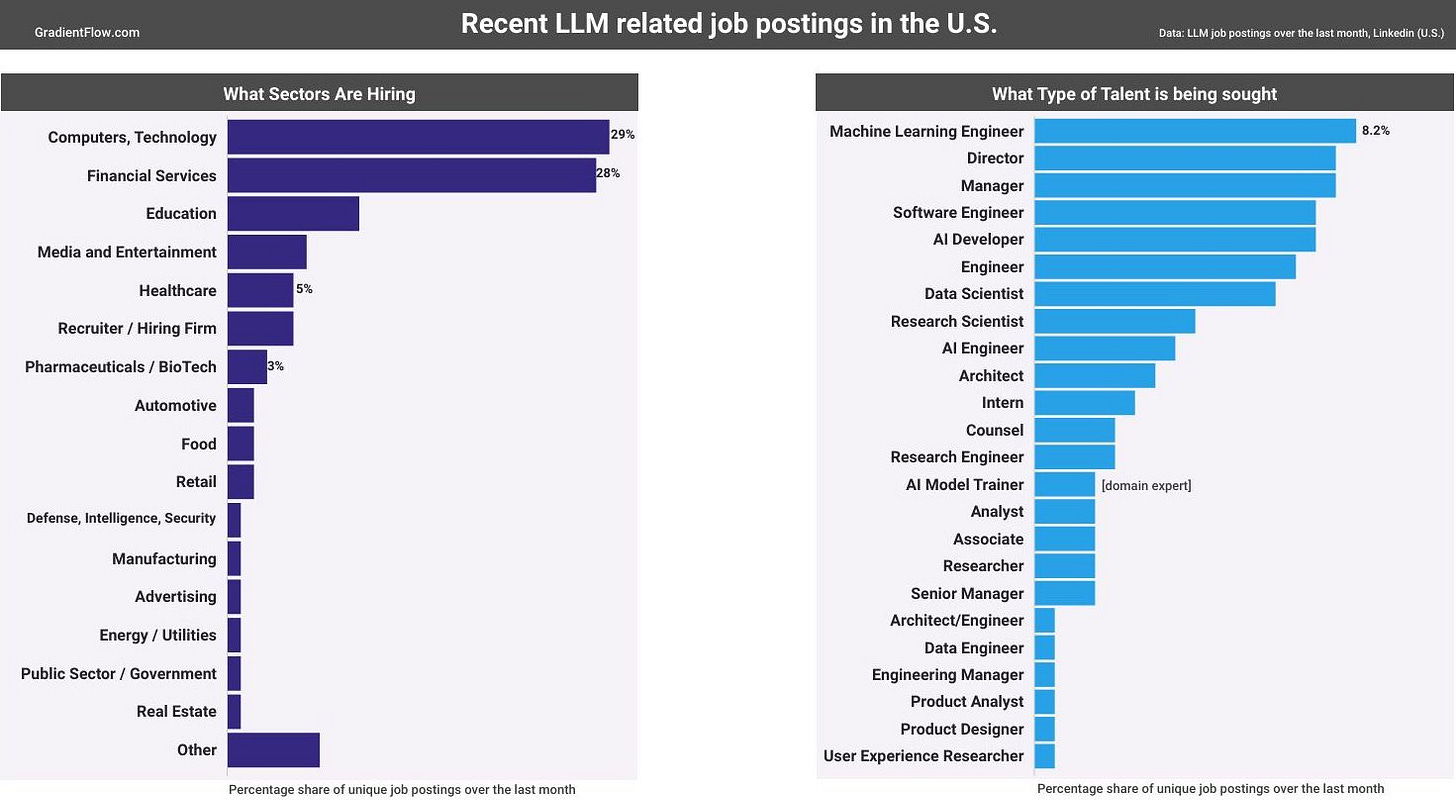

Signals from the Thriving LLM Job Market

The LLM job market is thriving, particularly in sectors such as technology, finance, healthcare, pharmaceuticals, biotech, media, entertainment, and academia. Considering the emerging interest from businesses operating in regulated sectors, such as financial services and healthcare/pharmaceuticals, startups developing LLM tools should prioritize the integration of enterprise features. These features include robust privacy and security measures, efficient access control, and comprehensive auditability, among others.

Prospective hires are expected to have a blend of expertise in NLP, AI, software engineering & protocols, scalable API solutions, and industry-specific knowledge. People who demonstrate a proven ability to manage technological risks, navigate issues related to data quality and training data, understand user interface design, and harness AI, all while effectively communicating with a wide range of audiences, are in high demand. Employers prioritize not only technological acumen, but also a commitment to responsible AI, sustainability, and trust.

If you enjoyed this newsletter please support our work by encouraging your friends and colleagues to subscribe:

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Mastodon, T2, or Post. This newsletter is produced by Gradient Flow.