AMD's Expanding Role in Shaping the Future of LLMs

In my recent exploration of hardware options for Large Language Models (LLMs), AMD's offerings stood out as particularly promising. In this analysis, I examine the factors that position AMD GPUs to benefit from the growth of LLMs and Generative AI. They range from performance and efficiency gains in demanding AI tasks to significant software advancements, and together they point to the niche AMD is carving out in this rapidly evolving field.

First, AMD hardware delivers performance and efficiency gains for demanding AI tasks. AMD chips include dedicated hardware acceleration for key AI building blocks, such as the matrix operations at the core of neural networks, unlocking substantial performance potential. Their high memory bandwidth and capacity also make it possible to handle larger AI models and datasets. Together, these hardware optimizations and memory capabilities make AMD solutions well suited to both training and inference in cutting-edge AI use cases. Sharon Zhou, CEO of Lamini, described her company's role this way: "My view on this is we're essentially almost like a CUDA layer on AMD, obviously not as deep as CUDA right now since we're a startup, but essentially we're that layer." Her comment underscores how companies like Lamini can build on AMD's hardware and software stack to further optimize AI workloads.
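To make the memory-capacity point concrete, here is a back-of-the-envelope sketch in Python. It counts only the memory needed to hold model weights (parameters × bytes per parameter). The 70-billion-parameter model and the 80 GB and 192 GB per-device capacities are illustrative assumptions, not figures from the discussion above.

```python
import math

def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in decimal GB (1e9 bytes).
    Ignores activations, KV cache, optimizer state, and framework overhead."""
    return n_params * bytes_per_param / 1e9

def min_devices(n_params: float, bytes_per_param: float, device_memory_gb: float) -> int:
    """Smallest number of devices whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(n_params, bytes_per_param) / device_memory_gb)

# Hypothetical example: 70B parameters stored in 16-bit precision (2 bytes each).
weights = weight_memory_gb(70e9, 2)
print(weights)  # 140.0

# Compare two hypothetical per-device memory capacities.
for capacity_gb in (80, 192):
    print(capacity_gb, min_devices(70e9, 2, capacity_gb))
```

Real deployments need considerably more headroom for activations, the KV cache during inference, and optimizer state during training, so treat these numbers as a lower bound.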

On the software side, AMD has invested heavily over the past decade in maturing and optimizing ROCm, its open-source software stack for AI. Greg Diamos, CTO of Lamini, recently noted that AMD's software stack is commonly perceived as deficient, but in his experience it was close to 90% complete in the capabilities needed for production AI deployments. Mature, optimized software is critical to unlocking the full performance potential of AMD hardware in real-world AI applications.

Moreover, AMD designed ROCm to be open source and modular, giving the open-source AI community easy access and the ability to contribute code. Compared with proprietary solutions like Nvidia's CUDA, this openness makes it easier to adopt ROCm and AMD hardware for AI development. Collaborations with projects such as OpenAI's Triton, Fedora, and PyTorch show how this open ecosystem provides diverse integration options.

Market trends are also swinging in AMD's favor. AMD chips have shorter lead times than Nvidia's, which improves availability and eases adoption for many customers. Endorsements from industry giants such as Microsoft, Meta, and OpenAI signal strong momentum and trust in AMD, reminiscent of the support that buoyed Linux in its ascent.

These factors are beginning to translate into real-world systems, such as Lamini's work to efficiently fine-tune LLMs on AMD chips. Its optimizations allow models to run faster and handle more data during training and inference. As the software and hardware continue to mature, AMD's position in AI silicon looks increasingly promising.

The combination of high-performance hardware, a mature software ecosystem, and favorable market trends places AMD at the forefront of AI and LLM technology. A recent survey of AI experts found declining computing costs to be the single most impactful factor affecting the pace of progress in AI. As falling costs continue to enable more complex models and computations, AMD's GPUs are poised to play an essential role in advancing Generative AI and AI systems more broadly.

Data Exchange Podcast

Software Meets Hardware: Enabling AMD for Large Language Models. Sharon Zhou and Greg Diamos are the founders of Lamini, a startup that recently developed a software layer that makes it easier to run workloads on AMD hardware, much as CUDA enables GPU computing on Nvidia chips.

Incentives are Superpowers: Mastering Motivation in the AI Era. Uri Gneezy is Professor of Economics and Strategy at UC San Diego, and author of our 2023 Book of the Year, “Mixed Signals: How Incentives Really Work”.

Improving Data Privacy in AI Systems Using Secure Multi-Party Computation

In financial services and beyond, accessing comprehensive data for building models and reports is critical yet challenging. When I worked in financial services, we aimed to use data to understand customers fully, but information siloed across separate systems made a complete view hard to achieve. This issue underscores the importance of data sharing, a theme so central that it inspired the name of my podcast, The Data Exchange.

As reliance on analytics and AI grows, data privacy becomes increasingly critical. Teams often need access to sensitive datasets containing personally identifiable information (PII) or proprietary data to build accurate models. However, regulations and policies restrict data sharing and direct access, creating roadblocks for data science and machine learning teams. Common challenges include lengthy waits for access, falling back on synthetic data with subpar accuracy, and the inability to collaborate across teams or with partners.

Limitations of Current Approaches

Various methods have been used to mitigate these risks. Techniques like hashing or adding noise to data are common but do not provide robust protection during collaborative computations. Federated learning, despite its decentralized approach, lacks formal privacy guarantees on its own and is vulnerable to attacks.
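Why isn't hashing enough? When identifiers come from a small or guessable space, such as phone numbers or email addresses, anyone holding the hashes can hash every plausible candidate and look for matches. The sketch below illustrates this with Python's standard `hashlib`; the phone-number format and range are hypothetical.

```python
import hashlib

def sha256_hex(value: str) -> str:
    """Hash a normalized identifier the way a naive 'anonymization' step might."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()

# Party A shares only hashed phone numbers, believing this hides them.
shared_hashes = {sha256_hex("555-0142"), sha256_hex("555-0199")}

def recover_from_hashes(hashes: set[str], candidates) -> list[str]:
    """A partner who can enumerate plausible inputs hashes each candidate
    and keeps the ones that match, recovering the original values."""
    return sorted(c for c in candidates if sha256_hex(c) in hashes)

# Enumerate every number in the hypothetical 555-0000..555-9999 range.
candidates = (f"555-{n:04d}" for n in range(10_000))
recovered = recover_from_hashes(shared_hashes, candidates)
print(recovered)  # ['555-0142', '555-0199']
```

A salt or key shared between collaborators, so that both sides can still join on the hashed column, doesn't change the picture for whoever holds it.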

Data clean rooms, which provide a digital vault for sharing and analyzing private data under strict privacy protocols, have emerged as a vital tool for sensitive data collaboration. However, vendor-provided clean rooms often limit analytical capabilities and tie users to vendor-specific tools and timelines, reducing user control.

By contrast, building clean rooms in-house offers greater flexibility, oversight, and long-term cost savings, while also fostering internal data and AI expertise. The tradeoff is that compliance depends on internal oversight rather than vendor support. A hybrid approach, combining rented and proprietary clean rooms, balances vendor expertise with control over core data storage and IP.

Traditional clean rooms risk exposing data once it leaves a protected environment, even when the data is obfuscated. Recent advances in secure multi-party computation (SMPC), however, pave the way for an open, decentralized software model that computes on encrypted data on-premises, eliminating the need to transfer data externally.

An Innovative Approach with Secure Multi-Party Computation

SMPC is a branch of cryptography that lets multiple parties jointly compute a function over their private inputs while keeping those inputs hidden, even during collaboration. It's akin to a group of people solving a puzzle together without revealing their individual pieces. Because the underlying data is never exposed, SMPC overcomes the limitations of traditional data privacy methods.
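A minimal sketch of one classic SMPC building block, additive secret sharing, shows how the puzzle analogy works in practice. Each party splits its private number into random shares that sum to it modulo a prime, so no single share reveals anything; each party then adds up the shares it holds, and only the combined total is revealed. The modulus, the three input values, and the single-process simulation are assumptions for illustration, and the sketch assumes honest-but-curious parties (who follow the protocol but try to learn from what they see).

```python
import secrets

PRIME = 2**61 - 1  # field modulus; all share arithmetic is done mod PRIME

def share(secret: int, n_parties: int) -> list[int]:
    """Split a secret into n additive shares that sum to it mod PRIME.
    Any n-1 of the shares are uniformly random and reveal nothing."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]

def secure_sum(private_inputs: list[int]) -> int:
    """Simulate n parties computing the sum of their inputs via secret sharing."""
    n = len(private_inputs)
    # Party i splits its input and sends share j to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received; it sees only random-looking numbers.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing the partial sums reveals the total, not any individual input.
    return sum(partial_sums) % PRIME

inputs = [120, 340, 95]  # hypothetical private values held by three parties
print(secure_sum(inputs))  # 555
```

Production protocols add secure channels, protection against malicious parties, and support for multiplication, which most machine learning computations require.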

Pyte, a startup specializing in SMPC, offers scalable, performant solutions that let teams work on real datasets without exposing sensitive information. Its tools are designed for compliance and governance, keeping data confidential and preventing leaks and copyright issues.

Pyte's SecureMatch platform enables the discovery of audience overlaps and data activation with partners, ensuring data security and control. PrivateML allows data scientists to securely experiment with machine learning models on real datasets without direct access to sensitive data.
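Audience-overlap discovery of this kind is commonly framed as private set intersection (PSI). The sketch below is a toy version of Diffie-Hellman-style PSI, not Pyte's implementation: each side blinds hashed identifiers with its own secret exponent, and because exponentiation commutes, only items present in both sets end up with matching double-blinded values. The prime modulus and hash-to-group mapping are simplifications chosen for readability and are not secure as written.

```python
import hashlib
import secrets

# Toy parameters for readability only: 2**127 - 1 is a Mersenne prime. This
# group and hash mapping are NOT secure; real PSI uses a vetted elliptic curve.
P = 2**127 - 1

def hash_to_group(item: str) -> int:
    """Map an identifier to a nonzero element modulo P."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1

def random_key() -> int:
    """Secret exponent in [1, P - 2]."""
    return secrets.randbelow(P - 2) + 1

def blind(values: list[int], key: int) -> list[int]:
    return [pow(v, key, P) for v in values]

def private_set_intersection(items_a: set[str], items_b: set[str]) -> set[str]:
    key_a, key_b = random_key(), random_key()  # each party keeps its key private
    ordered_a = sorted(items_a)

    # Round 1 (A -> B): A sends H(x)^a for each of its items.
    a_blinded = blind([hash_to_group(x) for x in ordered_a], key_a)

    # Round 2 (B -> A): B returns A's values raised to b, in the same order,
    # plus its own items blinded as H(y)^b.
    a_double = blind(a_blinded, key_b)
    b_blinded = blind([hash_to_group(y) for y in items_b], key_b)

    # Round 3 (A, locally): A raises B's values to a. Since (h^a)^b == (h^b)^a
    # mod P, an item held by both parties yields the same double-blinded value.
    b_double = set(blind(b_blinded, key_a))
    return {x for x, d in zip(ordered_a, a_double) if d in b_double}

brand = {"alice@example.com", "bob@example.com", "carol@example.com"}
publisher = {"bob@example.com", "carol@example.com", "dave@example.com"}
print(sorted(private_set_intersection(brand, publisher)))
# ['bob@example.com', 'carol@example.com']
```

A production design would use a vetted elliptic-curve group, shuffle the blinded values, and run the two roles on separate machines; here both parties are simulated in one function.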

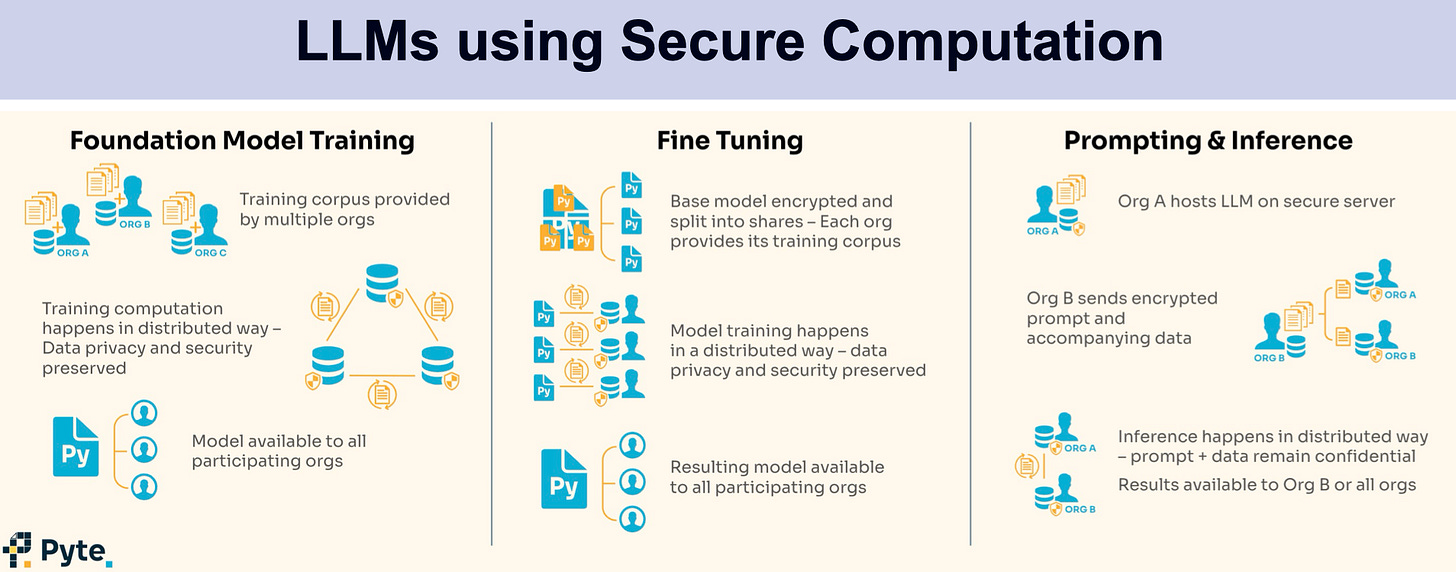

SMPC also enables important new LLM use cases involving sensitive or proprietary data from multiple organizations. For example, law firms, financial institutions, and healthcare companies could use LLMs to process confidential contracts, transactions, or medical records without disclosing the actual content to any third party. SMPC adds computational overhead, but for many regulated industries this is an acceptable tradeoff for the privacy guarantees it provides. More broadly, SMPC unlocks secure collaboration: multiple organizations could jointly fine-tune an LLM on their combined datasets to produce a superior model without ever sharing raw data with each other. The added overhead is small relative to the major gains in privacy, compliance, and expanded access to sensitive data.
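Joint fine-tuning across organizations typically requires combining model updates, such as gradients, without revealing any single organization's update. One well-known way to do that is pairwise-masking secure aggregation, sketched below as an illustration rather than a description of any specific product. Each pair of parties shares a random seed; one adds the derived mask and the other subtracts it, so the masks cancel in the sum. The fixed-point scale, the modulus, and the use of Python's non-cryptographic `random.Random` as a stand-in for a proper pseudorandom generator are all assumptions.

```python
import random
import secrets

MODULUS = 2**32   # arithmetic on masked values wraps around modulo 2**32
SCALE = 10_000    # fixed-point scale for encoding float updates as integers

def encode(vec: list[float]) -> list[int]:
    return [round(v * SCALE) % MODULUS for v in vec]

def decode(vec: list[int]) -> list[float]:
    # Values in the upper half of the ring represent negative numbers.
    return [(v - MODULUS if v >= MODULUS // 2 else v) / SCALE for v in vec]

def pairwise_mask(seed: int, length: int) -> list[int]:
    # Toy stand-in for a cryptographic PRG: both parties in a pair derive
    # the same mask from their shared seed.
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]

def masked_update(party: int, update: list[float], n_parties: int,
                  seeds: dict) -> list[int]:
    masked = encode(update)
    for other in range(n_parties):
        if other == party:
            continue
        mask = pairwise_mask(seeds[frozenset((party, other))], len(update))
        # The lower-indexed party adds the mask and the higher-indexed one
        # subtracts it, so each pair's masks cancel in the sum.
        sign = 1 if party < other else -1
        masked = [(m + sign * k) % MODULUS for m, k in zip(masked, mask)]
    return masked

def secure_aggregate(updates: list[list[float]]) -> list[float]:
    n = len(updates)
    # In practice each pair would agree on its seed via a key exchange.
    seeds = {frozenset((i, j)): secrets.randbits(64)
             for i in range(n) for j in range(i + 1, n)}
    masked = [masked_update(p, updates[p], n, seeds) for p in range(n)]
    # The aggregator sums masked vectors; it never sees an unmasked update.
    total = [sum(column) % MODULUS for column in zip(*masked)]
    return decode(total)

updates = [[0.5, -1.25], [0.25, 0.75], [-0.5, 0.125]]  # hypothetical gradients
print(secure_aggregate(updates))  # [0.25, -0.375]
```

The aggregator sees only masked vectors and the final sum. This sketch does not handle parties dropping out mid-round, which real secure-aggregation protocols must address.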

The Future of Privacy-Preserving Analytics

Pyte's solutions have already shown significant impact in financial services, enabling faster and more collaborative data science on sensitive data. Banks and brokerages have been able to reduce the time for accessing sensitive datasets from over six months to 1-6 months, and a leading consumer packaged goods company has been able to collaborate on a machine learning model for product discovery. Looking ahead, Pyte plans to integrate with various data platforms and introduce AutoML capabilities for collaborative machine learning, further enhancing the potential of SMPC in AI and analytics.

With privacy-preserving technologies like SMPC, the barriers to accessing and using sensitive data are coming down, empowering organizations to take full advantage of analytics and AI. This marks a significant step forward for privacy-preserving analytics and sets a new standard for how data is used in financial services and beyond.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Reddit, or Mastodon. This newsletter is produced by Gradient Flow.