Function Calling AI: Transforming Text Models into Dynamic Agents

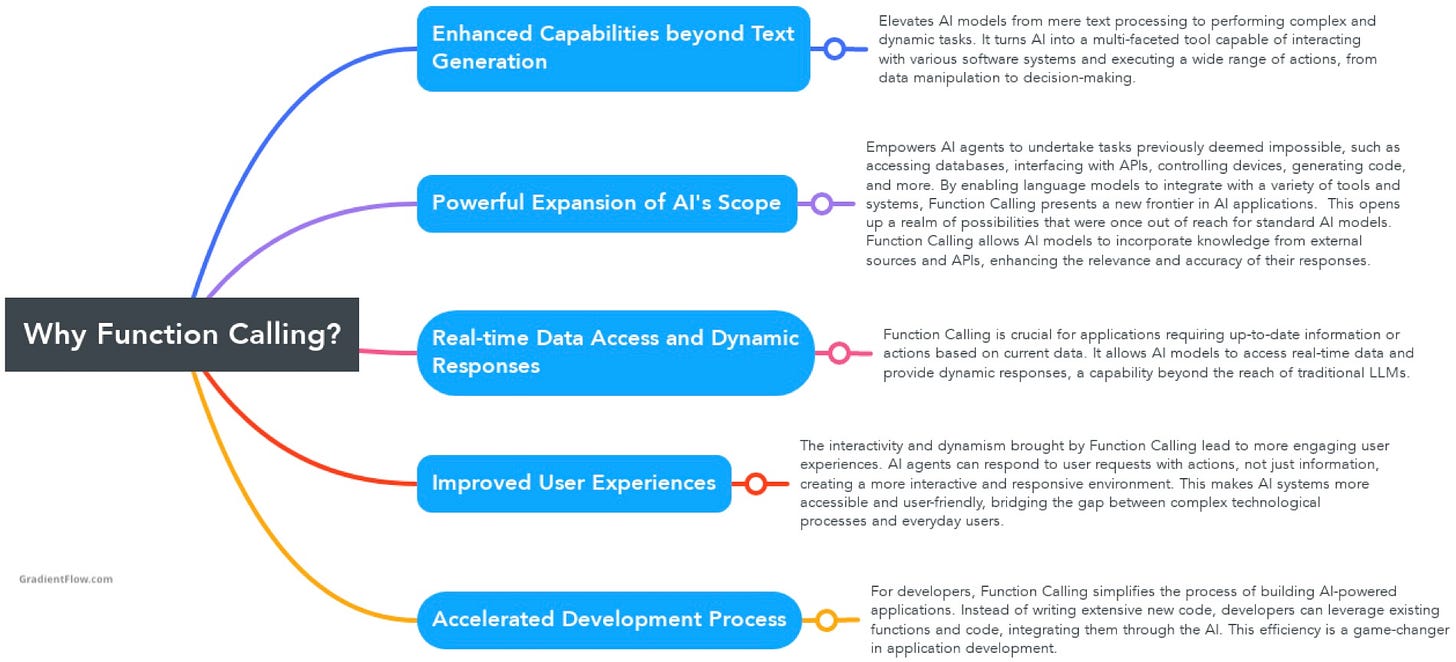

Function Calling is a transformative feature of large language models (LLMs) that significantly broadens the capabilities of these frontier models. It allows AI models to go beyond basic text generation and language understanding by interacting with and executing external functions.

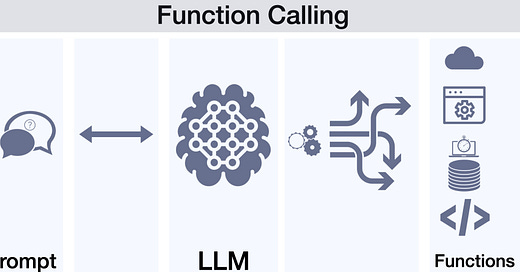

At its essence, Function Calling in AI entails the invocation or execution of functions within the AI model's context. This enables the model to carry out specific actions or operations based on the functions' implementation and parameters. For example, AI models can generate API calls and structure data outputs in response to defined functions within a request, which enhances their functionality.

Developers equip AI models with descriptions of available external functions that can be called. When users provide natural language prompts, the agent analyzes the request, determines if an external function could address the request, and recommends calling the relevant function. The user can approve calling the function, at which point the agent executes the function by passing the appropriate parameters and structuring the request in the correct API format.
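The describe, propose, approve, execute loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any provider's actual API: `get_order_status`, `fake_model`, and `run_turn` are hypothetical names, and the "model" is a stub that only looks for an order number so the example runs offline. The function description uses a JSON Schema style parameter block, which is a common convention.

```python
import json
from typing import Any, Callable, Dict, List, Optional


def get_order_status(order_id: str) -> dict:
    """Hypothetical backend function the developer exposes to the model."""
    return {"order_id": order_id, "status": "shipped"}


# Description handed to the model: a name, a summary, and a JSON Schema
# style definition of the parameters (illustrative format).
FUNCTION_SPECS: List[dict] = [
    {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]

# Maps the names the model may propose to real Python callables.
REGISTRY: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def fake_model(prompt: str, specs: List[dict]) -> Optional[dict]:
    """Stand-in for an LLM: returns a proposed call, or None.

    A real model would reason over `specs` and the prompt. This stub
    only looks for a token such as '#A17' and treats it as an order id.
    """
    for token in prompt.replace("?", " ").split():
        if token.startswith("#"):
            return {
                "name": "get_order_status",
                "arguments": json.dumps({"order_id": token[1:]}),
            }
    return None


def run_turn(prompt: str, approve: Callable[[dict], bool]) -> str:
    """One conversational turn: propose a call, ask for approval, execute."""
    proposal = fake_model(prompt, FUNCTION_SPECS)
    if proposal is None:
        return "No function needed; answer in plain text."
    if not approve(proposal):  # the user stays in control of execution
        return "Call declined by user."
    func = REGISTRY[proposal["name"]]
    args = json.loads(proposal["arguments"])
    return json.dumps(func(**args))


if __name__ == "__main__":
    print(run_turn("Where is order #A17?", approve=lambda call: True))
```

The `approve` callback stands in for whatever confirmation step an application uses, such as a button in a chat interface.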

When AI systems can take actions through external function calls driven by conversational prompts, they become active assistants that understand intents, leverage services, execute custom logic, and automate tasks by programmatically integrating with external systems. This technology has the potential to enable more seamless human-AI collaboration across many domains.

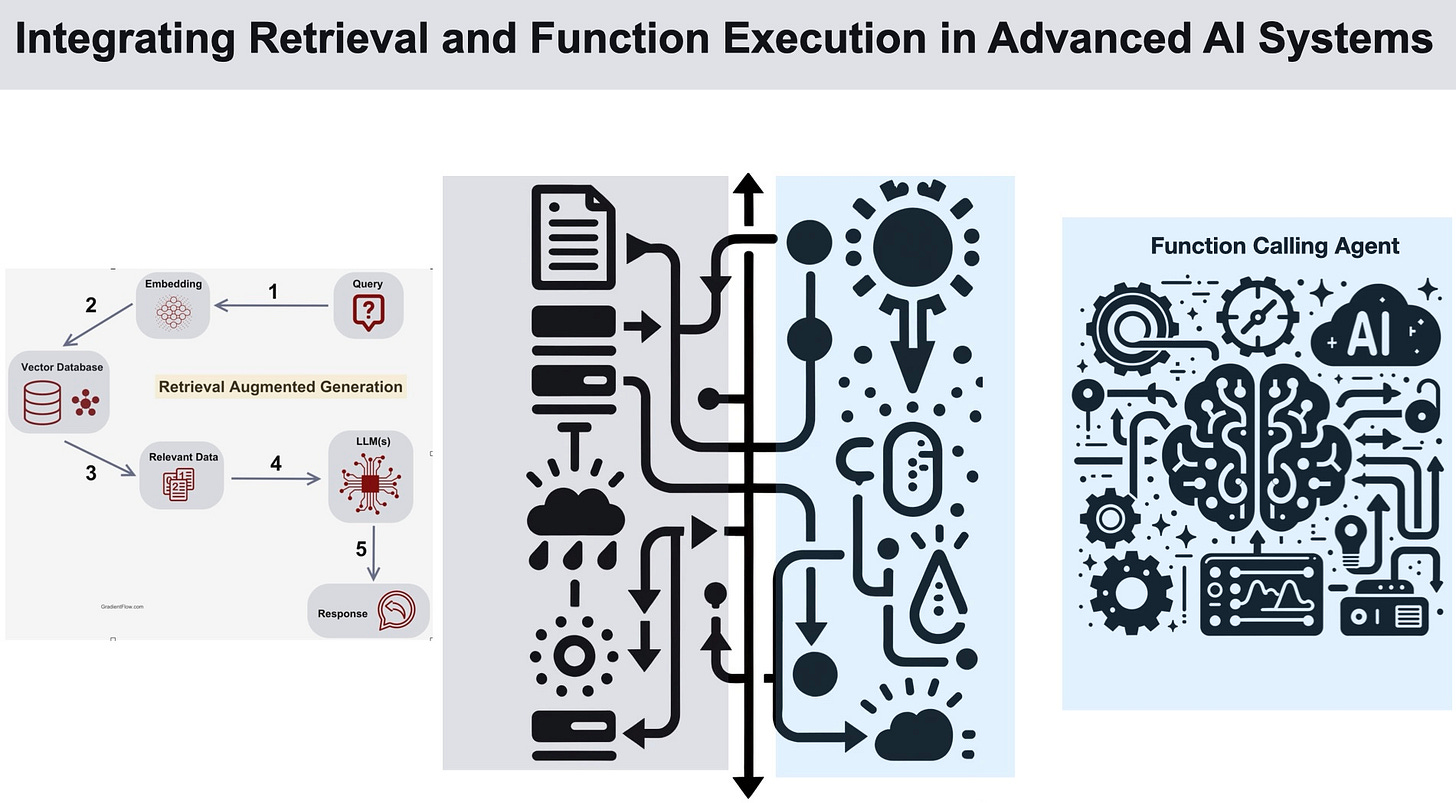

Retrieval Augmented Generation (RAG) and Function Calling are AI capabilities that enhance AI systems when used together. RAG enriches LLMs by pulling in relevant information from documents to inform text generation. On the other hand, Function Calling allows AI systems to access and utilize external APIs, data, and computational resources. These two capabilities, while distinct in their focus, can be integrated for more sophisticated AI applications.

For instance, RAG-equipped systems can use retrieved content to guide function calls, leading to more adaptable AI assistants. An AI assistant could first gather background information, and then use a weather API to provide a current weather forecast, combining both sources of information. Essentially, RAG supplies the necessary context and knowledge, while Function Calling offers access to external services and the ability to execute tasks. This combination enables the creation of advanced AI systems that not only understand situations but also actively seek out additional information and perform actions based on it.
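To make the combination concrete, here is a toy sketch in which retrieved text determines the argument of a function call. Everything in it is an assumption for illustration: the documents, the word-overlap retriever (a real system would likely use embeddings), and `get_weather`, which returns canned data instead of calling a real weather API.

```python
from typing import Dict, List, Optional

# Tiny in-memory document store (hypothetical content).
DOCUMENTS: List[str] = [
    "The Austin office hosts the quarterly offsite in an outdoor pavilion.",
    "The Berlin office hosts the annual hackathon indoors.",
]

KNOWN_CITIES = ("Austin", "Berlin")


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Toy retriever: rank documents by word overlap with the query."""
    query_words = set(query.lower().split())

    def overlap(doc: str) -> int:
        return len(query_words & set(doc.lower().rstrip(".").split()))

    return sorted(docs, key=overlap, reverse=True)[:k]


def get_weather(city: str) -> Dict[str, str]:
    """Stub for a weather API; returns canned data, not a live forecast."""
    canned = {"Austin": "sunny, 31 C", "Berlin": "rain, 14 C"}
    return {"city": city, "forecast": canned.get(city, "unknown")}


def answer(query: str) -> dict:
    """RAG step supplies context; the function call supplies current data."""
    context = retrieve(query, DOCUMENTS)[0]
    # Use the retrieved passage to choose the function argument.
    city: Optional[str] = next((c for c in KNOWN_CITIES if c in context), None)
    weather = get_weather(city) if city else None
    return {"context": context, "weather": weather}


if __name__ == "__main__":
    print(answer("Where is the quarterly offsite held?"))
```

The user never mentions a city. The retrieved passage does, and that is what drives the weather lookup.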

Function Calling transforms AI from simple text generators into dynamic assistants, paving the way for the next wave of intelligent applications. It facilitates access to real-time data, database queries, device control, and more, rendering AI systems more versatile, responsive, adaptive, and interactive.

For developers, Function Calling streamlines the application-building process by utilizing existing code, which greatly simplifies development. End-users benefit from more natural and conversational interfaces for complex tasks, making AI systems more intuitive and user-friendly compared to traditional menu navigation.

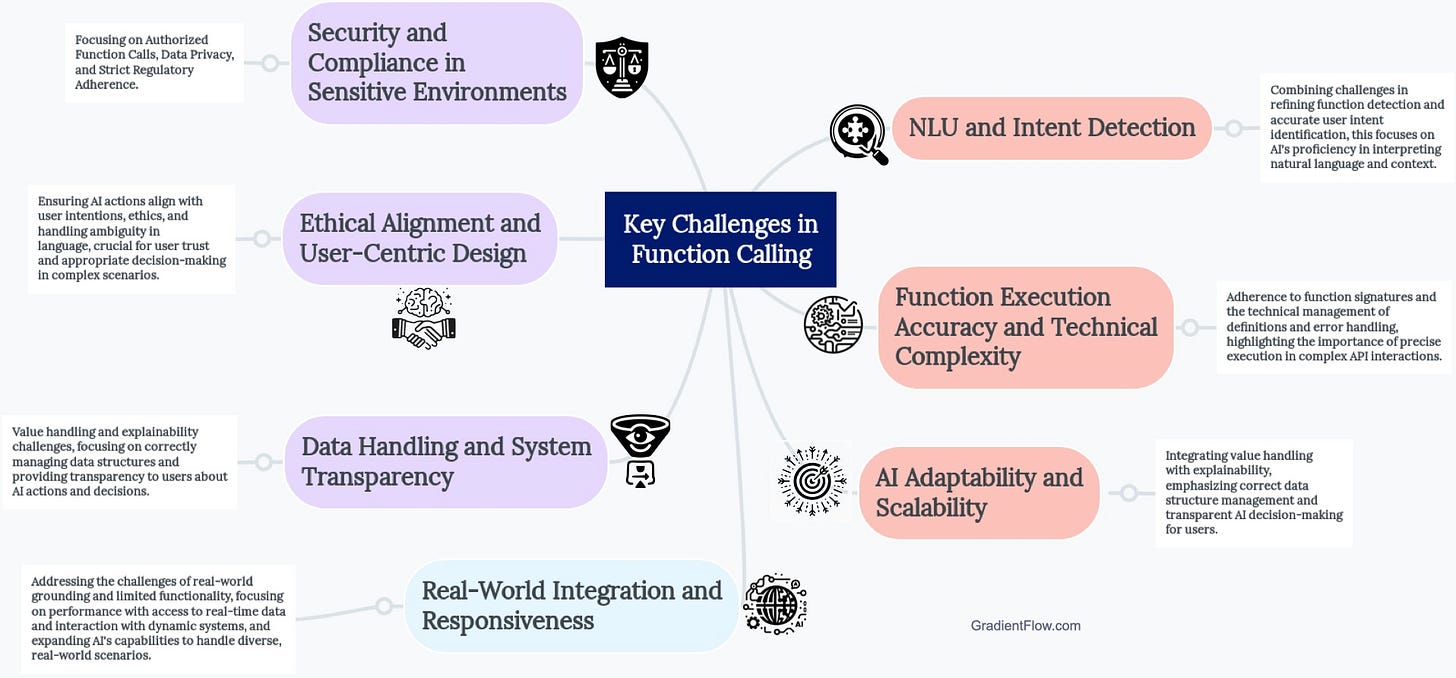

Despite its potential, challenges persist, including accurately interpreting user intentions, managing expanding function libraries, safeguarding against unauthorized functions, skillfully handling complex data, clearly explaining model actions, and integrating real-time data. The limitations of Function Calling in deep domain knowledge, historical analysis, and real-time responsiveness could significantly hinder its adoption for tasks requiring these capabilities. Progress in contextual understanding and integration with external APIs and datasets is essential for unlocking its full potential. Here are a few key challenges:

- Security risks from data breaches or disruptions due to external code execution, necessitating strict verification and software supply chain risk management.
- Alignment issues with ethical norms and user intentions, requiring rigorous testing and transparency.
- Technical limitations in mapping complex requests to functions and error handling, with actual capabilities often overestimated.
- Opacity in decision-making processes and auditing challenges.
- Potential misuse, either intentional or inadvertent, with significant societal implications for jobs, norms, and AI autonomy.

Addressing these requires advancements in security, alignment, technical infrastructure, and ethical, human-centered multi-disciplinary collaboration, which are critical for realizing the benefits of function calling and mitigating its risks.
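One small, practical piece of the security and auditing picture is a guard between the model's proposed call and actual execution. The sketch below is one possible shape for such a guard, not a complete security solution. The function names, the `POLICY` table, and the approval flag are all hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple


def read_invoice(invoice_id: str) -> str:
    return f"invoice {invoice_id}: paid"


def delete_invoice(invoice_id: str) -> str:
    return f"invoice {invoice_id}: deleted"


# name -> (callable, expected argument types, requires human approval)
POLICY: Dict[str, Tuple[Callable[..., Any], Dict[str, type], bool]] = {
    "read_invoice": (read_invoice, {"invoice_id": str}, False),
    "delete_invoice": (delete_invoice, {"invoice_id": str}, True),
}

# Every decision is recorded so calls can be audited later.
AUDIT_LOG: List[Tuple[str, str]] = []


def guarded_call(name: str, args: Dict[str, Any], approved: bool = False) -> Any:
    """Execute a model-proposed call only if it passes the policy checks."""
    if name not in POLICY:
        AUDIT_LOG.append(("rejected", name))
        raise PermissionError(f"function not allowlisted: {name}")
    func, schema, needs_approval = POLICY[name]
    # Reject missing or unexpected arguments, then check types.
    if set(args) != set(schema):
        AUDIT_LOG.append(("rejected", name))
        raise ValueError(f"expected arguments {sorted(schema)}, got {sorted(args)}")
    for key, expected_type in schema.items():
        if not isinstance(args[key], expected_type):
            AUDIT_LOG.append(("rejected", name))
            raise TypeError(f"argument {key!r} must be {expected_type.__name__}")
    if needs_approval and not approved:
        AUDIT_LOG.append(("blocked", name))
        raise PermissionError(f"{name} requires explicit approval")
    AUDIT_LOG.append(("executed", name))
    return func(**args)


if __name__ == "__main__":
    print(guarded_call("read_invoice", {"invoice_id": "42"}))
    try:
        guarded_call("delete_invoice", {"invoice_id": "42"})
    except PermissionError as err:
        print("blocked:", err)
    print(AUDIT_LOG)
```

The allowlist addresses unauthorized functions, the approval flag keeps privileged actions behind a human decision, and the log gives auditors a trail to inspect.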

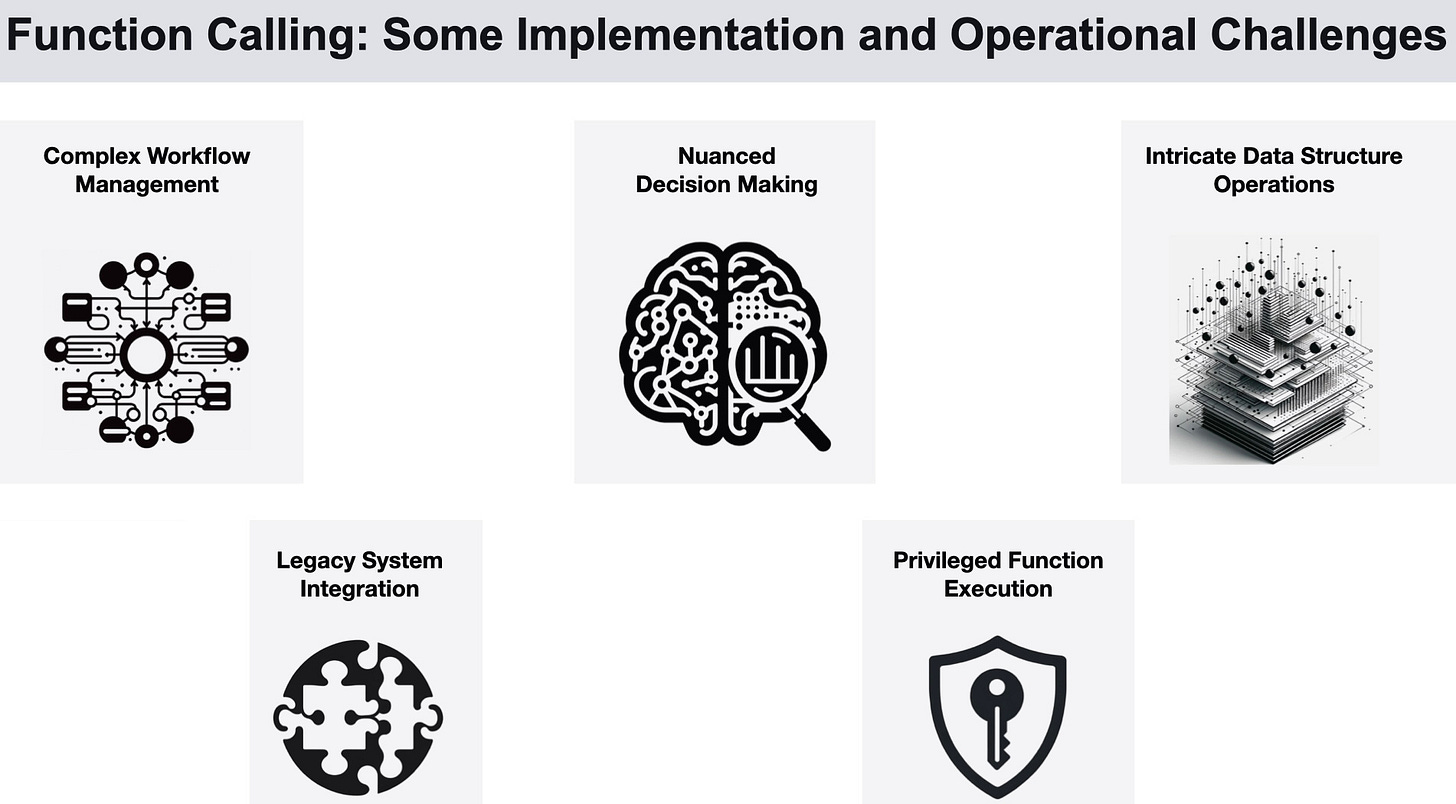

Invoking predefined functions and APIs poses challenges in complex, nuanced, or dynamic real-world applications. Specifically, Function Calling struggles with:

- Managing complex workflows with multiple interdependent steps, where tracking context and state is critical.
- Interpreting function outputs and external data to make nuanced decisions. It lacks higher reasoning skills for deep data analysis.
- Operating on evolving, intricate data structures like graphs and nested objects. Its simplistic world model limits usefulness.
- Integrating across messy, ambiguous legacy systems. It falters when interfaces are poorly documented.
- Safely executing privileged functions. Without sophisticated judgment, misuse can cause harm.
- Meeting rigorous scalability, availability and performance needs. Reliability is unproven in mission-critical systems.

Although a remarkable technical step forward, Function Calling is still hamstrung by its restricted scope and susceptibility to errors. It lacks the versatility to handle applications demanding nuance, judgment, and adaptability. As a result, many complex real-world scenarios, multi-step workflows in particular, remain hard to implement today, even as progress continues.
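To see why interdependent steps are harder than a single call, consider a sketch where each function depends on the output of the previous one. The orchestration code, not the model, has to carry state between calls and stop cleanly when a dependency is missing. The travel-booking functions and the `PLAN` structure are hypothetical and return canned values.

```python
from typing import Any, Callable, Dict, List, Tuple


def search_flights(destination: str) -> dict:
    return {"flight_id": "F100", "destination": destination}


def reserve_seat(flight_id: str) -> dict:
    return {"reservation": f"{flight_id}-12A"}


def send_confirmation(reservation: str) -> dict:
    return {"message": f"Confirmed {reservation}"}


# Each step: (function, state keys it needs as keyword arguments).
Step = Tuple[Callable[..., dict], List[str]]
PLAN: List[Step] = [
    (search_flights, ["destination"]),
    (reserve_seat, ["flight_id"]),
    (send_confirmation, ["reservation"]),
]


def run_plan(plan: List[Step], initial_state: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in order, threading shared state from one call to the next."""
    state = dict(initial_state)
    history: List[str] = []
    for func, needed in plan:
        missing = [key for key in needed if key not in state]
        if missing:
            # Stop instead of calling a function with incomplete inputs.
            history.append(f"{func.__name__}: missing {missing}")
            break
        state.update(func(**{key: state[key] for key in needed}))
        history.append(f"{func.__name__}: ok")
    state["history"] = history
    return state


if __name__ == "__main__":
    print(run_plan(PLAN, {"destination": "Lisbon"}))
```

Even this fixed three-step plan needs explicit bookkeeping. Letting a model choose and reorder steps dynamically multiplies the ways state can go missing or stale.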

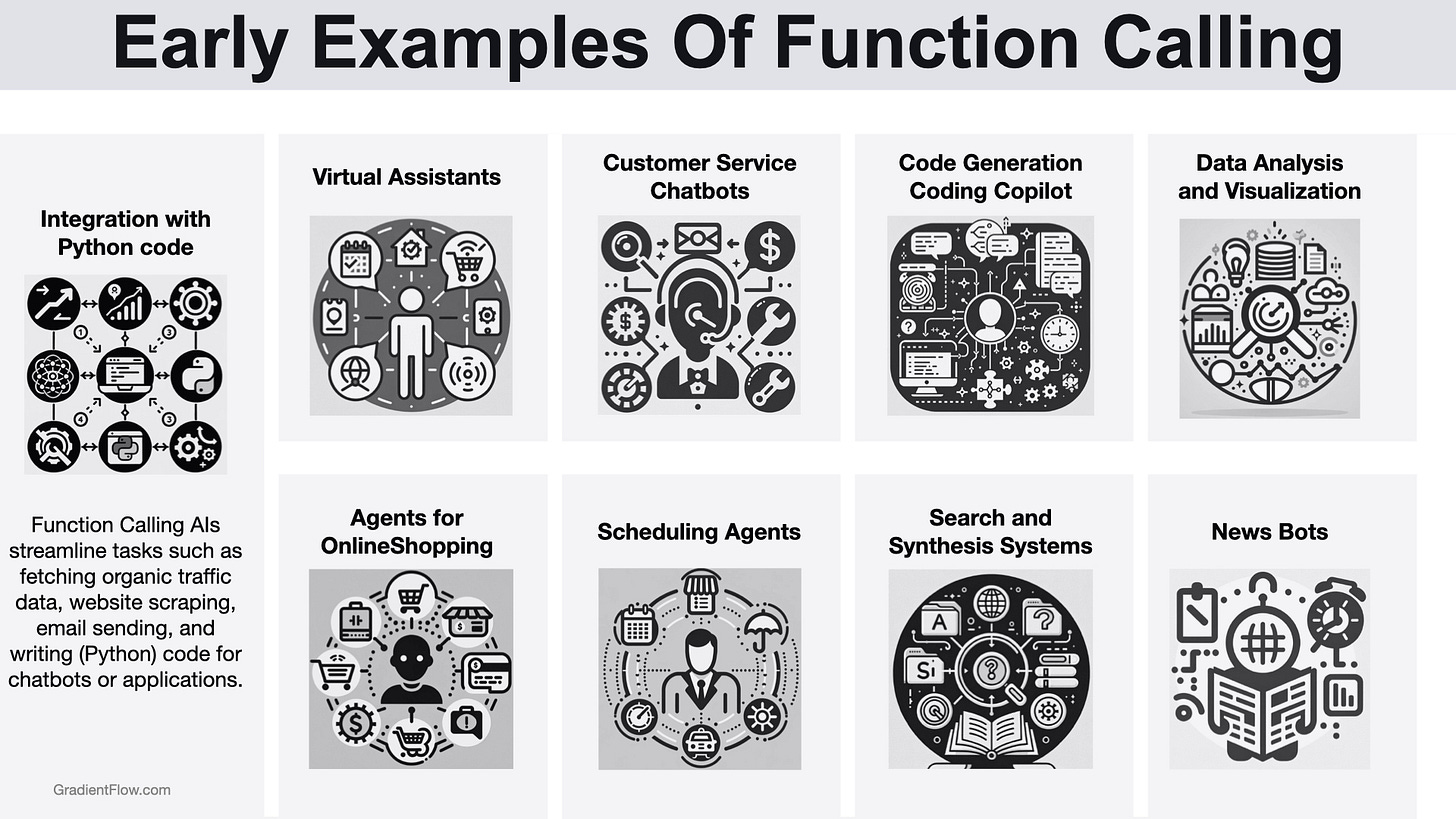

Early applications include customer service chatbots that can resolve issues more effectively, data analysis assistants that generate updated visualizations on demand, and code generation tools that save developers time. Other promising use cases are AI writing assistants that cite sources, scheduling agents that adapt to external data, voice-controlled smart home managers, and automated virtual assistants for tasks like travel booking. As AI progresses, Function Calling will enable models to orchestrate increasingly complex chains of external services through natural language instructions. This makes the technique valuable across many real-world applications.

OpenAI has been at the forefront of advancing Function Calling AI capabilities in models like GPT-3.5 Turbo and the latest GPT-4, while Google AI offers similar features through Vertex AI. Nexusflow's NexusRaven-V2, a 13 billion parameter open source model, sets new standards in zero-shot function calling. I expect most major AI providers to incorporate Function Calling, enabling models to execute functions and APIs from natural language prompts. In my view, open source benchmarks and leaderboards will spur advances, clearing the path for these technologies to permeate consumer and business applications.

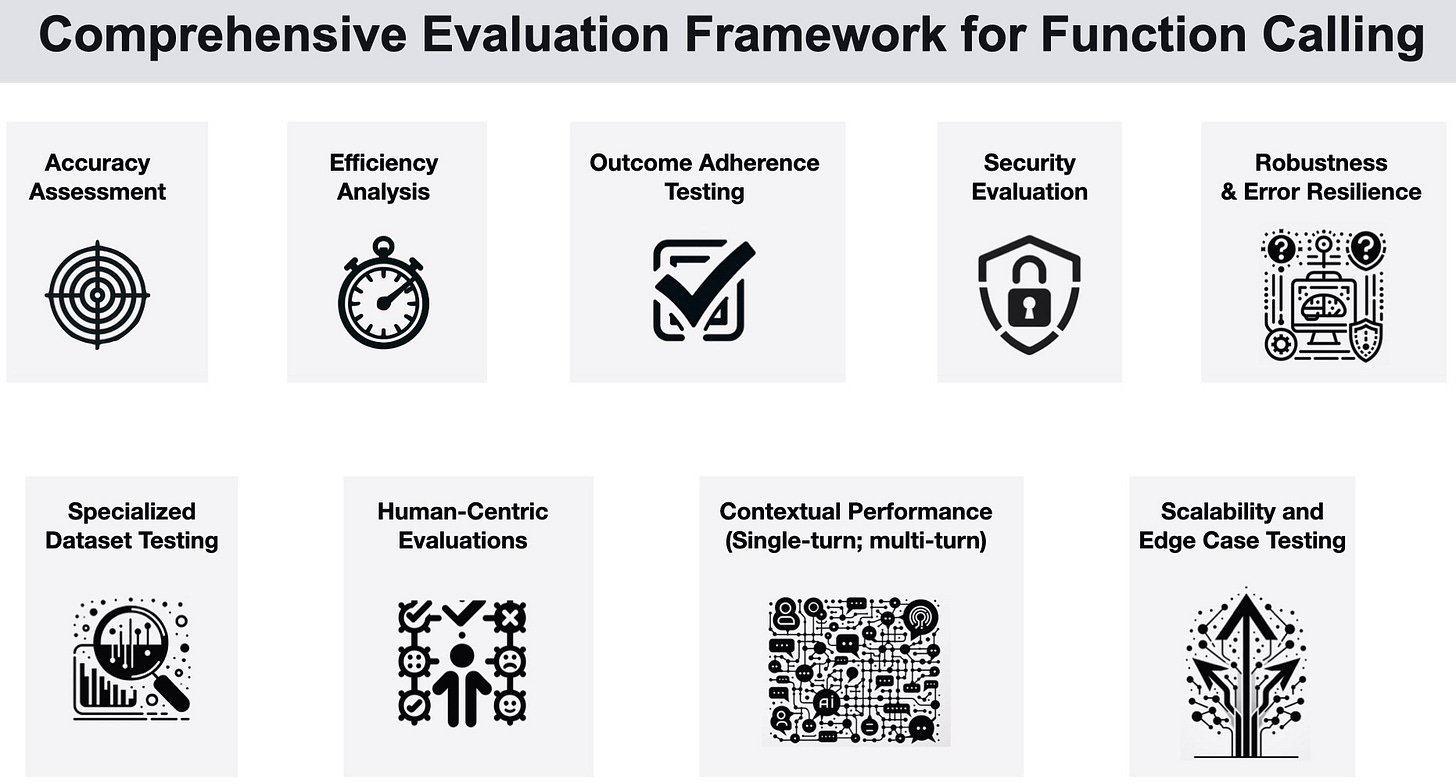

Testing checks that Function Calling AIs reliably interpret requests, select functions, populate arguments, and structure outputs. Specialized datasets spanning single-turn and multi-turn scenarios measure precision against human benchmarks and models like GPT-4. Additional testing scrutinizes scalability, security, robustness, and integration capabilities. Human evaluations gauge task success and user experience. This comprehensive approach assesses real-world performance on diverse tasks, both quantitatively and qualitatively, and builds confidence in reliability across applications. Automated testing and human insights pinpoint strengths and areas for improvement.
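A minimal automated check along these lines compares a model's proposed calls with labeled expectations and reports two numbers: how often the right function was selected, and how often the whole call (name and arguments) matched exactly. The test cases and `stub_model` below are made up for illustration. The stub deliberately leaves one argument as a string so that the two metrics diverge.

```python
from typing import Callable, Dict, List, Optional

# Labeled examples: the expected call, or None when no call should be made.
TEST_CASES: List[dict] = [
    {
        "prompt": "What's the weather in Paris?",
        "expected": {"name": "get_weather", "arguments": {"city": "Paris"}},
    },
    {
        "prompt": "Convert 10 USD to EUR",
        "expected": {
            "name": "convert_currency",
            "arguments": {"amount": 10, "source": "USD", "target": "EUR"},
        },
    },
    {"prompt": "Tell me a joke", "expected": None},
]


def stub_model(prompt: str) -> Optional[dict]:
    """Stand-in for the system under test (hypothetical, rule-based)."""
    if "weather" in prompt.lower():
        city = prompt.rstrip("?").split()[-1]
        return {"name": "get_weather", "arguments": {"city": city}}
    if prompt.lower().startswith("convert"):
        _, amount, source, _, target = prompt.split()
        # Deliberate flaw: `amount` stays a string instead of a number.
        return {
            "name": "convert_currency",
            "arguments": {"amount": amount, "source": source, "target": target},
        }
    return None


def evaluate(model: Callable[[str], Optional[dict]], cases: List[dict]) -> Dict[str, float]:
    """Score function selection and exact-match accuracy over the cases."""
    name_hits = 0
    exact_hits = 0
    for case in cases:
        predicted = model(case["prompt"])
        expected = case["expected"]
        predicted_name = predicted["name"] if predicted else None
        expected_name = expected["name"] if expected else None
        name_hits += int(predicted_name == expected_name)
        exact_hits += int(predicted == expected)
    total = len(cases)
    return {"function_accuracy": name_hits / total, "exact_match": exact_hits / total}


if __name__ == "__main__":
    print(evaluate(stub_model, TEST_CASES))
```

Here the stub selects the right function every time but gets only two of the three calls exactly right, the kind of gap that separate metrics are meant to expose.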

New tools like MemGPT, which utilize Function Calling in LLMs, herald a breakthrough in AI's ability to manage vast data and extended interactions. MemGPT incorporates operating system principles to overcome LLMs' context constraints, efficiently handling complex tasks like in-depth document analysis and advanced conversational agents. This innovation signifies a leap towards AI systems with human-like long-term memory and sophisticated data processing capabilities, opening new avenues in AI applications for complex, context-heavy scenarios. Function Calling is crucial in enabling this progress, as it allows intricate memory and control flow management - essential capabilities for these advanced uses.
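The general idea of managing memory through function calls can be illustrated with a toy example. To be clear, this is not MemGPT's code or API. `PagedMemory`, `remember`, and `archive_search` are hypothetical names, and the sketch only shows the operating-system-style pattern of a small "in-context" buffer backed by larger archival storage that can be searched on demand.

```python
from collections import deque
from typing import Deque, List


class PagedMemory:
    """Toy two-tier memory: a bounded context plus an unbounded archive."""

    def __init__(self, context_limit: int = 3) -> None:
        self.context: Deque[str] = deque()  # what the model "sees"
        self.archive: List[str] = []        # evicted messages live here
        self.context_limit = context_limit

    def remember(self, message: str) -> None:
        """Add a message, paging the oldest ones out when the context is full."""
        self.context.append(message)
        while len(self.context) > self.context_limit:
            self.archive.append(self.context.popleft())

    def archive_search(self, keyword: str) -> List[str]:
        """A function the model could call to pull old facts back in."""
        return [m for m in self.archive if keyword.lower() in m.lower()]


if __name__ == "__main__":
    memory = PagedMemory(context_limit=2)
    memory.remember("User is allergic to peanuts.")
    memory.remember("User asked about flights to Lisbon.")
    memory.remember("User prefers window seats.")
    print(list(memory.context))
    print(memory.archive_search("allergic"))
```

In a function-calling setup, `archive_search` would be one of the functions described to the model, so the model itself decides when it needs to look something up from earlier in a long interaction.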

I’m thrilled about the rapid advancements in Function Calling. It's becoming increasingly integral across various industries, as demonstrated by innovative open-source projects like NexusRaven-V2.

I envision a future where AI agents can autonomously execute the necessary functions to assist humans in real-time, enhancing their capabilities. These AI applications will adeptly handle multi-step reasoning across a range of domains by utilizing current data. As our proficiency with external functions deepens, our collaborative efforts with AI will become more effective, helping us solve increasingly complex problems. It's an exciting time to be working on AI applications!

Data Exchange Podcast

AI Co-Pilots in Action: Transforming Function Calling in Cybersecurity. Jian Zhang is the co-founder and CTO of Nexusflow AI, a startup that recently released NexusRaven-V2. This open source 13 billion parameter language model achieves state-of-the-art performance on zero-shot function calling.

The Convergence of Biology and AI. Dmitriy Ryaboy is the VP of AI Enablement at Ginkgo Bioworks, a synthetic biology startup that leverages machine learning and AI to develop innovative applications across industries. The discussion highlights the role of large language models (LLMs) in biology, especially in protein engineering and DNA synthesis.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Mastodon, or Post. This newsletter is produced by Gradient Flow.
