Embed Retrieve Win

The Vector Database Index

If you work with text or images, chances are embeddings are already a key part of your machine learning and analytic pipelines. Embeddings are low-dimensional spaces into which higher-dimensional vectors can be mapped. They can represent many kinds of data, whether a piece of text, an image or audio snippet, or a logged event. Embeddings capture some of the semantics of the inputs and place inputs that are semantically similar near each other in the embedding space. Because comparing compact vectors is far less work than comparing raw inputs, embeddings can make AI applications much faster and cheaper without sacrificing quality.

Vector databases are designed specifically to deal with vector embeddings. In a sense, vector databases are what you get when you embed your entire database. In a recent post with Leo Meyerovich of Graphistry, we explore the world of vector databases and compare popular systems using an index that measures popularity.
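To make the idea concrete, here is a minimal sketch of the core operation a vector database serves: finding the stored embeddings nearest to a query embedding. The three-dimensional vectors, the item names, and the helper functions `cosine_similarity` and `top_k` are all made up for illustration; real embeddings come from a trained model and have many more dimensions, and production systems typically use approximate indexes rather than the exhaustive scan shown here.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the names of the k stored vectors most similar to the query.

    This is an exhaustive (brute-force) scan over every stored vector.
    """
    scored = [(cosine_similarity(query, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]

# Hypothetical embeddings, invented for this example only.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "truck": [0.0, 0.1, 0.9],
}

query = [0.85, 0.15, 0.05]  # a made-up query vector that sits near the animal items
print(top_k(query, EMBEDDINGS, k=2))
```

With these toy vectors, the two animal items rank ahead of "truck" because their embeddings point in nearly the same direction as the query. A dedicated vector database adds what this sketch leaves out: indexing structures that avoid scanning every vector, plus storage, filtering, and updates.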

Since embeddings increasingly play a crucial role in many machine learning pipelines, what is your organization's strategy for AI embeddings? Do specialized databases and even hardware for vectors make sense in the long run? This is a short overview of a capability that is vital to modern search engines, recommendation systems, and other information retrieval applications.

Data Exchange podcast

Machine Learning Integrity: Yaron Singer is Professor of Computer Science at Harvard University and the CEO of Robust Intelligence, a company building tools to help manage and mitigate risks associated with machine learning models and applications. Their tools integrate seamlessly into the ML lifecycle and combat model failures.

Synthetic data technologies can enable more capable and ethical AI: Yashar Behzadi is the CEO & Founder of Synthesis AI, a startup that uses synthetic data technologies to enable teams building AI applications, as well as gaming and metaverse applications. Synthetic generation tools can replicate data for computer vision with high fidelity using recent developments in machine learning and the visual effects industry.

Data & Machine Learning Tools and Infrastructure

T-Mobile - Why we migrated to a Data Lakehouse on Delta Lake: Architectural transition stories, particularly from well-known companies, are perennial audience favorites at conferences. Audiences love hearing companies discuss how they deploy new tools and how they evaluate different options, and this post is an excellent example. By moving from a data lake to a lakehouse, T-Mobile is enabling users to adopt advanced analytics and derive new insights easily.

ML Triptych - Ray, Kubeflow, k8s: I'm hearing about more machine learning platforms built on top of Ray. We might be approaching a tipping point where Ray becomes the default choice for people who want to build ML platforms. In this article, members of Google’s Kubernetes team explain how Kubeflow and Ray can be assembled into a seamless self-managed machine learning platform.

Amazon’s decade-plus long journey toward a unified forecasting model: While time-series modeling may not have the cachet of NLP and computer vision, I have always appreciated the importance and challenges of forecasting at scale. This post describes how Amazon gradually switched from statistical models that relied on hand-crafted features to neural models with decoder-encoder attention mechanisms that improve forecasting accuracy and decrease the volatility of forecasts.

[Image: Blocks outside The Source in Sioux Falls by Ben Lorica.]

Generative Models

Generative models learn to model the true data distribution p(x) from observed samples x. Rather than serving traditional ML tasks like clustering, prediction, and classification, generative models with esoteric names like diffusion, VAEs, and GANs (along with related concepts such as the ELBO, the evidence lower bound used to train some of them) have recently been used to enable exploration, creation, and creative expression.

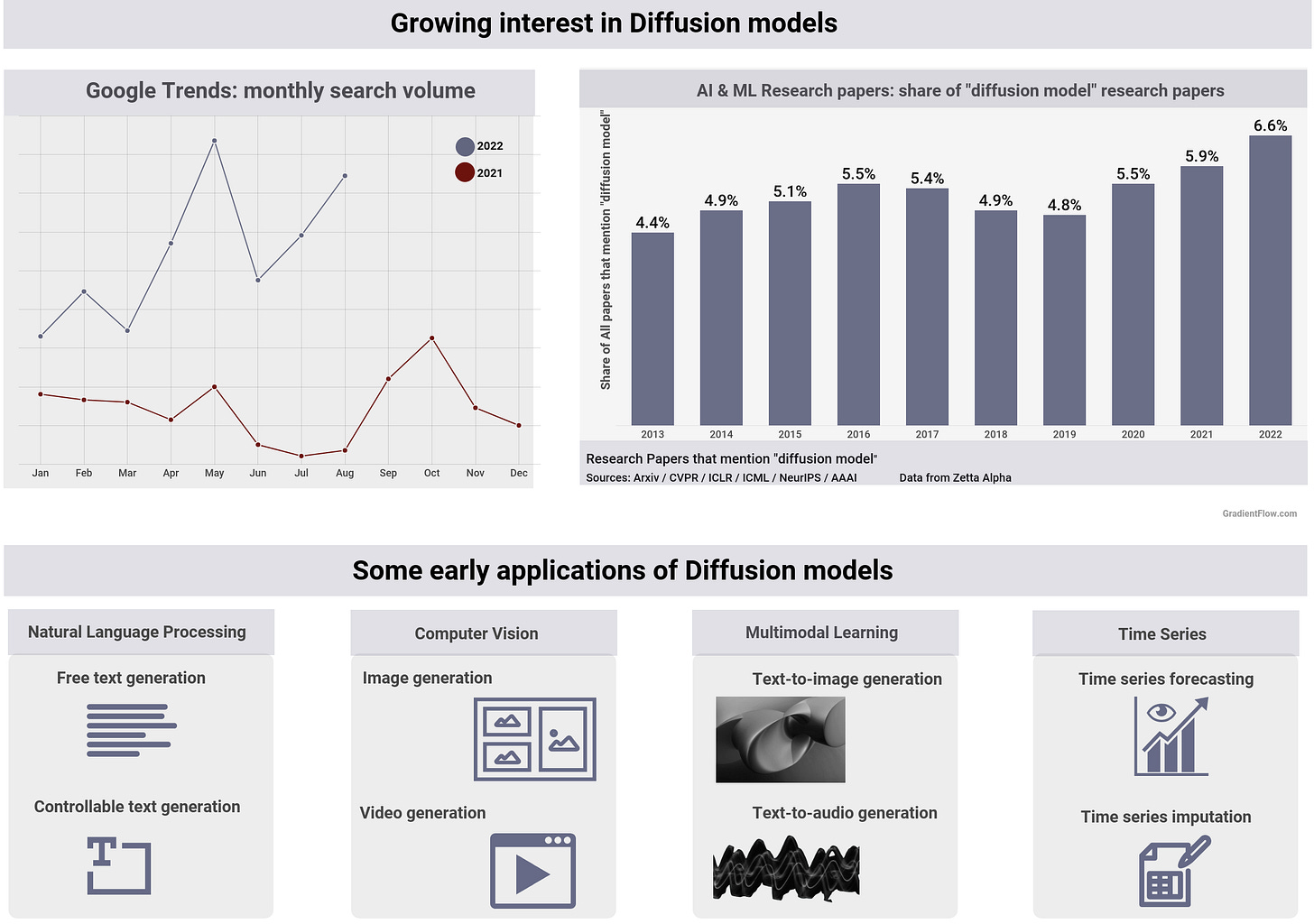

Diffusion models have shown incredible capabilities as generative models, but what really caught people's attention was their application in state-of-the-art text-to-image models (text-conditioned image generators) such as DALL-E 2 and Imagen. Worldwide search volume for diffusion models went up 60% from 2020 to 2021.

Research activity has been a good leading indicator for AI products and services. By that measure, diffusion models are worth watching: they are receiving considerable attention in the research community, and the percentage of papers that mention them continues to rise (projected to be one in fifteen papers in 2022). In fact, implementations of diffusion and other generative models are already becoming more widely available to developers, and I expect to see more products and services based on them soon.

If you want to explore something new (a side project, a work project, or even a startup), more tools are available to facilitate your venture. I think the timing is perfect: platforms and tools are steadily getting better, while applications are still in their infancy.

2022 NLP Summit is next week

This year's program will cover many real-world use cases, updates on major open source projects, and cutting-edge research from OpenAI, Hugging Face, Primer, and Bloomberg. If you work in NLP and text, you need to attend this FREE online conference.

If you enjoyed this newsletter, please support our work by encouraging your friends and colleagues to subscribe.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on Twitter @BigData. This newsletter is produced by Gradient Flow.