Decoding Apple's AI Ambitions

Deciphering Apple's AI Strategy and Priorities

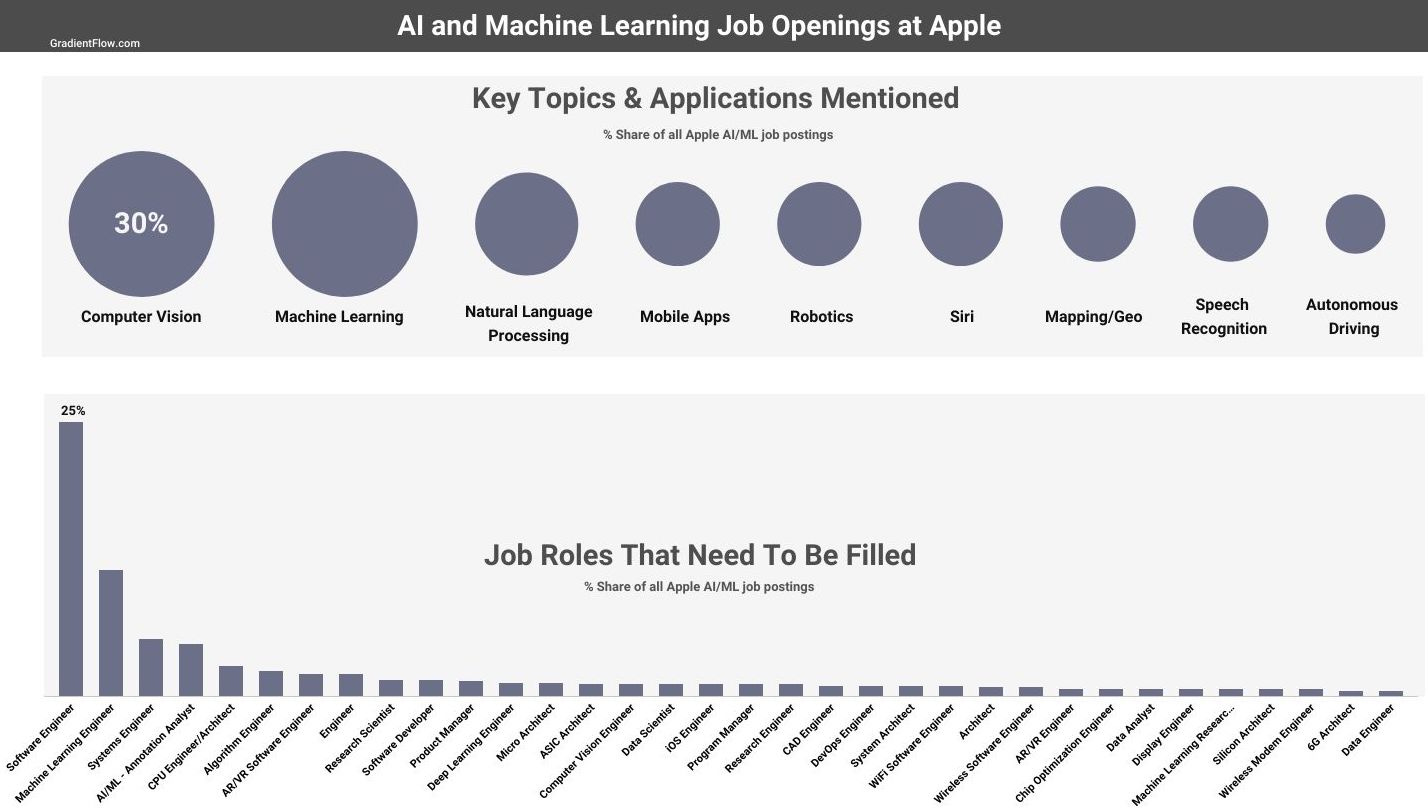

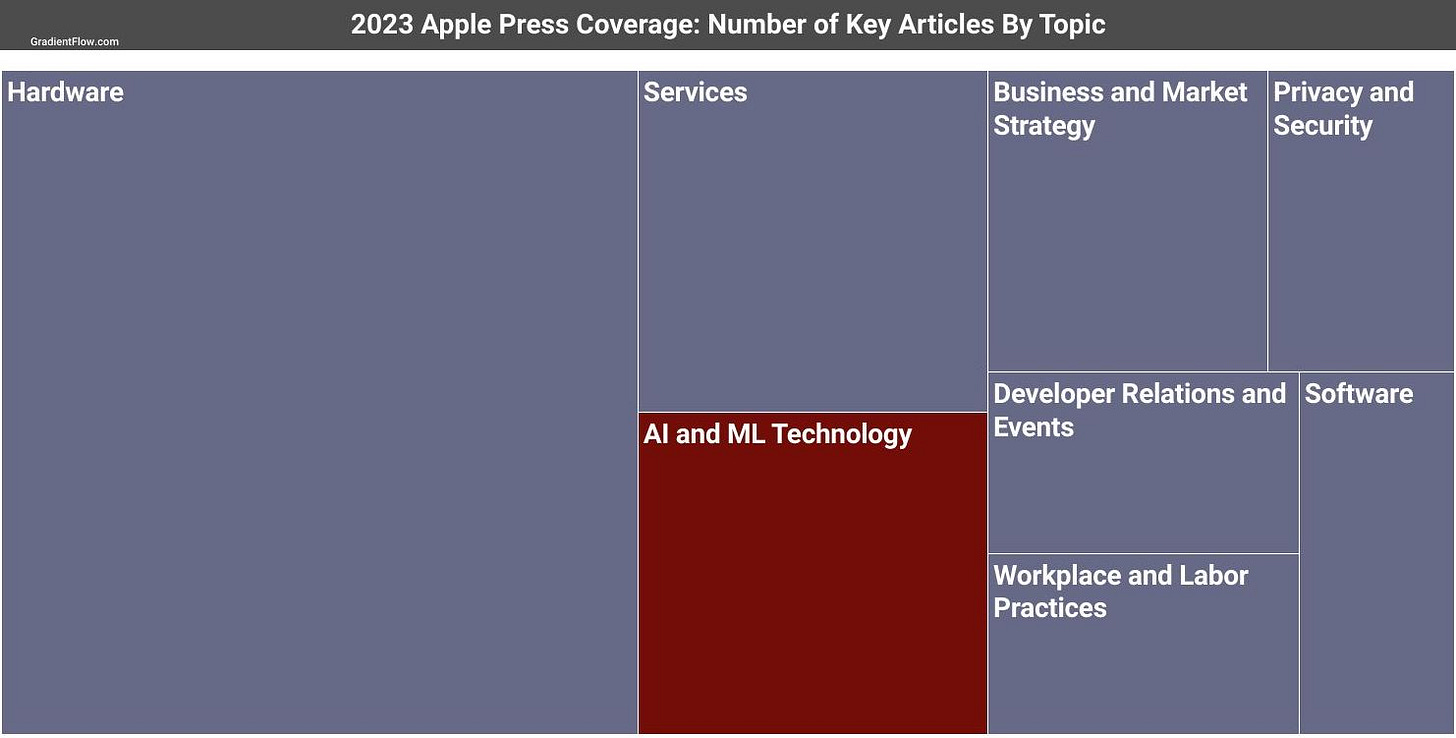

As the world's most valuable company, Apple's priority areas and investment strategies draw attention globally. Curious about the company’s moves in AI, I recently analyzed online job postings from Apple in the US, Europe, and APAC, which I extracted in mid-June 2023. These postings specifically mention [AI or machine learning or deep learning], and offer insights into the company's current priority areas within these fields. While Apple's AI announcements may not be as flashy as those from Google, Meta, OpenAI, Microsoft, or a cohort of startups, their job postings reveal a significant commitment to this field.
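The filtering step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual analysis pipeline: the `postings` records, their field names, and the helper functions `mentions_ai` and `count_terms` are all hypothetical, since the underlying dataset is not part of this newsletter.

```python
import re
from collections import Counter

# Hypothetical sample postings (assumption: each has a title and a description).
postings = [
    {"title": "Machine Learning Engineer, Siri",
     "description": "Build deep learning models with PyTorch."},
    {"title": "Retail Specialist",
     "description": "Help customers in store."},
    {"title": "Computer Vision Research Scientist",
     "description": "Apply machine learning to camera features."},
]

# Whole-word match for the three search terms, case-insensitive.
AI_PATTERN = re.compile(r"\b(AI|machine learning|deep learning)\b", re.IGNORECASE)


def mentions_ai(posting):
    """Return True if the title or description mentions one of the search terms."""
    text = f"{posting['title']} {posting['description']}"
    return AI_PATTERN.search(text) is not None


def count_terms(selected, terms):
    """Count how many postings mention each term (case-insensitive substring match)."""
    counts = Counter()
    for posting in selected:
        text = f"{posting['title']} {posting['description']}".lower()
        for term in terms:
            if term.lower() in text:
                counts[term] += 1
    return counts


ai_postings = [p for p in postings if mentions_ai(p)]
term_counts = count_terms(ai_postings, ["PyTorch", "Siri", "Computer Vision"])
```

The word-boundary pattern keeps "Retail" from matching "AI", which a plain substring search would get wrong.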

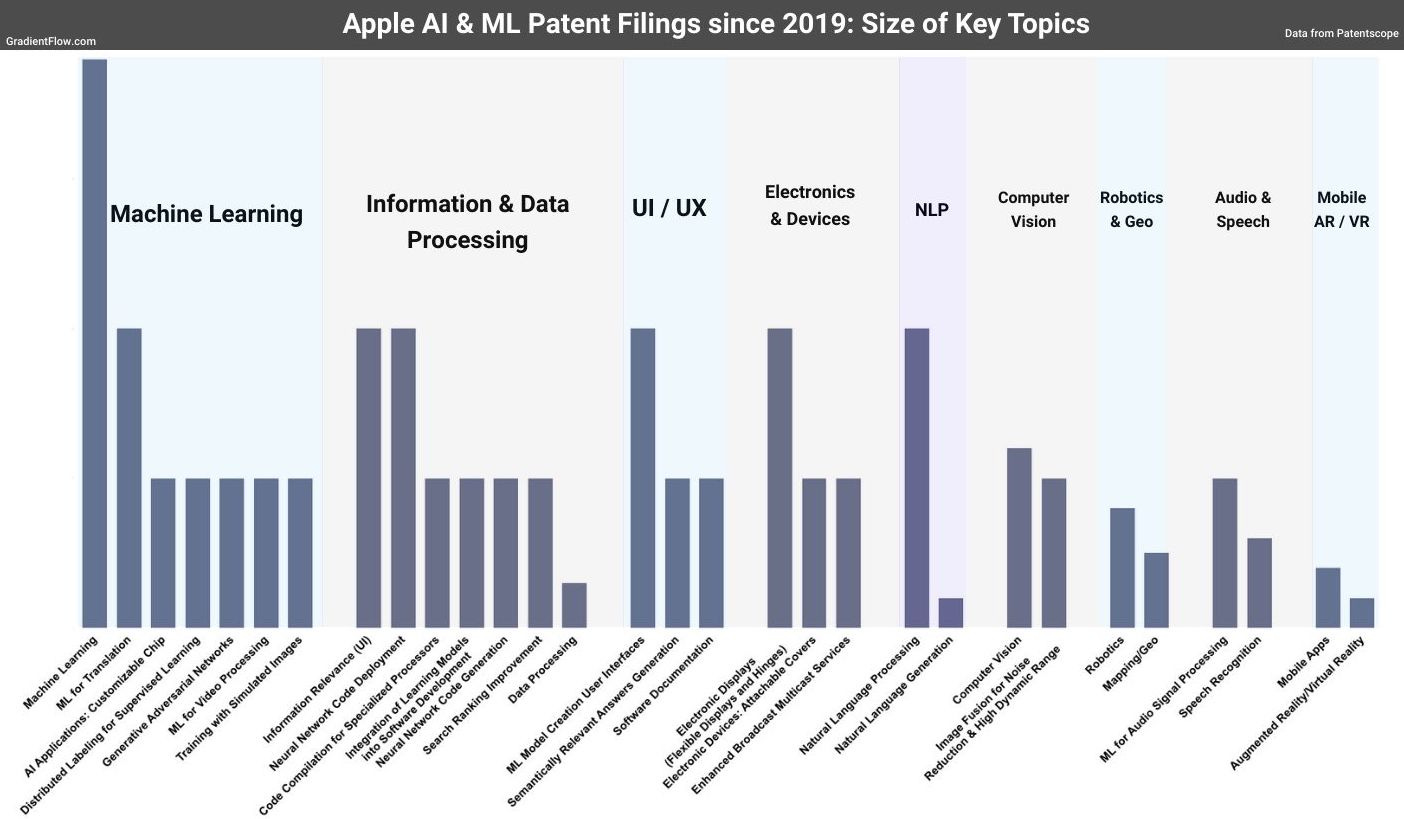

Apple’s job postings underscore the significant user benefits the company anticipates from AI. They show a keen interest in Machine Learning and Computer Vision, with emerging focus areas including Robotics, Mapping/Geo, and Speech Recognition, hinting at potential enhancements to services like Siri. Mentions of Autonomous Driving/Systems in recent job postings suggest that Apple's rumored self-driving car project continues.

While Apple has not publicly announced significant initiatives in Generative AI or large language model (LLM) technologies, clues from their job postings hint at an active interest and likely investment in these areas. The abundance of roles including Machine Learning Engineer, AI/ML - Annotation Analyst, and Research Scientist, in addition to software and systems engineering roles, suggests a focus on advancing AI expertise. This indicates an initiative to build resilient infrastructures that facilitate the deployment of state-of-the-art AI systems. Interest in Deep Learning Engineers particularly emphasizes the role of deep learning in their strategic blueprint - a fundamental aspect of generative AI and LLMs.

A deep dive into the skills listed for these roles reveals Python, C++, and Swift, along with specific references to PyTorch and TensorFlow, which underlines the importance of deep learning skills. These programming languages and frameworks are fundamental to training and implementing deep learning models, including generative AI models and LLMs. The frequent mentions of Siri, coupled with an AI and machine learning-centric recruitment drive, most likely signal a strategic move towards enhancing Siri's conversational abilities or even developing new AI-based features.

Central to the evolution of AI in the Apple ecosystem are the company’s silicon chips and Neural Engine, which optimize battery life and performance while effectively harnessing on-device AI capabilities. These assets run personalized AI models on the “edge”, contributing to the efficient processing of complex AI tasks. Apple also has access to fine-grained personalized data from various sources like contacts, calendars, emails, music, workout history, tasks, and real-time location, equipping them to provide highly personalized, context-rich experiences.

A closer look at recent product announcements affirms Apple's user-centered machine learning approach. The company recently announced "better autocorrect" in iOS 17 powered by on-device machine learning, personalized volume features for AirPods, and a new iPad lock screen that animates live photos using machine learning models. Apple's AI and machine learning investments extend to other anticipated iOS 17 features, such as suggesting recipes from iPhone photos using computer vision and Journal, an interactive diary that offers personalized suggestions based on other app activities.

In conclusion, the future of AI at Apple appears to be highly promising, as its carefully engineered hardware and software are tailored to deliver enriched, customized experiences. The promising outlook hinges on the convergence of Apple Silicon chips, Neural Engine, and various AI initiatives. Coupled with personalized on-device data and seamless integration with external services, this combination lays a robust foundation for future AI products and applications.

Data Exchange Podcast

The Rise of Custom Foundation Models. Andrew Feldman is CEO and co-founder of Cerebras, a startup that has released the fastest AI accelerator, based on the largest processor. We explored the Cerebras-GPT family of large language models, renowned for setting unprecedented standards in computational efficiency, with sizes spanning from 111 million to 13 billion parameters.

Deploying and Developing with a Next-Generation AI Platform. Tim Davis, Co-Founder & Chief Product Officer of Modular, introduces us to Mojo, a unique programming language combining Python's usability with C's performance, streamlining AI hardware programming and extending AI models' flexibility. He also elaborates on Modular's Inference Engine, a versatile tool for executing and deploying TensorFlow and PyTorch models.

Entity Resolution: Insights and Implications for AI Applications

Software systems may start simple, but adding sophisticated features and ensuring maintainability leads to complexities, contributing to the age-old 'build versus buy' dilemma in software acquisition. With every team needing to weigh the pros and cons of developing new technology in-house versus procuring it from third parties, factors such as cost, implementation timeline, and technical risk come into play, necessitating a tailored decision based on individual business needs, resources, and risk tolerance.

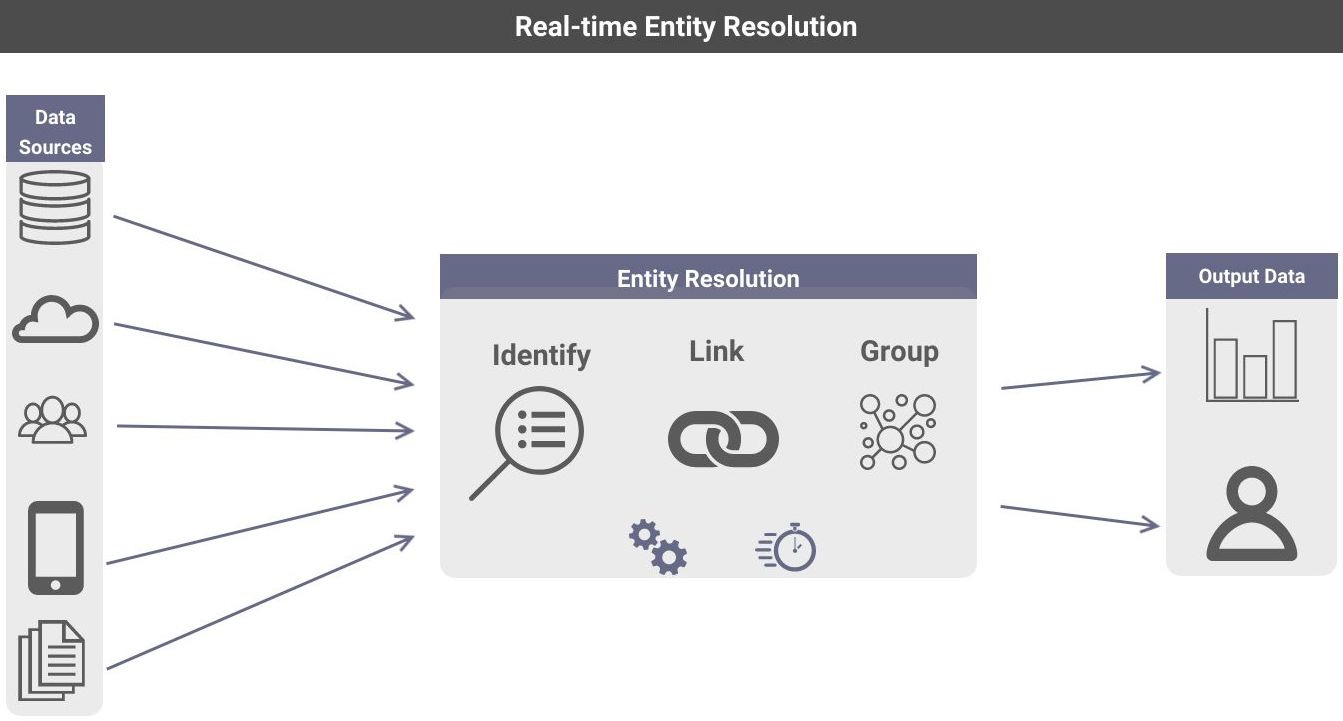

Entity resolution (ER), crucial software I've included in my "Don't Try This At Home" list, involves systematically connecting disparate data records that represent the same real-world entity, such as a customer, product, or company. This process is extremely important because poor data quality adversely affects downstream analytics and AI applications. Although ER may appear straightforward initially, it's a complex problem with many applications, including customer data management, fraud detection, data quality enhancement, data integration, data governance, and business intelligence.

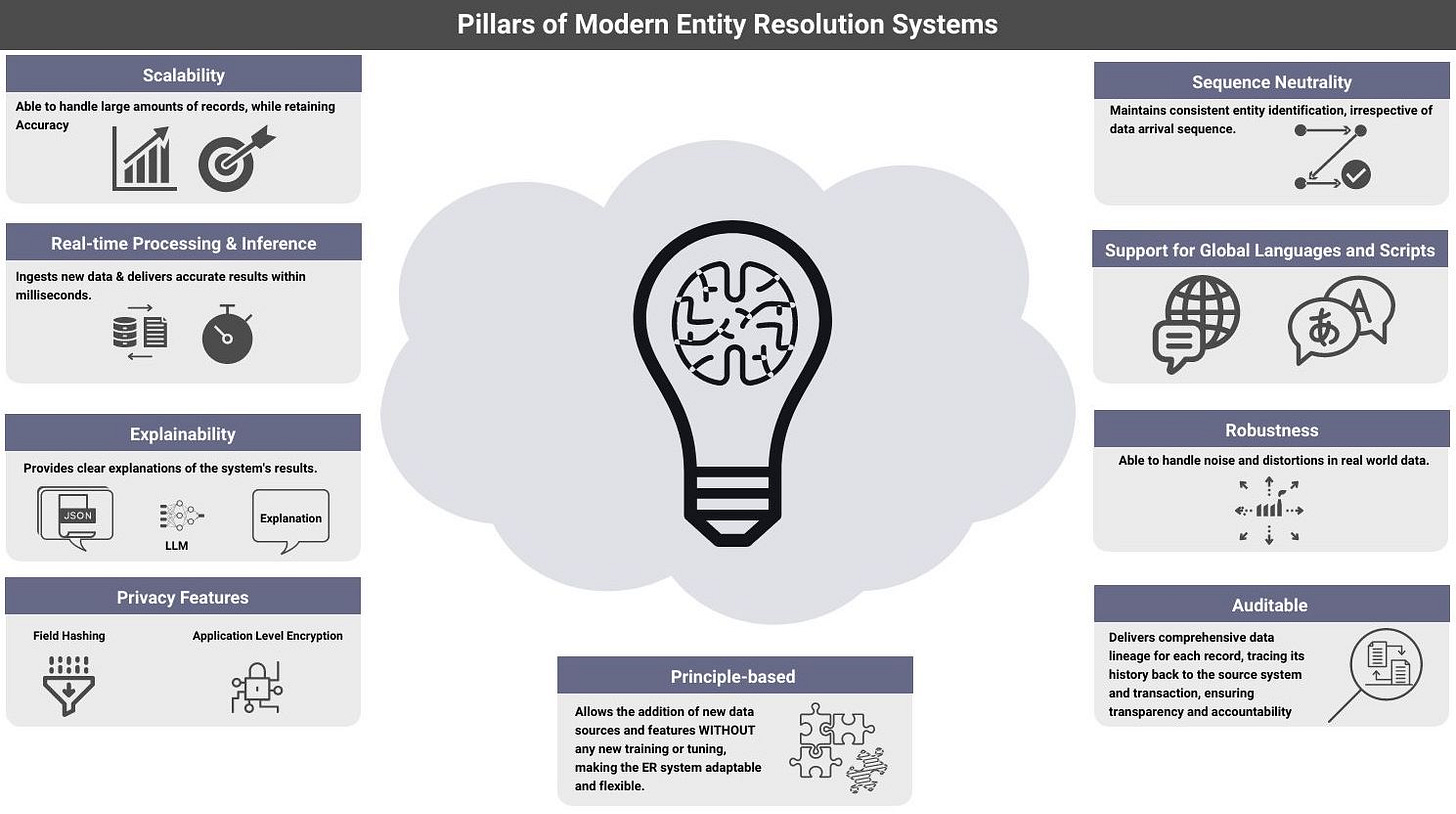

More importantly, ER is an outstanding example of an application that combines big data, real-time processing, and AI. The lessons learned from ER, in terms of accuracy, scale, and complexity, are transferable and highly beneficial to other AI applications. As we delve deeper into the age of LLMs, insights from building ER systems are invaluable to the many AI applications confronting similar challenges.

I believe that building an in-house entity resolution system presents a multitude of challenges, making it an unwise investment for most teams. Firstly, maintaining accuracy at scale is a colossal task. While comparing each record against every other is feasible with small datasets, the number of comparisons grows quadratically, so it becomes computationally expensive and impractical as data volumes reach millions or even billions of records. This difficulty is exacerbated by the dynamic nature of data, with new records continuously streaming in that need to be linked to existing ones accurately and promptly.
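To make the scaling problem concrete, here is a minimal sketch of how quickly all-pairs comparison grows, next to "blocking", a standard technique that only compares records sharing a cheap key. Blocking is brought in here purely for illustration; it is not described in this newsletter as the method any particular product uses. The sample records, the ZIP-code blocking key, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def pairwise_comparisons(n):
    """Number of distinct record pairs when every record is compared to every other."""
    return n * (n - 1) // 2


def blocked_pairs(records, key):
    """Group records by a blocking key and only pair up records within a group."""
    blocks = defaultdict(list)
    for rec in records:
        blocks[key(rec)].append(rec)
    pairs = []
    for group in blocks.values():
        pairs.extend(combinations(group, 2))
    return pairs


# Hypothetical toy records; the blocking key (ZIP code) is an assumption.
records = [
    {"id": 1, "name": "Ann Lee", "zip": "94105"},
    {"id": 2, "name": "Anne Lee", "zip": "94105"},
    {"id": 3, "name": "Bob Ray", "zip": "10001"},
    {"id": 4, "name": "Robert Ray", "zip": "10001"},
]

all_pairs = pairwise_comparisons(len(records))            # 6 pairs for 4 records
candidate_pairs = blocked_pairs(records, key=lambda r: r["zip"])  # 2 pairs
million_record_pairs = pairwise_comparisons(1_000_000)    # 499,999,500,000 pairs
```

Even this shortcut has a cost: records whose blocking key is missing or mistyped never get compared, which is one reason accuracy at scale is hard to maintain.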

Sequence neutrality is an important requirement. This concept refers to the ability of a system to reach the same decisions regardless of the order in which data arrives. It's crucial because new data can retrospectively change our understanding of past data, much as new information can change our understanding of a past conversation. However, most ER systems lack sequence neutrality, which can lead to inconsistent and inaccurate outcomes. The common workaround is to periodically reload all data, a time-consuming process that can still leave inaccuracies between reloads. Designing a system that implements sequence neutrality is exceptionally complex, especially given the potential need to correct prior assertions in real time based on new information.
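A toy example may help show why arrival order matters. The sketch below is an illustration under stated assumptions, not how any production ER system works: the matching rule (shared phone or email), the three sample records, and both resolver functions are hypothetical. The naive resolver attaches each new record to the first matching cluster and never revisits earlier decisions; the second resolver merges every cluster the new record links together.

```python
from itertools import permutations


def is_match(a, b):
    """Toy rule (an assumption): records match if they share a phone or an email."""
    same_phone = a["phone"] is not None and a["phone"] == b["phone"]
    same_email = a["email"] is not None and a["email"] == b["email"]
    return same_phone or same_email


def resolve_naive(stream):
    """Attach each record to the first matching cluster; never revisit old decisions."""
    clusters = []
    for rec in stream:
        for cluster in clusters:
            if any(is_match(rec, other) for other in cluster):
                cluster.append(rec)
                break
        else:
            clusters.append([rec])
    return frozenset(frozenset(r["id"] for r in c) for c in clusters)


def resolve_with_merge(stream):
    """Merge all clusters that a new record connects, so order no longer matters here."""
    clusters = []
    for rec in stream:
        matching = [c for c in clusters if any(is_match(rec, o) for o in c)]
        matching_ids = {id(c) for c in matching}
        merged = [rec]
        for c in matching:
            merged.extend(c)
        clusters = [c for c in clusters if id(c) not in matching_ids]
        clusters.append(merged)
    return frozenset(frozenset(r["id"] for r in c) for c in clusters)


# B links A and C: A and B share a phone, B and C share an email.
records = [
    {"id": "A", "phone": "555-0100", "email": None},
    {"id": "B", "phone": "555-0100", "email": "b@example.com"},
    {"id": "C", "phone": None, "email": "b@example.com"},
]

naive_outcomes = {resolve_naive(order) for order in permutations(records)}
merge_outcomes = {resolve_with_merge(order) for order in permutations(records)}
```

Here the naive resolver produces different clusterings depending on whether B arrives before or after A and C, while the merging resolver always ends with a single entity. This sketch only covers the merge case; a genuinely sequence-neutral system would also have to split entities when later data shows an earlier merge was wrong, which is a large part of why the problem is so hard.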

Latency issues are a crucial factor, especially in light of the need for real-time data processing. While vector databases might bring a level of semantic understanding, they may fall short in meeting millisecond latency requirements, which are critical for some real-time applications (including KYC and fraud detection). In scenarios where a customer signs up and returns minutes later on a different channel, your entity resolution system must recognize them immediately.

These days, I recommend Senzing to teams looking for an entity resolution solution. Senzing is a robust, efficient, and flexible entity resolution system that is cost-effective, and can be deployed on premises or in the cloud. Senzing takes data privacy seriously, offering field hashing and application-level encryption. It is also accurate at scale, capable of processing large volumes of data efficiently and in real time. Senzing can efficiently manage thousands of transactions per second, execute entity resolution in just 100 to 200 milliseconds, and conduct queries within tens of milliseconds, all while handling billions of records. Finally, Senzing upholds sequence neutrality, ensuring consistent identification of entities regardless of the order in which data arrives.

Summary

Entity resolution is a powerful example of how big data, real-time processing, and AI can be combined to solve complex problems. The insights garnered from ER's challenges in maintaining accuracy, managing scale, and dealing with complexity can enrich other AI applications, enhancing their precision, scalability, and sophistication. The principles of ER can directly be applied to any AI application that involves identifying and linking entities. This includes applications such as fraud detection, customer relationship management, and natural language processing.

Building and maintaining an in-house entity resolution system can be costly and time-consuming. An advanced and easy-to-deploy solution like Senzing provides best-of-breed features, while freeing up your resources to focus on other more important priorities.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, Ray Summit, the NLP Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Mastodon, T2, or Post. This newsletter is produced by Gradient Flow.