Building better AI agents, for less

From Monoliths to Specialists: The New Era of AI

In a previous analysis, I examined how a company could build a highly effective AI application for writing database queries without any fine-tuning, relying instead on semantic catalogs and validation loops to mirror how experienced analysts write SQL. This approach worked exceptionally well for that specific, targeted application. However, it represents just one narrow slice of what AI can accomplish.

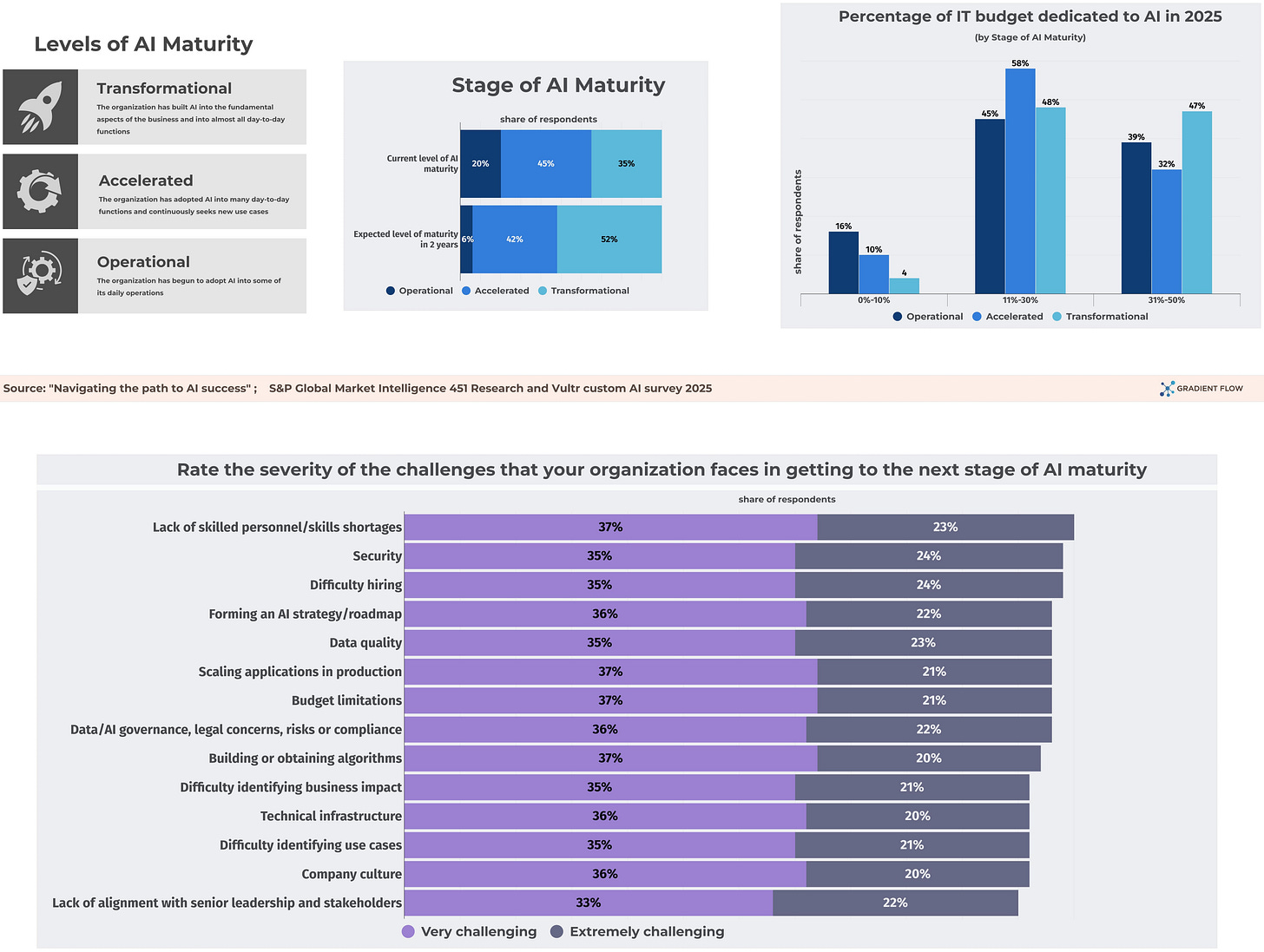

For businesses deploying AI, the forward-looking vision is a shift away from giant pre-trained models and toward ecosystems of smaller, specialized agents. With open foundation models rapidly closing the gap on proprietary ones, the key differentiator is no longer scale, but specialization. Smaller agents can think and act faster in domain-specific settings, much like how the power of a modern smartphone comes not from the device itself, but from its ecosystem of countless specialized apps, each designed for a specific purpose.

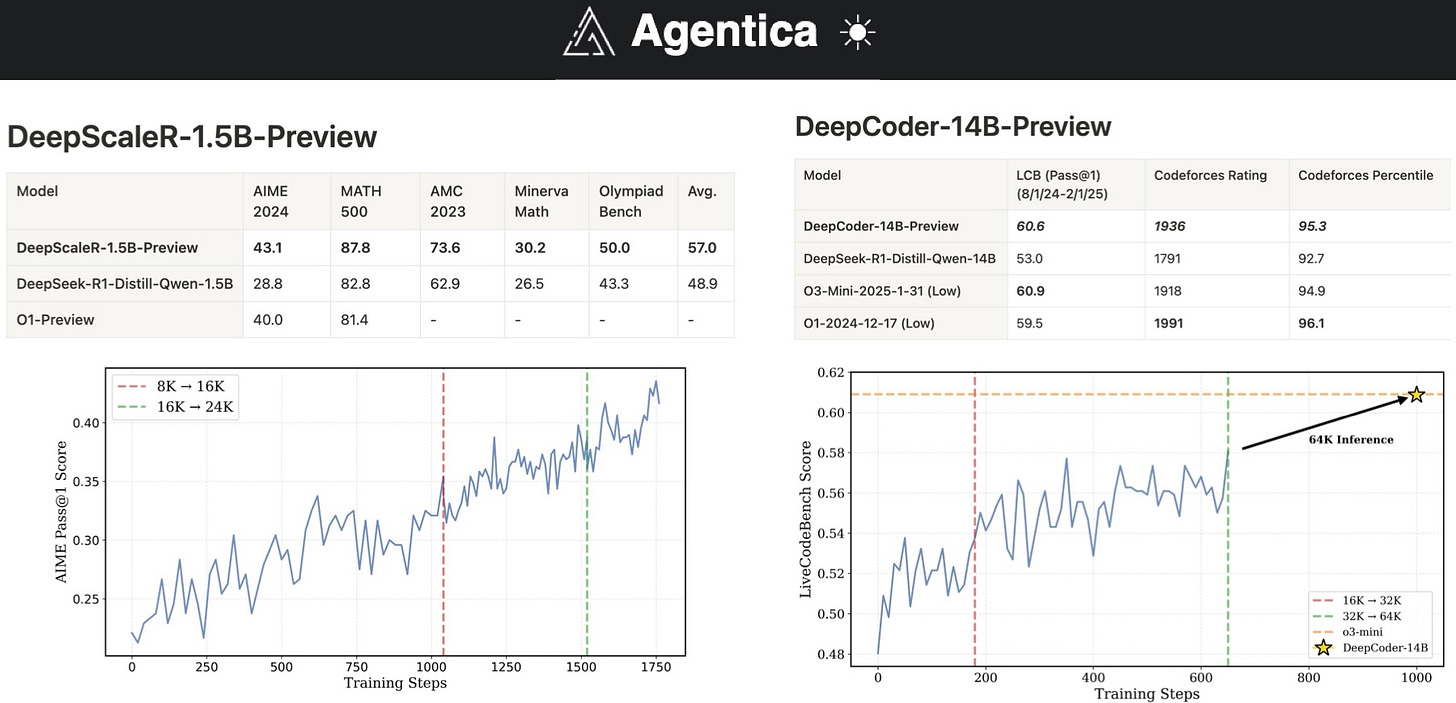

Consider DeepCoder, a 14 billion parameter coding model that achieves high performance across coding benchmarks. What makes DeepCoder notable is not just its size but its training methodology—reinforcement learning forms a core component of its development. The model uses a reward-based approach where it receives one point for passing all tests and zero for failing any, then iteratively improves through specialized reinforcement learning techniques. This exemplifies how post-training methods, particularly reinforcement learning, have become essential for creating capable AI systems. It underscores a point made by observers like Andrej Karpathy in the context of coding tools: building modern AI requires fluency not just in traditional code and data, but in the craft of refining and specializing these powerful new models. The ML engineers who can fine-tune and distill models represent a critical piece of this evolving landscape.
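To make the all-or-nothing reward concrete, here is a minimal sketch of a sparse outcome reward for generated code. It is an illustration of the idea described above, not DeepCoder's actual implementation: the function name `sparse_outcome_reward`, the `(input, expected)` test format, and the sample candidates are all assumptions made for this example.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical format for this sketch: a test case is an (input, expected) pair.
TestCase = Tuple[object, object]


def sparse_outcome_reward(candidate: Callable[[object], object],
                          tests: Iterable[TestCase]) -> float:
    """Return 1.0 only if the candidate passes every test, otherwise 0.0."""
    for test_input, expected in tests:
        try:
            if candidate(test_input) != expected:
                return 0.0  # one wrong answer zeroes the whole reward
        except Exception:
            return 0.0  # a crash counts as a failed test
    return 1.0


# Toy task: square a number.
tests = [(2, 4), (3, 9), (-1, 1)]
reward_correct = sparse_outcome_reward(lambda x: x * x, tests)
reward_partial = sparse_outcome_reward(lambda x: x * 2, tests)  # passes only the first test
```

There is no partial credit here: a candidate that passes one test out of three earns the same reward as one that passes none, so the signal cannot be satisfied by solving only the easy cases.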

The Art of Model Refinement

Post-training represents the crucial phase that transforms raw, pre-trained models into practical, deployable systems. While pre-training gives us powerful foundation models by processing vast amounts of text, these base models are essentially sophisticated next-token predictors. They lack the ability to follow instructions consistently, maintain conversation structure, or excel in specific domains without additional refinement.

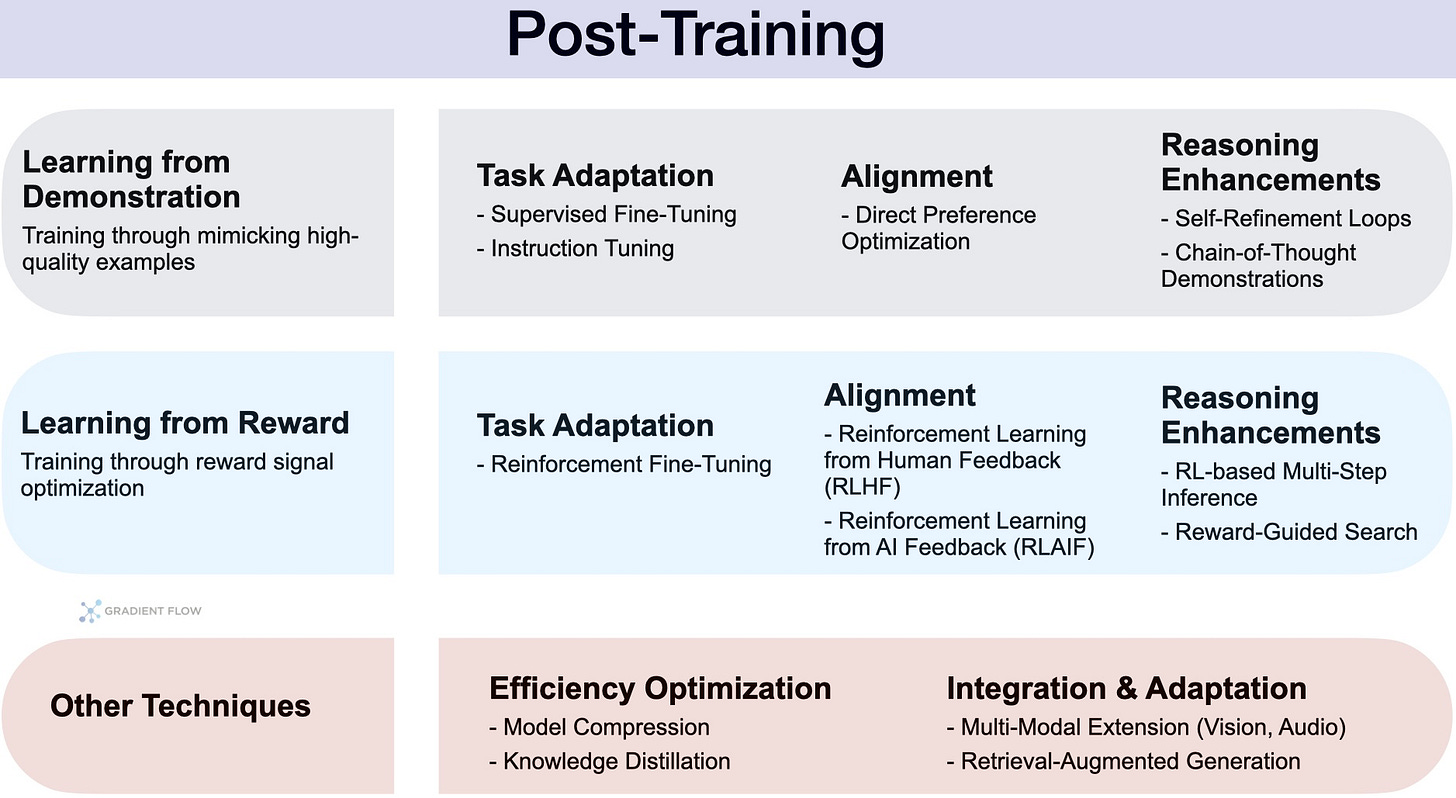

The landscape of post-training encompasses two main paradigms. The first is learning from demonstration, where the model is fine-tuned on high-quality examples of a desired output, much like an apprentice mimicking a master. The second, and often more powerful, approach is learning from reward. Rather than mimicking a perfect example, the model learns to improve through trial and error, guided by a reward signal for successful outcomes. It does not need a perfect example to copy; it only needs a way to distinguish a better outcome from a worse one. Reinforcement learning is the engine for this paradigm.
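The difference between the two paradigms can be sketched with a toy policy that chooses among three actions. This is an illustrative assumption, not how any production system is trained: the function names, learning rates, and the choice of a simple policy-gradient (REINFORCE-style) update with a running baseline are all picked for brevity. The first learner is shown the correct action directly; the second only ever sees a score for whatever it tried.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_prob(probs, action):
    # Gradient of log softmax(logits)[action] w.r.t. the logits.
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def learn_from_demonstration(demos, n_actions, lr=0.5, epochs=50):
    """Supervised update: raise the likelihood of each demonstrated action."""
    logits = [0.0] * n_actions
    for _ in range(epochs):
        for action in demos:
            g = grad_log_prob(softmax(logits), action)
            logits = [w + lr * gi for w, gi in zip(logits, g)]
    return logits


def learn_from_reward(reward_fn, n_actions, lr=0.5, steps=2000, seed=0):
    """Policy-gradient update: sample an action, then scale the same
    gradient by how much better than average the outcome was."""
    rng = random.Random(seed)
    logits = [0.0] * n_actions
    baseline = 0.0  # running average of observed rewards
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(n_actions), weights=probs)[0]
        reward = reward_fn(action)
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        g = grad_log_prob(probs, action)
        logits = [w + lr * advantage * gi for w, gi in zip(logits, g)]
    return logits


def best_action(logits):
    return max(range(len(logits)), key=lambda i: logits[i])


# Demonstration: the learner is told that action 2 is correct.
demo_logits = learn_from_demonstration(demos=[2, 2, 2], n_actions=3)

# Reward: the learner is never told the answer, only scored on its attempts.
reward_logits = learn_from_reward(lambda a: 1.0 if a == 2 else 0.0, n_actions=3)
```

Both learners end up preferring action 2, but only the first needed someone to supply the right answer in advance. The second needed nothing more than a function that can tell a good outcome from a bad one.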

The technical hurdles get much higher when an AI has to reason step-by-step and produce page-long answers. Reinforcement learning requires large batch sizes for stability, and each training iteration can take significant time and computational resources. The computational and engineering costs are the admission price for turning next-token prediction into reliable, context-aware assistance.

This raises an important question about the relationship between capable agents and post-training. Even if future AI agents can effectively use external tools and resources—essentially scaled-up versions of the text-to-SQL example I described previously—post-training remains a key differentiator. External tools can provide factual grounding and a means of verification, but they cannot teach a model how to reason with nuance, navigate ambiguity, or decompose complex problems—skills that are critical for mastering domain-specific tasks.

Making Advanced AI Accessible

Recent developments offer encouraging signs that sophisticated post-training techniques are becoming more accessible to smaller teams. NovaSky, an open-source initiative from Berkeley researchers, demonstrates how demonstration-based training can achieve competitive reasoning performance with surprisingly modest resources. Their Sky-T1 model matched OpenAI's o1-preview performance on mathematical and coding benchmarks using only 17,000 curated reasoning demonstrations and 19 hours of training on commodity hardware, at roughly $450 in compute costs. The project's broader ambition matters just as much: NovaSky is building a full-stack platform for post-training, providing the toolkit needed to accelerate the industry's shift from monolithic models to specialized agents.

While learning from demonstration is powerful, reinforcement learning unlocks the next level of capability, enabling models to tackle long-horizon tasks and improve through exploration. Here, the challenge has been one of scale and cost. Agentica, another open-source project, has focused on building infrastructure that makes sophisticated reinforcement learning practical for more teams. By designing systems that disaggregate the components of training, separating the model's learning process from its interactions with a simulated environment, they have reduced the cost and complexity of these techniques.
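The idea of separating environment interaction from learning can be sketched with a queue between the two sides. This is a toy illustration under stated assumptions, not Agentica's actual architecture: the names `rollout_worker` and `trainer`, the fake rewards, and the use of threads in place of separate machines are all hypothetical stand-ins.

```python
import queue
import threading


def rollout_worker(worker_id, policy_version, out_queue, episodes):
    """Interact with a (simulated) environment and emit trajectories."""
    for episode in range(episodes):
        trajectory = {
            "worker": worker_id,
            "episode": episode,
            # Record which policy version produced this rollout.
            "policy_version": policy_version["value"],
            # Stand-in for a real environment reward.
            "reward": float((worker_id + episode) % 2),
        }
        out_queue.put(trajectory)


def trainer(in_queue, policy_version, total, batch_size, log):
    """Consume trajectories in batches, independently of how they are produced."""
    batch = []
    for _ in range(total):
        batch.append(in_queue.get())
        if len(batch) == batch_size:
            mean_reward = sum(t["reward"] for t in batch) / batch_size
            policy_version["value"] += 1  # stand-in for a gradient update
            log.append((policy_version["value"], mean_reward))
            batch = []


trajectories = queue.Queue(maxsize=16)
policy_version = {"value": 0}
update_log = []

workers = [
    threading.Thread(target=rollout_worker, args=(i, policy_version, trajectories, 4))
    for i in range(2)
]
learner = threading.Thread(
    target=trainer, args=(trajectories, policy_version, 8, 4, update_log)
)

for t in workers + [learner]:
    t.start()
for t in workers + [learner]:
    t.join()
```

Because the two sides communicate only through the queue, rollout workers and the trainer can be scaled or placed on different hardware independently. The trade-off is that some trajectories may come from a slightly older policy version than the one currently being updated.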

The focus on accessible, scalable, and open-source tools is crucial because it decouples cutting-edge performance from specialized talent and colossal budgets. It allows smaller, more focused teams to refine highly effective models for their specific domains, whether for scientific discovery, specialized code generation, or optimizing internal business processes. This movement is making the most advanced AI techniques available to a wider array of builders, fostering a more diverse and competitive ecosystem.

The Path Forward

AI development is at an inflection point, with long-held assumptions about scale being reconsidered. The opportunity today isn't to build a single, all-knowing AI that runs everything on its own. Instead, the most promising path lies in building products that feature practical, partial autonomy. This means designing tight, collaborative loops where humans retain strategic control and provide judgment, while AI agents handle increasingly complex sub-tasks.

To build these systems, we need more than just powerful base models. We need agents that are reliable, aligned with our goals, and specialized for the work at hand. It is through the careful art of post-training—refining, specializing, and guiding these models with techniques from supervised fine-tuning to reinforcement learning—that we will forge the dependable, task-specific AI that defines this new era of computing.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, while also serving as the Strategic Content Chair for AI at the Linux Foundation. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.