AI Deep Research Tools: Landscape, Future, and Comparison

By Louis Bouchard, Ben Lorica, and Samridhi Vaid.

You've seen how large language models (LLMs) like GPT-4o (in ChatGPT) and Gemini handle everyday tasks—summarizing documents, brainstorming ideas, and answering customer queries. While tools like ChatGPT's web browsing or Perplexity extend these capabilities by gathering context from the internet, they remain limited for complex analytical work.

That has changed with the recent arrival of deep research tools: hybrid systems that combine conversational AI with autonomous web browsing, tool integrations, and sophisticated reasoning. These tools work like human researchers, methodically breaking down problems, gathering relevant data from multiple sources, and conducting comprehensive analysis. While they still benefit from human oversight, they significantly speed up complex research tasks and improve the quality of the results.

In this article, we'll provide a detailed comparative analysis of popular deep research platforms, examining their unique approaches and explaining why they represent a fundamental shift in knowledge work.

What Are Deep Research Tools?

Deep research tools represent a new generation of AI designed to autonomously conduct comprehensive investigations on complex topics. What distinguishes them is their ability to browse diverse sources—including academic papers, news articles, user-generated content, and specialized review sites—while adapting their search strategies in real time. The result is structured, properly cited reports that reflect a human-like investigative approach rather than simple prompt responses.
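One ingredient of a "properly cited" report is bookkeeping: every claim keeps a pointer to the source it came from, and each distinct source receives one stable citation number. The Python sketch below illustrates that idea with a hypothetical `CitedReport` class. It is not taken from any particular product, and the URLs and claims are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class CitedReport:
    """Hypothetical report builder: groups claims under headings and numbers sources."""
    sections: dict[str, list[str]] = field(default_factory=dict)
    sources: dict[str, int] = field(default_factory=dict)  # url -> citation number

    def cite(self, url: str) -> int:
        # A source cited twice keeps the number it received the first time.
        if url not in self.sources:
            self.sources[url] = len(self.sources) + 1
        return self.sources[url]

    def add_claim(self, heading: str, claim: str, url: str) -> None:
        marker = self.cite(url)
        self.sections.setdefault(heading, []).append(f"{claim} [{marker}]")

    def render(self) -> str:
        lines: list[str] = []
        for heading, claims in self.sections.items():
            lines.append(heading)
            lines.extend(f"  {c}" for c in claims)
        lines.append("Sources")
        # Dicts keep insertion order, so sources are listed in citation order.
        lines.extend(f"  [{n}] {url}" for url, n in self.sources.items())
        return "\n".join(lines)


report = CitedReport()
report.add_claim("Getting around", "Placeholder claim about rail passes.", "https://example.com/rail")
report.add_claim("Events", "Placeholder claim about a local festival.", "https://example.com/events")
report.add_claim("Getting around", "Placeholder claim about night trains.", "https://example.com/rail")
print(report.render())
```

Reusing the first number for a repeated source is what keeps the reference list short and lets a reader trace each claim back to exactly one entry.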

Why choose AI agents over standard chatbots? Consider planning a budget European trip with specific interests. A typical chatbot would provide a generic itinerary using limited data sources.


In contrast, a deep research tool would methodically tackle your request—checking real-time train schedules, exploring traveler forums, comparing costs across booking sites, and finding local festivals during your dates. It would deliver a comprehensive itinerary with original source links, expense estimates, and visual comparisons of routes. What makes these research agents truly powerful is their ability to analyze initial findings and dynamically adjust their research strategy to explore new leads.

Separate from research agents, more general AI agents are also emerging. These might automate action-based tasks such as booking hotels, purchasing train tickets, or sending you reminders. While such an agent could gather some travel data, it generally wouldn’t produce the same in-depth research report as a deep research tool, since it focuses on completing tasks rather than delivering a comprehensive, multi-sourced analysis.

In essence, deep research tools handle the heavy lifting of finding, validating, and assembling diverse information before delivering a thorough answer. They show more “agency” by breaking down tasks, performing iterative searches, and documenting their intermediate reasoning. Some even run in the background, returning a complete report without interactive chat. Their autonomy and structured process make them indispensable for complex professional research, informed consumer decisions, and academic work.

Example Workflow of a Deep Research Tool

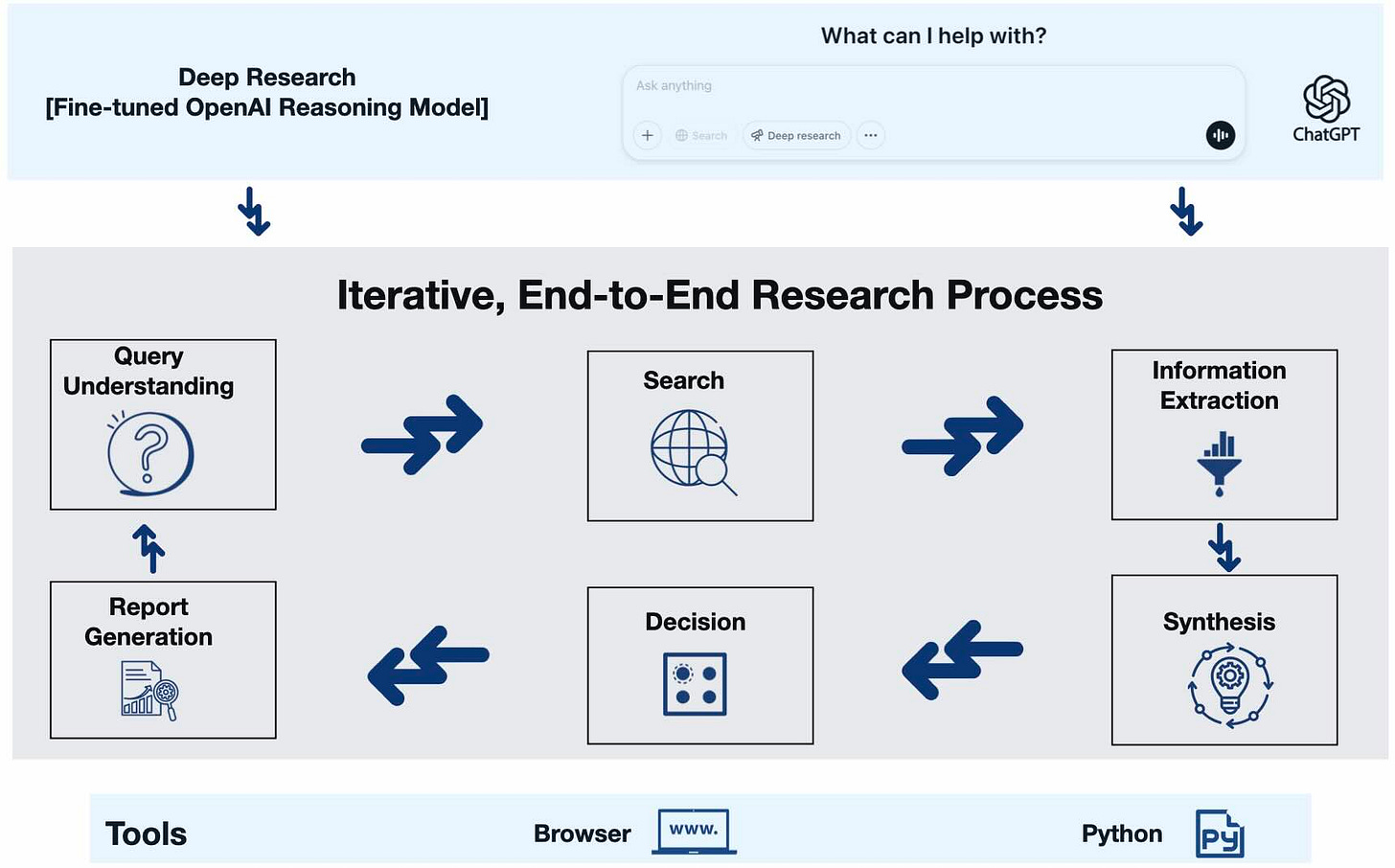

To better understand how a deep research tool typically works, let's look at GPT Researcher, an open-source deep research project. Figure 1 shows its process. The system uses distinct AI agents working together. First, a planner agent breaks the main research query into focused sub-questions. These sub-questions are passed to researcher agents, which sequentially perform targeted web queries to gather relevant data. Once the relevant information is collected, a publisher module synthesizes and refines everything into a final, comprehensive report.
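The planner, researcher, and publisher roles can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GPT Researcher's actual code: the function names are hypothetical, a fixed template replaces the LLM that would normally write the sub-questions, and a small in-memory index with placeholder snippets stands in for a real search API.

```python
from dataclasses import dataclass

# Toy stand-in for a search API (an assumption): topic -> [(url, snippet)].
TOY_INDEX = {
    "transport": [("https://example.com/rail", "Placeholder snippet on rail routes.")],
    "events": [("https://example.com/events", "Placeholder snippet on festivals.")],
    "costs": [("https://example.com/costs", "Placeholder snippet on hostel prices.")],
}


@dataclass
class Finding:
    sub_question: str
    url: str
    snippet: str


def planner(query: str) -> list[str]:
    """Planner agent: split the query into focused sub-questions.
    A fixed template stands in for the LLM call a real system would make."""
    return [f"{query} | {topic}" for topic in ("transport", "events", "costs")]


def researcher(sub_question: str) -> list[Finding]:
    """Researcher agent: run one targeted search for a single sub-question."""
    topic = sub_question.split(" | ")[-1]
    return [Finding(sub_question, url, text) for url, text in TOY_INDEX.get(topic, [])]


def publisher(query: str, findings: list[Finding]) -> str:
    """Publisher: merge every finding into one report that keeps its source URL."""
    lines = [f"Report: {query}"]
    lines += [f"- {f.snippet} ({f.url})" for f in findings]
    return "\n".join(lines)


def run_pipeline(query: str) -> str:
    findings: list[Finding] = []
    for sub_question in planner(query):  # researchers run one after another
        findings.extend(researcher(sub_question))
    return publisher(query, findings)


print(run_pipeline("Budget trip through Europe"))
```

In a real system, `planner` and `researcher` would call a model and a search API, but the hand-offs between the three roles would look much the same.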

More recent, advanced agents like OpenAI’s Deep Research take this a step further by running this loop multiple times: gathering data, analyzing it, and then planning the next round of searches. As these tools evolve, they introduce diverse capabilities and specializations, shaping an ever-expanding landscape of solutions.
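The gather, analyze, and re-plan cycle can be sketched as a loop that stops when a round turns up no unexplored leads or a round budget runs out. Everything here is an assumption for illustration: `TOY_WEB` is placeholder data standing in for live searches, and the analysis step is reduced to filtering out leads already explored, whereas a production agent would use a model to judge which leads are worth following.

```python
# Toy "web" (placeholder data): searching a topic surfaces follow-up leads.
TOY_WEB = {
    "deep research agents": ["planner agents", "citation tracking"],
    "planner agents": ["query decomposition"],
    "citation tracking": [],
    "query decomposition": [],
}


def gather(topic: str) -> list[str]:
    """Search step: return the leads a search on this topic surfaces."""
    return TOY_WEB.get(topic, [])


def plan_next_round(leads: list[str], explored: set[str]) -> list[str]:
    """Analysis step: keep only unexplored leads, without duplicates."""
    next_round: list[str] = []
    for lead in leads:
        if lead not in explored and lead not in next_round:
            next_round.append(lead)
    return next_round


def iterative_research(question: str, max_rounds: int = 5) -> tuple[list[str], int]:
    explored: list[str] = []
    frontier = [question]
    rounds = 0
    while frontier and rounds < max_rounds:
        rounds += 1
        leads: list[str] = []
        for topic in frontier:
            explored.append(topic)
            leads.extend(gather(topic))
        # Plan the next round from what this round turned up.
        frontier = plan_next_round(leads, set(explored))
    return explored, rounds


explored, rounds = iterative_research("deep research agents")
print(rounds, explored)
```

The `max_rounds` cap matters in practice: without it, a loop like this has no guaranteed stopping point on a real web that keeps surfacing new pages.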

Evolving Landscape

A growing number of AI tools now offer deep research capabilities. Broadly, they fall into two groups: general-purpose commercial platforms and open-source or experimental systems. The distinction rests on their scope (web-wide vs. domain-specific) and their accessibility (commercial vs. open-source).

Several major commercial platforms have launched sophisticated deep research features for complex tasks. OpenAI’s ChatGPT with Deep Research, though not first to market, currently leads in capability, using its o3 reasoning model to generate thoroughly cited reports in 5 to 30 minutes. Beyond pulling information from the web, it can also process documents, PDFs, and URLs provided by the user. Fine-tuned via reinforcement learning, it scores 26.6% on the Humanity’s Last Exam benchmark but costs $200/month for full access (120 tasks). If you’d like to see an output without paying, here’s a research report we asked it to create on AI agents!

Google Gemini’s Deep Research takes a slightly different route: it lets you refine the research plan before setting it in motion, giving you greater control over the process. It builds on Google's search expertise and integrates with Google Search and Workspace applications, making it particularly effective for general business research, competitive analysis, and trend monitoring. The tool combines reasoning, iterative searches, and synthesis to deliver comprehensive reports quickly, typically within 5 to 15 minutes. Its integration with the Gemini 2.0 Flash Thinking model enhances its ability to tackle complex topics efficiently.
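Refining a plan before execution is a human-in-the-loop pattern: the agent drafts a plan, a person edits it, and only the approved version runs. The sketch below shows that control flow in Python. `propose_plan`, `run_with_review`, and `my_review` are hypothetical names, not Gemini's API, and a lambda stands in for the actual research work.

```python
from typing import Callable


def propose_plan(query: str) -> list[str]:
    """Hypothetical stand-in for a model-drafted research plan."""
    return [
        f"Define the scope of: {query}",
        f"Collect recent sources on: {query}",
        f"Summarize competitor positioning for: {query}",
    ]


def run_with_review(
    query: str,
    review: Callable[[list[str]], list[str]],
    execute: Callable[[str], str],
) -> list[str]:
    """Show the draft plan to a reviewer first; execute only the approved steps."""
    draft = propose_plan(query)
    approved = review(list(draft))  # reviewer may edit, drop, or reorder steps
    if not approved:
        raise ValueError("Plan rejected: nothing to execute.")
    return [execute(step) for step in approved]


def my_review(steps: list[str]) -> list[str]:
    # Example reviewer: drop the competitor step and add a pricing step.
    kept = [s for s in steps if not s.startswith("Summarize competitor")]
    return kept + ["Compare pricing pages for: EV chargers"]


results = run_with_review("EV chargers", my_review, lambda step: f"done: {step}")
print("\n".join(results))
```

Passing the reviewer in as a function keeps the approval step explicit: nothing runs until `review` returns a non-empty plan.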

Grok 3 from xAI prioritizes speed with real-time X data integration, making it ideal for breaking news. Perplexity AI delivers quick, citation-backed summaries but struggles with complex reasoning, while Kompas AI excels in generating professional-grade, evidence-rich reports for academic and professional applications.

Open-Source and Experimental

GitHub hosts numerous experimental projects combining LLMs with search APIs. Projects like GPT Researcher serve as open-source agents for online research, recursively branching into sub-queries to produce unbiased, citation-rich reports. While less polished than commercial alternatives, these tools help pave the way for future open research innovations.
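Recursive branching means each query can spawn narrower follow-up queries, which can spawn their own, up to a depth limit. The sketch below is an illustration under assumptions rather than GPT Researcher's implementation: `follow_up_queries` is a hypothetical placeholder for the model call that would propose follow-ups, and no real searches are made.

```python
def follow_up_queries(query: str, breadth: int) -> list[str]:
    """Hypothetical stand-in for a model proposing `breadth` narrower queries."""
    return [f"{query} / angle {i}" for i in range(1, breadth + 1)]


def branch(query: str, depth: int, breadth: int) -> dict:
    """Recursively expand a query into a tree of sub-queries, `depth` levels deep."""
    if depth == 0:
        return {"query": query, "children": []}
    children = [branch(q, depth - 1, breadth) for q in follow_up_queries(query, breadth)]
    return {"query": query, "children": children}


def count_queries(tree: dict) -> int:
    """Total searches needed to explore the tree (one per node)."""
    return 1 + sum(count_queries(child) for child in tree["children"])


def leaves(tree: dict) -> list[str]:
    """The most specific queries, where the actual source gathering would happen."""
    if not tree["children"]:
        return [tree["query"]]
    return [q for child in tree["children"] for q in leaves(child)]


tree = branch("steel tariffs", depth=2, breadth=3)
print(count_queries(tree))  # 1 + 3 + 9 = 13
print(leaves(tree)[0])
```

Because the tree holds 1 + b + b**2 + ... + b**d nodes for breadth b and depth d, the number of searches grows roughly as breadth**depth, which is why a recursive agent needs caps on both.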

Hybrid models like Stanford STORM blur traditional categories—functioning as general-purpose tools while remaining free and open-source, merging diverse perspectives into Wikipedia-style overviews for public use.

Each platform occupies a unique niche, and significant advances are expected as OpenAI and its competitors push boundaries. This competition will likely bring capable agents to more accessible price points.

Using these tools effectively requires knowing not just how they work, but when to deploy them, how to structure interactions, and why their decision-making matters. For those interested in the LLM development techniques behind these Agents, our ‘From Beginner to Advanced LLM Developer’ course offers valuable insights.

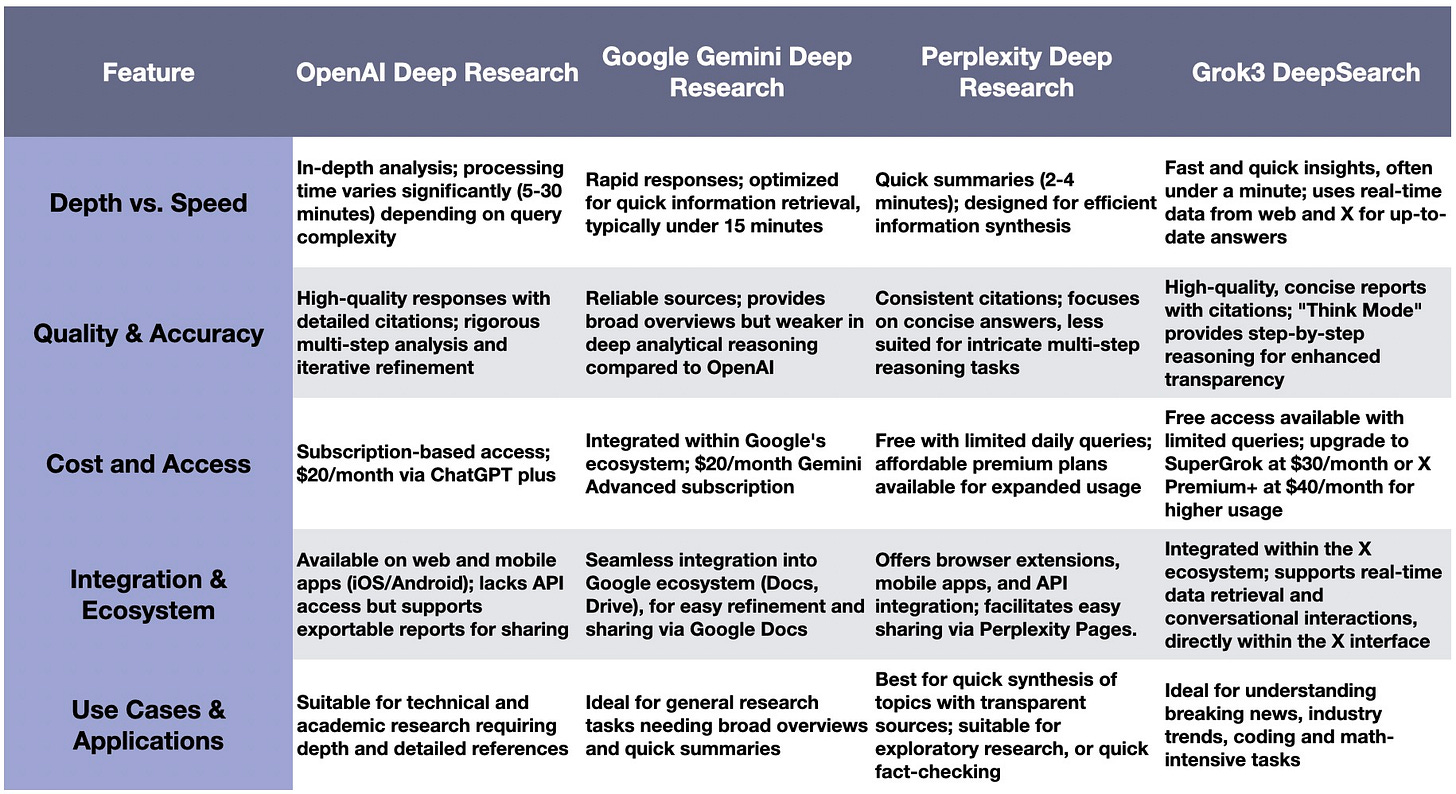

The comparison table highlights several tools to experiment with, each serving a distinct purpose.

Real-World Applications

Deep research tools are redefining how we approach information gathering and analysis by automating complex, multi-step research processes. These tools are already changing how industries like consulting, finance, and academia work, but their full potential is just starting to be realized. As they improve, these research tools will become essential in many fields.

Business and Consulting: At Bain & Company, OpenAI's Deep Research is transforming industry analysis, reducing days of work to hours. Individual consultants now direct the AI to process hundreds of reports and datasets, focusing their own expertise on interpreting insights and developing strategy. Consulting firms are increasingly adopting such tools for competitive and consumer research, improving efficiency and extracting deeper insights from volumes of data beyond what humans could process alone.

Finance and Investing: OpenAI has highlighted use cases like scanning financial documents and news for investment risk analysis, and its Deep Research tool takes this further with greater automation and analytical depth. A Deutsche Bank test illustrates the point: in just eight minutes, the tool produced a 9,000-word report with 22 sources on U.S. steel tariffs. Analysts reported significant improvements in clarity, relevance, and accuracy over previous AI models, highlighting its ability to rapidly synthesize complex information.

Scientific Research and Academia: Deep research tools assist researchers and students with literature reviews, thesis development, and hypothesis generation by analyzing vast data to identify patterns and hidden connections. In science, these tools transform research by automating study discovery, summarizing findings, and revealing knowledge gaps. OpenAI's Deep Research creates cited multi-page reports that quickly convey topic knowledge. Students benefit by exploring complex subjects, finding credible sources, and generating detailed reports.

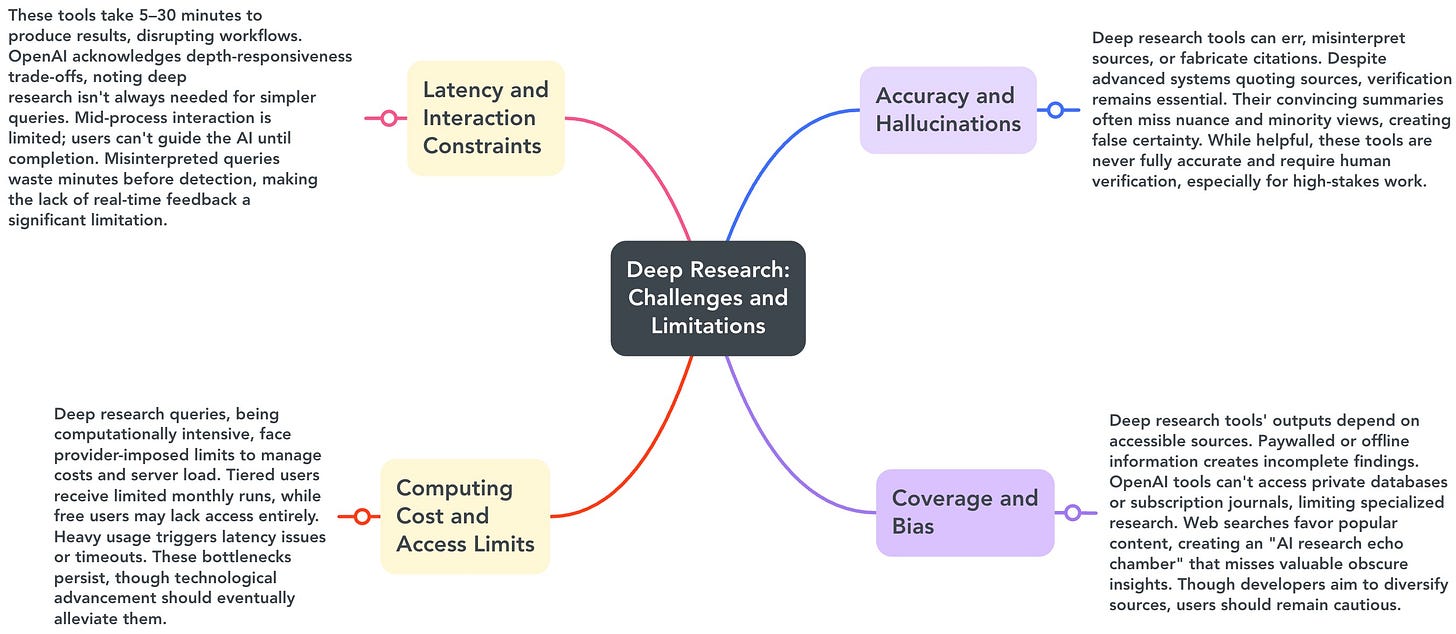

Deep research tools are transforming how research is conducted in business, finance, and academia through complex, multi-step analyses. At the same time, they expose areas that still need improvement: while these tools can generate detailed, cited reports, they still suffer from errors, slow responses, and usage limits.

Future Trends and Predictions (Next 6–12 Months)

Deep research tools are evolving rapidly. Here are the key trends shaping their future:

AI models are improving at solving complex problems. OpenAI's o3 (an iteration on o1 with more reinforcement learning applied on top of GPT-4o) marks just the beginning. Future models will likely feature better reasoning, more research customization, and context windows large enough to process hundreds of pages at once, enabling faster, more comprehensive analyses of numerous sources.
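Context window size matters because it caps how much source material a model can read in one pass. A minimal sketch, assuming each source's token count is already known and a relevance score is available: greedily pack the most relevant sources into a fixed token budget. The budgets, file names, and scores are illustrative, not the limits of any specific model.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    tokens: int       # estimated length; how it is counted is an assumption
    relevance: float  # e.g. a retrieval score in [0, 1]


def pack_context(sources: list[Source], budget: int) -> list[str]:
    """Greedily fill a context window: most relevant first, skipping any that no longer fit."""
    chosen: list[str] = []
    used = 0
    for src in sorted(sources, key=lambda s: s.relevance, reverse=True):
        if used + src.tokens <= budget:
            chosen.append(src.name)
            used += src.tokens
    return chosen


docs = [
    Source("annual_report.pdf", 60_000, 0.9),
    Source("analyst_note.html", 50_000, 0.8),
    Source("press_release.html", 30_000, 0.5),
]
print(pack_context(docs, budget=100_000))  # ['annual_report.pdf', 'press_release.html']
print(pack_context(docs, budget=200_000))  # all three fit
```

With the smaller budget the agent must drop the second most relevant source; doubling the window lets all three be analyzed together, which is the practical payoff of larger context windows.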

Multimodality is expanding AI beyond text. Leading LLMs already handle images, and advanced models like Gemini 2.0 Flash can process video. These capabilities already support research agents' web browsing, and further progress will enable visual summaries, interactive presentations, and multimedia reports combining text, visuals, and audio.

Future deep research tools will likely integrate more tightly with specialized tools, invoking them automatically for tasks that go beyond the browser. Enterprise versions will securely access private SharePoint sites, Confluence spaces, or internal wikis.
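One common way to wire up automatic tool invocation is a registry of named functions plus a dispatcher that executes whichever tool call the model chooses. The Python sketch below assumes a simple `{'name': ..., 'args': {...}}` call format and uses hypothetical tools (`web_search`, `internal_wiki`); it is not any vendor's function-calling API, and both connectors return placeholder strings.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Context:
    """Who the agent is acting for; set by the application, never by the model."""
    user_groups: frozenset[str]


TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under the name the model will use."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("web_search")
def web_search(ctx: Context, query: str) -> str:
    return f"web results for {query!r}"  # placeholder for a real search API


@tool("internal_wiki")
def internal_wiki(ctx: Context, page: str) -> str:
    # Hypothetical enterprise connector: checks the caller's permissions first.
    if "research" not in ctx.user_groups:
        raise PermissionError(f"no access to internal page {page!r}")
    return f"contents of internal page {page!r}"


def dispatch(call: dict, ctx: Context) -> str:
    """Run a tool call shaped like {'name': ..., 'args': {...}}, as a model might emit it."""
    fn = TOOLS.get(call["name"])
    if fn is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return fn(ctx, **call.get("args", {}))


analyst = Context(user_groups=frozenset({"research"}))
print(dispatch({"name": "internal_wiki", "args": {"page": "Q3 pricing"}}, analyst))
```

The access check reads permissions from `Context`, which the application supplies, rather than from the model-generated arguments; that design keeps a model from granting itself access to private SharePoint or Confluence content.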

Access to advanced research capabilities is set to expand as well: OpenAI’s CEO Sam Altman has hinted at free, limited access to Deep Research in the future, and Google DeepMind has just made a revamped version of its Deep Research tool available within the Gemini chatbot.

The Future of Knowledge Work: Human-AI Partnership

Deep research tools represent more than improved chatbots—they fundamentally transform how AI integrates into professional workflows. By shifting from passive assistant to active research partner, these tools empower professionals to make decisions that are simultaneously faster, smarter, and better-informed.

In the coming year, expect these technologies to become more powerful, accessible, and affordable. Advancements in information processing, multimodal capabilities, and workflow integration will likely expand their reach across professions and industries. The real challenge will be figuring out how to strike the right balance, combining the speed and scale of AI with the depth and critical thinking that only humans can provide. Issues like occasional latency, accuracy limitations, and the need for oversight remind us that these tools complement rather than replace human expertise.

At their best, these AI tools don’t replace human insight; they enhance it. Used wisely and responsibly, they’re on track to become indispensable partners in our ongoing pursuit of knowledge and discovery.

Many open-source agent projects are already available to build upon, and OpenAI also plans to release “reinforcement fine-tuning” options for its reasoning models. You can create your own specialized agents with custom interfaces, integrations, and industry expertise, turning these tools into autonomous task handlers. Building custom agents requires mastering the essential LLM toolkit (data curation, prompting, fine-tuning, RAG, and tool use). You can access this complete toolkit and the roadmap to becoming a certified LLM Developer in our course, ‘From Beginner to Advanced LLM Developer’.

Data Exchange Podcast

Building the Operating System for AI Agents. Google DeepMind's Chi Wang discusses AG2, an open-source platform for building sophisticated multi-agent AI systems that dramatically reduces effort in complex knowledge work.

Prompts as Functions: The BAML Revolution in AI Engineering. BAML is a domain-specific language that transforms AI prompts into structured functions with defined schemas, enabling developers to build more deterministic and maintainable applications that can adapt to new models without significant refactoring.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.