AI's biggest enterprise test case is here

Legal AI Unpacked: What Works, What Fails, What’s Next

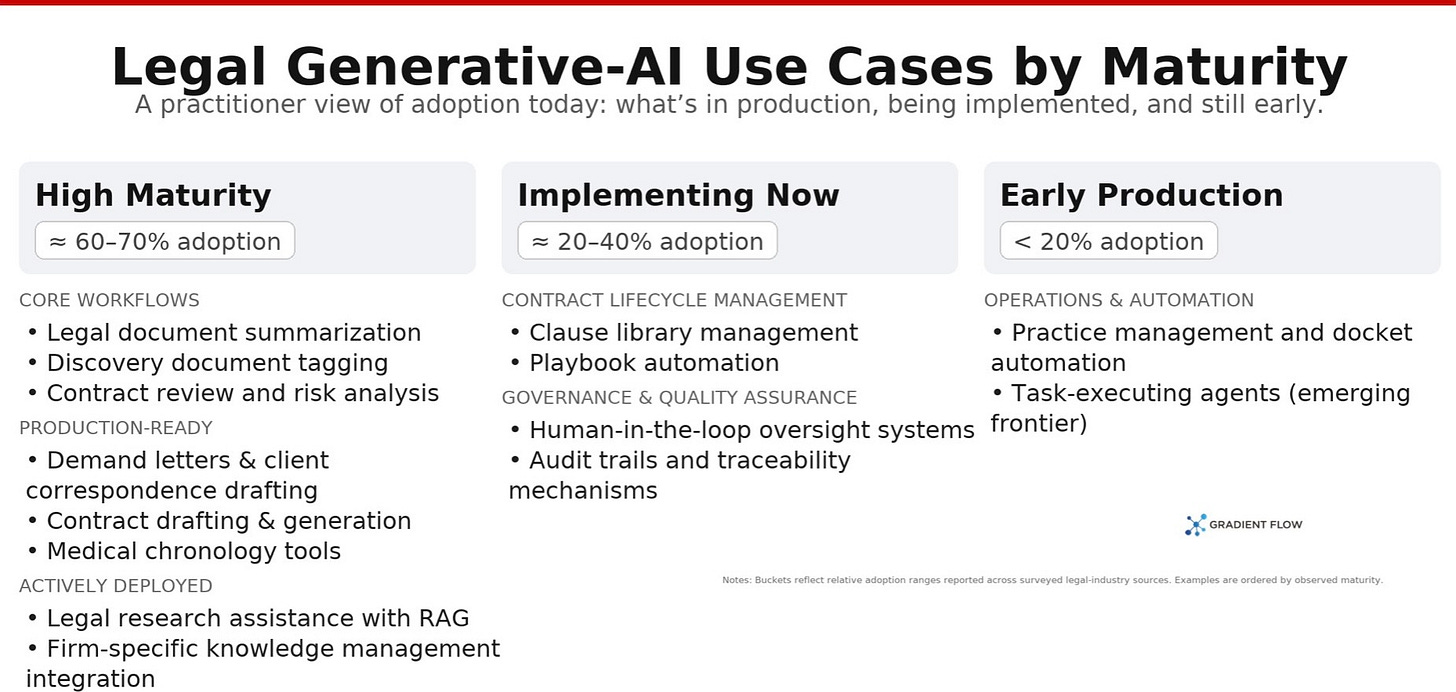

Law firms were early adopters of tools for searching and classifying large document collections, so their current enthusiasm for generative AI follows a familiar pattern. Business press coverage reinforces this interest: articles forecasting the disruption of knowledge work routinely single out legal services as particularly vulnerable to automation, given the profession’s reliance on text-intensive research, drafting, and document analysis.

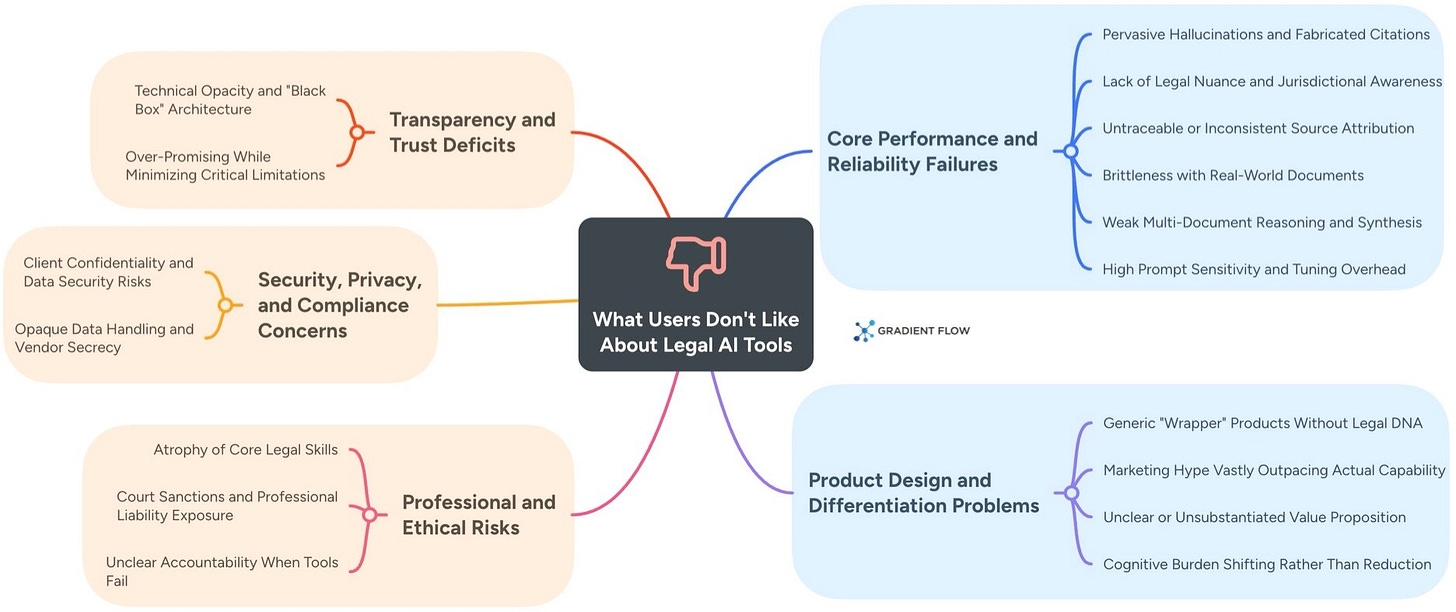

The view from online forums is much more guarded. Today’s tools still miss basic requirements for high-stakes work: they invent citations, blur jurisdictional boundaries, and falter on messy, real-world documents. Most Legal AI products are nothing more than thin layers over general-purpose models, with limited legal tuning, opaque behavior, and weak audit trails. Integration with older systems is brittle, confidentiality constraints are strict, and reliability is uneven. The prevailing pattern is cautious use for drafting, summarizing, and triage — always with human verification.

This tension between potential and peril makes the legal industry an interesting case study. While today’s foundation models are far from perfect, their adoption in legal settings offers a preview of how other high-stakes professions will grapple with the technology’s limitations. What follows maps the current landscape: where generative AI is gaining traction in legal practice today, the points at which it fails, and the engineering and governance work required to close the gap between prototype and production. For any leader considering AI’s role in knowledge work, the legal field provides an indispensable, if cautionary, guide.

Where Legal AI Works Today

Document Review & Analysis

Each of the following addresses a distinct workflow: contract review happens before deals close, summarization turns lengthy records into readable briefs, and discovery sorts evidence during litigation.

Contract Review and Risk Analysis. Law firms deploy generative AI to parse contracts, classify clauses (indemnity, termination, change-of-control), extract obligations, spot departures from standard terms, and assign risk scores. Roughly 28% of in-house teams rank this as AI’s highest-value application.

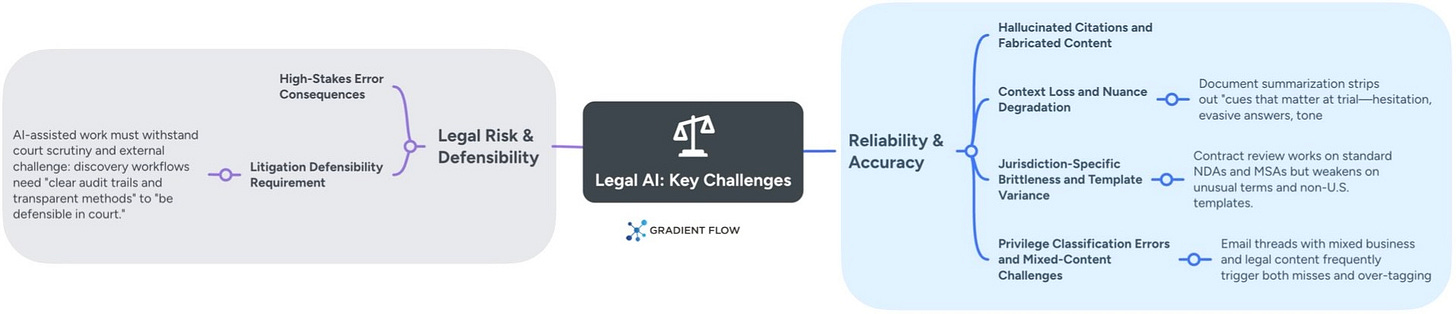

Where it breaks. Foundation models struggle with legal subtlety. Error rates climb on unusual deals and non-U.S. agreements, and poorly formatted documents trip the systems up. The real hazard is overconfidence: models generate plausible but incorrect clauses. Without legal grounding and rigorous checks, speed gains turn into liability exposure.

What’s next. Developers are building hybrid systems — LLMs anchored to legal knowledge graphs, retrieval methods tied to jurisdiction-specific sources, and independent citation checks. Emerging “agent” workflows chain tasks like intake, clause comparison, and exception reports, all with audit logs. Priorities include training on European, UK, and Asia-Pacific templates.
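To make the idea of an independent citation check concrete, here is a minimal Python sketch. It assumes a hypothetical trusted index (`VERIFIED_CITATIONS`, standing in for a citator or court database) and recognizes only U.S. Reports citations of the form “347 U.S. 483”; real citation formats are far more varied. The point is the separation of duties: the model drafts, and a deterministic checker decides which citations remain unverified.

```python
import re

# Hypothetical trusted index. A production system would query a citator or
# court database; a plain set keeps the sketch self-contained.
VERIFIED_CITATIONS = {
    "347 U.S. 483",  # Brown v. Board of Education
    "384 U.S. 436",  # Miranda v. Arizona
}

# Matches only U.S. Reports citations ("volume U.S. page").
US_REPORTS = re.compile(r"\b(\d{1,4}) U\.S\. (\d{1,4})\b")


def check_citations(draft: str) -> dict[str, list[str]]:
    """Split the citations found in a draft into verified and unverified lists."""
    found = [f"{vol} U.S. {page}" for vol, page in US_REPORTS.findall(draft)]
    return {
        "verified": [c for c in found if c in VERIFIED_CITATIONS],
        "unverified": [c for c in found if c not in VERIFIED_CITATIONS],
    }


draft = (
    "Segregated public schools are unconstitutional, 347 U.S. 483, "
    "and the same reasoning applies here, 999 U.S. 123."
)
report = check_citations(draft)
print(report)  # {'verified': ['347 U.S. 483'], 'unverified': ['999 U.S. 123']}
```

Anything in the `unverified` list would go back to a lawyer rather than into the filing.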

Legal Document Summarization. Generative AI compresses depositions, hearing transcripts, evidence files, and regulatory submissions into short summaries. Adoption is broad: 61% of practitioners now use AI for case-law summaries.

Where it breaks. Smooth prose can hide factual mistakes. Models lose context, omit critical details, or inject small errors that resurface later. Quality hinges on precise prompts, and even strong output requires verification. Summaries can also strip out cues that matter at trial — hesitation, evasive answers, tone — making them useful for initial review, not final work product.

What’s next. Tools are moving from single-document notes to multi-file synthesis across entire case records. Expect RAG pipelines with inline citations and visible reasoning chains as baseline features. Future versions will incorporate metadata — audio tempo, objection frequency — to retain nuance in deposition digests.

Discovery Document Tagging and Classification. In electronic discovery, generative AI labels documents for relevance, privilege (attorney-client or confidential), and case themes, interpreting context beyond keyword matching. Current adoption stands at 37%, with 56% planning to adopt it. Active users save one to five hours weekly; one litigation team cut first-pass review time when combining AI with human oversight.

Where it breaks. Errors carry litigation risk. Missing key documents can draw court sanctions. To be defensible in court, workflows need clear audit trails and transparent methods.

What’s next. Best practices are converging on a hybrid model: lightweight classifiers, RAG-backed explanations, and targeted human spot-checks, with every decision logged. New tools will build case timelines and narratives directly from tagged evidence. As courts issue guidance on AI-assisted discovery over the next two years, processes should standardize and adoption accelerate.
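The hybrid model can be sketched as a routing rule: confident classifier scores are tagged automatically, uncertain ones go to a reviewer, a random fraction of confident ones is spot-checked, and every decision is written out as a log line. This is a minimal illustration, assuming a classifier that already returns a relevance probability; the thresholds (`low`, `high`) and the 5% spot-check rate are hypothetical values, not recommendations.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass
class TagDecision:
    doc_id: str
    score: float  # classifier's estimated probability that the document is relevant
    label: str    # "relevant", "not_relevant", or "needs_review"
    reason: str


def route_document(doc_id: str, score: float, rng: random.Random,
                   low: float = 0.2, high: float = 0.8,
                   spot_check_rate: float = 0.05) -> TagDecision:
    """Auto-tag confident predictions; send uncertain ones, plus a random
    sample of confident ones, to a human reviewer."""
    if low < score < high:
        return TagDecision(doc_id, score, "needs_review", "uncertain score")
    if rng.random() < spot_check_rate:
        return TagDecision(doc_id, score, "needs_review", "random spot-check")
    label = "relevant" if score >= high else "not_relevant"
    return TagDecision(doc_id, score, label, "auto-tagged")


rng = random.Random(0)  # fixed seed so the spot-check sample is reproducible
scores = {"DOC-001": 0.95, "DOC-002": 0.50, "DOC-003": 0.05}
decisions = [route_document(d, s, rng) for d, s in scores.items()]
for decision in decisions:
    print(json.dumps(asdict(decision)))  # one JSON line per decision for the review log
```

The random spot-check matters for defensibility: it gives reviewers a sample of the documents the system was confident about, not only the ones it flagged.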

Document Drafting & Generation

Litigation correspondence handles adversarial communications; contract drafting serves commercial transactions; medical chronologies solve a document-processing bottleneck specific to personal injury cases.

Demand Letter and Legal Correspondence Drafting. Law firms now use AI to generate demand letters — pre-litigation settlement proposals — along with court complaints and client emails. GPT-4-class models produce usable first drafts in minutes by pulling from case facts and firm templates. EvenUp and similar vendors embed automated quality checks.

Where it breaks. Models miss persuasive nuance and make factual errors, including fabricated case citations, particularly for matters outside well-covered U.S. jurisdictions. Lawyers retain full legal responsibility and must review every output.

What’s next. Expect in-editor co-pilots (e.g., Harvey’s Word plug-in) that suggest language in real time and enforce consistency with firm knowledge bases. Builders are adding negotiation strategy, tone calibration, and links to matter-management systems so drafts reflect live case context.

Contract Drafting and Generation. AI tools now convert term sheets into draft contracts by drawing on clause libraries and house style. Robin AI and competitors can propose revisions (redlines) and adjust language for different commercial contexts, aiming to cut junior-lawyer time on routine deals.

Where it breaks. Stakes are high: contract errors create direct liability. Models sometimes insert inappropriate boilerplate, overlook jurisdiction-specific rules, or stray from firm precedent. Lawyers sign the final document and bear accountability.

What’s next. Expect co-pilot interfaces, in which AI proposes and lawyers approve, to become the default. Adoption hinges on clear version control that tracks every machine-generated change, plus integrations that surface relevant statutes during drafting. Vendors are tightening links to firm research platforms and building comprehensive audit trails.

Medical Chronology Tools. Personal injury and malpractice attorneys must construct timelines from hundreds of pages of treatment records. AI now automates this work, extracting dates, diagnoses, and treatment sequences.

Where it breaks. Errors carry weight: a missed date or diagnosis can distort case value or trial strategy. Optical-character-recognition failures and inconsistent medical notation corrupt outputs, forcing manual line-by-line fixes. Current systems also overlook subtle clinical details that establish negligence or causation — the core disputes in these cases.

What’s next. Developers are refining medical terminology in training data and plan to add confidence scores for each extracted item.
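Per-item confidence scores become useful once they drive review. The sketch below assumes an extractor that already emits dated events with a confidence value and a source page; it sorts them into a timeline and flags low-confidence items for line-by-line checking. The entries, confidence values, and the 0.9 threshold are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ChronologyEntry:
    event_date: date
    description: str
    source_page: int   # page in the medical record the item was extracted from
    confidence: float  # hypothetical extractor confidence in [0, 1]


def build_chronology(entries: list[ChronologyEntry], min_confidence: float = 0.9
                     ) -> tuple[list[ChronologyEntry], list[ChronologyEntry]]:
    """Sort extracted events by date and flag low-confidence items for manual review."""
    timeline = sorted(entries, key=lambda e: (e.event_date, e.source_page))
    flagged = [e for e in timeline if e.confidence < min_confidence]
    return timeline, flagged


entries = [
    ChronologyEntry(date(2023, 3, 14), "MRI: L4-L5 disc herniation", 212, 0.97),
    ChronologyEntry(date(2023, 2, 2), "ER visit after collision", 5, 0.99),
    ChronologyEntry(date(2023, 2, 9), "Orthopedic consult (date partly illegible in scan)", 48, 0.62),
]
timeline, flagged = build_chronology(entries)
for e in timeline:
    marker = "REVIEW" if e in flagged else "ok"
    print(f"{e.event_date.isoformat()}  p.{e.source_page:<4} {marker:<6} {e.description}")
```

Keeping the source page on every entry lets a reviewer jump straight to the scan when an OCR-damaged date is flagged.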


Legal Research & Knowledge Retrieval

The first addresses finding published legal authorities (case law, statutes); the second addresses searching a firm’s internal work product and institutional memory.

Legal Research Assistance with RAG. These systems retrieve statutes, regulations, and prior court decisions, then generate answers to legal questions. Accuracy climbs 12–18 percentage points when retrieval works well, and vendors increasingly display source documents and reasoning chains.

Where it breaks. Integrating with older document management platforms is expensive and fragile. Weak retrievers surface irrelevant or outdated material. Security controls must prevent leaks between client matters or practice groups.

What’s next. Firms are building test environments to validate retrieval accuracy and permission boundaries before wider rollout, investing in finer indexing and audit trails that log every query and response.

Firm-Specific Knowledge Management Integration. Firms are wiring generative AI into their internal document stores and matter databases so lawyers can query prior work in plain English. Answers draw on the firm’s own briefs, playbooks, and outcomes—kept inside private infrastructure—to surface similar matters, reusable clauses, and relevant precedents without exposing data externally.

Where it breaks. Connecting to legacy document systems is costly and brittle. Poor tuning can surface stale guidance or weak analogies. The stakes are high for security: role-based access and ethical walls must be precise to prevent leaks across clients or practices.

What’s next. Expect stronger indexing (document- and clause-level), tamper-evident logs of prompts and responses for audit, and cleaner APIs to plug AI into knowledge systems. Many firms are standing up sandboxes to test retrieval quality and permissions before production rollout.
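The permission boundaries these sandboxes test can be illustrated with a retrieval step that applies role-based access and ethical walls before any ranking, so blocked text never reaches the model. This is a minimal sketch: the `Document`/`User` types and matter IDs are hypothetical, and the word-overlap score stands in for a real retriever.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    matter_id: str
    text: str


@dataclass(frozen=True)
class User:
    name: str
    matters: frozenset[str]     # matters the user is staffed on
    walled_off: frozenset[str]  # matters blocked by an ethical wall


def permitted(user: User, doc: Document) -> bool:
    # The ethical wall wins even if the user is also listed on the matter.
    return doc.matter_id in user.matters and doc.matter_id not in user.walled_off


def search(user: User, query: str, corpus: list[Document], k: int = 3) -> list[Document]:
    """Filter by permissions before ranking, then rank by a toy relevance score."""
    terms = set(query.lower().split())
    visible = [d for d in corpus if permitted(user, d)]
    # Toy score: number of query terms that appear in the document.
    scored = [(len(terms & set(d.text.lower().split())), d) for d in visible]
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    return [d for _, d in ranked[:k]]


corpus = [
    Document("D1", "M-100", "indemnity cap negotiated at twelve months of fees"),
    Document("D2", "M-200", "indemnity carve-out for data breach claims"),
    Document("D3", "M-300", "indemnity clause for supplier agreement"),
]
alice = User("alice", frozenset({"M-100", "M-200"}), frozenset({"M-200"}))
results = search(alice, "indemnity cap", corpus)
print([d.doc_id for d in results])  # ['D1']
```

Filtering first, rather than post-filtering the model's answer, is the design choice that keeps walled-off material out of the prompt entirely.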

Contract Lifecycle Management

Clause Library Management and Playbook Automation. Law firms are deploying AI to manage databases of pre-approved contract clauses and recommend language for specific transactions. These systems consider deal type, jurisdiction, and counterparty details, then refine suggestions based on which drafts lawyers accept or reject. Data remains within each firm’s infrastructure.

Where it breaks. Performance varies significantly by legal specialty and software vendor. Getting reliable output often requires staff trained in prompt engineering. Accountability is murky: when AI-suggested language makes it into a contract, firms must be clear about who owns the outcome.

What’s next. Newer systems provide complete audit trails, documenting each step from prompt to final language. Firms are testing integrations with proprietary databases in sandbox environments before full deployment.
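A clause library that “refines suggestions based on which drafts lawyers accept or reject” can be sketched as a filter on deal type and jurisdiction followed by a ranking on past acceptance. The clause IDs, counts, and the smoothed acceptance rate used for ranking are assumptions for illustration, not a documented vendor method.

```python
from dataclasses import dataclass


@dataclass
class Clause:
    clause_id: str
    clause_type: str     # e.g. "limitation_of_liability"
    deal_types: set[str]
    jurisdictions: set[str]
    accepted: int = 0    # times lawyers kept this suggestion
    rejected: int = 0    # times lawyers discarded it

    def acceptance_rate(self) -> float:
        # Laplace smoothing: a clause with no history starts at 0.5.
        return (self.accepted + 1) / (self.accepted + self.rejected + 2)


def recommend(library: list[Clause], clause_type: str, deal_type: str,
              jurisdiction: str) -> list[Clause]:
    """Return pre-approved clauses that fit the deal, best acceptance rate first."""
    candidates = [
        c for c in library
        if c.clause_type == clause_type
        and deal_type in c.deal_types
        and jurisdiction in c.jurisdictions
    ]
    return sorted(candidates, key=lambda c: c.acceptance_rate(), reverse=True)


def record_feedback(clause: Clause, kept: bool) -> None:
    """Update the clause's history after a lawyer accepts or rejects it."""
    if kept:
        clause.accepted += 1
    else:
        clause.rejected += 1


library = [
    Clause("LOL-STD", "limitation_of_liability", {"saas", "services"}, {"NY", "DE"}, 40, 10),
    Clause("LOL-STRICT", "limitation_of_liability", {"saas"}, {"NY"}, 5, 15),
    Clause("LOL-UK", "limitation_of_liability", {"saas"}, {"England"}, 30, 2),
]
picks = recommend(library, "limitation_of_liability", "saas", "NY")
print([c.clause_id for c in picks])  # ['LOL-STD', 'LOL-STRICT']
```

Because the ranking signal is just stored counts, each recommendation can be explained after the fact, which fits the audit-trail requirement above.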

Law is the stress test for generative AI: if your system can survive citations, permissions, and audit trails, it can survive anywhere.

Governance & Quality Assurance

The first addresses documentation and record-keeping (creating an audit trail after the fact), while the second covers real-time supervision (active human oversight during AI use).

Audit Trails and Traceability Mechanisms. Legal platforms are recording AI interactions — prompts, model versions, outputs — often with cryptographic hashing. This creates audit trails for compliance reviews and client questions about how conclusions were reached.

Where it breaks. This is not yet standard practice. Detailed records add to storage budgets, and vendors lack common export formats. Extracting data for regulators or moving between tools requires custom integration work.

What’s next. Standardized prompt logs are emerging. Firms are also categorizing systems by autonomy level (assistant, advisor, agent) and setting oversight requirements to match.
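The cryptographic hashing mentioned earlier in this section is often done as a hash chain: each record’s hash covers its own content plus the previous record’s hash, so editing or deleting an earlier entry breaks verification. The sketch below uses Python’s standard `hashlib` and `json`; the record fields and `model-v1` label are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def append_record(log: list[dict], prompt: str, model_version: str, output: str) -> dict:
    """Append an interaction record whose hash covers its content and the previous hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "model_version": model_version,
        "output": output,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; altering or removing an earlier record breaks the chain."""
    prev = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev:
            return False
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev = record["hash"]
    return True


log: list[dict] = []
append_record(log, "Summarize the Smith deposition", "model-v1", "Witness confirmed the delivery date.")
append_record(log, "List exhibits cited", "model-v1", "Exhibits 4, 7, 12")
print(verify_chain(log))  # True
log[0]["output"] = "edited after the fact"
print(verify_chain(log))  # False
```

A chain alone cannot detect deletion of the most recent record; one common remedy is to store the latest hash somewhere separate from the log.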

Human-in-the-Loop Oversight Systems. Production systems require human review. Attorneys validate outputs and provide corrections, keeping systems aligned with legal standards while generating feedback that improves them over time.

Where it breaks. Expert time is scarce. Without clear confidence scores and explicit flags for uncertain output, reviewers can “rubber-stamp” results, weakening quality control.

What’s next. New implementations add role-based permissions, escalation logic for ambiguous cases, and feedback quality metrics. Firms are formalizing governance — defining who reviews, how work is attributed, and what documentation is required.

Workflow Automation (Early Production)

The first covers basic workflow automation — tracking deadlines and managing intake. The second describes autonomous agents that execute multi-step tasks independently.

Practice Management and Docket Automation. Generative AI automates routine workflows and watches court calendars for new filings tied to specific case numbers, sending alerts to the team. Platforms like Forlex centralize tasks, cut manual tracking, and lower the risk of missed deadlines. Many firms also use AI for intake and triage, gathering key facts during onboarding.

Where it breaks. Missing a deadline can constitute professional negligence, so error rates must approach zero. Much legal software dates from the pre-cloud era, which makes integration difficult. Data security is paramount, and intake systems can be tripped up by ambiguous language or incorrect assumptions.

What’s next. Development will move toward tools that flag workflow delays and surface operational data for firm management. Intake systems will blend conversational interfaces with structured forms and decision trees to reduce errors.
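The calendar watching described in this section reduces to a diff: compare the latest docket entries for watched case numbers against those already seen, and alert on anything new. This minimal sketch assumes the entries have already been fetched from whatever court-records source the firm uses (fetching is not shown); the case numbers and filing titles are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Filing:
    case_number: str
    entry_no: int  # docket entry number assigned by the court
    title: str


def new_filings(latest: list[Filing], seen: set[tuple[str, int]],
                watched: set[str]) -> list[Filing]:
    """Return filings on watched cases not seen before, marking them as seen."""
    fresh = []
    for f in latest:
        key = (f.case_number, f.entry_no)
        if f.case_number in watched and key not in seen:
            fresh.append(f)
            seen.add(key)
    return fresh


def alert(f: Filing) -> str:
    # Stand-in for an email or chat notification to the matter team.
    return f"[{f.case_number}] docket entry {f.entry_no}: {f.title}"


watched = {"1:24-cv-01234"}
seen = {("1:24-cv-01234", 17)}
latest = [
    Filing("1:24-cv-01234", 17, "Answer"),
    Filing("1:24-cv-01234", 18, "Motion to Compel"),
    Filing("1:24-cv-09999", 3, "Notice of Appearance"),  # not a watched case
]
alerts_due = new_filings(latest, seen, watched)
for f in alerts_due:
    print(alert(f))  # [1:24-cv-01234] docket entry 18: Motion to Compel
```

Computing the response deadline that a new filing triggers is a separate, rules-heavy step and is deliberately left out of this sketch.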

Task-Executing Agents. Newer tools automate sequences rather than single tasks: extracting data, routing approvals, coordinating reviews across teams, and escalating problems to lawyers. Early users report lower costs for repetitive work by linking steps that previously required human handoffs.

Where it breaks. The difficulty is accountability. When a five-step process produces an incorrect result, pinpointing the failure — and assigning responsibility — grows complicated. Mistakes in early steps cascade forward. In practice, these agents sit at the frontier rather than the mainstream.

What’s next. Expect stricter governance: audit logs for every decision, permission controls, and isolated testing environments. Most firms will move incrementally — deploying assistive tools first, then advisory systems, and finally autonomous agents — starting in low-risk practice areas where mistakes carry limited consequences.
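The accountability problem with multi-step agents is easier to manage when every step is logged and validated before the next one runs. The sketch below is a minimal illustration of that pattern, not any vendor’s agent framework: step names, the naive counterparty extraction, and the 100,000 approval threshold are hypothetical. A failed check stops the run and escalates, so an early error does not cascade forward.

```python
from typing import Callable

# A step is (name, action, check). Actions return an updated state; checks validate it.
Step = tuple[str, Callable[[dict], dict], Callable[[dict], bool]]


def run_pipeline(steps: list[Step], state: dict) -> tuple[dict, list[dict]]:
    """Run steps in order, log each one, and stop at the first failed check
    so a bad intermediate result is escalated instead of cascading forward."""
    trace: list[dict] = []
    for name, action, check in steps:
        state = action(dict(state))  # each step receives a copy of the prior state
        ok = check(state)
        trace.append({"step": name, "ok": ok, "state": dict(state)})
        if not ok:
            state["escalate_to"] = "supervising lawyer"
            break
    return state, trace


def extract_counterparty(s: dict) -> dict:
    # Naive extraction: the text between " with " and the next comma.
    _, _, rest = s["contract_text"].partition(" with ")
    s["counterparty"] = rest.split(",")[0].strip()
    return s


def route_for_approval(s: dict) -> dict:
    s["approver"] = "legal-ops" if s["value"] < 100_000 else "partner"
    return s


steps: list[Step] = [
    ("extract_counterparty", extract_counterparty, lambda s: bool(s["counterparty"])),
    ("route_for_approval", route_for_approval, lambda s: s["approver"] in {"legal-ops", "partner"}),
]

good_state, good_trace = run_pipeline(
    steps, {"contract_text": "Services agreement with Acme Corp, effective on signing", "value": 250_000}
)
bad_state, bad_trace = run_pipeline(
    steps, {"contract_text": "Services agreement, effective on signing", "value": 250_000}
)
print([(t["step"], t["ok"]) for t in good_trace])  # [('extract_counterparty', True), ('route_for_approval', True)]
print([(t["step"], t["ok"]) for t in bad_trace])   # [('extract_counterparty', False)]
```

The trace records which step failed and what state it produced, which is the information needed to assign responsibility when a five-step run goes wrong.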

Ben Lorica edits the Gradient Flow newsletter and hosts the Data Exchange podcast. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, while also serving as the Strategic Content Chair for AI at the Linux Foundation. You can follow him on LinkedIn, X, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.