Why AI Benchmarks Don't Predict Consumer Success: The Gemini Paradox

As someone who works with AI, I rarely limit my toolkit to a single provider. I regularly switch between models from Google (Gemini), OpenAI (GPT-X), Anthropic (Claude), and xAI (Grok). I also work with increasingly capable open-weight models from Alibaba (Qwen), DeepSeek, and Moonshot AI (Kimi). The right model is always use-case specific, but before starting a task, I, like many others, glance at the LM Arena leaderboard to see which model families are worth considering. Today, if forced to narrow my toolkit to three providers, I'd choose Google, OpenAI, and Anthropic, but with open-weight models advancing at their current pace, that answer could look very different in six months.

Here's what puzzles me: Gemini has dominated or nearly dominated LM Arena rankings for months, yet ChatGPT remains the default choice for most regular users, meaning those who interact with AI through web interfaces or mobile apps rather than APIs. This disconnect hits close to home when I consider how I use the consumer versions of these models. Despite Gemini's technical superiority, I gravitate toward ChatGPT's web interface while avoiding Gemini's and Claude's consumer-facing platforms entirely, preferring Google AI Studio and the Anthropic Console, respectively. To make sense of this apparent contradiction between technical benchmarks and consumer adoption, I sifted through user reviews, discussion threads, and social media commentary through August 6, 2025 (just before GPT-5's announcement), looking for reasons why Gemini struggles to translate technical excellence into consumer market success.

How Consumers Experience AI

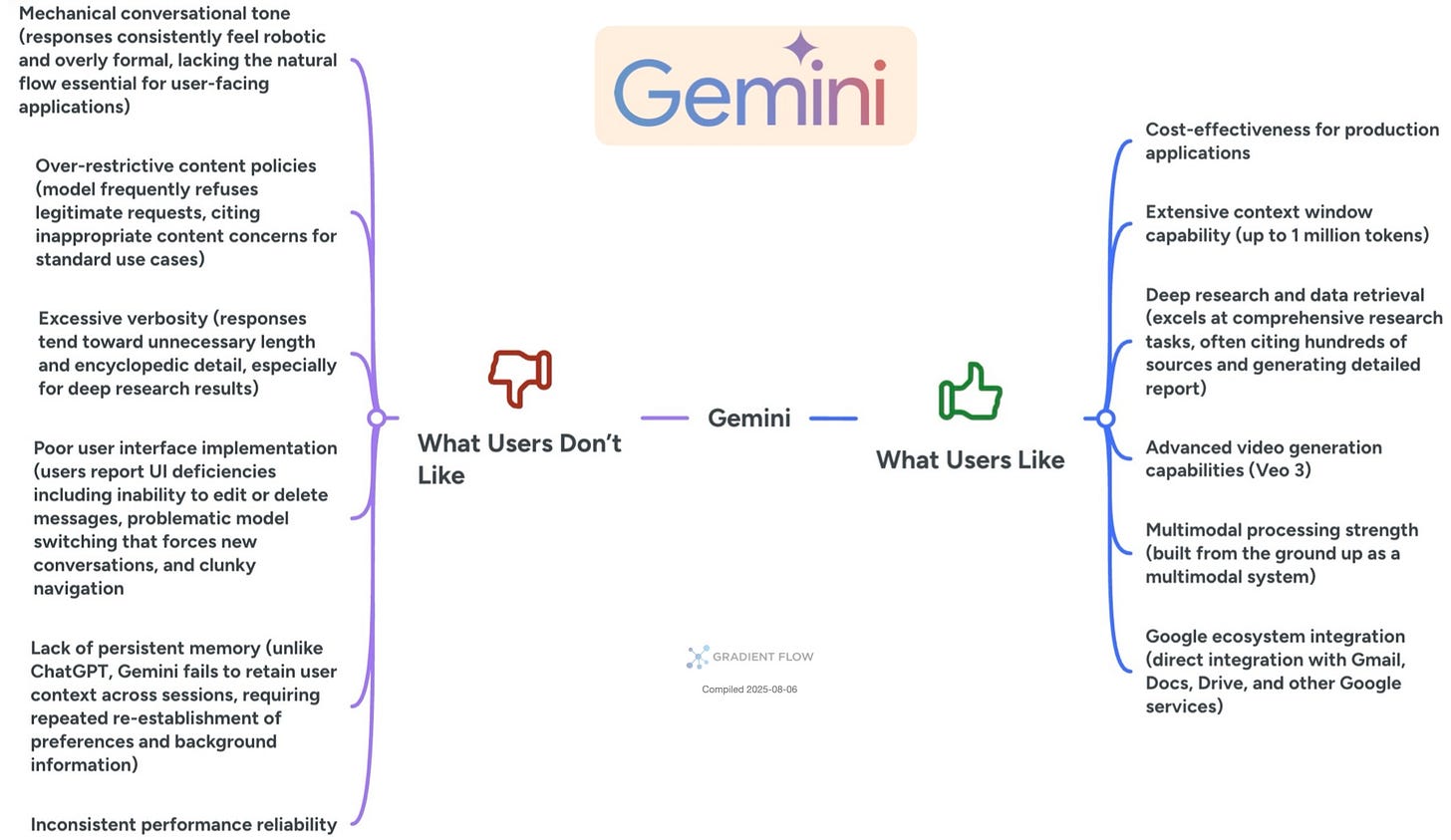

Google's Gemini is a fascinating contradiction in user perception. Its technical capabilities impress power users — the million-token context window handles entire codebases without breaking a sweat, while Veo 3 produces genuinely impressive text-to-video content with synchronized audio. Its native multimodal processing, built in from the ground up, seamlessly handles mixed media in a way I now find indispensable. For organizations already embedded in Google's ecosystem, the native integration with Gmail, Docs, and Drive creates compelling workflow advantages. However, these technical strengths are consistently undermined by fundamental user experience flaws. The inability to edit or delete messages, the requirement to start new conversations when switching models, and the persistently mechanical, encyclopedic tone create friction that drives users away. The overly restrictive content policies and verbose, unfocused responses — particularly in Deep Research reports — compound these frustrations, leaving users with a technically superior tool wrapped in an inferior interface.

ChatGPT, on the other hand, has built its dominance on user experience. It excels at maintaining natural, engaging dialogue that feels less like a machine and more like a capable partner. Its persistent memory feature, which retains context and user preferences across sessions, creates a personalized and continuous experience that users value highly. The interface is a model of refinement: navigation is intuitive, managing projects is simple, and core functions just work as expected. Its image generation is slower than some rivals', but having frequently compared results across ChatGPT, Gemini, and Grok, I almost always opt for ChatGPT because of its superior image quality and text rendering. The trade-offs are a smaller context window and a higher price point for premium tiers. For the average consumer, however, these limitations are a small price to pay for a polished, reliable, and intelligent-feeling tool.

With the new GPT-5 update, model selection has been streamlined through a default router, and while legacy models have returned after a brief absence, the community is still evaluating the impact on performance. Throughout these model updates, the interface has fortunately retained its clean and familiar feel.

Anthropic’s Claude has carved out a distinct niche as the professional’s choice, excelling where precision and quality are paramount. It is widely regarded as the best-in-class model for coding, consistently producing higher-quality code with fewer errors. For writers, its ability to adopt and maintain a specific authorial voice is invaluable for creating on-brand content. Claude’s tone strikes a balance between ChatGPT’s affability and Gemini’s formality, projecting a professional helpfulness that resonates in business contexts. This focus comes with clear trade-offs for the consumer market. Claude is the most expensive of the three, lacks competitive image or video generation, and has no persistent memory. This suggests a deliberate strategy by Anthropic to target the high-end enterprise and developer markets rather than compete for mass-market adoption.

Matching Models to Real-World Needs

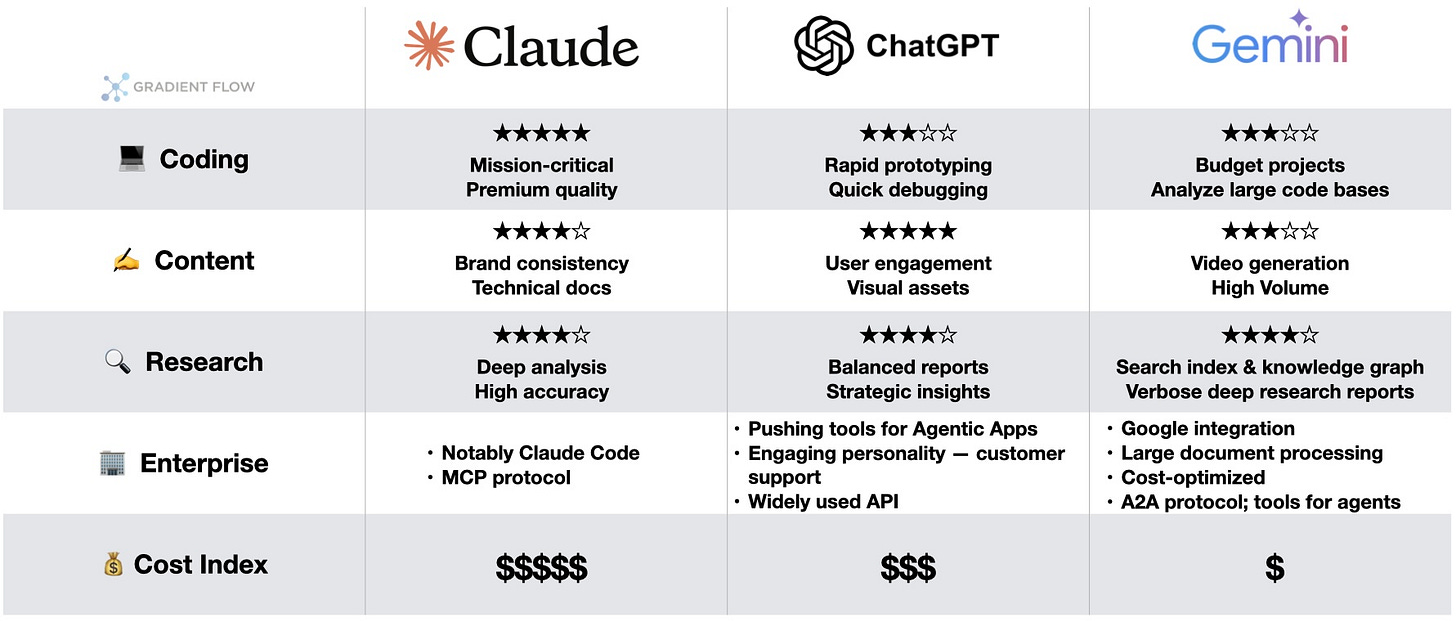

When selecting a model for technical or development work, a clear hierarchy of strengths emerges. For mission-critical software projects where code quality and sophisticated architecture justify a premium price, Claude is the standout choice; its superior output can save significant time on debugging and refinement. For more budget-sensitive or high-volume coding tasks, Gemini Flash offers a compelling alternative, providing adequate performance at a fraction of the cost. ChatGPT finds its niche in rapid prototyping and iterative development, where its fluid conversational interface and strong user experience reduce friction. And for analyzing massive codebases or document sets, Gemini’s unparalleled million-token context window makes it the only option for holistic, single-pass analysis.
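For readers who route requests programmatically, the hierarchy above can be written down as a simple lookup table. The following is a minimal sketch, not a recommendation engine: the `Task` categories, the `pick_model` helper, and the string labels are all hypothetical names introduced for illustration, and the labels are model families rather than real API model identifiers.

```python
from enum import Enum


class Task(Enum):
    """Hypothetical task categories taken from the paragraph above."""
    MISSION_CRITICAL_CODE = "mission-critical software"
    HIGH_VOLUME_CODE = "budget-sensitive or high-volume coding"
    RAPID_PROTOTYPING = "rapid prototyping and iteration"
    LARGE_CONTEXT_ANALYSIS = "massive codebase or document analysis"


# Illustrative labels only; these are model families, not API model IDs.
RECOMMENDED = {
    Task.MISSION_CRITICAL_CODE: "Claude",
    Task.HIGH_VOLUME_CODE: "Gemini Flash",
    Task.RAPID_PROTOTYPING: "ChatGPT",
    Task.LARGE_CONTEXT_ANALYSIS: "Gemini",
}


def pick_model(task: Task) -> str:
    """Return the model family suggested in the text for a given task."""
    return RECOMMENDED[task]


if __name__ == "__main__":
    for task in Task:
        print(f"{task.value}: {pick_model(task)}")
```

In practice a table like this would need revisiting often, since the rankings behind it shift with every model release.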

For content creation and research, the decision hinges on the specific nature of the task. Claude’s talent for stylistic adaptation makes it the go-to for maintaining a consistent brand voice in marketing copy or documentation. For generating visual assets like infographics or social media graphics, ChatGPT generally produces higher-quality results. Gemini’s Veo 3, in turn, opens up new possibilities for short-form video. In research, each model serves a different need: Gemini for exhaustive, deeply sourced reports (if you can tolerate the length); ChatGPT for balanced, readable summaries suitable for strategic decision-making; and Claude for precise, accurate analysis of complex technical topics. Understanding these nuances helps explain why different user communities gravitate toward different platforms, regardless of benchmark scores.

Why User Experience Trumps Benchmarks

My goal was to understand the disconnect between AI leaderboards and consumer loyalty, particularly why the technically impressive Gemini lags behind ChatGPT. The analysis of user sentiment and my own experience point to a clear conclusion: this is not a failure of model capability, but of product execution. The consumer market rewards more than just raw power; it values usability, conversational quality, and a polished experience. While Google has the technical foundation and distribution via Android to be a dominant force, it must invest seriously in fixing the user-facing issues described above. The challenge is steep, as ChatGPT's brand has become so strong that many consumers refer to any AI interaction as "chatting with ChatGPT," a powerful moat that technical specs alone cannot cross.

For Google, several strategic improvements could make a significant difference:

Refine Deep Research: The current default reports are often too long to be actionable.

Introduce a "Search+" Feature: Both ChatGPT and Claude offer a lightweight web search integration for quick, grounded answers. While this middle-ground search capability exists for developers in AI Studio, it's conspicuously absent from the main Gemini app, where users are forced to choose between a basic query and a full-blown deep research project.

Fix the Interface: Basic features like editing messages and seamless model switching (without having to start new conversations) are table stakes for a modern chat application.

Leverage Its Strengths: The massive context window and superior multimodality are killer features that feel underutilized in the current consumer product.

Improve Image Generation: Image generation may be a secondary feature, but ChatGPT’s slower, higher-quality output wins on the dimension that matters most to users: the final result.

Anthropic appears to have made a strategic decision to focus on enterprise markets, investing heavily in Claude Code and professional tools rather than consumer features. This positioning makes sense given their pricing structure and technical strengths, though it cedes the consumer market to competitors. The absence of image generation capabilities and persistent memory features reinforces this enterprise-first strategy.

In consumer AI, benchmarks impress—but user experience wins the market

For consumers choosing between these AI apps today, the decision ultimately comes down to prioritizing either technical capability (Gemini), user experience (ChatGPT), or professional-grade precision (Claude) — a choice that benchmarks alone cannot resolve.

Setting Grok aside, another potentially formidable consumer AI app may start disrupting this landscape in the months ahead: Meta.ai. Under Mark Zuckerberg's personal leadership, Meta has launched an extraordinary hiring campaign for its new AI division. With billions of users already inside Meta's ecosystem of Facebook, Instagram, and WhatsApp, Meta.ai could rapidly emerge as an essential AI assistant in consumers' toolkits, provided it can sidestep Gemini's UX missteps.


Ben Lorica edits the Gradient Flow newsletter and hosts the Data Exchange podcast. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, while also serving as the Strategic Content Chair for AI at the Linux Foundation. You can follow him on LinkedIn, X, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.