Agentic AI: Challenges and Opportunities

Navigating the Complex World of AI Agents

Last year, the buzz in the AI community revolved around the concept of AI co-pilots - systems designed to work alongside humans, assisting them in tasks and decision-making processes. These co-pilots, such as GitHub Copilot for programming assistance and Grammarly for writing, focused on augmenting human capabilities while maintaining human control and responsibility. They were reactive, responding to human inputs and providing suggestions and recommendations.

This year, more and more developers are talking about AI agents - autonomous or semi-autonomous systems capable of handling a wider range of tasks and making decisions on their own. Unlike co-pilots, agents have a higher degree of autonomy and can take proactive actions based on their goals and understanding of the environment. They can complete tasks without constant human intervention, learning and adapting based on their interactions and experiences.

AI Agents in the Enterprise: Early Examples

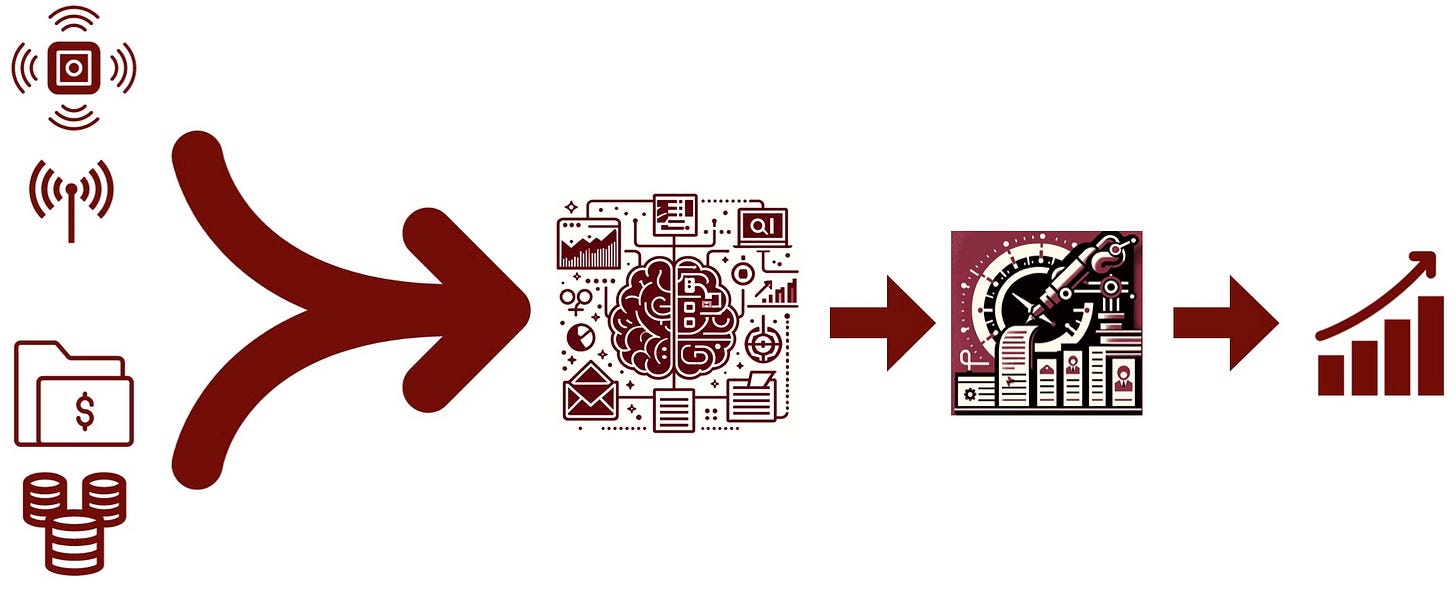

Recent enterprise examples of AI Agents, particularly ones backed by foundation models, are demonstrating the potential to automate and streamline various business processes. These agents are being used for tasks such as manufacturing monitoring, where they analyze data from machines and processes, cross-reference it with guidelines and standard operating procedures, and generate reports on any deviations. They are also being employed for auditing purposes, combining financial documents with external data to provide insights, and for lead scoring, where they research leads, compare them to ideal customer profiles and offerings, and provide sales representatives with talking points and initial email drafts.
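The manufacturing monitoring case can be made concrete with a small sketch of the cross-referencing step: compare machine readings against limits taken from a standard operating procedure and report the deviations. This is only an illustration of the kind of deterministic check an agent might call as a tool. The metric names, limits, and the `find_deviations` helper are all hypothetical, and the foundation-model part (reading the SOP, writing the narrative report) is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical SOP limits; names and values are illustrative only.
SOP_LIMITS = {
    "temperature_c": Limit(60.0, 80.0),
    "pressure_bar": Limit(1.0, 2.5),
}


def find_deviations(readings, limits=SOP_LIMITS):
    """Return one report line per reading that falls outside its SOP limit."""
    report = []
    for machine, metric, value in readings:
        limit = limits.get(metric)
        if limit is None:
            continue  # no guideline for this metric, so nothing to compare
        if not (limit.low <= value <= limit.high):
            report.append(
                f"{machine}: {metric}={value} outside [{limit.low}, {limit.high}]"
            )
    return report


# Made-up readings as (machine, metric, value) tuples.
readings = [
    ("press-1", "temperature_c", 72.0),
    ("press-2", "temperature_c", 91.5),
    ("press-2", "pressure_bar", 0.7),
]
deviations = find_deviations(readings)
for line in deviations:
    print(line)
```

In a real deployment the limits would come from the documents the agent retrieves rather than a hard-coded dictionary, and the list of deviations would be handed to the model to draft the report.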

Many enterprise AI agent applications share three steps: collecting data efficiently, integrating it from multiple sources, and analyzing the aggregated data to produce actionable insights. By leveraging foundation models and machine learning, these agents can process large volumes of data, identify patterns, and provide recommendations, essentially automating and augmenting the role of an analyst. Improvements in the underlying large language models (LLMs) could further enhance the quality and consistency of the final analyses, making these agents more reliable for enterprises looking to save time and resources on repetitive tasks.

Navigating the world of AI Agents

As I explore the world of AI agents, I find myself navigating a complex landscape of architectural classifications, functional categories, and modality-specific capabilities. Single-agent architectures, suitable for well-defined tasks, coexist with multi-agent architectures that enable collaborative problem-solving in dynamic environments. Agents can manipulate objects in physical environments, like industrial robots, or operate within virtual realms, such as in gaming and simulations.

The rise of multimodal agents, capable of processing and integrating information from multiple sensory inputs, opens up new possibilities for holistic understanding and interaction. Cross-modal understanding agents take this a step further, enhancing generalist capabilities by responding to inputs from various sensory channels. Interactive AI agents engage users in natural language conversations, while ethical agents adhere to guidelines to ensure responsible decision-making.

Moreover, the emergence of iterative and agentic workflows, where AI agents engage in a process of writing, thinking, critiquing, and refining their output, is changing the way these systems operate. This approach, which mimics the human writing process, has been shown to produce significantly better results than traditional 'zero-shot prompting', where the model generates its answer in a single pass.

One simple yet effective design pattern in agentic workflows is reflection, where an AI agent critiques its own output, identifies strengths and weaknesses, and uses this feedback to generate an improved result.
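A minimal sketch of a reflection loop is shown here, assuming the model is reachable through two plain functions. The names `reflect`, `toy_generate`, and `toy_critique` are hypothetical, and the toy functions are deterministic stand-ins for real model calls so that the control flow can be run as-is.

```python
from typing import Callable


def reflect(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Draft, critique, and revise until the critic has no feedback left."""
    draft = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if not feedback:
            break  # the critic is satisfied, so stop revising
        # Feed the previous draft and the critique back into the generator.
        draft = generate(
            f"{task}\n\nPrevious draft:\n{draft}\n\nFeedback:\n{feedback}"
        )
    return draft


def toy_generate(prompt: str) -> str:
    # Stand-in for a model call: adds an example only when feedback asks for one.
    if "add an example" in prompt:
        return "Reflection means self-critique. Example: review your own draft."
    return "Reflection means self-critique."


def toy_critique(task: str, draft: str) -> str:
    # Stand-in critic: returns an empty string when it has nothing to add.
    return "" if "Example:" in draft else "add an example"


result = reflect("Explain reflection", toy_generate, toy_critique)
print(result)
```

The `max_rounds` cap is a design choice rather than part of the pattern itself: it keeps the loop from running indefinitely when the critic never becomes satisfied.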

As the field of AI agents continues to evolve, new tools and frameworks are emerging to support their development and deployment. CrewAI, for example, is an open-source framework that enables the orchestration of role-playing, autonomous agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a platform for building multi-agent systems, whether for smart assistants, automated customer service, or research teams.

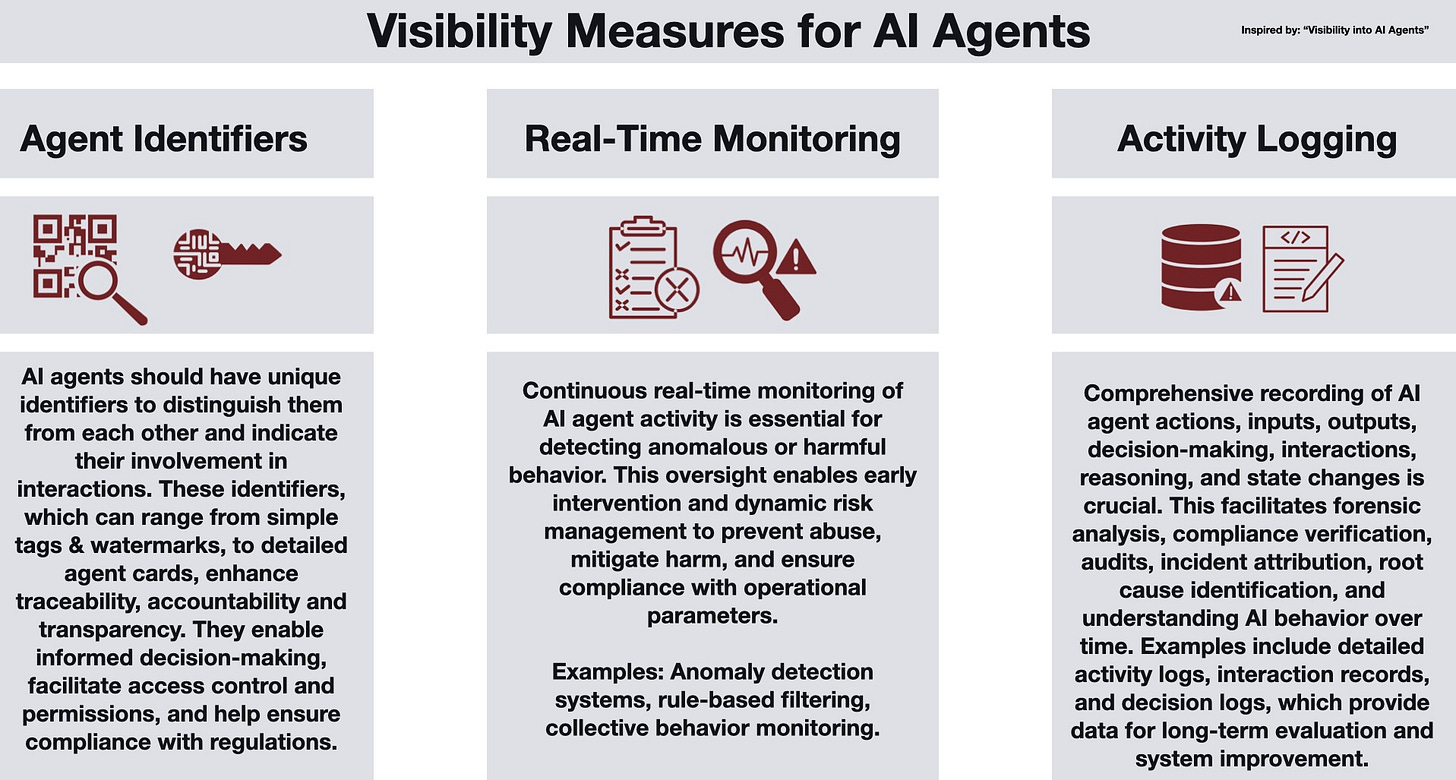

At the end of the day, agents are software applications that involve machine learning. This means you’ll need the MLOps systems and tools in place to ensure reliability, reproducibility, and quality.

With the increasing autonomy and complexity of AI agents, visibility measures become crucial for ensuring accountability, precision, and trust. Agent identifiers, such as digital signatures or agent cards, enhance traceability and transparency by linking actions and outputs to specific agents. Real-time monitoring systems continuously analyze agent activity to detect anomalous or harmful behavior, enabling early intervention and risk management. Comprehensive activity logging facilitates forensic analysis, impact assessment, and compliance verification.
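One way to picture agent identifiers combined with activity logging is a log entry that carries a signature tied to the agent that produced it. The sketch below uses an HMAC from Python's standard library as a simple stand-in for a digital signature. The agent ID, key, and the helper names `sign_entry` and `verify_entry` are hypothetical, and a production system would more likely use asymmetric signatures with managed keys.

```python
import hashlib
import hmac
import json


def sign_entry(agent_id: str, action: str, key: bytes) -> dict:
    """Build a log entry whose signature ties the action to one agent's key."""
    payload = json.dumps({"agent_id": agent_id, "action": action}, sort_keys=True)
    signature = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_entry(entry: dict, key: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = hmac.new(key, entry["payload"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, entry["signature"])


key = b"demo-key-for-agent-7"  # hypothetical per-agent secret
entry = sign_entry("agent-7", "sent_email", key)

# Simulate someone editing the log after the fact.
tampered = {**entry, "payload": entry["payload"].replace("sent_email", "deleted_file")}

print(verify_entry(entry, key))     # original entry checks out
print(verify_entry(tampered, key))  # edited entry fails verification
```

Entries like these can be appended to an activity log, so that a later audit can confirm which agent took which action and detect entries that were altered.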

Researchers in the AI community are paying close attention to the challenges and opportunities presented by agentic AI. Generalization remains a significant hurdle, as developing agents that can adapt their skills and knowledge to new situations is essential for practical real-world applications. Continuous learning is another key area of focus, enabling agents to improve their performance over time in response to changing environments.

Safety and ethical concerns are at the forefront of the conversation, as the increasing autonomy of AI agents raises questions about potential misuse and unintended consequences. Addressing these issues is crucial for maintaining public trust and ensuring the responsible deployment of AI agents.

Despite the challenges, the potential of AI agents is immense. New benchmarks, such as AgentBench, provide standardized metrics for evaluating and comparing the capabilities of different agents, driving innovation and improvement. The use of large language models as foundations for agentic AI is another exciting trend, harnessing their robust language understanding and generation capabilities to enable more sophisticated interactions and decision-making processes.

AI agents will play an increasingly significant role in shaping the way we interact with technology, from personal assistants to autonomous systems in industries like healthcare, finance, and transportation. As we navigate this complex landscape, it is crucial that we prioritize the development of safe and responsible AI systems by establishing robust ethical guidelines, ensuring transparency in decision-making processes, and continuously monitoring and evaluating the performance of AI agents to identify and mitigate potential risks.

As AI agents become more autonomous and adaptable, we can expect instances of surprising and delightful behavior, where these systems solve problems or complete tasks in ways that were not explicitly programmed or anticipated by their creators. However, we must approach this emergent behavior with caution, ensuring that AI agents remain aligned with human values and goals. By fostering collaboration between researchers, developers, policymakers, and the public, we can work towards unlocking the full potential of AI agents to benefit society while minimizing unintended consequences.

Data Exchange Podcast

LLMs for Data Access. In this episode, Gunther Hagleitner, co-founder of Waii, discusses how their API enables businesses to integrate text-to-SQL functionality into their products, revolutionizing data interaction. The conversation explores the potential of this technology to make data more accessible and empower data-driven decision-making across organizations.

Monthly Roundup: Llama 3, Agents, Evaluation Metrics, Cyc, TikTok, and more. Paco Nathan and I discuss recent developments in large language models, the rise of AI agents, and the limitations of leaderboards in evaluating AI models. We also touch upon the ethical implications of AI development and remember Doug Lenat's pioneering work on Cyc and generative AI approaches.

Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Twitter, Reddit, or Mastodon. This newsletter is produced by Gradient Flow.