AI Agents: 10 Key Areas You Need to Understand

1. GUI-Based Interaction

AI agents built on foundation models increasingly interact with software through graphical user interfaces (GUIs), much as a human does with a mouse and keyboard. This contrasts with older approaches that were limited to bespoke or specialized APIs. Frameworks like OpenAI Operator, CogAgent, and Skyvern exemplify this trend.
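To make the observe-then-act idea concrete, here is a minimal sketch of an agent locating a button on screen and clicking it. Everything in it is hypothetical: `FakeScreen`, `Element`, and `click_by_label` are toy stand-ins for illustration, not the API of Operator, CogAgent, Skyvern, or any other framework. A real agent would work from screenshots and a vision-capable model rather than a ready-made list of labeled elements.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Element:
    """One clickable item the agent can 'see' (hypothetical structure)."""
    label: str
    x: int
    y: int


class FakeScreen:
    """Toy stand-in for a real GUI: a fixed list of labeled elements."""

    def __init__(self, elements: List[Element]) -> None:
        self.elements = elements
        self.clicks: List[Tuple[int, int]] = []  # record of simulated clicks

    def observe(self) -> List[Element]:
        # A real agent would capture and interpret a screenshot here.
        return list(self.elements)

    def click(self, x: int, y: int) -> None:
        # A real agent would send a mouse event here.
        self.clicks.append((x, y))


def click_by_label(screen: FakeScreen, label: str) -> bool:
    """Observe the screen, find the element with this label, and click it.

    Returns False when no matching element is visible, so the caller
    can decide whether to retry, scroll, or give up.
    """
    for element in screen.observe():
        if element.label.lower() == label.lower():
            screen.click(element.x, element.y)
            return True
    return False
```

Returning `False` instead of raising keeps the "element not found" case explicit, which matters because dynamic or confusing layouts are where GUI agents tend to struggle.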

Why It’s Important

Broad Applicability: Most existing software lacks robust APIs but offers GUIs for human use. Enabling AI to “see” the screen and “click” the buttons expands automation possibilities to nearly any platform, including legacy systems, complex web apps, and desktop tools.

Reduced Integration Overhead: Developers do not have to build custom integrations for every tool or website; the agent interfaces directly with the GUI.

Current State & Challenges

Agents handle routine tasks well but struggle with highly dynamic interfaces, pixel-perfect text editing, or confusing layouts.

Real-world reliability, safety, and interpretability remain areas of active research and development.

2. Layered Agent Architecture

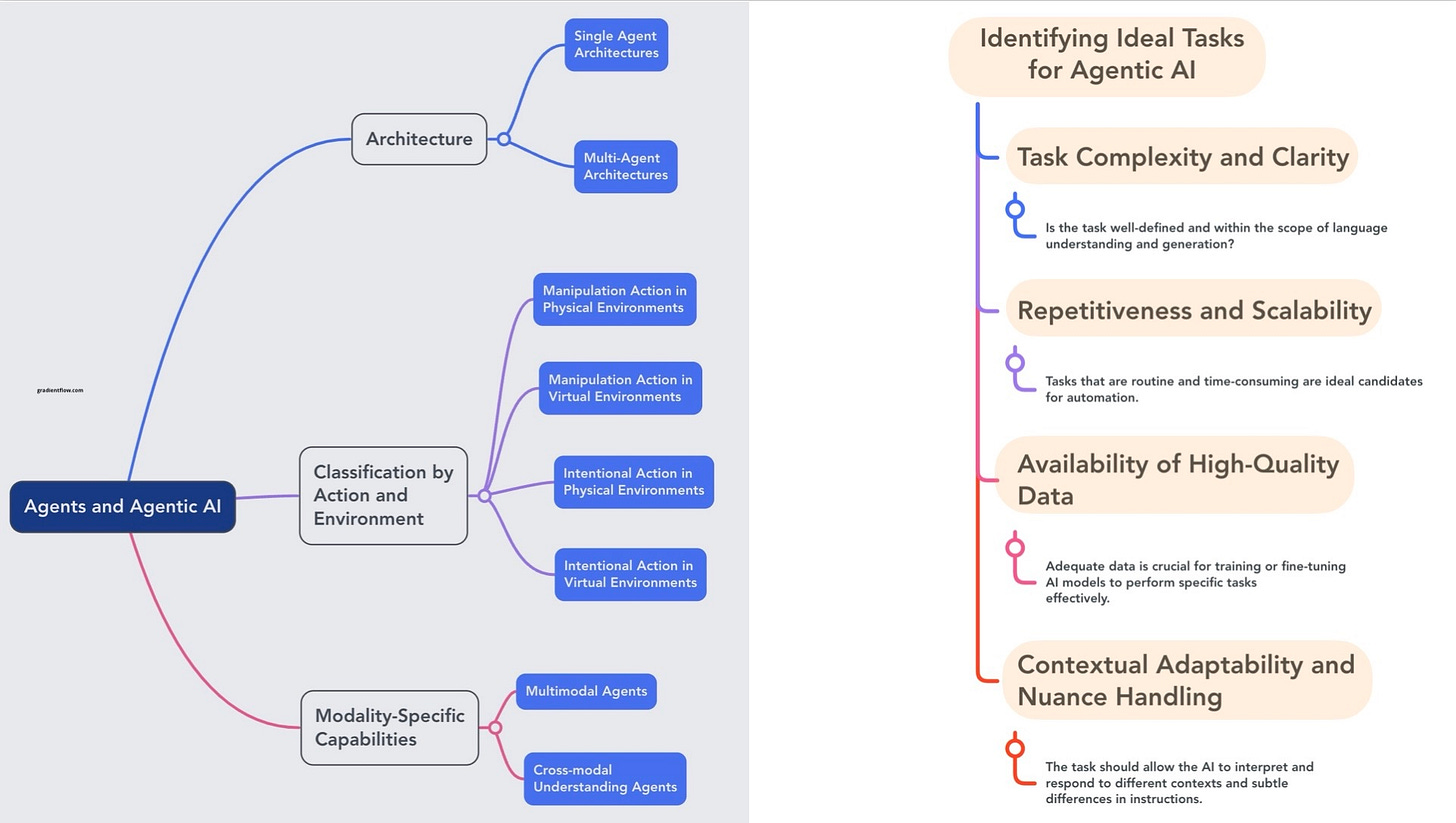

The AI agent stack is a layered architecture for building agents. Its layers are Vertical Agents (user applications), Agent Hosting & Serving (deployment), Observability (monitoring), Agent Frameworks (logic), Tools & Libraries (external actions), Memory (state), Model Serving (LLMs), and Storage (data). These layers work together to enable complex agent behavior.

Why It’s Important

Structured Development: Divides complex agent building into manageable components.

Scalability & Interoperability: Enables scaling to many agents and integrating best-of-breed components.

Reusability: Promotes the reuse of components across different agent applications.

Current State

Fragmented Ecosystem: Multiple frameworks with overlapping functionality, lacking a single standard.

Security & Deployment Challenges: Handling authentication, access control, and real-time monitoring at scale remains complex.

Rapid Evolution: Frameworks and tooling change quickly, making it challenging to keep up with the latest advancements.

3. Modular Agent Design

A common recommendation is to start with simple LLM-driven flows (one-off calls or minimal tool usage) and only add complexity (multi-step reasoning, advanced memory, etc.) as needed. This modular approach often includes carefully documented “agent-computer interfaces” (ACIs) and an iterative development cycle.
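As a rough illustration of a documented agent-computer interface, the sketch below registers a single tool together with a description and a usage boundary, and routes calls through one dispatcher. The names `ToolSpec`, `TOOLS`, `call_tool`, and the `word_count` tool are all assumptions made for this example; they do not come from any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ToolSpec:
    """A documented interface for one tool (hypothetical structure)."""
    name: str
    description: str     # what the tool does, written for the model
    usage_boundary: str  # when the tool should not be used
    run: Callable[[str], str]


def word_count(text: str) -> str:
    """Count whitespace-separated words and return the count as text."""
    return str(len(text.split()))


# Start with one tool; add more only when the use case requires it.
TOOLS: Dict[str, ToolSpec] = {
    "word_count": ToolSpec(
        name="word_count",
        description="Count whitespace-separated words in the input text.",
        usage_boundary="Not for counting characters or tokens.",
        run=word_count,
    )
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch to a registered tool, with a fallback for unknown names."""
    spec = TOOLS.get(name)
    if spec is None:
        # Fallback logic: report the problem instead of crashing the flow.
        return f"error: unknown tool '{name}'"
    return spec.run(argument)
```

Keeping the description and usage boundary next to the callable means the same record can be shown to the model and read by the team maintaining the tool.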

Why It’s Important

Prevents Over-Engineering: Complex orchestration can become unmanageable if the use case doesn’t truly require it.

Facilitates Debugging & Maintenance: Smaller, composable pieces let teams pinpoint failures quickly and adjust designs without unraveling the entire system.

Current State

Growing Adoption: Many teams are finding that a straightforward approach is more reliable, cost-effective, and easier to scale.

Next Steps: Refining best practices and formalizing design patterns (e.g., usage boundaries for each tool, fallback logic).

4. Agent Design Patterns: Planner-Actor-Validator and Tool Use

Common patterns for structuring agent logic include:

Planner-Actor-Validator: Breaks large tasks into steps, executes each step, and validates results before proceeding.

Tool-Use Workflows: Agents selectively invoke external APIs or functions (e.g., calculators, search engines) to improve correctness.
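The Planner-Actor-Validator pattern can be sketched in a few lines. This is a simplified illustration under stated assumptions: `plan`, `act`, and `validate` are hypothetical stand-ins for what would normally be model or tool calls, and the retry policy is one arbitrary choice among many.

```python
from typing import Callable, List


def plan(task: str) -> List[str]:
    """Planner: split a task into steps (stand-in for an LLM call)."""
    return [step.strip() for step in task.split(";") if step.strip()]


def act(step: str) -> str:
    """Actor: execute one step (stand-in for a tool or model call)."""
    return step.upper()


def validate(step: str, result: str) -> bool:
    """Validator: accept only a non-empty result that matches the step."""
    return bool(result) and result.lower() == step.lower()


def run_task(
    task: str,
    planner: Callable[[str], List[str]] = plan,
    actor: Callable[[str], str] = act,
    validator: Callable[[str, str], bool] = validate,
    max_retries: int = 1,
) -> List[str]:
    """Run each planned step, validating its result before moving on."""
    results: List[str] = []
    for step in planner(task):
        for _attempt in range(max_retries + 1):
            result = actor(step)
            if validator(step, result):
                results.append(result)
                break
        else:
            # Every attempt failed validation: stop rather than proceed.
            raise RuntimeError(f"step failed validation: {step!r}")
    return results
```

Because the three roles are passed in as plain functions, each one can be swapped or tested on its own, for example by replacing `actor` with a function that calls a calculator or search tool.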

Why It’s Important

Enhanced Reliability: Decomposing tasks clarifies each sub-step, reducing confusion and enabling better error handling.

Context-Specific Expertise: By integrating domain-specific tools, agents can perform specialized tasks more accurately (e.g., financial calculations, data analysis).

Current State

Growing Adoption: These patterns exist in popular frameworks (LangChain, Haystack, custom orchestrators).

Further Validation Needed: Real-world feedback is crucial to refine patterns and ensure they scale without overwhelming complexity.

5. Accountability and Safety Infrastructure

As AI agents become more autonomous and capable of performing complex tasks, often with high-level privileges, a robust infrastructure for accountability and safety is crucial. This infrastructure includes mechanisms for attribution, which involves identifying which agent performed a specific action, and controlled interaction, which ensures agents operate within their authorized scope through sandboxing and permissioning. It also requires response and remediation capabilities, such as rollbacks, throttling, and quarantines, to mitigate harmful or erroneous agent actions. Examples of this include agent IDs that tag each action to a specific persona, and real-time monitoring dashboards that trigger alerts for anomalous agent behavior.
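The sketch below shows one way attribution, permissioning, and quarantine could fit together: every requested action passes through a gateway that checks the agent's permissions and records an audit entry tagged with the agent ID. `ActionGateway`, `AuditEntry`, and the agent and action names are hypothetical, and a production system would also need durable storage, authentication, and rollback support, which are omitted here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass(frozen=True)
class AuditEntry:
    """One attributed record: which agent asked for what, and the outcome."""
    agent_id: str
    action: str
    allowed: bool


@dataclass
class ActionGateway:
    """Checks permissions and logs every request (hypothetical design)."""
    permissions: Dict[str, Set[str]]
    quarantined: Set[str] = field(default_factory=set)
    log: List[AuditEntry] = field(default_factory=list)

    def perform(self, agent_id: str, action: str) -> bool:
        """Return True if the action is authorized; always log the attempt."""
        allowed = (
            agent_id not in self.quarantined
            and action in self.permissions.get(agent_id, set())
        )
        self.log.append(AuditEntry(agent_id, action, allowed))
        return allowed

    def quarantine(self, agent_id: str) -> None:
        """Block all further actions from a misbehaving agent."""
        self.quarantined.add(agent_id)

    def actions_by(self, agent_id: str) -> List[AuditEntry]:
        """Attribution query: everything a given agent has attempted."""
        return [entry for entry in self.log if entry.agent_id == agent_id]
```

Denied attempts are logged alongside allowed ones, since a burst of denials is often the anomaly a monitoring dashboard would want to alert on.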

Why It’s Important

Trust and Compliance: Organizations need auditable logs and traceability to comply with regulations (especially in finance and healthcare).

Operational Safety: Preventing accidental or malicious actions by agents is paramount as tasks become more complex.

Current State

Early-Stage Solutions: Efforts involve agent identity bindings, standardized agent APIs, and real-time oversight.

Need for Standardization: Fragmentation leads to duplicated work and inconsistent safety measures across different platforms.

6. Real-World Evaluation and Control

Moving AI agents from controlled lab settings to real-world production environments exposes their behavior under unpredictable circumstances, highlighting the need for reliable deployment strategies. This requires robust observability and logging to track each decision and tool invocation, a combination of offline pre-deployment tests and real-time online monitoring, and the implementation of safety mechanisms such as rate limiting, kill switches, and constraint-based validators to ensure safe and effective operation.
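As an illustration of the safety mechanisms named above, the following sketch wraps a tool with a sliding-window rate limit, a kill switch, and a constraint-based validator. `GuardedTool` and its parameters are assumptions made for this example rather than part of any existing library; the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from collections import deque
from typing import Callable, Deque


class GuardedTool:
    """Wrap a tool with a kill switch, rate limit, and input validator."""

    def __init__(
        self,
        tool: Callable[[str], str],
        validator: Callable[[str], bool],
        max_calls: int,
        per_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.tool = tool
        self.validator = validator
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock
        self.killed = False
        self._calls: Deque[float] = deque()  # timestamps of accepted calls

    def kill(self) -> None:
        """Kill switch: refuse every call from now on."""
        self.killed = True

    def __call__(self, argument: str) -> str:
        if self.killed:
            raise RuntimeError("kill switch engaged")
        now = self.clock()
        # Drop timestamps that have fallen out of the sliding window.
        while self._calls and now - self._calls[0] >= self.per_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            raise RuntimeError("rate limit exceeded")
        if not self.validator(argument):
            raise ValueError("argument rejected by validator")
        self._calls.append(now)
        return self.tool(argument)
```

The checks run before the tool is invoked, so a rejected call has no side effects. Whether a rejected call should count against the rate limit is a design choice; in this sketch it does not.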

Why It’s Important

Practical Reliability: Benchmarks rarely capture the complexity of real user data, security constraints, and system integrations.

Continuous Improvement: Monitoring real-world performance (error rates, user satisfaction) informs iterative refinement.

Current State

Active Investment: Teams are implementing advanced logging, “human in the loop” approvals, and automated alarm systems.

Challenges: Balancing safety with user experience, especially under large workloads or latency constraints.

7. Challenges in AI Agent Adoption

When deploying AI agents in critical domains such as healthcare, finance, and enterprise workflows, teams face several significant hurdles. These include concerns about reliability and performance, as AI agents can hallucinate or make unexpected mistakes; safety and compliance issues, where high-stakes industries demand rigorous proof that agents will not harm customers or data; and knowledge gaps, where internal staff may lack the expertise to design, debug, and maintain complex agent pipelines.

Why It’s Important

Widespread Adoption Hangs in the Balance: Until these issues are resolved, many enterprises remain hesitant to integrate agents into production.

Technical & Non-Technical Solutions: Addressing these hurdles means balancing user training, robust frameworks, and systematic evaluations.

Current State

Gradual Adoption: Surveys show that only about half of AI-oriented companies have deployed agents in production.

Key Mitigation Strategies: Offline evaluation, thorough guardrails, and specialized open-source tools are becoming more common.

8. Transparency and Explainability

Given the opaque nature of LLM-based agent behavior, transparency and explainability are crucial, leading teams to experiment with several measures. These include detailed logs and replay capabilities to track each decision and piece of context used, selective exposure of chain-of-thought reasoning to allow developers or end-users to understand how an agent arrived at a conclusion, and clear tool documentation to ensure that the function and purpose of each tool invocation are readily apparent.
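A minimal sketch of logging with replay might look like the following. It records each tool invocation as one JSON line and can turn the serialized log back into a readable trace. `TraceLog` and its field names are hypothetical, and the sketch deliberately leaves out chain-of-thought content, redaction, and storage concerns.

```python
import json
from typing import Any, Dict, List


class TraceLog:
    """Append-only record of an agent's tool invocations (hypothetical)."""

    def __init__(self) -> None:
        self._events: List[Dict[str, Any]] = []

    def record(self, step: int, tool: str, tool_input: str, output: str) -> None:
        """Store one decision: which tool ran, with what input and output."""
        self._events.append(
            {"step": step, "tool": tool, "input": tool_input, "output": output}
        )

    def dumps(self) -> str:
        """Serialize the log as JSON Lines, one event per line."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._events)

    @staticmethod
    def replay(serialized: str) -> List[str]:
        """Rebuild a human-readable trace from a serialized log."""
        lines: List[str] = []
        for raw in serialized.splitlines():
            event = json.loads(raw)
            lines.append(
                f"step {event['step']}: {event['tool']}"
                f"({event['input']!r}) -> {event['output']!r}"
            )
        return lines
```

Because `replay` works from the serialized text rather than from in-memory state, a trace can be inspected after the agent process has exited.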

Why It’s Important

Trust Building: Users are more comfortable with AI systems whose reasoning can be inspected and questioned.

Regulatory & Ethical Considerations: Explainability is increasingly important for compliance (GDPR, potential AI regulation), and for ensuring AI does not discriminate or produce harmful results.

Current State

Partial Implementations: Some frameworks allow optional “chain-of-thought” logging, though privacy and efficiency concerns arise when storing these logs.

Ongoing Work: Techniques to provide interpretability without compromising performance or revealing proprietary data are a major research direction.

9. Skepticism and Societal Impact

While there is considerable excitement surrounding AI agents, there is also growing skepticism about overhyped claims and concern about potential negative impacts. Some observers argue that many so-called “AI agents” are simply rebranded workflow automation tools. There are also ongoing debates about job displacement, the potential for misuse in phishing, fraud, or content manipulation, and the ethical implications of deploying autonomous systems. Companies often promote advanced “agents” that cannot consistently handle complex, real-world scenarios, leading to a gap between hype and reality.

Why It’s Important

Acknowledging these limitations and concerns helps to keep expectations realistic, fosters responsible usage, and encourages teams to proactively address ethical implications.

Current State

Current sentiment is mixed, with practitioners remaining optimistic about productivity gains but cautious about overstating capabilities. There is a need to focus on demonstrating real-world, production-level success stories to reduce skepticism and build trust. Furthermore, developing ethical guidelines and effective guardrails is crucial to protect against misuse and ensure responsible development and deployment of AI agents.

10. Need for a Unified Framework

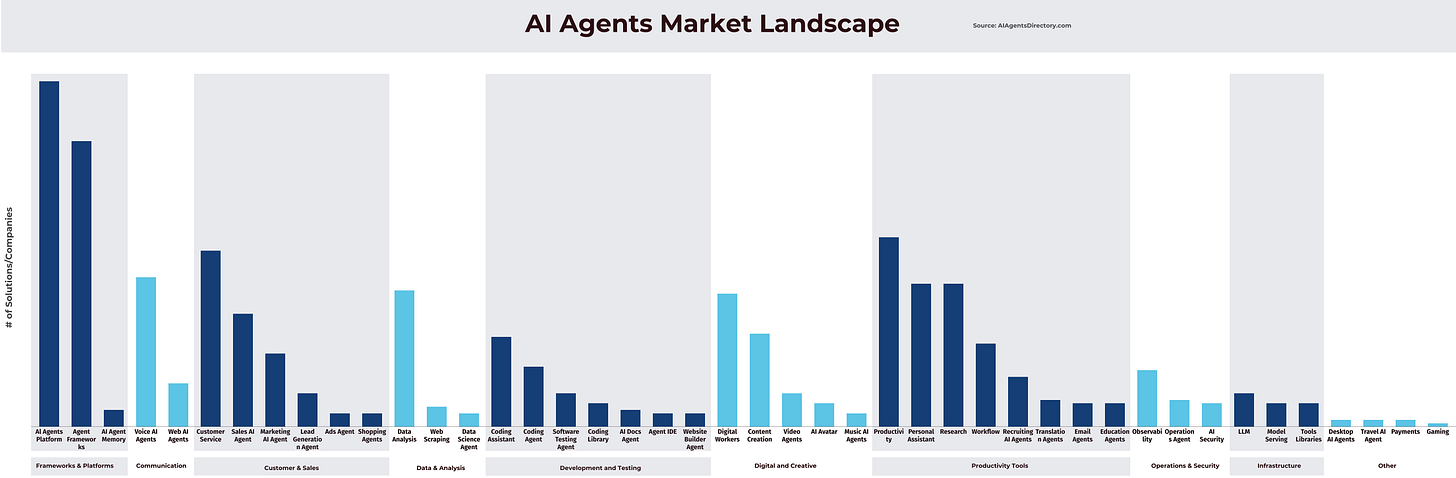

The AI agent field is fragmented: researchers, startups, and large vendors all employ different paradigms, definitions, and tooling. A unified framework would consolidate core concepts (e.g., tasks, memory, planning) and offer clearer evaluation guidelines.

Why It’s Important

Easier Comparisons: Practitioners need a common yardstick to gauge agent performance or features.

Less Reinvention: A standard framework or reference architecture cuts down on duplicated efforts, letting teams focus on unique improvements.

Current State

Growing Consensus on Components: Many groups now agree on the broad strokes of agent architecture (planning, memory, tool usage), but naming conventions and integration details vary widely.

Path Forward: Community-driven initiatives, open-source standards, and industry coalitions could unify the field.

Interested in diving deeper into the world of AI agents? Join us at the AI Agent Conference, a focused gathering in NYC this coming May 6-7. Organized by FirsthandVC and co-chaired by me, it is a unique opportunity to connect with leading experts and practitioners. Share your expertise and apply to speak HERE.

Foundation Models: Trends To Watch

Data Exchange Podcast

Unlocking Spreadsheet Intelligence with AI. Hjalmar Gislason of GRID explains how AI is revolutionizing spreadsheets, moving beyond basic data entry to intelligent analysis and automation. This episode explores the future of these ubiquitous tools, from natural language interfaces to AI-powered insights.

Why Legal Hurdles Are the Biggest Barrier to AI Adoption. Deploying AI at scale is fraught with challenges, from legal hurdles to the unique risks of generative models. Andrew Burt of Luminos AI explores the disconnect between tech and compliance teams and the need for new solutions to navigate this complex landscape.


Ben Lorica edits the Gradient Flow newsletter. He helps organize the AI Conference, the AI Agent Conference, the NLP Summit, Ray Summit, and the Data+AI Summit. He is the host of the Data Exchange podcast. You can follow him on LinkedIn, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.

Very helpful! I think there definitely needs to be more attention to numbers 9 and 10. I recently posted a piece about the national security implications of AI agents.

If you thought the robocall telemarketing and email scams of the past were bad, wait until malicious AI agents are unleashed to cross-reference illicit email registries with publicly available user information. We will soon enter an era of unprecedented phishing scams and completely novel cybersecurity threats.